
Deep learning (TensorFlow 2 version): the "three good students" score problem (1)

2022-06-26 09:45:00 【knighthood2001】

Blog home page: knighthood2001


Contents

Introduction to the "three good students" score problem

Building a neural network to solve the "three good students" score problem

Code for the neural network

Introduction to the "three good students" score problem

Consider the following problem. A school wants to select "three good" students. As the name suggests, a "three good" student is good in three respects: moral character, academic study, and sports. To make the selection quantitative, the school computes a total score from each student's moral education, intellectual education, and physical education scores, and then decides who is selected based on that total. Suppose the school's rule is: moral education counts for 60%, intellectual education for 30%, and physical education for 10%. Written as a formula:

$$\text{Total score} = 0.6 \times \text{moral education score} + 0.3 \times \text{intellectual education score} + 0.1 \times \text{physical education score}$$

As you can see, the total score is simply each of the three scores multiplied by a weight, with the products added together.

That is the background. The problem we actually want to solve is this: two parents each know their own child's three scores and total score, but the school has not told them the rule for computing the total. The parents guess that the total is obtained by multiplying the three scores by different weights and adding the results; the only unknowns are the weights themselves. They now want to use a neural network to estimate these three weights approximately. Suppose the first parent's child, A, has a moral education score of 90, an intellectual education score of 80, a physical education score of 70, and a total score of 85. Using w1, w2, and w3 for the weights applied to the moral, intellectual, and physical education scores respectively, we get:

$$90w_1 + 80w_2 + 70w_3 = 85$$

The other child, B, has a moral education score of 98, an intellectual education score of 95, a physical education score of 87, and a total score of 96, which gives:

$$98w_1 + 95w_2 + 87w_3 = 96$$

Viewed as a system of equations, these two formulas contain three unknowns. In principle, three independent equations would be enough to solve for them, but we only have data for two students, and therefore only two equations, so the weights cannot be determined uniquely by solving the system. This is where we can try a neural network instead.
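To see concretely why two equations are not enough, here is a small pure-Python sketch (the helper `total` and the cross-product construction are illustrative, not from the original). The school's rule of 0.6, 0.3, 0.1 from the introduction satisfies both equations, but so does any weight vector obtained by adding a multiple of the cross product of the two coefficient rows, because that direction is orthogonal to both rows and therefore changes neither weighted sum.

```python
import math

# Coefficient rows of the two equations (scores of child A and child B)
a = (90, 80, 70)   # child A, total score 85
b = (98, 95, 87)   # child B, total score 96

def total(scores, weights):
    """Weighted sum: scores[0]*w1 + scores[1]*w2 + scores[2]*w3."""
    return sum(s * w for s, w in zip(scores, weights))

# The school's rule from the introduction (60%, 30%, 10%)
school_w = (0.6, 0.3, 0.1)

# Cross product of the two rows: a direction orthogonal to both,
# so moving the weights along it changes neither weighted sum
cross = (a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0])
print(cross)  # (310, -970, 710)

# Shift the school's weights by different multiples of that direction
candidates = [tuple(w + t * c for w, c in zip(school_w, cross))
              for t in (0.0, 0.0001, -0.0001)]
checks = [math.isclose(total(a, w), 85) and math.isclose(total(b, w), 96)
          for w in candidates]
for w, ok in zip(candidates, checks):
    print([round(v, 4) for v in w], ok)
```

Every candidate satisfies both equations, so the data alone cannot single out one answer. It is worth keeping in mind that weights found later by training will fit the two children's data, but need not be the school's actual rule.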

Building a neural network to solve the "three good students" score problem

Theoretical knowledge

① A neural network model diagram generally contains one input layer, one or more hidden layers, and one output layer.

② The input layer describes the form of the input data. Each value (or field) of the input data is drawn as a square and is called an input node. Input nodes are usually named x; if there are several, they are named x1, x2, ..., xn.

③ The hidden layers are the most important part of the diagram, because they describe the structure of the model we design. There can be more than one hidden layer, and each layer contains one or more neurons, drawn as circles and called neuron nodes or hidden nodes (sometimes simply nodes). Like a neuron, each node receives data from the previous layer, performs some computation on it, and passes the result to the next layer. The computations performed at a node are called operations (op for short).

④ The output layer is usually the last layer of the model. It contains one or more output nodes, drawn as diamonds, which represent the final result of the whole network. Output nodes are usually named y, though not always.

⑤ By convention, each node's name is written at its lower right (sometimes at its lower left when space is tight), and the computation it performs is written at its upper left. For example, x1, x2, x3, n1, n2, n3, and y are node names, while "*w1", "*w2", and "*w3" denote node operations.

Now back to the model itself. It is a standard feedforward neural network, that is, a network in which signals always travel forward. The input layer has three nodes, x1, x2, and x3, representing the moral, intellectual, and physical education scores. Because the problem is simple, we use only one hidden layer, with three nodes n1, n2, and n3 that process the three input scores by multiplying them by the weights w1, w2, and w3 respectively. The output layer has a single node y, since we only need one total score; node y adds up the values output by n1, n2, and n3.

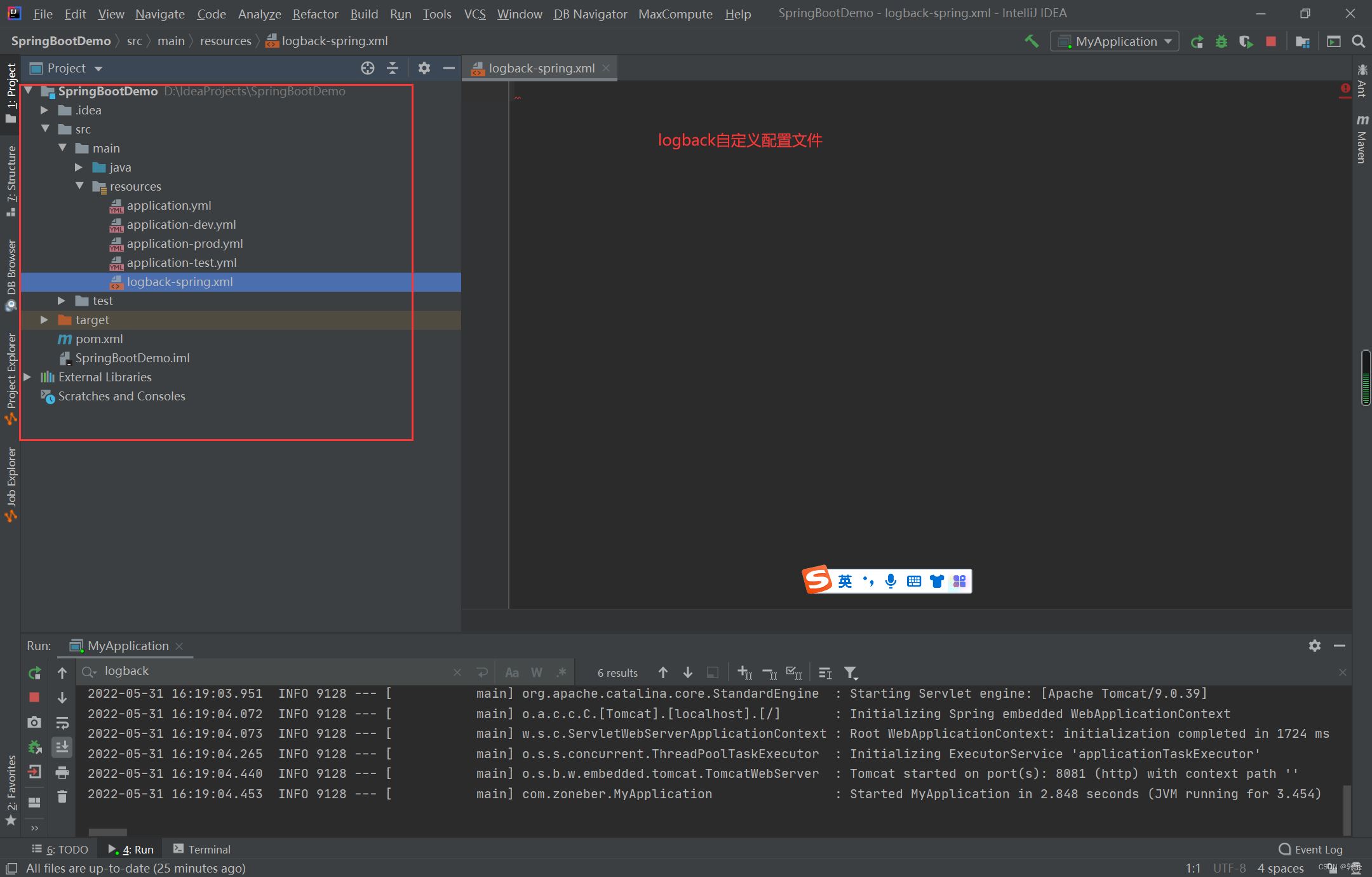
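Before turning to TensorFlow, the data flow of this model can be written as a tiny plain-Python function. This is only a sketch to make the structure explicit: `forward` is a hypothetical helper name, and the weights of 0.1 mirror the initial values used in the TensorFlow code below.

```python
def forward(x, w):
    """Forward pass of the three-input, one-hidden-layer model (illustrative helper).

    x: input scores (x1, x2, x3); w: weights (w1, w2, w3).
    Returns the hidden-node outputs [n1, n2, n3] and the output y.
    """
    # Hidden layer: node n_i multiplies its own input x_i by weight w_i
    n = [xi * wi for xi, wi in zip(x, w)]
    # Output layer: node y sums the outputs of n1, n2, n3
    y = sum(n)
    return n, y

# Child A's scores with every weight set to 0.1
hidden, y = forward((90, 80, 70), (0.1, 0.1, 0.1))
print(hidden, y)
```

Each hidden node only sees its own input, which is why the diagram has exactly one edge from each x node to its n node.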

Code for the neural network

```python
import tensorflow as tf

# placeholder is not compatible with eager execution (the TF2 default),
# so switch to TF1-style graph mode first
tf.compat.v1.disable_eager_execution()

# Define the three input nodes
x1 = tf.compat.v1.placeholder(dtype=tf.float32)
x2 = tf.compat.v1.placeholder(dtype=tf.float32)
x3 = tf.compat.v1.placeholder(dtype=tf.float32)

# Define the weights (variables), all initialized to 0.1
w1 = tf.Variable(0.1, dtype=tf.float32)
w2 = tf.Variable(0.1, dtype=tf.float32)
w3 = tf.Variable(0.1, dtype=tf.float32)

# Hidden layer
n1 = x1 * w1
n2 = x2 * w2
n3 = x3 * w3

# Output layer
y = n1 + n2 + n3

# Session: the object that manages running the neural network
sess = tf.compat.v1.Session()
init = tf.compat.v1.global_variables_initializer()

# Run the variable initializer in the session
sess.run(init)

# Run one forward computation of the network
result = sess.run([x1, x2, x3, w1, w2, w3, y], feed_dict={x1: 90, x2: 80, x3: 70})
print(result)
# [array(90., dtype=float32), array(80., dtype=float32), array(70., dtype=float32), 0.1, 0.1, 0.1, 24.0]
```

Code explanation

```python
tf.compat.v1.disable_eager_execution()
```

This line is needed because placeholder is not compatible with eager execution, which TensorFlow 2 enables by default.

```python
# Define the three input nodes
x1 = tf.compat.v1.placeholder(dtype=tf.float32)
x2 = tf.compat.v1.placeholder(dtype=tf.float32)
x3 = tf.compat.v1.placeholder(dtype=tf.float32)
```

These lines define the three input nodes as placeholders. As the name suggests, a placeholder stands for a value that is not known when the program is written and is only supplied when the program runs; while writing the program we just define the node so that it "reserves a seat".

dtype is short for "data type" and specifies the type of value the placeholder holds. tf.float32 is TensorFlow's 32-bit floating-point type, which uses 32 binary bits to represent a decimal number; 32-bit floats are generally precise enough for this kind of calculation.
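To get a feel for what "32-bit floating point" means in practice, here is a small sketch. It assumes NumPy is installed (NumPy is not used in the original code); tf.float32 corresponds to NumPy's np.float32, the IEEE 754 single-precision format.

```python
import numpy as np

# tf.float32 corresponds to NumPy's float32 (IEEE 754 single precision)
info = np.finfo(np.float32)
print(info.bits)       # 32 bits in total
print(info.precision)  # approximate number of reliable decimal digits
print(info.eps)        # gap between 1.0 and the next larger float32

# 0.1 has no exact binary representation; float32 stores the nearest value
stored = float(np.float32(0.1))
print(stored)          # 0.10000000149011612
print(stored - 0.1)    # tiny representation error, about 1.5e-09
```

An error of this size is far below anything that matters for scores like 90 or 85, which is why float32 is the usual choice for neural network weights.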

```python
# Define the weights (variables)
w1 = tf.Variable(0.1, dtype=tf.float32)
w2 = tf.Variable(0.1, dtype=tf.float32)
w3 = tf.Variable(0.1, dtype=tf.float32)
```

These lines define the weight for each score. In a neural network, parameters such as weights change frequently during training; TensorFlow calls them variables.

Defining w1, w2, and w3 is similar to defining the placeholders x1, x2, and x3, with two differences. First, we call tf.Variable instead of placeholder. Second, besides the dtype argument that specifies the value type, we also pass an initial value. The initial value is passed positionally rather than as a named argument because the first parameter of tf.Variable is the variable's initial value (initial_value). As you can see, we set the initial values of w1, w2, and w3 to 0.1.

```python
# Hidden layer
n1 = x1 * w1
n2 = x2 * w2
n3 = x3 * w3

# Output layer
y = n1 + n2 + n3
```

Here we define the hidden layer and the output layer.

This completes the definition of the neural network model. Next, let's see how to feed data into it and obtain the result of the computation.

```python
# Session: the object that manages running the neural network
sess = tf.compat.v1.Session()
init = tf.compat.v1.global_variables_initializer()

# Run the variable initializer in the session
sess.run(init)
```

First we define a variable sess holding a session object. A session can be thought of simply as the object that manages running the neural network; once we have one, we can actually run the network.

The first thing a session usually does when managing a neural network is initialize all variables, that is, give every variable its initial value.

To do this, we first set the variable init to the return value of global_variables_initializer(), an operation dedicated to initializing variables. We then call the session object's member function run() with init as the argument, which initializes all the variables in the model we defined. In other words, run(init) runs the initializer in the sess session. The initial value each variable receives is the first argument we passed when defining w1, w2, and w3, which we set to 0.1.
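For comparison, the same forward computation can be written in TensorFlow 2's default eager mode, where variables hold their initial values as soon as they are created, so no Session or global_variables_initializer is needed. This is a sketch of an alternative style, not what the original code does; the helper name `forward` is illustrative. It must run in a fresh Python process, because tf.compat.v1.disable_eager_execution() switches eager mode off for the whole process.

```python
import tensorflow as tf

# Eager mode (TF2 default): each variable holds 0.1 immediately after creation
w1 = tf.Variable(0.1, dtype=tf.float32)
w2 = tf.Variable(0.1, dtype=tf.float32)
w3 = tf.Variable(0.1, dtype=tf.float32)

def forward(x1, x2, x3):
    """Hidden layer n_i = x_i * w_i, output layer y = n1 + n2 + n3."""
    n1 = x1 * w1
    n2 = x2 * w2
    n3 = x3 * w3
    return n1 + n2 + n3

# Plain Python floats are converted to float32 tensors automatically,
# so ordinary arguments take the place of placeholders and feed_dict
y_a = forward(90.0, 80.0, 70.0)
y_b = forward(98.0, 95.0, 87.0)
print(float(y_a), float(y_b))
```

Function arguments play the role that placeholders and feed_dict play in graph mode, which is the main reason TensorFlow 2 code rarely needs tf.compat.v1.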

```python
# Run one forward computation of the network
result = sess.run([x1, x2, x3, w1, w2, w3, y], feed_dict={x1: 90, x2: 80, x3: 70})
print(result)
# [array(90., dtype=float32), array(80., dtype=float32), array(70., dtype=float32), 0.1, 0.1, 0.1, 24.0]

result = sess.run([x1, x2, x3, w1, w2, w3, y], feed_dict={x1: 98, x2: 95, x3: 87})
print(result)
# [array(98., dtype=float32), array(95., dtype=float32), array(87., dtype=float32), 0.1, 0.1, 0.1, 28.0]
```

Here we actually run the computation. The first argument of sess.run is a list of the items whose values we want to see; the other argument, feed_dict, is a dictionary that supplies the input data by mapping each placeholder to its value.

The results are as follows:

```
[array(90., dtype=float32), array(80., dtype=float32), array(70., dtype=float32), 0.1, 0.1, 0.1, 24.0]
[array(98., dtype=float32), array(95., dtype=float32), array(87., dtype=float32), 0.1, 0.1, 0.1, 28.0]
```

Checking by hand:

$$90 \times 0.1 + 80 \times 0.1 + 70 \times 0.1 = 24$$

$$98 \times 0.1 + 95 \times 0.1 + 87 \times 0.1 = 28$$

Both match, which shows that the neural network computes the result correctly.
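The check above confirms that the network computes what we defined, not that the weights are any good. A short plain-Python sketch comparing the outputs with the real totals from the problem (85 and 96) shows how far the initial weights of 0.1 are off; reducing this gap is the job of training. The dictionary layout is just an illustrative choice.

```python
# Scores and actual total scores of the two children from the problem
children = {
    "A": ((90, 80, 70), 85),
    "B": ((98, 95, 87), 96),
}
initial_w = (0.1, 0.1, 0.1)  # the initial values given to w1, w2, w3

gaps = {}
for name, (scores, actual) in children.items():
    # Same computation as the network: weighted sum of the three scores
    predicted = sum(s * w for s, w in zip(scores, initial_w))
    gaps[name] = actual - predicted
    print(name, round(predicted, 2), actual, round(gaps[name], 2))
```

Both predictions fall short of the real totals by more than 60 points, so the weights still have to be adjusted.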

The training of the neural network will be covered in the next part.

边栏推荐

- 进入页面输入框自动获取焦点

- PHP does not allow images to be uploaded together with data (no longer uploading images before uploading data)

- Shared by Merrill Lynch data technology expert team, smoking detection related practice based on Jetson nano

- "One week's data collection" - logic gate

- 软件测试---如何选择合适的正交表

- Badge series 8: generate a personalized Badge

- [open5gs] open5gs installation configuration

- MySQL单表500万条数据增、删、改、查速度测试

- 全面解读!Golang中泛型的使用

- Introduction to QPM

猜你喜欢

Edge computing is the sinking and extension of cloud computing capabilities to the edge and user sides

logback

VI summary of common commands

首期Techo Day腾讯技术开放日,628等你

How does flutter transfer parameters to the next page when switching pages?

Catalogue gradué de revues scientifiques et technologiques de haute qualité dans le domaine de l'informatique

Summary of common commands of vim

Regular expression learning

Optimization of power assisted performance of QPM suspended window

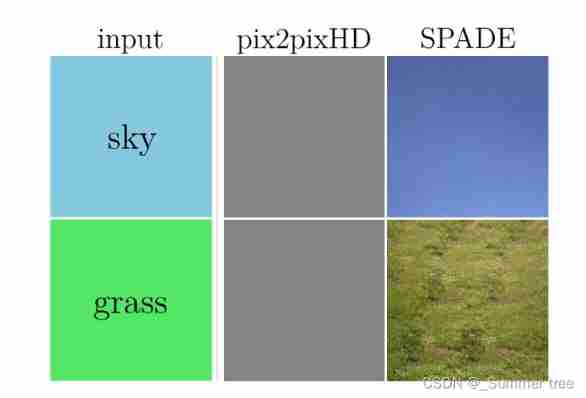

【CVPR 2019】Semantic Image Synthesis with Spatially-Adaptive Normalization(SPADE)

随机推荐

工企专利匹配数据(数十万数据量)1998-2014年

集合对象复制

Install new version cmake & swig & tinyspline

Jz2440 - - - utiliser le programme de gravure uboot

LeetCode 剑指 Offer II 091.粉刷房子 - 原地修改

Js--- get the data with the same key value in the object array to get a new array

异常记录-23

首期Techo Day腾讯技术开放日,628等你

jz2440---使用uboot燒錄程序

使用递归或while循环获取父/子层级结构的名称

2021-11-12 vrep视觉传感器配置

Common circuit design

【AAAI 2021】Few-Shot One-Class Classification via Meta-Learning 【FSOCC via Meta-learning】

halcon 光度立体

Halcon photometric stereoscopic

3 big questions! Redis cache exceptions and handling scheme summary

Board end power hardware debugging bug

"One week to solve the model electricity" - negative feedback

Leetcode connected to rainwater series 42 (one dimension) 407 (2D)

【CVPR 2021】DatasetGAN: Efficient Labeled Data Factory with Minimal Human Effort