Hands-on deep learning: a brief introduction to softmax regression

2022-06-12 08:13:00 【Orange acridine 21】

1. The difference between regression and classification

Regression estimates a continuous value, for example predicting the price of a house.

Classification predicts a discrete category, for example predicting whether a picture shows a cat or a dog.

Example 1: MNIST (handwritten digit recognition), 10 classes

Example 2: ImageNet (classification of natural objects), 1000 classes

2. Typical classification problems on Kaggle

Example 1: classify microscope images of human proteins into 28 classes

Example 2: classify malware into 9 categories

Example 3: classify toxic Wikipedia comments into 7 classes

3. From regression to multiclass classification

Regression: a single continuous output; its natural range is the real numbers R; the loss is the difference between the prediction and the true value.

Classification: usually multiple outputs; the i-th output is the predicted confidence that the input belongs to class i.

4. From regression to multiclass classification: mean squared loss

Suppose there are n classes. Encode the class as a one-hot vector of length n, with elements y1 to yn: if class i is the true class, then yi equals 1 and all other elements equal 0 (exactly one position is set).

The model can then be trained with the mean squared loss, and the class with the largest output is taken as the prediction.
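The encoding and prediction rule above can be sketched in plain Python. This is only an illustration: the function names `one_hot`, `squared_loss`, and `predict`, the 0-based class indices, and the example outputs are assumptions, not part of the original notes.

```python
def one_hot(label, num_classes):
    """Return a list of length num_classes with 1.0 at index `label`, 0.0 elsewhere."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def squared_loss(y, outputs):
    """Mean squared error between a one-hot target and the raw outputs."""
    return sum((o - t) ** 2 for o, t in zip(outputs, y)) / len(y)


def predict(outputs):
    """Index of the largest output, used as the predicted class."""
    return max(range(len(outputs)), key=lambda i: outputs[i])


y = one_hot(2, 4)                 # class 2 out of 4 classes (0-based)
outputs = [0.1, 0.2, 0.9, -0.3]   # hypothetical model outputs
print(y, predict(outputs), squared_loss(y, outputs))
```

Here the prediction depends only on which output is largest, not on the scale of the outputs.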

5. From regression to multiclass classification: from uncalibrated scores to probabilities

Encode the class as a one-hot vector and take the class with the largest output as the prediction.

The confidence for the correct class, Oy, needs to be much larger than the confidence Oi of every incorrect class (a large margin).

We would like the output to be a probability distribution (non-negative, summing to 1). The output is currently a vector of scores O1 to On. We introduce a new operator called softmax: applying softmax to O gives y_hat, a vector of length n whose elements are all non-negative and sum to 1.

The i-th element of y_hat is the exponential of the i-th element of O (the benefit of the exponential is that, whatever the input value, the result is never negative) divided by the sum of the exponentials of all Ok. That is, y_hat_i = exp(Oi) / sum over k of exp(Ok), so y_hat is a probability distribution.
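A minimal sketch of the softmax operator described above, in plain Python. Subtracting the maximum before exponentiating is a common numerical-stability trick that is not mentioned in the original notes; it does not change the result mathematically. The example scores are hypothetical.

```python
import math


def softmax(outputs):
    """Map raw outputs O to y_hat with y_hat_i = exp(O_i) / sum_k exp(O_k)."""
    # Shifting by the maximum leaves the ratios unchanged and avoids
    # overflow in exp() for large outputs (an added assumption, see above).
    m = max(outputs)
    exps = [math.exp(o - m) for o in outputs]
    total = sum(exps)
    return [e / total for e in exps]


y_hat = softmax([1.0, 2.0, 3.0])  # hypothetical scores
print(y_hat, sum(y_hat))
```

Every element of `y_hat` is positive, the elements sum to 1 up to floating-point rounding, and the ordering of the scores is preserved.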

In the end there are two probability distributions, the true one and the predicted one, and the difference between them is used as the loss.

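6. Softmax with cross-entropy loss

Cross-entropy is commonly used to measure the difference between two probability distributions. Assuming p and q are discrete probability distributions:

$$H(p, q) = -\sum_i p_i \log q_i$$

Taking it as the loss between the one-hot label $y$ and the prediction $\hat{y}$ (written y_hat above):

$$l(y, \hat{y}) = -\sum_i y_i \log \hat{y}_i = -\log \hat{y}_y$$

The gradient with respect to the output $O_i$ is the difference between the predicted probability and the true probability:

$$\partial_{O_i}\, l(y, \hat{y}) = \mathrm{softmax}(O)_i - y_i$$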
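As a rough check of the statement about the gradient, the sketch below computes the cross-entropy loss on softmax outputs and compares the gradient "predicted probability minus true probability" with a numerical estimate. The function names, the example values, and the finite-difference check are illustrative assumptions rather than part of the original notes.

```python
import math


def softmax(outputs):
    """y_hat_i = exp(O_i) / sum_k exp(O_k), shifted by max(O) for stability."""
    m = max(outputs)
    exps = [math.exp(o - m) for o in outputs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(p, q):
    """H(p, q) = -sum_i p_i * log(q_i); terms with p_i == 0 contribute nothing."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def loss(outputs, y):
    """Cross-entropy between the one-hot label y and softmax(outputs)."""
    return cross_entropy(y, softmax(outputs))


outputs = [1.0, 2.0, 3.0]  # hypothetical raw outputs O
y = [0.0, 0.0, 1.0]        # one-hot label: true class is index 2

# Gradient as stated in the text: softmax(O)_i - y_i
analytic = [p - t for p, t in zip(softmax(outputs), y)]

# Numerical gradient by central finite differences, for comparison
eps = 1e-6
numeric = []
for i in range(len(outputs)):
    up = list(outputs)
    down = list(outputs)
    up[i] += eps
    down[i] -= eps
    numeric.append((loss(up, y) - loss(down, y)) / (2 * eps))

print(loss(outputs, y))
print(analytic)
print(numeric)
```

With a one-hot label the loss reduces to the negative log of the probability assigned to the true class, and the two gradient vectors should agree to within the finite-difference error.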

7. Summary

- Softmax regression is a multiclass classification model

- The softmax operator gives a prediction confidence for each class

- Cross-entropy measures the difference between the prediction and the label

边栏推荐

- (P15-P16)对模板右尖括号的优化、函数模板的默认模板参数

- A brief summary of C language printf output integer formatter

- Leetcode notes: biweekly contest 69

- C # hide the keyboard input on the console (the input content is not displayed on the window)

- Py&GO编程技巧篇:逻辑控制避免if else

- Leetcode notes: Weekly contest 280

- (P21-P24)统一的数据初始化方式:列表初始化、使用初始化列表初始化非聚合类型的对象、initializer_lisy模板类的使用

- 2.1 linked list - remove linked list elements (leetcode 203)

- Leetcode notes: biweekly contest 70

- Mathematical knowledge - derivation - Basic derivation knowledge

猜你喜欢

Detailed explanation of Google open source sfmlearner paper combining in-depth learning slam -unsupervised learning of depth and ego motion from video

Model Trick | CVPR 2022 Oral - Stochastic Backpropagation A Memory Efficient Strategy

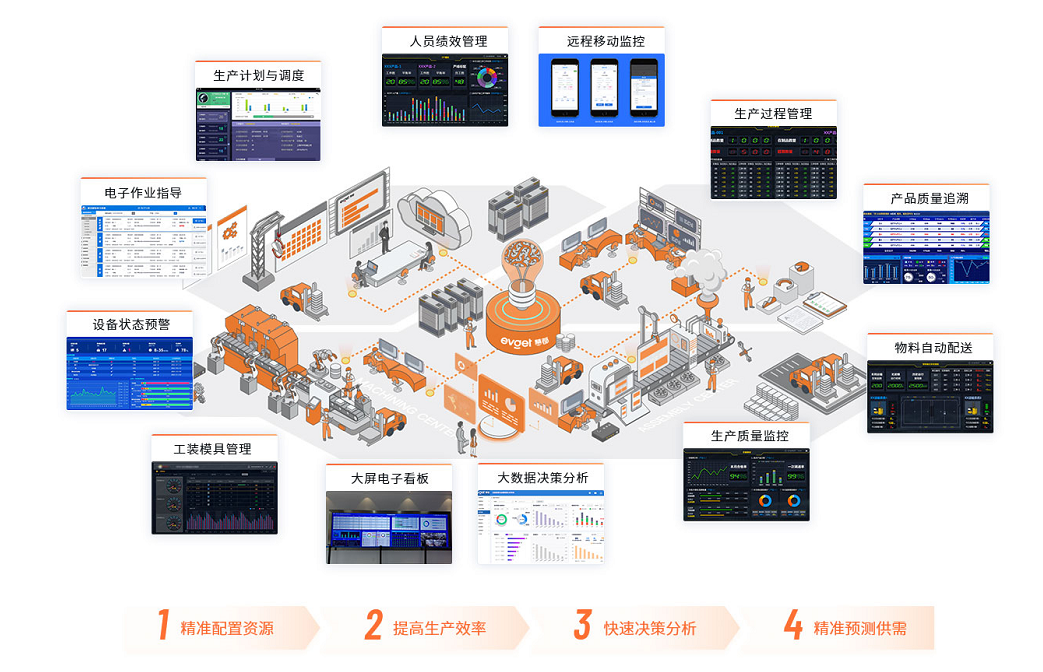

建立MES系统,需要注意什么?能给企业带来什么好处?

MATLAB image processing -- image transformation correction second-order fitting

FPGA implementation of right and left flipping of 720p image

Vision Transformer | Arxiv 2205 - LiTv2: Fast Vision Transformers with HiLo Attention

Figure neural network makes Google maps more intelligent

Three data exchange modes: line exchange, message exchange and message packet exchange

企业为什么要实施MES?具体操作流程有哪些?

ctfshow web4

随机推荐

MYSQL中的触发器

Vision Transformer | Arxiv 2205 - LiTv2: Fast Vision Transformers with HiLo Attention

从AC5到AC6转型之路(1)——补救和准备

Pytorch profiler with tensorboard.

Summary of structured slam ideas and research process

ERP的生产管理与MES管理系统有什么差别?

Record the treading pit of grain Mall (I)

目前MES应用很多,为什么APS排程系统很少,原因何在?

Procedure execution failed 1449 exception

2.2 linked list - Design linked list (leetcode 707)

Explanation and explanation on the situation that the volume GPU util (GPU utilization) is very low and the memory ueage (memory occupation) is very high during the training of pytoch

(P14)overrid关键字的使用

Literature reading: raise a child in large language model: rewards effective and generalizable fine tuning

Prediction of COVID-19 by RNN network

Vision Transformer | CVPR 2022 - Vision Transformer with Deformable Attention

(P36-P39)右值和右值引用、右值引用的作用以及使用、未定引用类型的推导、右值引用的传递

802.11 protocol: wireless LAN protocol

Three data exchange modes: line exchange, message exchange and message packet exchange

模型压缩 | TIP 2022 - 蒸馏位置自适应:Spot-adaptive Knowledge Distillation

(P40-P41)move资源的转移、forward完美转发