TensorFlow2 study notes: 6. Overfitting and underfitting, and their mitigation solutions

2022-08-04 06:05:00 【Live up to [email protected]】

1. What are overfitting and underfitting

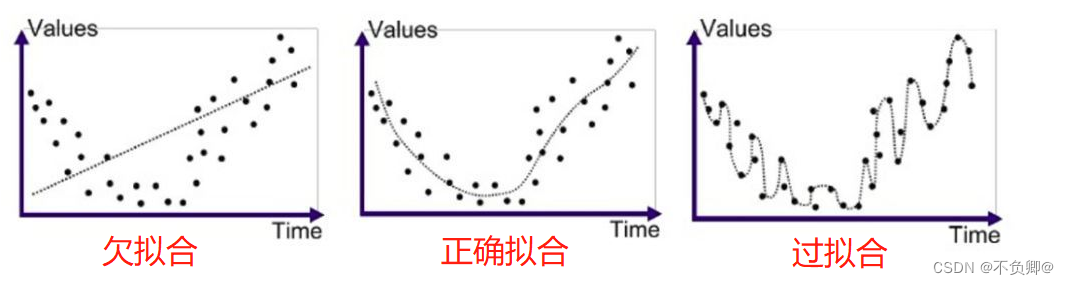

Two of the most common problems you run into when building a model, in both machine learning and deep learning, are overfitting and underfitting.

Overfitting

Definition: Overfitting means that the model fits the training data too closely. In terms of evaluation metrics, the model performs very well on the training set but poorly on the test set and on new data. Put simply, the model learns the training data so thoroughly that it also learns the noise in it, so later it cannot correctly recognize (for example, classify) data it has not seen before: its generalization ability is poor.

Underfitting

Definition: Underfitting means that the model performs poorly in both training and prediction. In terms of evaluation metrics, the model does badly on both the training set and the test set. The model has not captured the features of the data and therefore cannot fit it well.

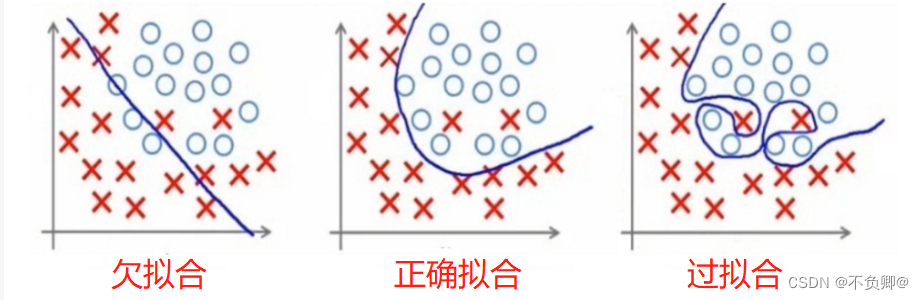

Intuitively, the three fit states (underfitting, a good fit, and overfitting) look like this:

Three Fit States in Regression Algorithms

Three Fit States in Classification Algorithms
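To make the train/test gap concrete, here is a minimal pure-Python sketch (no TensorFlow needed). It assumes synthetic data drawn from a made-up ground truth y = 2x + 1 plus Gaussian noise, and uses three illustrative stand-in "models" that are not from the original notes: a constant predictor (too simple, so it underfits), a least-squares line (the same capacity as the true model), and a 1-nearest-neighbour lookup that memorizes every training label, noise included (so it overfits).

```python
import random


def make_data(n, rng, noise=1.0):
    """Sample n points from the assumed ground truth y = 2x + 1 plus Gaussian noise."""
    xs = [rng.uniform(0.0, 10.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def mse(model, xs, ys):
    """Mean squared error of a callable model on (xs, ys)."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_constant(xs, ys):
    """Underfitting: ignores x and always predicts the mean training label."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def fit_line(xs, ys):
    """Good fit: ordinary least-squares line, the same capacity as the true model."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


def fit_memorizer(xs, ys):
    """Overfitting: returns the label of the nearest training point (1-nearest neighbour),
    so it reproduces every training label exactly, noise included."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


rng = random.Random(0)
train_x, train_y = make_data(200, rng)
test_x, test_y = make_data(1000, rng)

results = {}
for name, fit in [("underfit", fit_constant), ("good fit", fit_line), ("overfit", fit_memorizer)]:
    model = fit(train_x, train_y)
    results[name] = (mse(model, train_x, train_y), mse(model, test_x, test_y))
    print(f"{name:9s} train MSE = {results[name][0]:7.3f}   test MSE = {results[name][1]:7.3f}")
```

Expect the constant model to do badly on both sets, and the memorizer to score exactly zero on the training set yet worse than the line on the test set, which is the signature of overfitting described above.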

2. Ways to mitigate overfitting

- Clean the data

- Enlarge the training set

- Use regularization

- Increase the regularization parameter
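The two regularization remedies can be seen in a small experiment. The plain-Python sketch below assumes a synthetic setup that is not from the notes: 10 noisy samples of sin(πx), a degree-9 polynomial model (flexible enough to pass through all 10 points), and L2 regularization in its closed form, ridge regression, which solves (XᵀX + λI)w = Xᵀy. For simplicity the bias weight is penalized along with the others. As λ grows, the weights shrink and the training error rises, i.e. the model is no longer free to chase the noise.

```python
import math
import random


def solve(a, b):
    """Solve the square system a @ w = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def poly_row(x, degree):
    """Polynomial features [1, x, x^2, ..., x^degree]."""
    return [x ** k for k in range(degree + 1)]


def ridge_fit(xs, ys, degree, lam):
    """L2-regularized least squares: solve (X^T X + lam * I) w = X^T y."""
    rows = [poly_row(x, degree) for x in xs]
    p = degree + 1
    xtx = [[sum(r[i] * r[j] for r in rows) + (lam if i == j else 0.0) for j in range(p)]
           for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(p)]
    return solve(xtx, xty)


def predict(w, x):
    """Evaluate the polynomial with coefficients w at x."""
    return sum(wk * x ** k for k, wk in enumerate(w))


rng = random.Random(1)
train_x = [-1.0 + 2.0 * i / 9 for i in range(10)]  # 10 evenly spaced points in [-1, 1]
train_y = [math.sin(math.pi * x) + rng.gauss(0.0, 0.2) for x in train_x]

lambdas = [1e-4, 1e-2, 1.0, 100.0]
norms, train_errors = [], []
for lam in lambdas:
    w = ridge_fit(train_x, train_y, degree=9, lam=lam)
    norms.append(math.sqrt(sum(wk * wk for wk in w)))
    train_errors.append(sum((predict(w, x) - y) ** 2 for x, y in zip(train_x, train_y)) / len(train_x))
    print(f"lambda={lam:g}: ||w|| = {norms[-1]:.3f}, train MSE = {train_errors[-1]:.4f}")
```

Training error alone can only get worse as λ grows; the hope is that error on held-out data improves up to some λ, which in practice is chosen with a validation set. In TensorFlow 2 the same penalty is usually attached per layer, for example `tf.keras.layers.Dense(..., kernel_regularizer=tf.keras.regularizers.l2(0.01))`.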

3. Ways to mitigate underfitting

- Add more input features

- Increase the number of network parameters

- Reduce the regularization parameter
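A standard remedy for underfitting is to give the model more capacity, for example by adding input features. This hypothetical plain-Python sketch fits noisy samples of an assumed target y = x² by least squares, first with only the features [1, x] (a straight line, which cannot bend and therefore underfits), and then with the extra feature x². Because the second model contains the first, its training error can only go down.

```python
import random


def solve(a, b):
    """Solve the square system a @ w = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def least_squares(rows, ys):
    """Ordinary least squares via the normal equations (X^T X) w = X^T y."""
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(p)]
    return solve(xtx, xty)


def train_mse(rows, ys, w):
    """Mean squared error of the linear model w on its own training rows."""
    preds = [sum(wi * fi for wi, fi in zip(w, r)) for r in rows]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


rng = random.Random(0)
xs = [rng.uniform(-3.0, 3.0) for _ in range(200)]
ys = [x * x + rng.gauss(0.0, 0.5) for x in xs]

feature_sets = {
    "[1, x]": lambda x: [1.0, x],              # a straight line: too simple for a parabola
    "[1, x, x^2]": lambda x: [1.0, x, x * x],  # the same model plus one extra input feature
}
errors = {}
for name, features in feature_sets.items():
    rows = [features(x) for x in xs]
    w = least_squares(rows, ys)
    errors[name] = train_mse(rows, ys, w)
    print(f"features {name:12s} train MSE = {errors[name]:.3f}")
```

In a neural network the analogous moves are feeding in more informative inputs or adding units and layers, and lowering λ if regularization is too strong.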

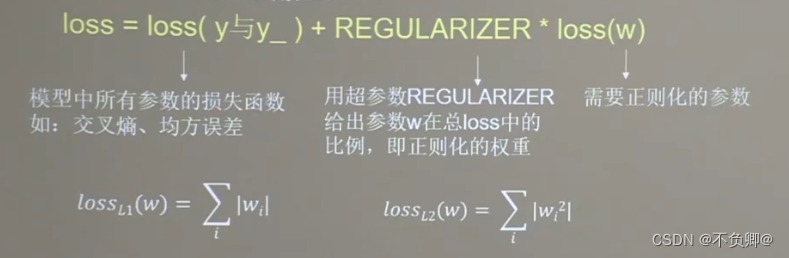

4. Regularization and how to use it

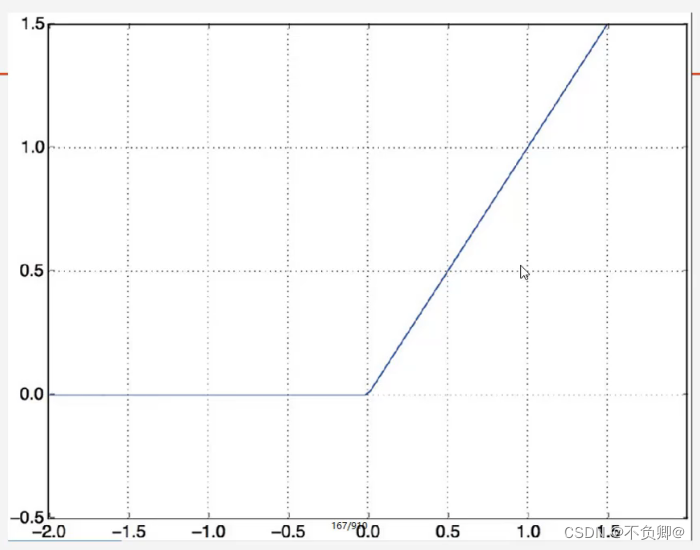

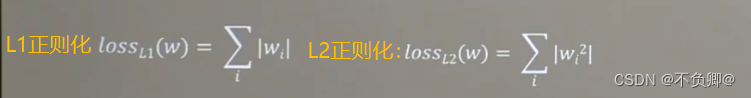
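Written as a formula (in a common notation, which may differ from the original figures), regularization adds a weighted penalty on the weights w to the data loss. Here y is the label, ŷ the prediction, and λ ≥ 0 the regularization parameter: λ = 0 turns regularization off, and a larger λ penalizes large weights more strongly.

```latex
\mathrm{loss} = \mathrm{loss}(y, \hat{y}) + \lambda \, \mathrm{loss}(w),
\qquad
\mathrm{loss}_{L1}(w) = \sum_i \lvert w_i \rvert,
\qquad
\mathrm{loss}_{L2}(w) = \sum_i w_i^{2}
```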

- L1 regularization: sum the absolute values of all parameters w. Many parameters are likely to become exactly 0, so this method reduces model complexity by making the parameters sparse (that is, by effectively reducing the number of parameters).

- L2 regularization: sum the squares of all parameters w. This pushes parameters close to 0 without making them exactly 0, so this method reduces model complexity by shrinking parameter values, which reduces overfitting caused by noise in the dataset.
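Why L1 produces exact zeros while L2 only shrinks weights can be seen from a single proximal step, a standard way of applying each penalty that this sketch swaps in for illustration (TensorFlow itself simply adds the penalty to the loss, e.g. `tf.keras.regularizers.l1` and `tf.keras.regularizers.l2` add λ·Σ|w| and λ·Σw² respectively). For the penalty λΣ|wᵢ| the step is soft-thresholding, which sets every weight with |wᵢ| ≤ λ to exactly 0; for λΣwᵢ² it divides every weight by (1 + 2λ), so no nonzero weight reaches 0. The weights and λ below are made-up values.

```python
def l1_penalty(weights):
    """Sum of absolute values: the L1 term."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Sum of squares: the L2 term."""
    return sum(w * w for w in weights)


def prox_l1(weights, lam):
    """Soft-thresholding, the proximal step for lam * sum(|w|).
    Weights with |w| <= lam become exactly 0; the rest move toward 0 by lam."""
    return [0.0 if abs(w) <= lam else (w - lam if w > 0 else w + lam) for w in weights]


def prox_l2(weights, lam):
    """Proximal step for lam * sum(w**2): uniform shrinkage by 1 / (1 + 2 * lam).
    Nonzero weights get smaller but never become exactly 0."""
    return [w / (1.0 + 2.0 * lam) for w in weights]


weights = [0.8, -0.05, 0.3, 0.02, -0.6]  # hypothetical weights
lam = 0.1                                # hypothetical regularization parameter

print("L1 penalty:", l1_penalty(weights))
print("L2 penalty:", l2_penalty(weights))
after_l1 = prox_l1(weights, lam)
after_l2 = prox_l2(weights, lam)
print("after L1 step:", [round(w, 4) for w in after_l1])
print("after L2 step:", [round(w, 4) for w in after_l2])
print("exact zeros:", after_l1.count(0.0), "(L1) vs", after_l2.count(0.0), "(L2)")
```

The two small weights (-0.05 and 0.02) are zeroed by the L1 step but only scaled down by the L2 step, matching the sparsity versus shrinkage behaviour described above.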

Copyright notice

This article was written by [Live up to [email protected]]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/216/202208040525327629.html
