MLPerf Training v2.0 results released: with the same GPU configuration, Baidu PaddlePaddle's performance ranks first in the world

2022-07-05 07:49:00 【PaddlePaddle】

This article was first published on the PaddlePaddle official account. See the link:

MLPerf Training v2.0 Results Released: Under the Same GPU Configuration, Baidu PaddlePaddle's Performance Ranks First in the World

In the latest MLPerf Training v2.0 results, published on June 30, Baidu's BERT Large GPU training result, submitted using the PaddlePaddle framework and the Baige computing platform of Baidu AI Cloud, ranked first among all submissions with the same GPU configuration. It surpassed the highly customized and optimized NGC PyTorch framework, which had long held the lead on the list, and demonstrated the performance advantages of the PaddlePaddle framework to the world.

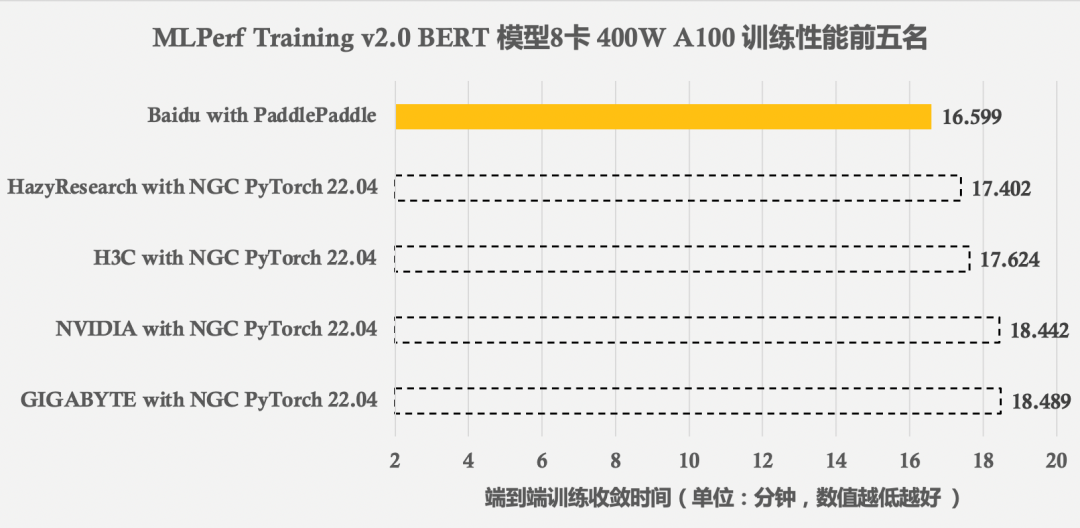

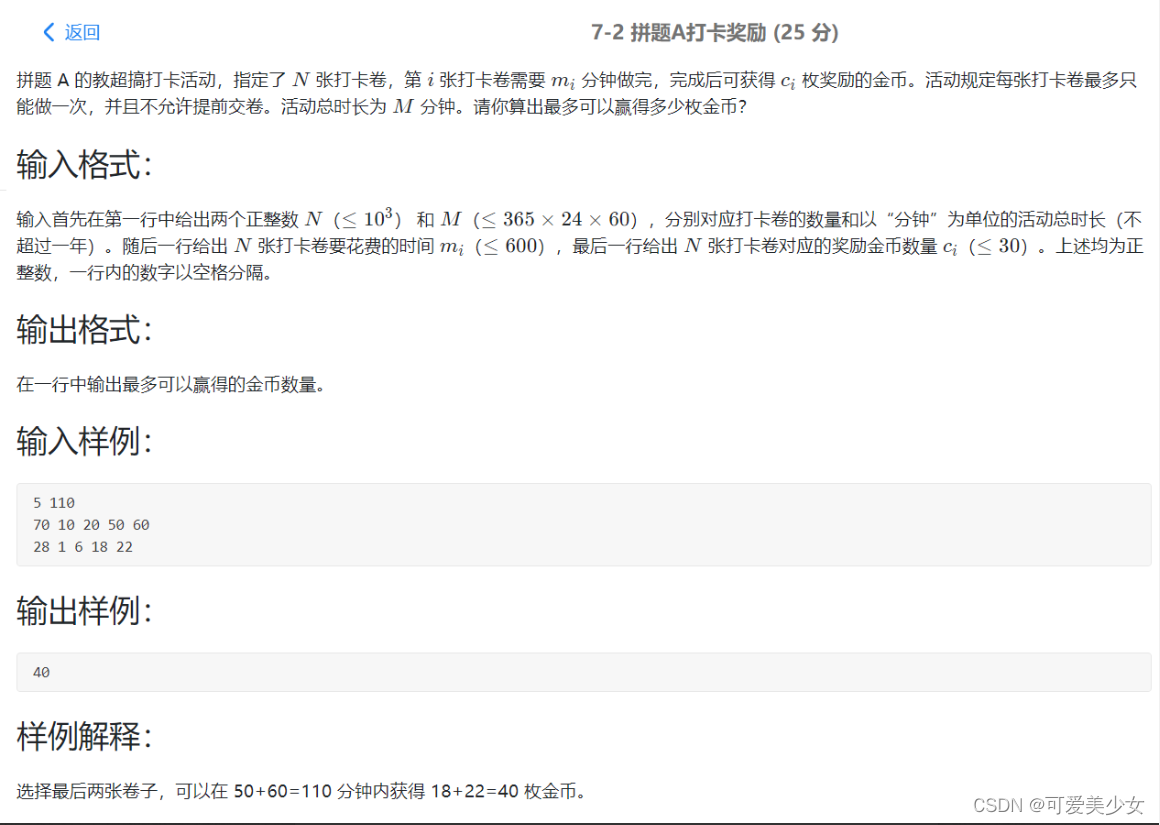

Figure 1: Top five BERT training performance results in MLPerf Training v2.0

Figure 1 shows the top five MLPerf Training v2.0 BERT training results on 8 NVIDIA A100 GPUs (400 W power, 80 GB memory). Baidu's PaddlePaddle submission is 5% to 11% faster than the other submissions.

The Technology Behind the "World First"

PaddlePaddle's world-leading BERT training performance on 8 GPUs comes from the framework's strong baseline performance and leading distributed technology, together with deep co-optimization between PaddlePaddle and NVIDIA GPUs.

In a deep learning training job, everything affects final training performance: from data loading to model computation, from low-level operators to high-level distributed strategies, and from load balancing across devices to whole-pipeline scheduling. Building on its architecture design and long-term practice, PaddlePaddle has carried out systematic optimization for high-performance training, mainly in the following areas:

Load balancing of data reading and model training

To address the load imbalance that often arises in distributed training, model training and data loading/preprocessing are assigned to different devices, so that heterogeneous compute is fully used and data I/O is balanced against computation.
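The idea of keeping data loading off the training path can be sketched as a producer-consumer queue. This is a minimal plain-Python illustration, assuming a background thread stands in for the separate device that reads and preprocesses data; `load_and_preprocess`, `prefetching_loader`, and `train` are hypothetical names, not PaddlePaddle APIs.

```python
import queue
import threading


def load_and_preprocess(i):
    # Hypothetical stand-in for reading and preprocessing batch i (CPU-side work).
    return [i * 10 + j for j in range(4)]


def prefetching_loader(num_batches, depth=2):
    """Yield batches produced by a background thread, keeping up to `depth` ready."""
    q = queue.Queue(maxsize=depth)
    sentinel = object()

    def producer():
        for i in range(num_batches):
            q.put(load_and_preprocess(i))  # blocks while `depth` batches are waiting
        q.put(sentinel)  # signals that no more batches will come

    t = threading.Thread(target=producer, daemon=True)
    t.start()
    while True:
        batch = q.get()
        if batch is sentinel:
            break
        yield batch
    t.join()


def train(num_batches):
    total = 0
    for batch in prefetching_loader(num_batches):
        total += sum(batch)  # hypothetical stand-in for the forward/backward pass
    return total
```

While the consumer processes one batch, the producer is already preparing the next, so neither side waits on the other as long as their speeds are roughly balanced.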

Accelerating models with variable-length sequence inputs

Models with variable-length sequence inputs usually pad every sequence to a common length, which wastes computation on padding tokens. PaddlePaddle provides efficient support for variable-length inputs and the corresponding model structures, so that GPU compute is spent on useful work; this significantly improves computational efficiency, especially for Transformer-style models.
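To see where the padding waste comes from, the sketch below compares the tokens processed under pad-to-max batching with the tokens actually present, and packs sequences into one flat buffer with cumulative offsets so that no padding is stored. This is a generic illustration assuming an offset-based (unpadded) layout; the article does not describe PaddlePaddle's actual variable-length kernels, and the function names are hypothetical.

```python
from itertools import accumulate


def padded_vs_packed(seq_lens):
    """Tokens processed with pad-to-max batching vs. tokens actually present."""
    padded = len(seq_lens) * max(seq_lens)
    packed = sum(seq_lens)
    return padded, packed


def pack(sequences):
    """Concatenate sequences into one flat buffer plus cumulative offsets.

    flat[offsets[i]:offsets[i + 1]] is sequence i, so no padding is stored.
    """
    flat = [tok for seq in sequences for tok in seq]
    offsets = [0] + list(accumulate(len(s) for s in sequences))
    return flat, offsets


def unpack(flat, offsets):
    """Recover the original sequences from the packed layout."""
    return [flat[offsets[i]:offsets[i + 1]] for i in range(len(offsets) - 1)]
```

For example, a batch with lengths `[128, 300, 512, 64]` costs 2048 token positions when padded to 512 but only 1004 real tokens, so roughly half of the padded work would be spent on padding.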

High-performance operator library and fusion optimization

To push the framework's baseline performance to the limit, PaddlePaddle developed PHI, a high-performance operator library. Its GPU kernels are thoroughly optimized to increase parallelism within each operator, and operator fusion reduces memory-access overhead, getting the most out of the GPU.
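Operator fusion can be illustrated with a bias add followed by GeLU, a pattern that appears in BERT's feed-forward layers. In this sketch, plain Python lists stand in for GPU memory: the unfused version writes an intermediate buffer and reads it back, while the fused version makes a single pass. The point is the number of passes over memory, not speed; this is not PHI's implementation, and the function names are hypothetical.

```python
import math


def gelu(v):
    # Exact GeLU: v * Phi(v), where Phi is the standard normal CDF.
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))


def bias_gelu_unfused(x, b):
    tmp = [xi + bi for xi, bi in zip(x, b)]  # "kernel 1" writes an intermediate buffer
    return [gelu(t) for t in tmp]            # "kernel 2" reads that buffer back


def bias_gelu_fused(x, b):
    # One pass: each input element is read once and each output written once,
    # with no intermediate buffer in between.
    return [gelu(xi + bi) for xi, bi in zip(x, b)]
```

Both versions compute the same values; on a GPU, the fused version saves one full write and read of the intermediate tensor.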

High-speedup hybrid parallel training strategy

To overcome the performance and GPU-memory bottlenecks of traditional data parallelism, PaddlePaddle implements a hybrid parallel distributed training strategy that combines data parallelism, model parallelism, and grouped parameter-sharding parallelism, achieving superlinear speedup in some scenarios.
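One common way to organize hybrid parallelism is to lay the ranks out on a grid with one axis per parallel strategy; ranks that differ only along one axis form the communication group for that strategy. The sketch below assumes a hypothetical axis order (data, sharding, model) with the last axis varying fastest; real frameworks, PaddlePaddle included, choose their own layouts.

```python
def rank_to_coords(rank, degrees):
    """Map a global rank to one coordinate per parallel axis (last axis fastest)."""
    coords = []
    for d in reversed(degrees):
        coords.append(rank % d)
        rank //= d
    return tuple(reversed(coords))


def coords_to_rank(coords, degrees):
    """Inverse of rank_to_coords."""
    rank = 0
    for c, d in zip(coords, degrees):
        rank = rank * d + c
    return rank


def group_members(rank, degrees, axis):
    """Ranks that differ from `rank` only along `axis`; they communicate together."""
    coords = list(rank_to_coords(rank, degrees))
    members = []
    for i in range(degrees[axis]):
        coords[axis] = i
        members.append(coords_to_rank(coords, degrees))
    return members


# Hypothetical layout: 2-way data x 2-way sharding x 2-way model parallel = 8 ranks.
DEGREES = (2, 2, 2)
```

With `DEGREES = (2, 2, 2)`, rank 5 sits at coordinates `(1, 0, 1)`: it shares model-parallel work with rank 4, shards parameters with rank 7, and all-reduces data-parallel gradients with rank 1.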

Asynchronous scheduling across the whole pipeline

The stages of model training synchronize frequently and overlap little in time. PaddlePaddle's asynchronous scheduling mechanism removes most synchronization operations while preserving model convergence, so that data processing, training, and collective communication are scheduled almost entirely asynchronously, improving end-to-end performance.
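A minimal sketch of the asynchronous idea: launch each layer's gradient communication as soon as the backward pass produces it, and wait only once at the end instead of blocking after every launch. A thread pool and a fake `allreduce` stand in for real collectives; `WORLD_SIZE`, `allreduce`, and `backward_with_async_comm` are hypothetical, and this is not PaddlePaddle's scheduler.

```python
from concurrent.futures import ThreadPoolExecutor

WORLD_SIZE = 4  # hypothetical number of ranks


def allreduce(grad):
    # Hypothetical stand-in for a collective: every rank is assumed to hold the
    # same gradient here, so the sum over ranks is grad * WORLD_SIZE.
    return grad * WORLD_SIZE


def backward_with_async_comm(layer_grads, pool):
    """Launch each layer's all-reduce as soon as it is ready; synchronize once."""
    pending = []
    for g in reversed(layer_grads):  # backward visits layers from last to first
        pending.append(pool.submit(allreduce, g))  # non-blocking launch
        # Computing the next layer's gradient would overlap with communication here.
    # Single synchronization point; reversing restores the original layer order.
    return [f.result() for f in reversed(pending)]


if __name__ == "__main__":
    with ThreadPoolExecutor(max_workers=2) as pool:
        print(backward_with_async_comm([1.0, 2.0, 3.0], pool))
```

Deferring the wait lets communication for later layers run while earlier layers are still being computed, which is the overlap the paragraph above describes.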

Supporting technological innovation and industrial deployment of large models

Baidu has long prioritized research and development of large models and is committed to bringing them into industrial use. Training large models requires strong support for high-performance distributed training from the deep learning framework.

PaddlePaddle's distributed training grew out of industrial practice and has steadily strengthened its lead. It has successively released a number of highlight technologies, including the industry's first general-purpose heterogeneous parameter server architecture, a 4D hybrid parallel training strategy, and an end-to-end adaptive distributed training architecture, each refined for different model structures and for sparse and dense workloads. These allow algorithms across a wide range of fields, including computer vision, natural language processing, personalized recommendation, and scientific computing, to train with high performance on heterogeneous hardware, effectively speeding up the iteration of large-model innovation.

PaddlePaddle's leading distributed technology and high-performance training features have enabled software and hardware solutions built on PaddlePaddle to achieve strong results on MLPerf repeatedly. They have also supported the release of many industry-leading Wenxin (ERNIE) large models, such as "Pengcheng-Baidu·Wenxin", the world's first knowledge-enhanced model with hundreds of billions of parameters; "State Grid-Baidu·Wenxin", a knowledge-enhanced NLP large model for the power industry; "SPD Bank-Baidu·Wenxin", a knowledge-enhanced NLP large model for the financial industry; and a ten-million-scale AlphaFold2 protein structure analysis model trained on domestic hardware clusters.

Conclusion

PaddlePaddle's first-place BERT training performance in MLPerf Training v2.0 is a notable achievement. It reflects not only the framework's long-term work on performance optimization but also the support of the hardware ecosystem. In recent years, PaddlePaddle's technical strength has been widely recognized by hardware vendors, cooperation has grown closer, and software-hardware co-development and ecosystem co-building have been fruitful. Not long ago (May 26), NVIDIA and PaddlePaddle jointly launched NGC-Paddle. In the same MLPerf results, Graphcore also achieved excellent results using the PaddlePaddle framework. Going forward, PaddlePaddle will continue to build on its performance advantages and keep innovating in software-hardware co-optimization and large-scale distributed training, providing users with a more convenient, easy-to-use, high-performance deep learning framework.

About MLPerf

MLPerf is an AI benchmark initiated by world-renowned academic researchers and industry experts. It aims to provide a fair and practical benchmarking platform that showcases the best performance of industry-leading AI software and hardware systems, and its results are widely recognized in the AI field. Almost all major hardware vendors and software providers worldwide use MLPerf's published results as a reference when building their own benchmarking systems, in order to test how new AI accelerator chips and deep learning frameworks perform on MLPerf models.

Livestream announcement

On July 6 (Wednesday) at 20:00, PaddlePaddle chief architect Yu Dianhai and senior R&D engineer Zeng Jinle will host a livestream revealing the key technologies behind Baidu PaddlePaddle's "world first" performance under the same GPU configuration.

Follow the PaddlePaddle official account and reply 【Study】 to sign up. More gifts await you in the livestream!

Follow the 【PaddlePaddle】 official account for more technical content.

This article is shared from the CSDN blog "PaddlePaddle".

If there is any infringement , Please contact the [email protected] Delete .

Participation of this paper “OSC Source creation plan ”, You are welcome to join us , share .

边栏推荐

- Day08 ternary operator extension operator character connector symbol priority

- [idea] common shortcut keys

- mysql 盲注常见函数

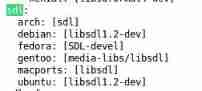

- Package ‘*****‘ has no installation candidate

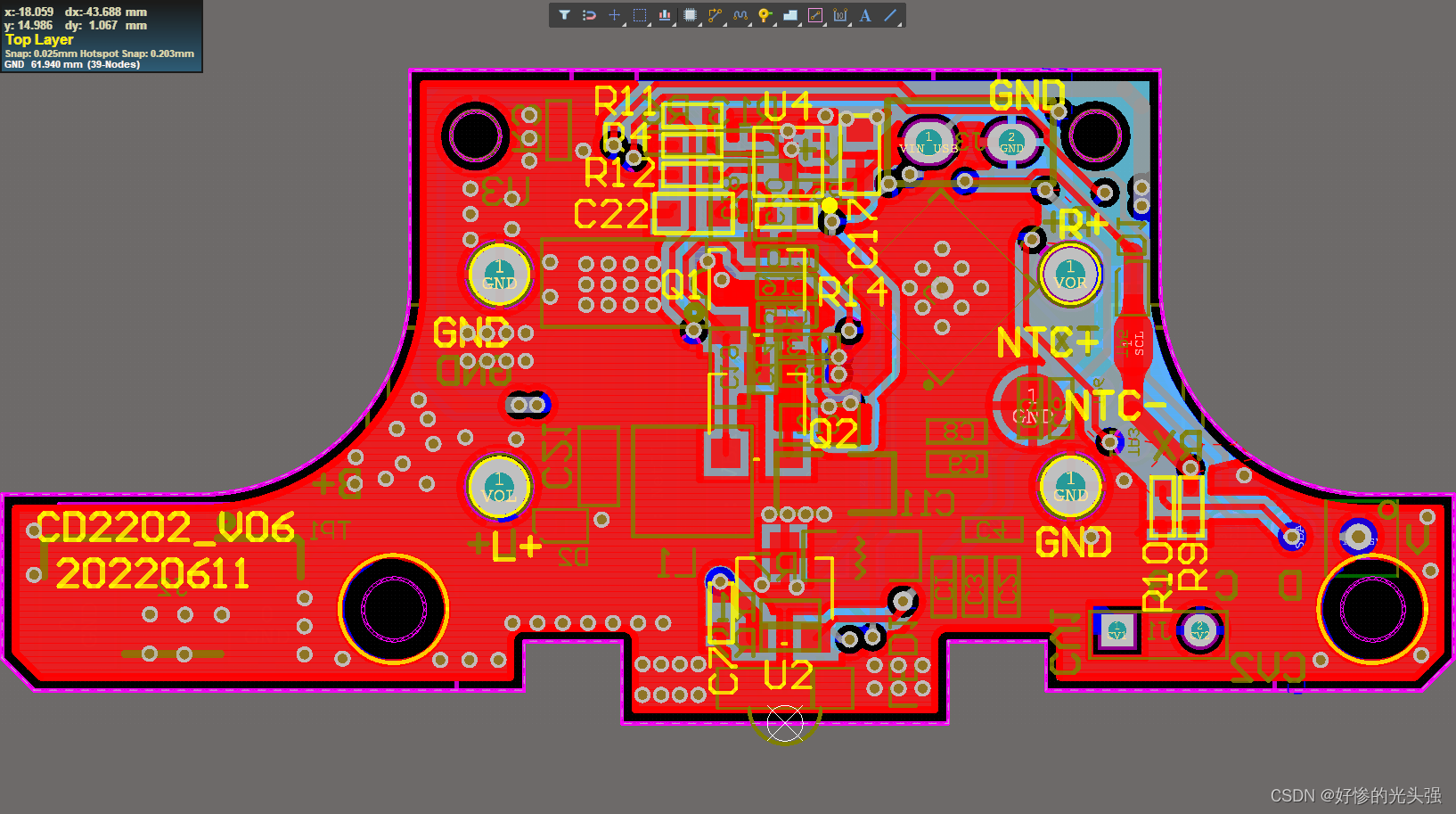

- Altium designer 19.1.18 - clear information generated by measuring distance

- Good websites need to be read carefully

- deepin 20 kivy unable to get a window, abort

- About yolov3, conduct map test directly

- High end electronic chips help upgrade traditional oil particle monitoring

- The global and Chinese market of lithographic labels 2022-2028: Research Report on technology, participants, trends, market size and share

猜你喜欢

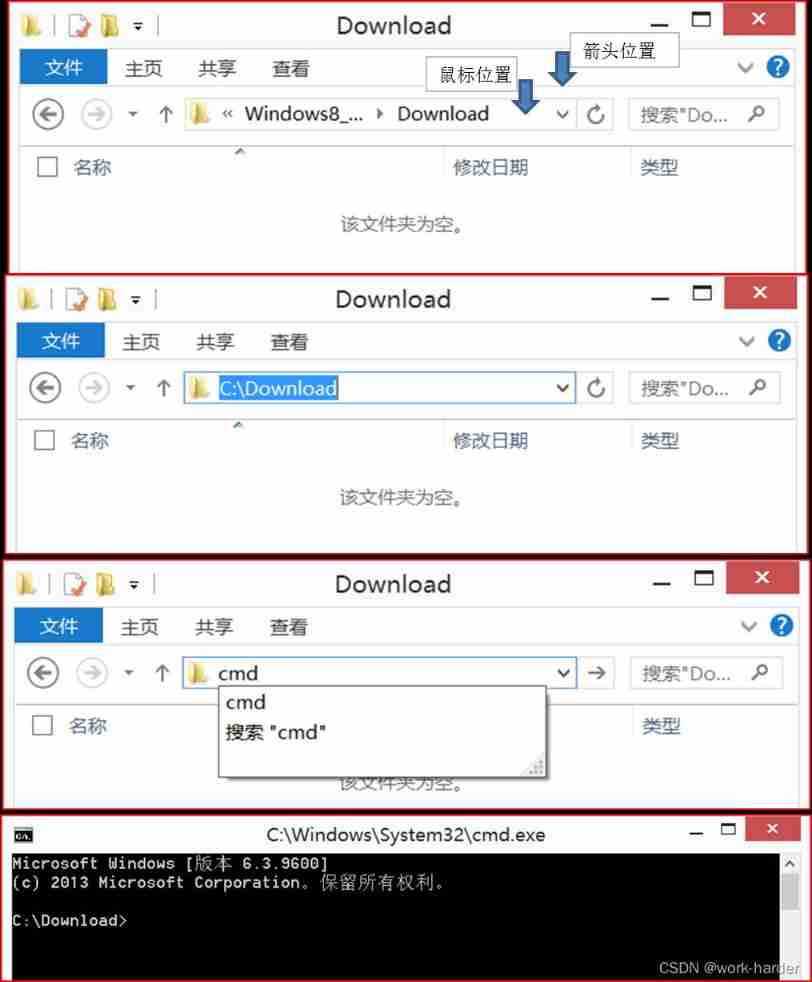

The folder directly enters CMD mode, with the same folder location

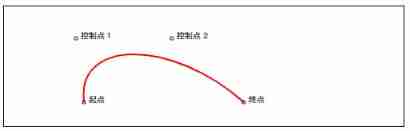

What is Bezier curve? How to draw third-order Bezier curve with canvas?

Altium designer 19.1.18 - change the transparency of copper laying

Acwing - the collection of pet elves - (multidimensional 01 Backpack + positive and reverse order + two forms of DP for the answer)

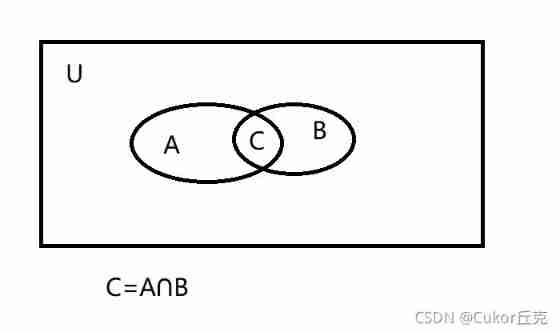

Set theory of Discrete Mathematics (I)

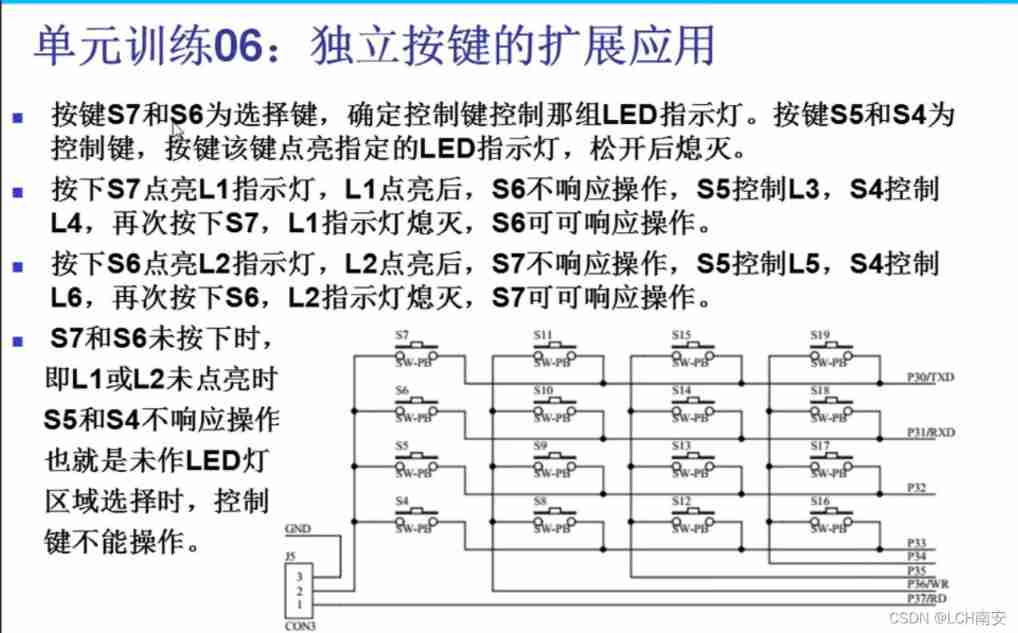

Extended application of single chip microcomputer-06 independent key

Could NOT find XXX (missing: XXX_LIBRARY XXX_DIR)

II Simple NSIS installation package

Practical application cases of digital Twins - fans

A complete set of indicators for the 10000 class clean room of electronic semiconductors

随机推荐

Cygwin installation

Apple system optimization

Opendrive ramp

How to realize audit trail in particle counter software

Altium designer 19.1.18 - Import frame

Practical application cases of digital Twins - fans

Function of static

Opendrive record

Deepin get file (folder) list

[neo4j] common operations of neo4j cypher and py2neo

1089 Insert or Merge 含测试点5

Set theory of Discrete Mathematics (I)

[popular science] some interesting things that I don't know whether they are useful or not

Global and Chinese market of digital shore durometer 2022-2028: Research Report on technology, participants, trends, market size and share

Temperature sensor DS18B20 principle, with STM32 routine code

Esmini longspeedaction modification

The folder directly enters CMD mode, with the same folder location

High end electronic chips help upgrade traditional oil particle monitoring

Use go language to read TXT file and write it into Excel

UE5热更新-远端服务器自动下载和版本检测(SimpleHotUpdate)