Dive into Deep Learning, Chapter 3 (1): Linear Regression Implemented from Scratch. Study Notes and Exercise Answers

2022-07-02 17:14:00 【coder_ sure】

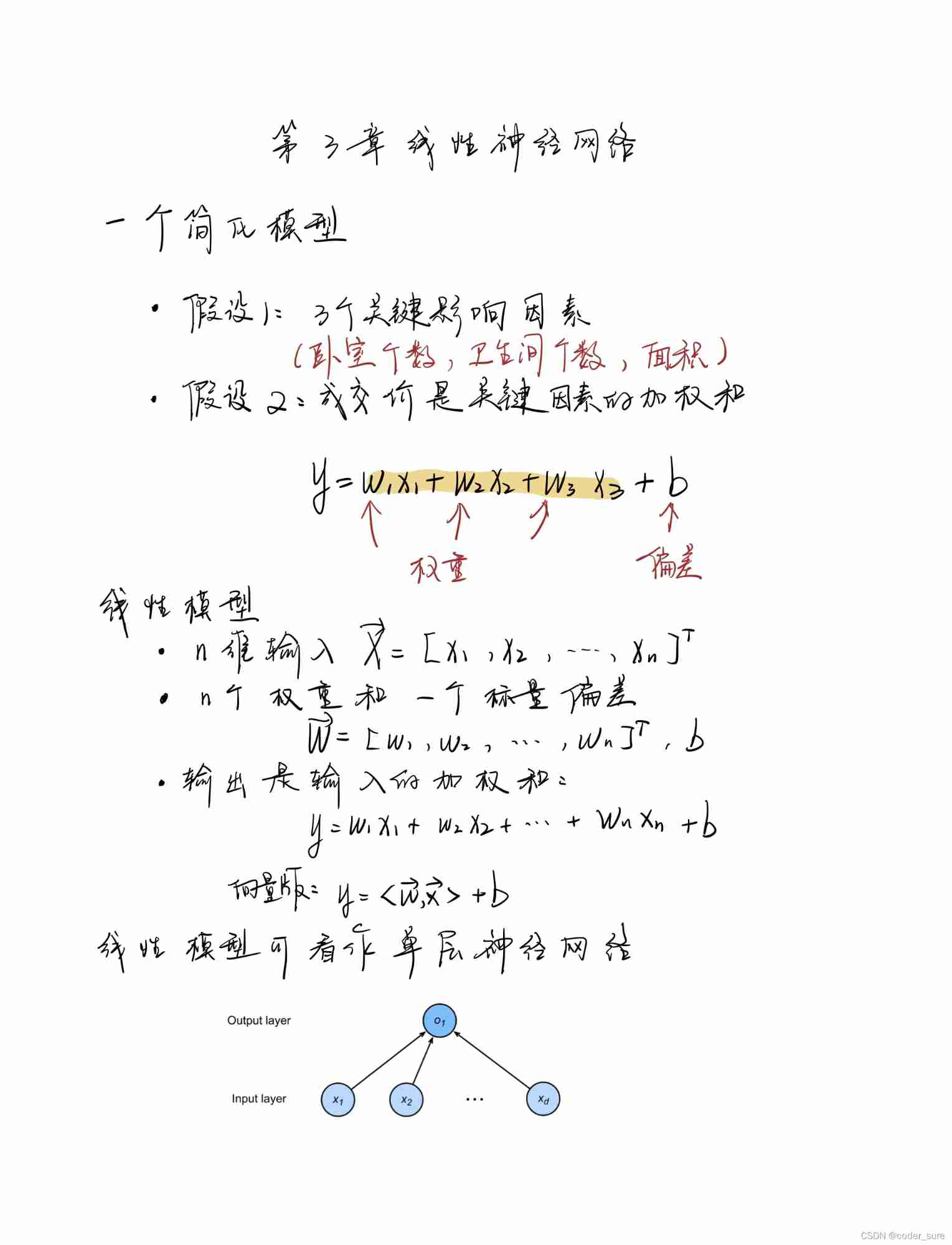

3.1 Linear regression

Author's GitHub link: github link

Learning notes

Exercises

- What happens if we initialize the weights to zero? Does the algorithm still work?

- Suppose you are Georg Simon Ohm trying to build a model of the relationship between voltage and current. Can you use automatic differentiation to learn the parameters of your model?

- Can you use Planck's law to determine the temperature of an object from its spectral energy density?

- What problems might you encounter if you want to compute second derivatives? How would you solve them?

- Why is the reshape function needed in the squared_loss function?

- Experiment with different learning rates and observe how quickly the value of the loss function drops.

- If the number of samples cannot be divided by the batch size, what happens to the behavior of the data_iter function?

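Solutions

1. What happens if we initialize the weights to zero? Does the algorithm still work?

Experiments show that the algorithm still works, and in this run the losses even look slightly better.

All-zero initialization is a common choice for linear models. The squared loss of linear regression is convex in w and b, so gradient descent heads toward the same minimum whether it starts from zeros or from a normal distribution. The symmetry problem that zero initialization causes in multi-layer networks does not arise with a single linear layer.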

```python
# w = torch.normal(0, 0.01, size=(2, 1), requires_grad=True)
w = torch.zeros((2, 1), requires_grad=True)
```

```
epoch 1, loss 0.036967
epoch 2, loss 0.000132
epoch 3, loss 0.000050
```
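To reproduce this comparison, here is a minimal sketch. It assumes synthetic data generated with the book's true_w = [2, -3.4] and true_b = 4.2. The helper `train`, the seed, and the hyperparameters are illustrative choices, not necessarily the author's exact script.

```python
import torch

torch.manual_seed(0)

# Synthetic data: y = Xw + b + small Gaussian noise (assumed setup)
true_w = torch.tensor([2.0, -3.4])
true_b = 4.2
X = torch.normal(0, 1, (1000, 2))
y = (X @ true_w + true_b + torch.normal(0, 0.01, (1000,))).reshape(-1, 1)

def train(w, b, lr=0.03, num_epochs=3, batch_size=10):
    """Minibatch SGD on the squared loss; returns the final mean loss."""
    for _ in range(num_epochs):
        perm = torch.randperm(len(X))
        for i in range(0, len(X), batch_size):
            idx = perm[i:i + batch_size]
            loss = ((X[idx] @ w + b - y[idx]) ** 2 / 2).sum()
            loss.backward()
            with torch.no_grad():
                # Average the summed gradient over the batch, then reset it
                w -= lr * w.grad / len(idx)
                b -= lr * b.grad / len(idx)
                w.grad.zero_()
                b.grad.zero_()
    with torch.no_grad():
        return ((X @ w + b - y) ** 2 / 2).mean().item()

loss_zero = train(torch.zeros((2, 1), requires_grad=True),
                  torch.zeros(1, requires_grad=True))
loss_normal = train(torch.normal(0, 0.01, size=(2, 1), requires_grad=True),
                    torch.zeros(1, requires_grad=True))
print(f"zero init: {loss_zero:.6f}, normal init: {loss_normal:.6f}")
```

Both runs should end with a loss close to the noise floor, which is what one would expect for a convex problem.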

4. What problems might you encounter if you want to compute second derivatives? How would you solve them?

By default, autograd frees the computational graph once the first derivative has been computed, and the returned gradient is not part of any graph, so it cannot be differentiated again directly. Solution: when computing the first derivative, keep the computational graph (create_graph=True), then differentiate the result.

Example: for $y = x^3 + \cos x$ at $x = \frac{\pi}{2}, \pi$, find the first and second derivatives.

Reference resources

```python
import math

import torch

x = torch.tensor([math.pi / 2, math.pi], requires_grad=True)
y = x ** 3 + torch.cos(x)

# Analytic derivatives, used to check the autograd results
true_dy = 3 * x ** 2 - torch.sin(x)
true_d2y = 6 * x - torch.cos(x)

# Compute the first derivative and keep its graph so it can be differentiated again
dy = torch.autograd.grad(y, x,
                         grad_outputs=torch.ones(x.shape),
                         create_graph=True,
                         retain_graph=True)  # keep the graph for the second derivative

# .detach().numpy() prints only the tensor values
print("First derivative, true value: {}\nFirst derivative, computed value: {}".format(
    true_dy.detach().numpy(), dy[0].detach().numpy()))

# Compute the second derivative; dy[0] is the first derivative
d2y = torch.autograd.grad(dy[0], x,
                          grad_outputs=torch.ones(x.shape),
                          create_graph=False)  # no further graph is needed

print("\nSecond derivative, true value: {}\nSecond derivative, computed value: {}".format(
    true_d2y.detach().numpy(), d2y[0].detach().numpy()))
```

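5. Why is the reshape function needed in the squared_loss function?

$\hat{y}$ is a column vector of shape (n, 1), while $y$ may be a row vector or a 1-D tensor of shape (n,). Reshaping $y$ to the shape of $\hat{y}$ keeps the subtraction element-wise. Without it, broadcasting would produce an (n, n) result.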
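The shape mismatch can be shown with a small sketch. The three-element tensors are made-up values for illustration, and `squared_loss` is written here in the form the book uses (subtract after reshaping `y` to `y_hat.shape`).

```python
import torch

y_hat = torch.tensor([[1.0], [2.0], [3.0]])  # shape (3, 1), like the output of X @ w + b
y = torch.tensor([1.0, 2.0, 3.0])            # shape (3,), a flat label vector (illustrative)

def squared_loss(y_hat, y):
    """Squared loss; y is reshaped to match y_hat before subtracting."""
    return (y_hat - y.reshape(y_hat.shape)) ** 2 / 2

wrong = (y_hat - y) ** 2 / 2    # broadcasting expands both operands to (3, 3)
right = squared_loss(y_hat, y)  # element-wise result of shape (3, 1)

print(tuple(wrong.shape), wrong.sum().item())  # (3, 3) 6.0
print(tuple(right.shape), right.sum().item())  # (3, 1) 0.0
```

Although the predictions match the labels exactly, the unreshaped version reports a non-zero loss because it compares every prediction with every label.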

6. Experiment with different learning rates and observe how quickly the value of the loss function drops.

Try it yourself:

- With a learning rate that is too low, the loss decreases slowly.

- With a learning rate that is too high, the loss fails to converge.
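A toy sketch makes both effects visible. It is a simplification: full-batch gradient descent on a one-parameter model with made-up data, not the chapter's minibatch SGD. The function names and learning rates are illustrative.

```python
# Toy data generated by y = 2x, so the best weight is w = 2 (illustrative values)
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

def loss(w):
    """Mean of the halved squared errors for the model y_hat = w * x."""
    return sum((w * x - y) ** 2 / 2 for x, y in zip(xs, ys)) / len(xs)

def grad(w):
    """Derivative of loss(w) with respect to w."""
    return sum((w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def train(lr, steps=20, w=0.0):
    """Plain gradient descent starting from w = 0; returns the final loss."""
    for _ in range(steps):
        w -= lr * grad(w)
    return loss(w)

final_losses = {lr: train(lr) for lr in (0.01, 0.2, 0.5)}
for lr, value in final_losses.items():
    print(f"lr={lr}: final loss {value:.6g}")
```

With these values, 0.01 is still far from the minimum after 20 steps, 0.2 converges, and 0.5 overshoots on every step so the loss grows instead of shrinking.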

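7. If the number of samples cannot be divided by the batch size, what happens to the behavior of the data_iter function?

No error is raised. The book's data_iter slices the shuffled indices with min(i + batch_size, num_examples), so the last batch simply contains the remaining samples and is smaller than batch_size.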
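The batching behavior can be checked with a minimal sketch. It assumes an index-slicing implementation like the book's, simplified here to plain Python lists instead of tensors. The 10-sample dataset is made up for illustration.

```python
import random

def data_iter(batch_size, features, labels):
    """Yield shuffled minibatches; a simplified list-based version of the book's function."""
    num_examples = len(features)
    indices = list(range(num_examples))
    random.shuffle(indices)
    for i in range(0, num_examples, batch_size):
        # min() caps the slice at the end of the data, so the last batch may be short
        batch = indices[i:min(i + batch_size, num_examples)]
        yield [features[j] for j in batch], [labels[j] for j in batch]

features = list(range(10))          # 10 samples (illustrative)
labels = [2 * x for x in features]
batch_sizes = [len(X) for X, _ in data_iter(3, features, labels)]
print(batch_sizes)  # [3, 3, 3, 1]
```

With 10 samples and a batch size of 3, the iterator yields three full batches and one final batch containing the single remaining sample.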

边栏推荐

- IP地址转换地址段

- Connect Porsche and 3PL EDI cases

- GeoServer:发布PostGIS数据源

- Notice on holding a salon for young editors of scientific and Technological Journals -- the abilities and promotion strategies that young editors should have in the new era

- 只是巧合?苹果iOS16的神秘技术竟然与中国企业5年前产品一致!

- C语言中sprintf()函数的用法

- Error when uploading code to remote warehouse: remote origin already exists

- QWebEngineView崩溃及替代方案

- 剑指 Offer 24. 反转链表

- 数字IC手撕代码--投票表决器

猜你喜欢

Go zero micro service practical series (VIII. How to handle tens of thousands of order requests per second)

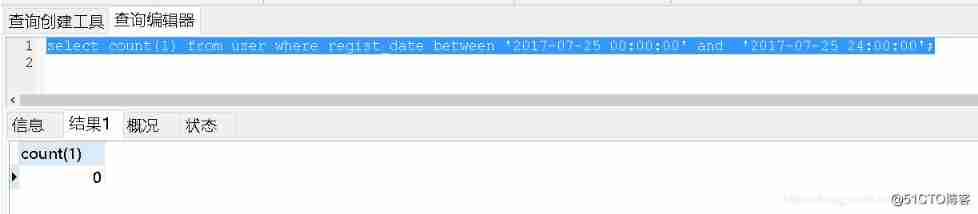

In MySQL and Oracle, the boundary and range of between and precautions when querying the date

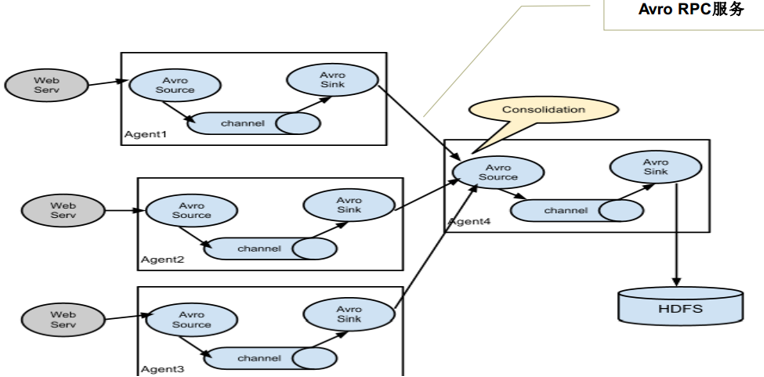

【云原生】简单谈谈海量数据采集组件Flume的理解

剑指 Offer 25. 合并两个排序的链表

剑指 Offer 21. 调整数组顺序使奇数位于偶数前面

电脑自带软件使图片底色变为透明(抠图白底)

Green bamboo biological sprint Hong Kong stocks: loss of more than 500million during the year, tiger medicine and Beijing Yizhuang are shareholders

Error when uploading code to remote warehouse: remote origin already exists

深度之眼(三)——矩阵的行列式

Executive engine module of high performance data warehouse practice based on Impala

随机推荐

The macrogenome microbiome knowledge you want is all here (2022.7)

Changwan group rushed to Hong Kong stocks: the annual revenue was 289million, and Liu Hui had 53.46% voting rights

Dgraph: large scale dynamic graph dataset

OpenPose的使用

宝宝巴士创业板IPO被终止:曾拟募资18亿 唐光宇控制47%股权

Go zero micro service practical series (VIII. How to handle tens of thousands of order requests per second)

Qstype implementation of self drawing interface project practice (II)

寒门再出贵子:江西穷县考出了省状元,做对了什么?

Lampe respiratoire PWM

对接保时捷及3PL EDI案例

Executive engine module of high performance data warehouse practice based on Impala

Just a coincidence? The mysterious technology of apple ios16 is even consistent with the products of Chinese enterprises five years ago!

A few lines of code to complete RPC service registration and discovery

Configure MySQL under Linux to authorize a user to access remotely, which is not restricted by IP

【Leetcode】14. 最長公共前綴

畅玩集团冲刺港股:年营收2.89亿 刘辉有53.46%投票权

基于多元时间序列对高考预测分析案例

Leetcode1380: lucky numbers in matrix

In MySQL and Oracle, the boundary and range of between and precautions when querying the date

剑指 Offer 26. 树的子结构