当前位置:网站首页>"Redis source code series" learning and thinking about source code reading

"Redis source code series" learning and thinking about source code reading

2022-07-02 09:07:00 【Programmer Qiqi】

Preface

Through the previous source code reading and analysis , We start the service , Acceptance and processing of data flow , whole DB structure , Detailed storage data structure and other aspects of learning for Redis6.0 Have a more systematic cognition . In particular, we should analyze and think deeply about some excellent designs after learning , Can we use these experiences for reference in our actual work ? This time I will discuss with you what I have learned from my study .

Server model

stay Redis6.0 Previous versions of the server used single process and single thread processing , The advantage is to avoid the concurrent locking overhead , The disadvantage is that you can't make full use of CPU Multi-core processing of . In the current business scenario , CPU It usually does not become the main bottleneck of the load , It's more about memory and network .Redis The multithreaded network model of is actually not a standard

Multi-Reactors/Master-Workers Model , stay 6.0.0 In the version of the , I/O Threads are only responsible for completing I/O Stream processing task , Of course, the main thread will also take part in I/O Mission , The real process of command execution is completed in the main thread . In this way, the lock free processing instructions can still be kept . In this way, the compatibility of the original system is maintained , And we can use multi-core to improve I/O performance . The thinking that this brings to me is a typical treatment method that we do not need to be completely confined to historical experience . We can make our own server processing strategies for different scenarios .

Share a case, In a highly concurrent distributed system , The processing of the same user request may involve locking , The transaction operations , Idempotency check, etc . Analogy to redis Handling of commands , It's essentially the same , The data requested by the user is equivalent to set operation , The data in the database is equivalent to RedisDB Objects to be manipulated in , In business, we usually control concurrency through distributed locks , Use the isolation level of the database to control transaction locks , Use database unique key to do idempotent control, etc . This universal scheme can avoid mistakes to a great extent , But the performance is affected to some extent , Moreover, if not handled properly, deadlocks often occur , So can we refer to Redis The processing method on multithreading is used to realize the business ? The answer is yes .

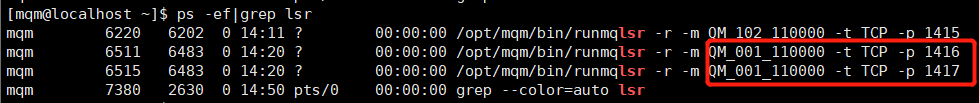

Pictured , stay server We implement a typical Master-Workers Architecturally tcp The server , User requests can be processed according to the number of thread pools specified in the configuration file .

// establish process

func (process *taskProcess) createGoPool() {

for i := 0; i < process.maxGo; i++ {

go func(processId int) {

ch := make(chan *server.ServerContext, 128)

process.goPool.Store(processId, ch)

for {

select {

case data, ok := <-ch:

if !ok {

fmt.Println("process is not ok ", processId)

}

// Processing request data

process.dealData(data)

}

}

}(i)

}

}When the request comes, the distribution rules are customizable , Joining our business can be based on UID To divide requests , According to UID%threadNum Appoint processId To distribute requests to the specified goroutine To deal with , The pseudocode is as follows :

func (dispatcher *Dispatcher) parseMsg(info *requestInfo) {

// analysis body data

requestBody := &server.RequestMsgBody{}

err := json.Unmarshal(info.msg, &requestBody)

if err != nil {

writeinfo := fmt.Sprintf(" Error parsing : %+v, Message drop : %s", err, string(info.msg))

write, _ := comm.GbkToUtf8([]byte(writeinfo))

info.conn.Write(write)

return

}

// If there is a request from the current user ID, The same thread is used to process , Otherwise, idle threads are used to process and record users ID With threads ID

var processId int

if val, isExistsUidProcessId := dispatcher.activeUserProcessMap.Load(requestBody.Md.Uid); buyOk {

processId = val.(int)

} else {

processId = NewTaskProcess.GetIdleProcess()

}

// Distribute data

taskData := &server.ServerContext{

Conn: info.conn,

Body: requestBody,

}

// Send data distribution messages

ch, ok := NewTaskProcess.goPool.Load(processId)

if ok {

ch.(chan *server.ServerContext) <- taskData

}

// maintain UID Yes processId The relationship between

// Send successfully , preservation map & Count

if requestBody.Md.Uid > 0 {

dispatcher.activeUserProcessMap.Store(requestBody.Md.Uid, processId)

dispatcher.activeUserCount(requestBody.Md.Uid, 1)

}

}Based on this scheme, the user request can be processed in a single thread , Don't worry about any lock consumption , Of course, the load balancing gateway layer also needs to add a plug-in for request distribution , Link the request to the cluster's server Binding , In this way, it is possible to simulate the implementation of single thread processing the user's request , When concurrency is extremely high , It can greatly avoid the overhead caused by various locks . And the processing capacity can also be improved 30%. Of course, this is not a general solution , It is a special implementation based on a special business scenario . It is also an inspiration to us .

Progressive type rehash

When there are millions of key, Occupancy GB In memory , Capacity expansion is a very time-consuming operation , meanwhile CPU Usage will also soar . Regarding this redis The incremental capacity expansion strategy is used , Review the redishashtable Structure :

#dict The data structure of the dictionary

typedef struct dict{

dictType *type; // A straight line dictType structure ,dictType Structure contains custom functions , These functions make key and value Can store any type of data

void *privdata; // Private data , preserved dictType Function in structure Parameters

dictht ht[2]; // Two hash tables

long rehashidx; //rehash The tag ,rehashidx=-1 Indicates that there is no rehash,rehash Every barrel moved is right rehashidx Add one

int itreators; // The number of iterators that are iterating

}

#dict In structure ht[0]、ht[1] Data structure of hash table

typedef struct dictht{

dictEntry[] table; // The address where an array is stored , The hash node is stored in the array dictEntry The address of

unsingned long size; // Hashtable table Size , The starting size is 4

unsingned long sizemask; // Is used to hash Values map to table Index of location , The size is (size-1)

unsingned long used; // The record hash table already has nodes ( Key value pair ) The number of

} When the expansion conditions are met , Will rehashidx Set as 0 identification rehash Start , In a progressive way rehash During the process , The deletion of the dictionary 、 lookup 、 Update and other operations will be performed on two hash tables . for instance , To find a key in a dictionary , The program will start with ht[0] Search inside , If you don't find it , It will continue until ht[1] Search inside , All new key value pairs added to the dictionary will be saved to ht[1] Inside , At a certain point in time , Will ht[0] Data migration for is complete , And will ht[1] Set as ht[0], ht[1] Set as NULL, For the next time rehash To prepare for .

In an exchange with sina students , It is found that they also use a similar method to update the local cache , We know that sina was once the largest redis colony , Like when hot news breaks out , Comment on , forward , The magnitude of hot news will rise exponentially , If you use Redis Performance is far from enough , So they also use a lot of local cache , Using the local cache involves the timely updating of the cache , It is also unavoidable to update a large number of local caches, which will involve read-write lock overhead , A lot of capacity expansion CPU Consumption, etc , For this reason, they also adopted two sets map To avoid such problems , The business model is roughly as follows :

When the request arrives, the local cache will be read first ( Memory ) data , At the same time, the local cache will have settings from various sources , When the amount of data is large, there will be very large cpu shake , At the same time, the request fluctuates greatly , Create a bad experience , So there are two in the structure map, One is read-only map, The other is writable map, Read only when reading the cache map, When updating the cache , Write all caches to writable map, After the update is written , Cache the latest map Accelerate assignment to read-only map, At the same time, clear the update map, The pseudocode is as follows :

package main

import "sync"

type localCache struct {

lock sync.RWMutex

Cache map[string]interface{}

update map[string]interface{}

}

func (c *localCache) Get(key string) interface{} {

c.lock.RLock()

defer c.lock.RUnlock()

cache, ok := c.Cache[key]

if ok {

return cache

}

return nil

}

func (c *localCache) Set(key string, val interface{}) {

c.update[key] = val

}

// Initialize the length of the data to be updated

func (c *localCache) MakeUpdateCache(len int) {

c.update = make(map[string]interface{}, len)

}

func (c *localCache) Rehash() {

c.lock.RLock()

defer c.lock.RUnlock()

c.Cache = c.update

c.MakeUpdateCache(0)

}This will only update db Not frequently for a map Carry out the expansion operation , At the same time, it can guarantee Cache There will be no historical dirty data in the field . Only in execution Rehash() The memory allocation action will not be executed until the method is completed , And in the case of more reading and less writing , RWMutex The performance can reach the level of mutual exclusion 8 About times ( Reference resources Go Language high performance programming ). This is based on progressive rehash Thoughts on local caching that can be brought to me , But I haven't practiced this plan myself , Therefore, various details need to be improved , Also welcome to discuss .

Dynamic memory allocation

Share a problem at work , There is one case The phenomenon is that when the request concurrency is large, it will occur cpu Surge in usage , It is relatively simple to view the business logic of relevant interfaces , Load data from disk into memory map in , Then return map Medium key. Then start looking at the system call relationships , I found that there was a very right cpu Time consuming in php On the memory allocation mmap, debug Found using php Array of += Operation to merge objects , And the data of each operation is relatively large , So it created cpu High utilization rate , The solution is simple , You can change a similar operation to a shared memory operation , adopt lstat Call to get the total size of the file to be saved , We only need to open up a piece of memory storage at one time , You can use shared memory operations :

$shmid = shmop_open($systemid, $mode, $permissions, $size);Parameter 4 is the memory size to be opened , You can reduce the number of memory allocations , Reduce frequent memory allocation .

data structure

Redis Many excellent data structures are implemented in , Some are more than just typical implementations , Instead, it makes improvements according to actual needs , Such as Skiplist The backward pointer is added to the . As for data structure and algorithm design, we may not have much time to think deeply and apply in our daily work , The industry is also relatively mature , Reliable schemes can be used for reference , But in fact, if we put aside the existing scheme , The data structures and algorithms we need can be seen everywhere in our work , Such as the organization and search of parent-child nodes , Build your own weight queue and other scenarios .

A few days ago, there was a scenario where the client was given a set of reason options , There may be a secondary option under each option , So this scenario can be an infinite category , In the initial implementation, it is found through recursive calls . The following optimization uses the depth traversal of the tree to find , Personal thinking is to be able to use the appropriate data structure to achieve different businesses, which helps to improve their thinking ability .

summary

So far about Redis6.0.0 Version of the source code analysis is ready to come to a conclusion , In this process, I found that one of the spur to myself is in addition to learning knowledge , It also needs to absorb and digest knowledge through thinking , In the follow-up study, we should also have the ability of execution and thinking :)

边栏推荐

- gocv opencv exit status 3221225785

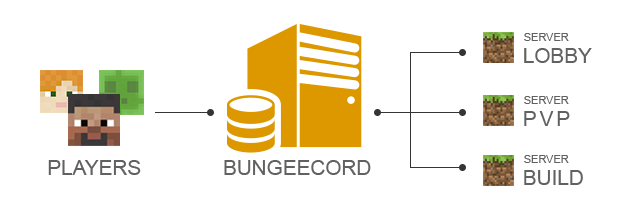

- Service de groupe minecraft

- Minecraft插件服开服

- Select sort and insert sort

- Minecraft group service opening

- History of Web Technology

- Tensorflow2 keras 分类模型

- Mysql安装时mysqld.exe报`应用程序无法正常启动(0xc000007b)`

- Aneng logistics' share price hit a new low: the market value evaporated by nearly 10 billion yuan, and it's useless for chairman Wang Yongjun to increase his holdings

- AMQ6126问题解决思路

猜你喜欢

队列管理器running状态下无法查看通道

CSDN Q & A_ Evaluation

C4D quick start tutorial - Chamfer

【Go实战基础】gin 如何绑定与使用 url 参数

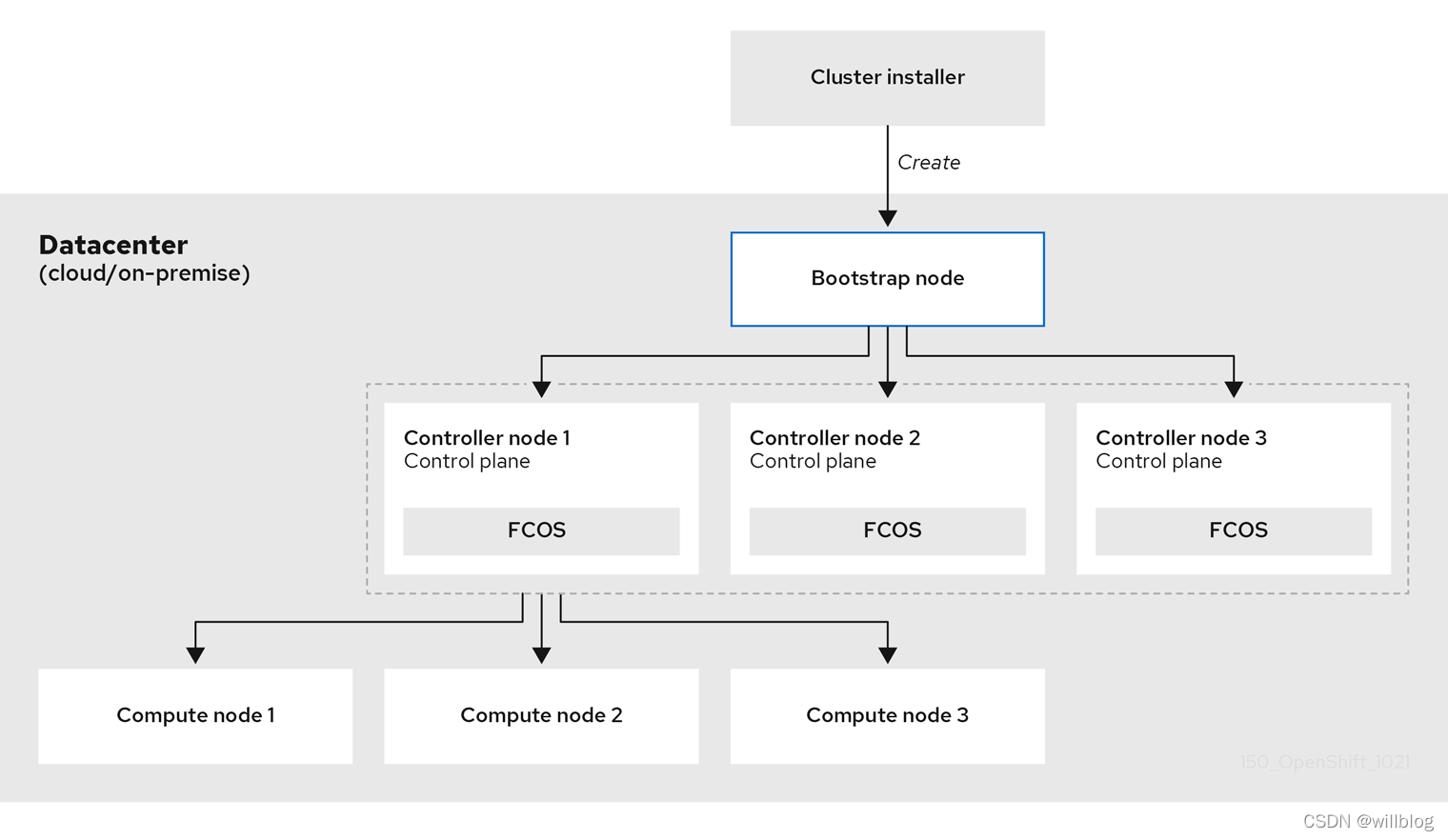

Openshift container platform community okd 4.10.0 deployment

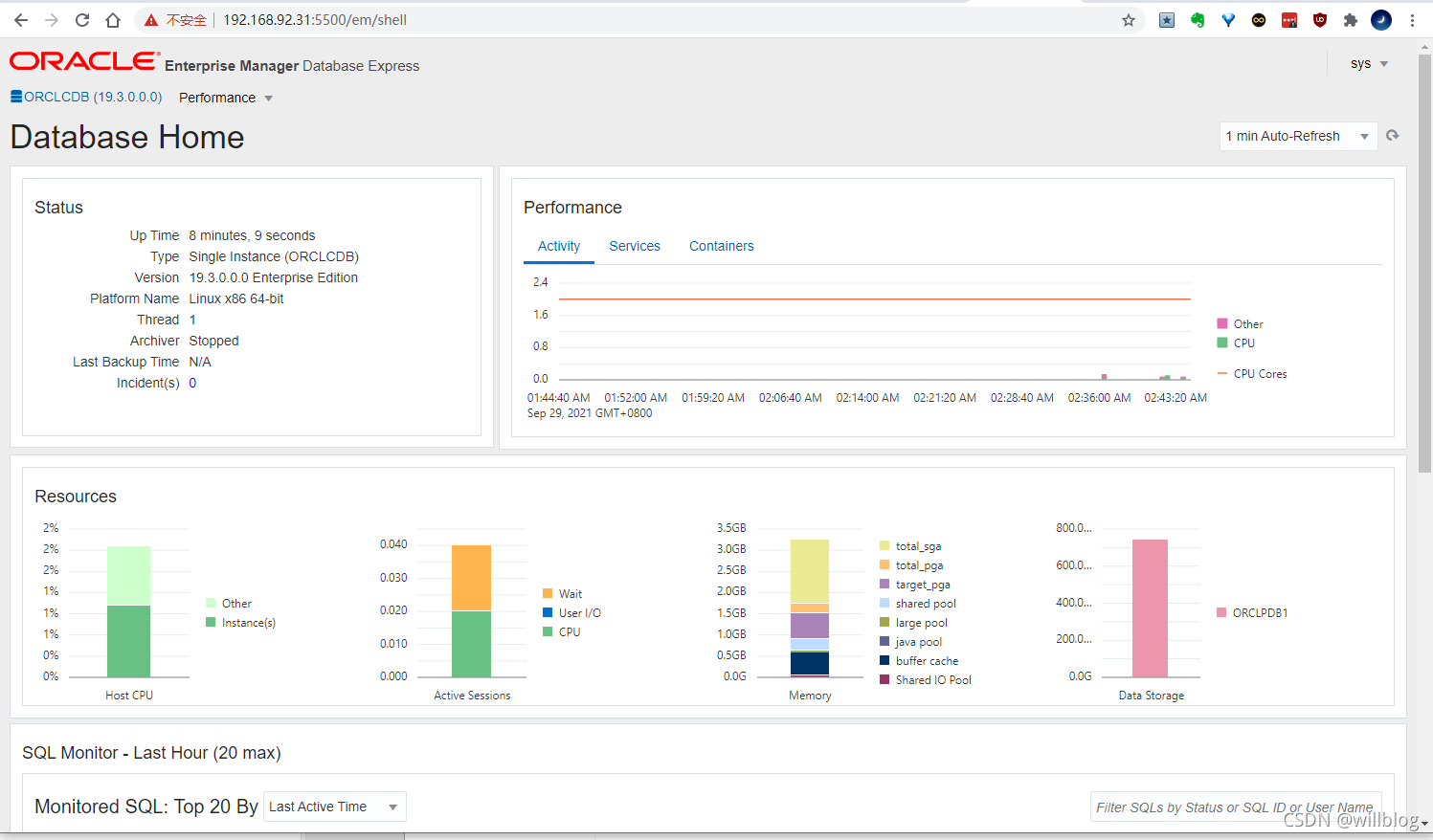

Installing Oracle database 19C for Linux

Linux二进制安装Oracle Database 19c

Minecraft install resource pack

Minecraft group service opening

十年開發經驗的程序員告訴你,你還缺少哪些核心競爭力?

随机推荐

汉诺塔问题的求解与分析

C Baidu map, Gaode map, Google map (GPS) longitude and latitude conversion

Win10 uses docker to pull the redis image and reports an error read only file system: unknown

Use of libusb

Oracle 相关统计

Redis zadd导致的一次线上问题排查和处理

Finishing the interview essentials of secsha system!!!

Qunhui NAS configuring iSCSI storage

gocv拆分颜色通道

Minecraft空岛服开服

Qt的拖动事件

Oracle修改表空间名称以及数据文件

C# 将网页保存为图片(利用WebBrowser)

Minecraft模组服开服

Linux安装Oracle Database 19c

Redis sorted set data type API and application scenario analysis

Judge whether it is Sudoku

Connect function and disconnect function of QT

以字节跳动内部 Data Catalog 架构升级为例聊业务系统的性能优化

【Go实战基础】gin 如何验证请求参数