
[CV] Andrew Ng Machine Learning Course Notes | Chapter 8

2022-07-07 07:49:00 【Fannnnf】

Unless otherwise noted, each passage in this series explains the figure directly above it.

Machine Learning | Coursera

Andrew Ng Machine Learning series | bilibili


8 Representation of neural networks

8-1 Nonlinear hypothesis

For an image, if the gray value of every pixel (or some other per-pixel representation) is used as a feature, the number of features per sample becomes very large. Fitting a nonlinear hypothesis with the earlier regression algorithms is then very expensive, because the polynomial terms built from those features multiply the count even further.
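To get a feel for the scale, the short sketch below counts the second-order terms $x_i x_j$ (with $i \le j$) for a small image. The 50×50 image size and the function name `quadratic_feature_count` are assumptions chosen for illustration, not values taken from these notes.

```python
def quadratic_feature_count(n: int) -> int:
    """Number of degree-2 terms x_i * x_j with i <= j built from n raw features."""
    return n * (n + 1) // 2


# Hypothetical 50x50 grayscale image: one raw feature per pixel.
pixels = 50 * 50
print(pixels)                           # 2500
print(quadratic_feature_count(pixels))  # 3126250
```

Even for this tiny image, adding only the quadratic terms gives roughly three million features, which is why a different kind of model is attractive here.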

8-2 Neurons and the brain

8-3 Forward propagation - Model representation I

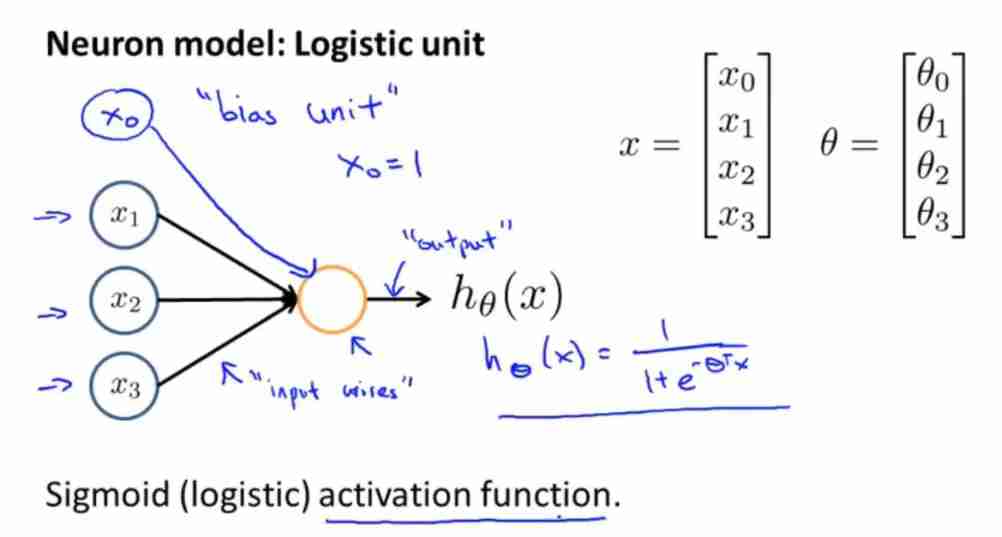

- The figure above shows an artificial neuron with a sigmoid activation function. In neural-network terminology, $g(z)=\frac{1}{1+e^{-z}}$, evaluated at $z=\theta^Tx$, is called the activation function
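A single sigmoid neuron can be sketched in a few lines of plain Python. The weights `theta` and the input `x` below are hypothetical values picked so that $z=0$; the helper names `sigmoid` and `neuron` are also just illustrative.

```python
import math


def sigmoid(z: float) -> float:
    """Sigmoid activation g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(theta, x):
    """Output of one neuron: g(theta^T x). x[0] is the bias unit and must be 1."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return sigmoid(z)


theta = [-1.0, 2.0, 0.5]  # hypothetical weights, theta[0] multiplies the bias unit
x = [1.0, 0.5, 0.0]       # x[0] = 1 is the bias unit
print(neuron(theta, x))   # z = -1 + 1 + 0 = 0, so the output is 0.5
```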

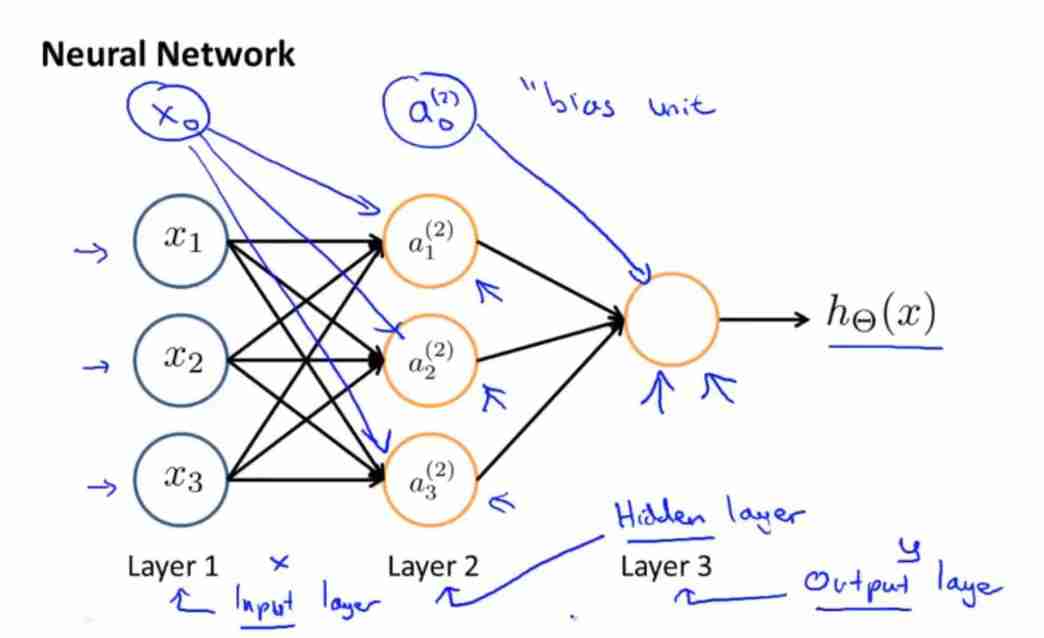

- A neural network is a group of neurons connected together. The first layer (Layer 1) is called the input layer, the second layer (Layer 2) is called the hidden layer, and the third layer (Layer 3) is called the output layer

- $a_i^{(j)}$ denotes the activation of unit $i$ in layer $j$. The activation is the value that a particular neuron computes and outputs

- $\Theta^{(j)}$ denotes the weight matrix (parameter matrix) that maps layer $j$ to layer $j+1$. It plays the role of the earlier $\theta$, which can be called either parameters or weights

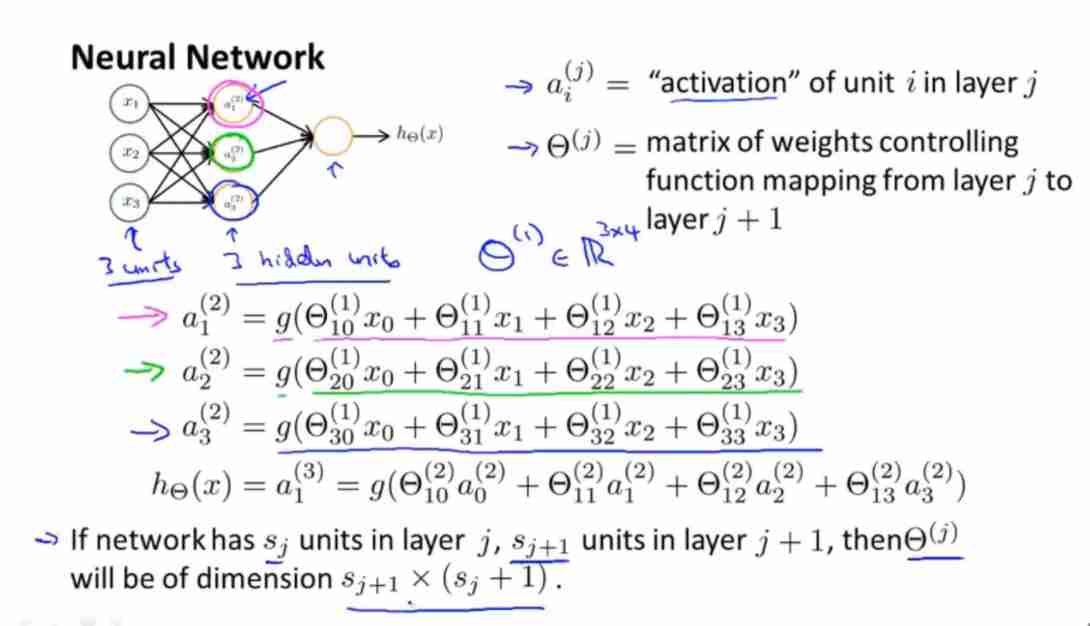

- The formulas for $a_1^{(2)}$, $a_2^{(2)}$ and $a_3^{(2)}$ are written in the figure above

- Here $\Theta^{(1)}$ is a $3\times4$ matrix

- If the network has $s_j$ units in layer $j$ and $s_{j+1}$ units in layer $j+1$, then $\Theta^{(j)}$ is an $s_{j+1}\times(s_j+1)$ matrix. The extra column accounts for the bias unit of layer $j$
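The dimension rule can be checked with a small helper. The function name `theta_shape` is hypothetical, and the layer sizes `[3, 3, 1]` assume the network in the figure has three input units, three hidden units and one output unit (bias units not counted); only the first two sizes are confirmed by the $3\times4$ shape stated above.

```python
def theta_shape(s_j: int, s_next: int) -> tuple:
    """Shape of Theta^(j): s_{j+1} rows and s_j + 1 columns (one extra for the bias unit)."""
    return (s_next, s_j + 1)


# Assumed layer sizes, bias units excluded.
layer_sizes = [3, 3, 1]
shapes = [theta_shape(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(shapes)  # [(3, 4), (1, 4)]
```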

8-4 Forward propagation - Model representation II

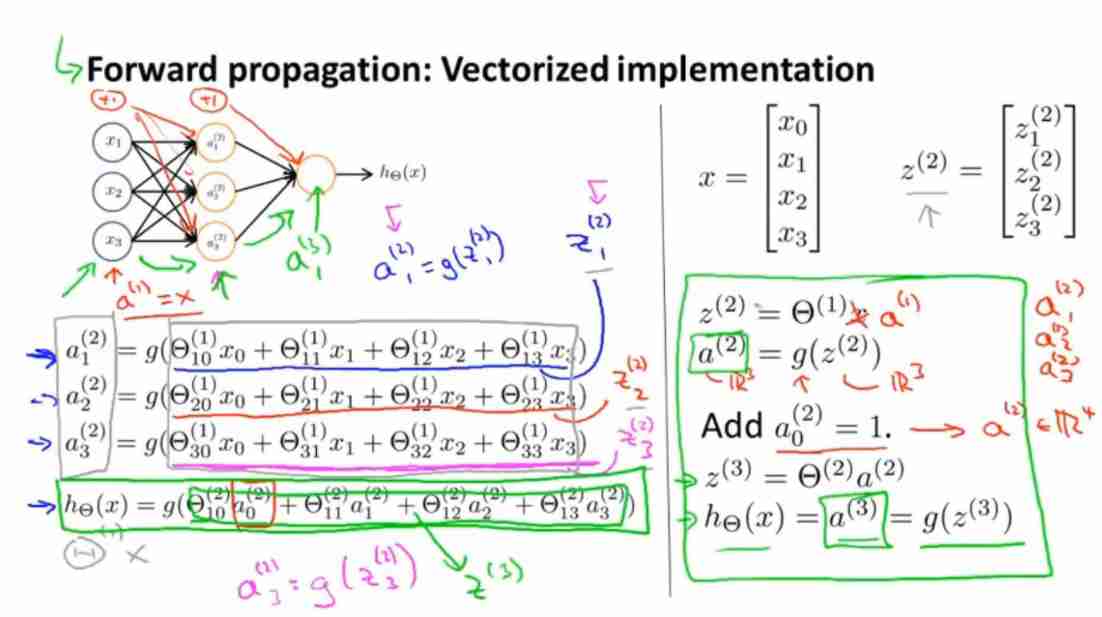

Vectorizing forward propagation:

- Let $z_1^{(2)}=\Theta^{(1)}_{10}x_0+\Theta^{(1)}_{11}x_1+\Theta^{(1)}_{12}x_2+\Theta^{(1)}_{13}x_3$

- Then $a_1^{(2)}=g(z_1^{(2)})$

- Extending this to the whole layer, the activations of the second layer are $a^{(2)}=g(z^{(2)})$, where $z^{(2)}=\Theta^{(1)}a^{(1)}$ and $a^{(1)}=x$. Before computing the next layer, a bias unit $a^{(2)}_0=1$ must also be added
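The layer-by-layer computation above can be sketched without any libraries, using nested lists as matrices. The function name `forward` and the weight values in `theta1` and `theta2` are hypothetical; they only have the shapes $3\times4$ and $1\times4$ assumed for a 3-3-1 network.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(thetas, x):
    """Forward propagation: repeat a = g(Theta * [1; a]) for every weight matrix."""
    a = list(x)                      # a^(1) = x, without the bias unit
    for theta in thetas:
        a = [1.0] + a                # prepend the bias unit a_0 = 1
        z = [sum(w * ai for w, ai in zip(row, a)) for row in theta]
        a = [sigmoid(zi) for zi in z]
    return a


# Hypothetical weights: theta1 is 3x4 (layer 1 -> 2), theta2 is 1x4 (layer 2 -> 3).
theta1 = [[0.1, 0.2, -0.3, 0.4],
          [-0.5, 0.1, 0.2, 0.0],
          [0.3, -0.2, 0.1, 0.1]]
theta2 = [[0.2, -0.4, 0.6, 0.1]]

output = forward([theta1, theta2], [1.0, 0.0, 2.0])
print(output)  # a single value between 0 and 1
```

Each row of a weight matrix produces one $z_i$, so the list comprehension over `theta` is the matrix-vector product $\Theta^{(j)}a^{(j)}$ written out by hand.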

8-5 Examples and understanding I

8-6 Examples and understanding II

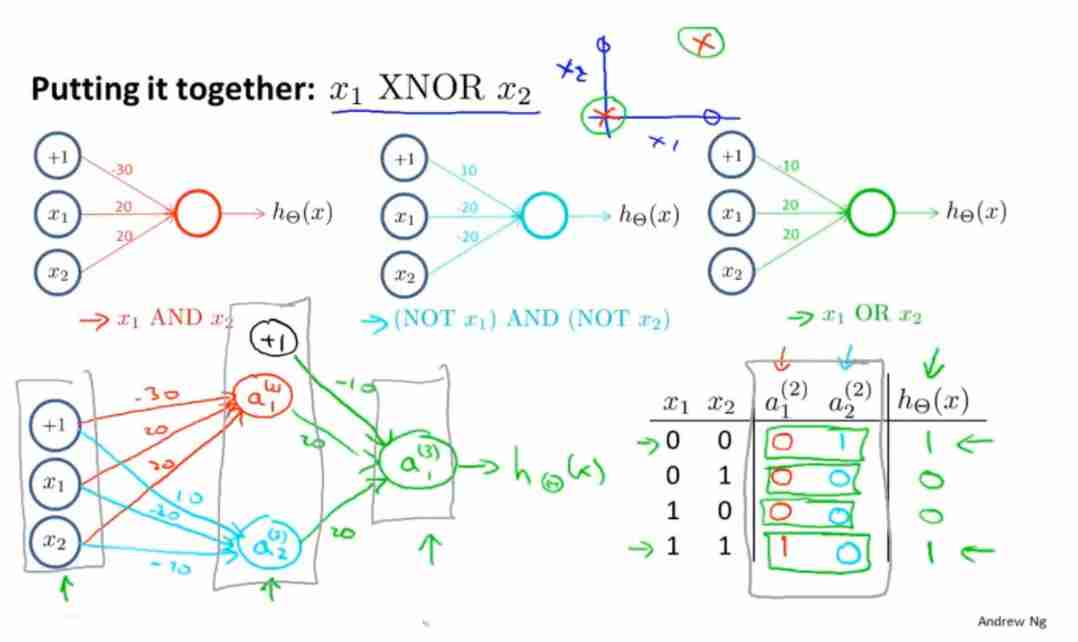

The figure above shows a neural network that computes $x_1$ XNOR $x_2$

From the first layer to the second layer, computing $x_1$ AND $x_2$ gives $a_1^{(2)}$, and computing (NOT $x_1$) AND (NOT $x_2$) gives $a_2^{(2)}$

Then $a_1^{(2)}$ and $a_2^{(2)}$ are used as the inputs to an OR unit: $a_1^{(2)}$ OR $a_2^{(2)}$ is exactly $x_1$ XNOR $x_2$

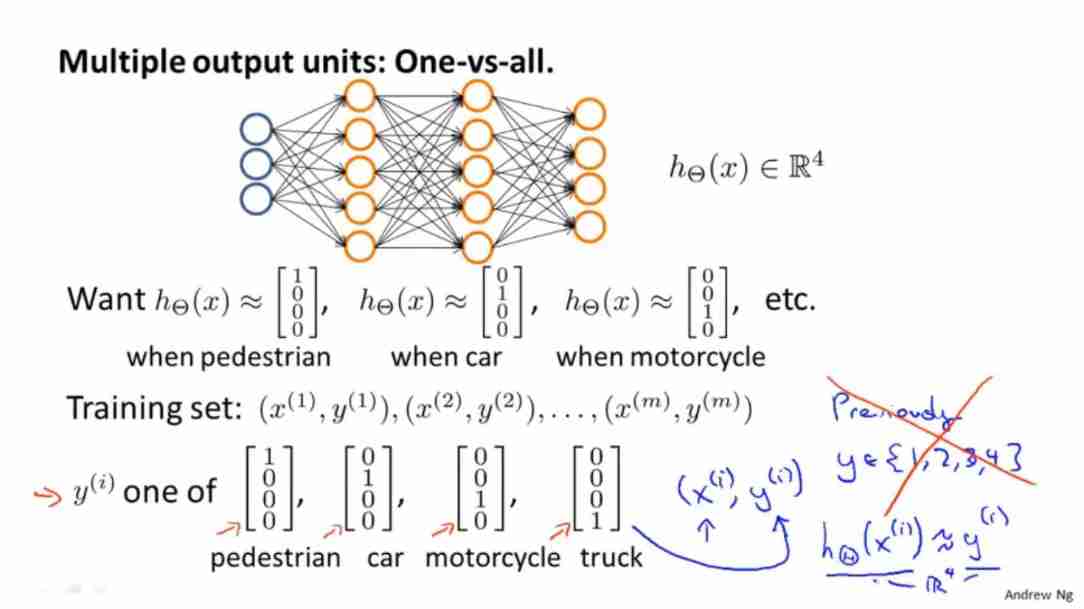
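The XNOR construction can be reproduced as a small sketch. The weights below (-30, 20, 20 for AND; 10, -20, -20 for the double-NOT unit; -10, 20, 20 for OR) are the values commonly used with this example, but they are not stated in the text of these notes, so treat them as an assumption. The names `unit` and `xnor` are illustrative.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def unit(theta, inputs):
    """One sigmoid unit; theta[0] is the bias weight, the rest multiply the inputs."""
    z = theta[0] + sum(w * v for w, v in zip(theta[1:], inputs))
    return sigmoid(z)


# Assumed weights (not given in the notes' text).
AND_W = [-30.0, 20.0, 20.0]      # x1 AND x2
NOT_BOTH_W = [10.0, -20.0, -20.0]  # (NOT x1) AND (NOT x2)
OR_W = [-10.0, 20.0, 20.0]       # a1 OR a2


def xnor(x1, x2):
    a1 = unit(AND_W, [x1, x2])       # hidden unit a_1^(2)
    a2 = unit(NOT_BOTH_W, [x1, x2])  # hidden unit a_2^(2)
    return unit(OR_W, [a1, a2])      # output layer


for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, round(xnor(x1, x2)))
# 0 0 1
# 0 1 0
# 1 0 0
# 1 1 1
```

The large weight magnitudes push the sigmoid close to 0 or 1, which is why rounding the output recovers a clean truth table.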

8-7 Multivariate classification

There are four classes: pedestrian, car, motorcycle, and truck

So the network has four output units

The label $y^{(i)}$ is a 4-dimensional vector, and is one of:

$$\begin{bmatrix} 1\\ 0\\ 0\\ 0 \end{bmatrix},\quad \begin{bmatrix} 0\\ 1\\ 0\\ 0 \end{bmatrix},\quad \begin{bmatrix} 0\\ 0\\ 1\\ 0 \end{bmatrix},\quad \begin{bmatrix} 0\\ 0\\ 0\\ 1 \end{bmatrix}$$

These represent pedestrian, car, motorcycle, and truck respectively
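A minimal sketch of this encoding, and of reading a prediction back from the four output units, might look like the following. The helper names `one_hot` and `predict` and the example output values are hypothetical; picking the unit with the largest activation is one common way to decode the output, not something stated in these notes.

```python
CLASSES = ["pedestrian", "car", "motorcycle", "truck"]


def one_hot(label: str) -> list:
    """Encode a class name as a 4-dimensional 0/1 vector."""
    return [1 if c == label else 0 for c in CLASSES]


def predict(h) -> str:
    """Pick the class whose output unit has the largest activation."""
    best = max(range(len(h)), key=lambda k: h[k])
    return CLASSES[best]


print(one_hot("motorcycle"))            # [0, 0, 1, 0]
print(predict([0.1, 0.7, 0.15, 0.05]))  # car
```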

边栏推荐

- pytest+allure+jenkins環境--填坑完畢

- PHP exports millions of data

- [2022 CISCN]初赛 web题目复现

- Button wizard script learning - about tmall grabbing red envelopes

- Iterable、Collection、List 的常见方法签名以及含义

- Regular e-commerce problems part1

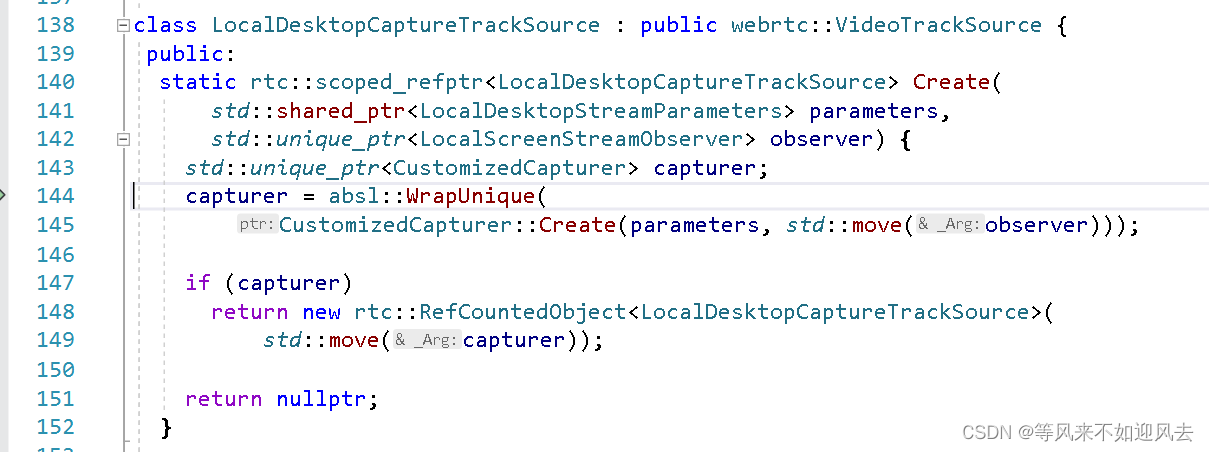

- [webrtc] M98 screen and window acquisition

- Interviewer: what development models do you know?

- IO流 file

- gslx680触摸屏驱动源码码分析(gslX680.c)

猜你喜欢

Common validation comments

![[webrtc] m98 Screen and Window Collection](/img/b1/1ca13b6d3fdbf18ff5205ed5584eef.png)

[webrtc] m98 Screen and Window Collection

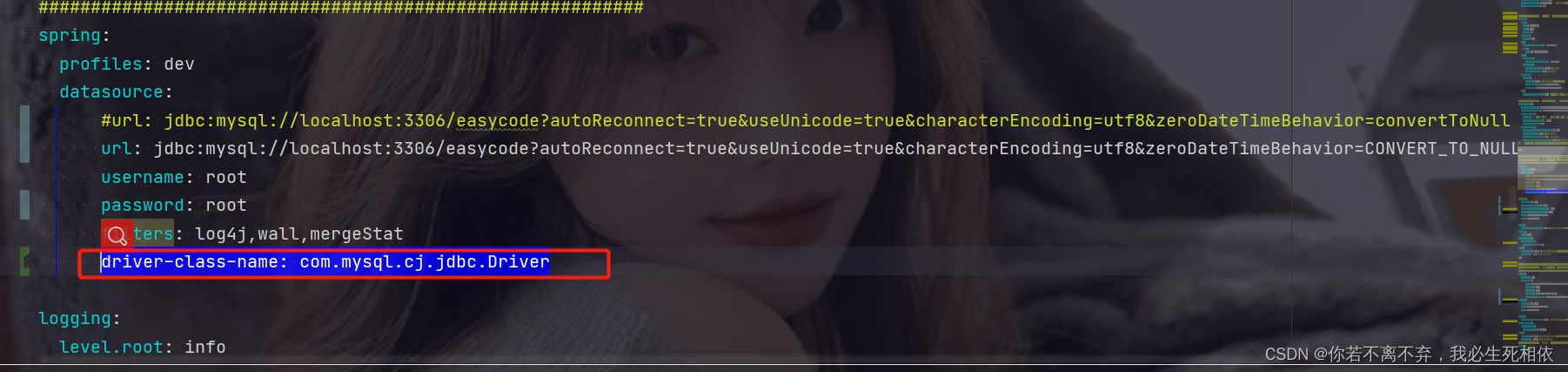

The configuration that needs to be modified when switching between high and low versions of MySQL 5-8 (take aicode as an example here)

【webrtc】m98 screen和window采集

图解GPT3的工作原理

![[P2P] local packet capturing](/img/4e/e1b60e74bc4c44e453cc832283a1f4.png)

[P2P] local packet capturing

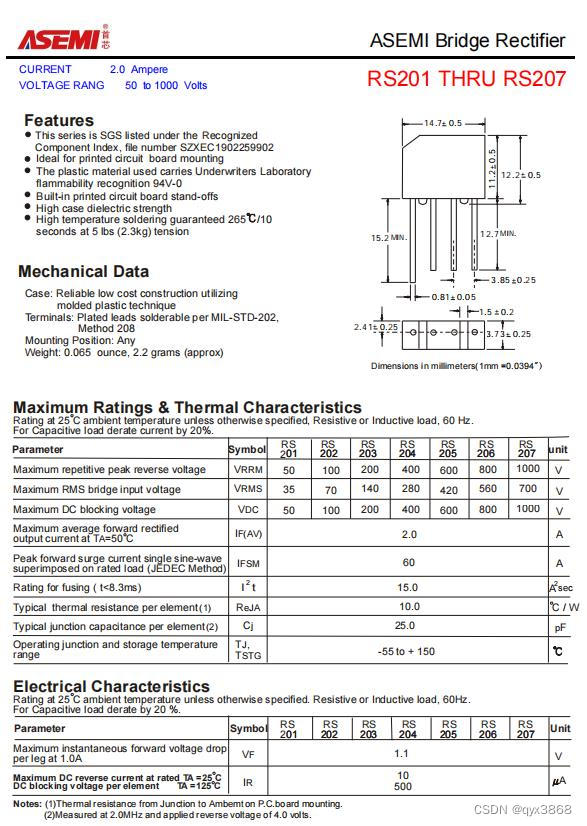

Asemi rectifier bridge rs210 parameters, rs210 specifications, rs210 package

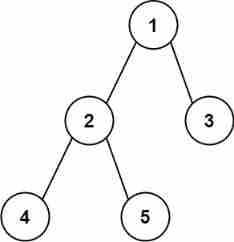

Leetcode-543. Diameter of Binary Tree

![[SUCTF 2019]Game](/img/9c/362117a4bf3a1435ececa288112dfc.png)

[SUCTF 2019]Game

How can a 35 year old programmer build a technological moat?

随机推荐

Six methods of flattening arrays with JS

面试结束后,被面试官在朋友圈吐槽了......

[guess-ctf2019] fake compressed packets

UWB learning 1

【obs】win-capture需要winrt

Tongda injection 0day

Outsourcing for four years, abandoned

ASEMI整流桥RS210参数,RS210规格,RS210封装

[Stanford Jiwang cs144 project] lab4: tcpconnection

2022-07-06: will the following go language codes be panic? A: Meeting; B: No. package main import “C“ func main() { var ch chan struct

After 95, the CV engineer posted the payroll and made up this. It's really fragrant

Live broadcast platform source code, foldable menu bar

[UTCTF2020]file header

Pytest+allure+jenkins environment -- completion of pit filling

[2022 ACTF]web题目复现

大视频文件的缓冲播放原理以及实现

../ And/

Common validation comments

nacos

vus.SSR在asynData函数中请求数据的注意事项