
[CANN Documentation Express, Issue 05] What operators are

2022-06-24 19:59:00 【Huawei Cloud】

What is an operator

A deep learning algorithm is composed of computing units, which we call operators (Operator, OP for short). In a network model, an operator corresponds to the computational logic in a layer. For example, a convolution layer (Convolution Layer) is an operator, and the weighted summation in a fully connected layer (Fully-connected Layer, FC layer) is also an operator.

Other examples are tanh and ReLU, which are operators used as activation functions in a network model.
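As a minimal sketch of the idea, the operators mentioned above can be written as plain Python functions. The function names (`relu`, `tanh_op`, `fully_connected`) and the toy data are illustrative assumptions, not CANN APIs.

```python
import math

def relu(values):
    """ReLU activation operator: replaces negative values with 0."""
    return [max(0.0, v) for v in values]

def tanh_op(values):
    """tanh activation operator, applied element by element."""
    return [math.tanh(v) for v in values]

def fully_connected(inputs, weights, bias):
    """Weighted summation of a fully connected layer.

    weights holds one row of coefficients per output neuron.
    """
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, bias)
    ]

# Hypothetical toy data: 3 inputs feeding 2 output neurons.
hidden = fully_connected([1.0, -2.0, 0.5],
                         [[0.5, 0.25, 1.0], [1.0, 1.0, 1.0]],
                         [0.0, 0.25])
activated = relu(hidden)
```

Each function is one computational unit: the network chains them together, and here the output of the weighted summation feeds the activation operator.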

Operator name (Name) and type (Type)

- Operator name: identifies an operator within a network. Operator names must be unique within the same network.

- Operator type: each operator in the network is matched to its implementation according to its type. A network may contain more than one operator of the same type.

As shown in the figure below, Conv1, Pool1, and Conv2 are the names of operators in this network. Conv1 and Conv2 both have the type Convolution, which means each of them performs one convolution computation.
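The distinction between name and type can be sketched with a small data structure. This is only an illustration: the operator names and the Convolution type come from the text, while the type name "Pooling" for Pool1 and both helper functions are assumptions.

```python
from collections import Counter

# Hypothetical network description: (operator name, operator type) pairs.
# The type name "Pooling" for Pool1 is an assumption.
network = [
    ("Conv1", "Convolution"),
    ("Pool1", "Pooling"),
    ("Conv2", "Convolution"),
]

def check_unique_names(ops):
    """Raise ValueError if two operators in the network share a name."""
    counts = Counter(name for name, _ in ops)
    duplicates = sorted(name for name, n in counts.items() if n > 1)
    if duplicates:
        raise ValueError(f"duplicate operator names: {duplicates}")

def group_by_type(ops):
    """Map each operator type to the names of the operators that use it."""
    groups = {}
    for name, op_type in ops:
        groups.setdefault(op_type, []).append(name)
    return groups

check_unique_names(network)
by_type = group_by_type(network)
```

Here `by_type["Convolution"]` lists both Conv1 and Conv2: two distinct names sharing one type.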

Tensor

- A tensor is the container for the data an operator computes on, including both input data and output data.

- A tensor descriptor (TensorDesc) describes the input data and output data. It mainly contains the following attributes:

The following sections describe two attributes of the tensor descriptor in detail: shape and data layout format.

Shape

The shape of a tensor describes its dimensions. For example, shape (3, 4) means that the first dimension has 3 elements and the second dimension has 4 elements, that is, a matrix with 3 rows and 4 columns. The number of entries in the shape equals the number of dimensions of the tensor. The first entry of the shape is the number of elements inside the outermost bracket of the tensor, the second entry is the number of elements inside the second bracket from the left, and so on. For example, the tensor [[1, 2, 3], [4, 5, 6]] has shape (2, 3).
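The bracket-counting rule can be sketched in a few lines of Python. The helper `infer_shape` is a hypothetical name, and it assumes a regular nested list in which every sub-list at the same level has the same length.

```python
def infer_shape(tensor):
    """Return the shape of a regular nested list as a tuple.

    Walks from the outermost bracket inward, recording how many
    elements each nesting level contains.
    """
    shape = []
    current = tensor
    while isinstance(current, list):
        shape.append(len(current))
        if not current:
            break
        current = current[0]
    return tuple(shape)

matrix = [[1, 2, 3, 4],
          [5, 6, 7, 8],
          [9, 10, 11, 12]]
shape = infer_shape(matrix)   # one entry per nesting level
rank = len(shape)             # number of dimensions
```

For the 3-row, 4-column matrix above, the outermost bracket holds 3 elements and each inner bracket holds 4, so the shape has two entries.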

Now consider the physical meaning of a shape, taking shape=(4, 20, 20, 3) as an example.

Suppose there are 4 pictures, which is the meaning of the 4 in the shape. Each picture is 20 pixels wide and 20 pixels high, that is, 20*20=400 pixels. Each pixel is made up of 3 color values (red, green, and blue), which is the meaning of the 3 in the shape. That is the physical meaning of shape=(4, 20, 20, 3).
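The arithmetic behind this example can be checked directly. The variable names below are illustrative only.

```python
from math import prod

# Shape from the text: 4 images, 20 x 20 pixels each, 3 color values per pixel.
shape = (4, 20, 20, 3)
num_images, height, width, channels = shape

pixels_per_image = height * width               # 20 * 20 = 400
values_per_image = pixels_per_image * channels  # 400 * 3 = 1200
total_values = prod(shape)                      # 4 * 1200 = 4800
```

The product of all shape entries gives the total number of values the tensor holds.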

Data layout format

In deep learning, multidimensional data is stored in multidimensional arrays. For example, the feature map (Feature Map) of a convolutional neural network is usually stored in a four-dimensional array, that is, the 4D format:

- N: Batch size, for example the number of images.

- H: Height, the height of the feature map, that is, the number of pixels in the vertical direction.

- W: Width, the width of the feature map, that is, the number of pixels in the horizontal direction.

- C: Channels, the number of feature map channels. For example, a color RGB image has 3 channels.

Because data can only be stored linearly, these four dimensions must be stored in a defined order. Different deep learning frameworks store feature map data in different orders. In Caffe, the order is [Batch, Channels, Height, Width], that is, NCHW. In TensorFlow, the order is [Batch, Height, Width, Channels], that is, NHWC.

Take an RGB image as an example. In NCHW, C is on the outer layer, so what is actually stored is "RRRRRRGGGGGGBBBBBB": all pixel values of the same channel are stored together in sequence. In NHWC, C is on the innermost layer, so what is actually stored is "RGBRGBRGBRGBRGBRGB": the pixel values of the different channels at the same position are stored together in sequence.
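The two storage orders can be reproduced with a short sketch. The function names are hypothetical, and the 2 x 3 image size is an assumption chosen to match the 6-pixel strings in the text.

```python
def offset_nchw(n, c, h, w, C, H, W):
    """Linear offset of element (n, c, h, w) in NCHW order."""
    return ((n * C + c) * H + h) * W + w

def offset_nhwc(n, c, h, w, C, H, W):
    """Linear offset of element (n, c, h, w) in NHWC order."""
    return ((n * H + h) * W + w) * C + c

def flatten(N, C, H, W, offset_fn):
    """Lay out channel labels in memory order using offset_fn."""
    labels = "RGB"  # channel 0 = R, 1 = G, 2 = B
    memory = [None] * (N * C * H * W)
    for n in range(N):
        for c in range(C):
            for h in range(H):
                for w in range(W):
                    memory[offset_fn(n, c, h, w, C, H, W)] = labels[c]
    return "".join(memory)

# One RGB image with 2 x 3 = 6 pixels.
nchw = flatten(1, 3, 2, 3, offset_nchw)
nhwc = flatten(1, 3, 2, 3, offset_nhwc)
```

The only difference between the two offset functions is which index varies fastest: W in NCHW, C in NHWC.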

Although the stored data is the same, a different storage order leads to different data access patterns, so even the same operation can have different computing performance.

In the Ascend AI processor, tensor data uses the five-dimensional NC1HWC0 format to improve data access efficiency. C0 is closely tied to the microarchitecture and equals the size of the matrix computation unit in the AI Core. This part of the data must be stored contiguously. C1 is the number of blocks obtained by splitting the C dimension into pieces of size C0, that is, C1=C/C0. If C is not evenly divisible by C0, the last block must be padded to align with C0.
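A minimal sketch of the split, assuming an NCHW input stored as nested lists. The function name, the use of zero as the padding value, and the small c0 used here are all assumptions for illustration. The real C0 depends on the hardware, and this is not the CANN conversion routine.

```python
def nchw_to_nc1hwc0(data, c0):
    """Convert a nested-list NCHW tensor to NC1HWC0, zero-padding C.

    c0 is taken as a parameter because its real value depends on the
    hardware; the value used below is only for illustration.
    """
    N, C = len(data), len(data[0])
    H, W = len(data[0][0]), len(data[0][0][0])
    c1 = (C + c0 - 1) // c0  # ceil(C / c0): number of channel blocks
    out = [[[[[0] * c0 for _ in range(W)] for _ in range(H)]
            for _ in range(c1)] for _ in range(N)]
    for n in range(N):
        for c in range(C):
            for h in range(H):
                for w in range(W):
                    # Channel c lands in block c // c0 at position c % c0.
                    out[n][c // c0][h][w][c % c0] = data[n][c][h][w]
    return out

# Hypothetical tiny tensor: N=1, C=3, H=1, W=2, with c0=2 for readability.
nchw = [[[[1, 2]], [[3, 4]], [[5, 6]]]]
nc1hwc0 = nchw_to_nc1hwc0(nchw, c0=2)
```

With C=3 and c0=2, C is not divisible by C0, so C1 rounds up to 2 and the second block carries one padded slot per pixel.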

Learn more

To learn more, visit the Ascend community at https://www.hiascend.com/ and read the relevant documentation.

The Ascend CANN documentation center is dedicated to providing developers with better content and a more convenient development experience, and to helping CANN developers build the AI ecosystem together. Comments and suggestions are welcome in the Ascend community. Your attention is what drives us.

边栏推荐

- Server lease error in Hong Kong may lead to serious consequences

- 科技抗疫: 运营商网络洞察和实践白皮书 | 云享书库NO.20推荐

- Geoscience remote sensing data collection online

- Using dynamic time warping (DTW) to solve the similarity measurement of time series and the similarity identification analysis of pollution concentration in upstream and downstream rivers

- Php OSS file read and write file, workerman Generate Temporary file and Output Browser Download

- Unit actual combat lol skill release range

- 【Go語言刷題篇】Go從0到入門4:切片的高級用法、初級複習與Map入門學習

- Vs2017 setting function Chinese Notes

- Landcover100, planned land cover website

- Apache+php+mysql environment construction is super detailed!!!

猜你喜欢

What about the Golden Angel of thunder one? Golden Angel mission details

To open the registry

Two solutions to the problem of 0xv0000225 unable to start the computer

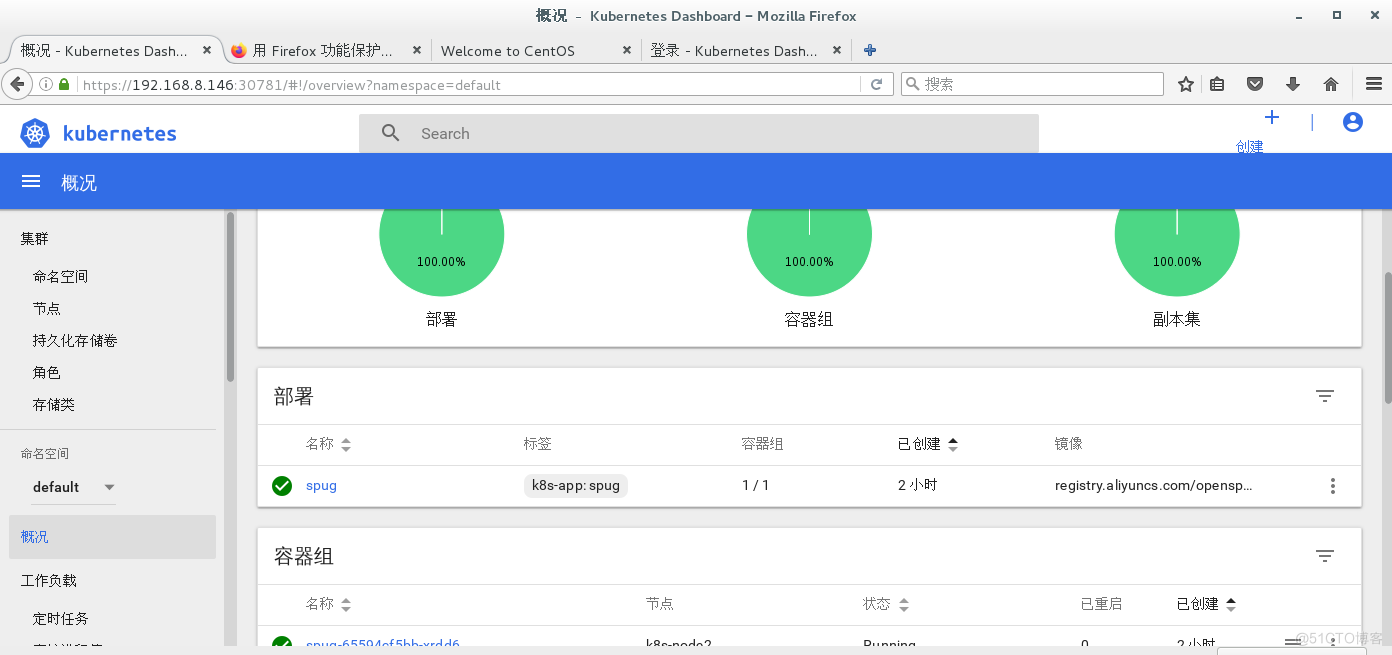

Kubernetes cluster deployment

How to use R package ggtreeextra to draw evolution tree

Digital twin industry case: Digital Smart port

UART communication (STM32F103 library function)

Confirm whether the host is a large terminal or a small terminal

Vs2017 setting function Chinese Notes

What is CNN (convolutional neural network)

随机推荐

The group offsets of the Kafka of the Flink SQL. If the specified groupid is not mentioned

At present, only CDC monitors Mysql to get the data of new columns. Sqlserver can't, can it

思源笔记工具栏中的按钮名称变成了 undefined,有人遇到过吗?

Teach you how to cancel computer hibernation

PingCAP 入选 2022 Gartner 云数据库“客户之声”,获评“卓越表现者”最高分

Download steps of STM32 firmware library

LCD12864 (ST7565P) Chinese character display (STM32F103)

The cdc+mysql connector joins the date and time field from the dimension table by +8:00. Could you tell me which one is hosted by Alibaba cloud

Todesk remote control, detailed introduction and tutorial

SQL export CSV data, unlimited number of entries

Pingcap was selected as the "voice of customers" of Gartner cloud database in 2022, and won the highest score of "outstanding performer"

Unityshader world coordinates do not change with the model

工作6年,月薪3W,1名PM的奋斗史

Showcase是什么?Showcase需要注意什么?

What type of datetime in the CDC SQL table should be replaced

应用实践 | 海量数据,秒级分析!Flink+Doris 构建实时数仓方案

网络安全审查办公室对知网启动网络安全审查,称其“掌握大量重要数据及敏感信息”

Does finkcdc support sqlserver2008?

LCD1602 string display (STM32F103)

JVM tuning