Transformer structure analysis and the principles of its blocks

2022-07-03 20:41:00 【SaltyFish_ Go】

The Transformer uses an encoder-decoder architecture built purely on the attention mechanism. The encoder and the decoder each contain multiple Transformer blocks. Every block uses multi-head attention, a position-wise feed-forward network, and layer normalization (layer norm). Batch normalization (batch norm) is not well suited to NLP, because sentences differ in length, so their dimensions and features differ from sample to sample.

Multi-head attention

Multi-head attention concatenates (concat) the outputs of several attention heads. The same key, value, and query are used to extract different kinds of information, and the concatenated matrix is then passed through a fully connected layer. The output dimension is determined by that final fully connected layer.
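The idea above can be sketched in a few lines of plain Python. This is a minimal illustration, not the article's own implementation: it assumes scaled dot-product attention inside each head (the text does not name the attention function), and the helper names (`matmul`, `attention`, `multi_head_attention`), the random weights, and the toy sizes are all made up for the example.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention for a single head (an assumed choice)."""
    d = len(q[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(q, k, v, heads, w_o):
    """heads: list of (w_q, w_k, w_v) projection triples, one per head."""
    head_outputs = [
        attention(matmul(q, w_q), matmul(k, w_k), matmul(v, w_v))
        for w_q, w_k, w_v in heads
    ]
    # Concatenate the head outputs along the feature axis, position by position.
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(q))]
    # The final fully connected layer sets the output dimension.
    return matmul(concat, w_o)

rng = random.Random(0)
seq_len, d_model, num_heads, d_head, d_out = 4, 8, 2, 4, 6
x = random_matrix(seq_len, d_model, rng)
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3))
         for _ in range(num_heads)]
w_o = random_matrix(num_heads * d_head, d_out, rng)

out = multi_head_attention(x, x, x, heads, w_o)  # self-attention: q = k = v
print(len(out), len(out[0]))  # 4 6
```

Each head projects the same query, key, and value with its own weights, so the heads can pick up different information; the output width (6 here) comes only from `w_o`.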

Transformer architecture

In the decoder, the first attention layer (masked multi-head attention) is a self-attention structure. The second multi-head attention layer is not self-attention: its key and value inputs are the output of the encoder, while its query comes from the target sequence.
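A small single-head sketch may make the two decoder attention layers more concrete. It is only an illustration under stated assumptions: scaled dot-product attention, a causal mask for the masked layer, and no learned projections, residual connections, or normalization. The function name `attention`, the `causal` flag, and the toy `target` and `memory` values are hypothetical.

```python
import math

def softmax(row):
    """Numerically stable softmax; masked scores (-inf) become weight 0."""
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v, causal=False):
    """Single-head scaled dot-product attention; returns (output, weights)."""
    d = len(q[0])
    weights = []
    for i, q_row in enumerate(q):
        scores = []
        for j, k_row in enumerate(k):
            if causal and j > i:
                # Masked: position i must not look at later positions.
                scores.append(float("-inf"))
            else:
                dot = sum(a * b for a, b in zip(q_row, k_row))
                scores.append(dot / math.sqrt(d))
        weights.append(softmax(scores))
    output = [
        [sum(w * v_row[c] for w, v_row in zip(w_row, v)) for c in range(len(v[0]))]
        for w_row in weights
    ]
    return output, weights

# Toy data: 3 target positions on the decoder side, 4 encoder output positions.
target = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
memory = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0], [2.0, 0.0]]

# First decoder attention: masked self-attention, q = k = v = target.
self_out, self_w = attention(target, target, target, causal=True)

# Second decoder attention: query from the decoder side,
# key and value from the encoder output.
cross_out, cross_w = attention(self_out, memory, memory)

print(self_w[0])                      # [1.0, 0.0, 0.0]
print(len(cross_w), len(cross_w[0]))  # 3 4
```

The first target position can only attend to itself, while every target position in the second layer attends over all four encoder positions.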

Position-wise feed-forward network

The position-wise feed-forward network in the architecture can be viewed as a fully connected layer used to change dimensions.
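As a rough sketch, the network can be written as two fully connected layers with a ReLU in between, applied to each position separately with shared weights. The two-layer ReLU form is the usual Transformer choice and is assumed here, since the text only describes it as a fully connected layer; the function names and the toy weights are hypothetical.

```python
def linear(x, w, b):
    """Apply y = x @ w + b to a single position vector x."""
    return [
        sum(xi * wi for xi, wi in zip(x, col)) + bj
        for col, bj in zip(zip(*w), b)
    ]

def position_wise_ffn(seq, w1, b1, w2, b2):
    """Run the same two-layer network on every position of the sequence."""
    out = []
    for x in seq:  # each position is processed on its own
        hidden = [max(0.0, h) for h in linear(x, w1, b1)]  # ReLU
        out.append(linear(hidden, w2, b2))
    return out

# Toy weights: 2 features -> 3 hidden units -> 2 features.
w1 = [[1.0, 0.0, -1.0],
      [0.0, 1.0, 1.0]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0]]
b2 = [0.0, 0.0]

seq = [[1.0, 2.0], [3.0, 1.0]]  # two positions, two features each
result = position_wise_ffn(seq, w1, b1, w2, b2)
print(result)  # [[2.0, 3.0], [3.0, 1.0]]
```

Because positions never interact inside this layer, feeding a single position alone gives the same vector as feeding it as part of a longer sequence.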

Layer normalization

Layer norm normalizes each sentence (each sample) on its own. This differs from images, where batch norm normalizes each channel or feature across the batch (after a fully connected or convolutional layer).
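The difference comes down to which axis the statistics are computed over, which the following sketch tries to show. It assumes a toy batch where each row is one sample's feature vector, and it leaves out the learnable scale and shift that real layers include; the names `normalize`, `layer_norm`, and `batch_norm` are illustrative only.

```python
import math

def normalize(values, eps=1e-5):
    """Shift to zero mean and scale to (roughly) unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]

def layer_norm(batch):
    """Normalize each sample (row) over its own features."""
    return [normalize(row) for row in batch]

def batch_norm(batch):
    """Normalize each feature (column) over all samples in the batch."""
    cols = [normalize(list(col)) for col in zip(*batch)]
    return [list(row) for row in zip(*cols)]

# Toy batch: 3 samples, 4 features each.
batch = [
    [1.0, 2.0, 3.0, 4.0],
    [10.0, 20.0, 30.0, 40.0],
    [0.0, 0.0, 5.0, 5.0],
]

ln = layer_norm(batch)
bn = batch_norm(batch)

# After layer norm every row has mean ~0; after batch norm every column does.
print([round(sum(row) / len(row), 6) for row in ln])
print([round(sum(col) / len(col), 6) for col in zip(*bn)])
```

Layer norm needs only the sample itself, so it does not depend on the other sentences in the batch or on their lengths, whereas batch norm statistics change with the batch contents.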

边栏推荐

- JVM JNI and PVM pybind11 mass data transmission and optimization

- 【c】 Digital bomb

- 2.3 other data types

- 4. Data splitting of Flink real-time project

- jvm jni 及 pvm pybind11 大批量数据传输及优化

- Global and Chinese market of cyanuric acid 2022-2028: Research Report on technology, participants, trends, market size and share

- QT tutorial: signal and slot mechanism

- 2022 melting welding and thermal cutting examination materials and free melting welding and thermal cutting examination questions

- How to read the source code [debug and observe the source code]

- Sparse matrix (triple) creation, transpose, traversal, addition, subtraction, multiplication. C implementation

猜你喜欢

全网都在疯传的《老板管理手册》(转)

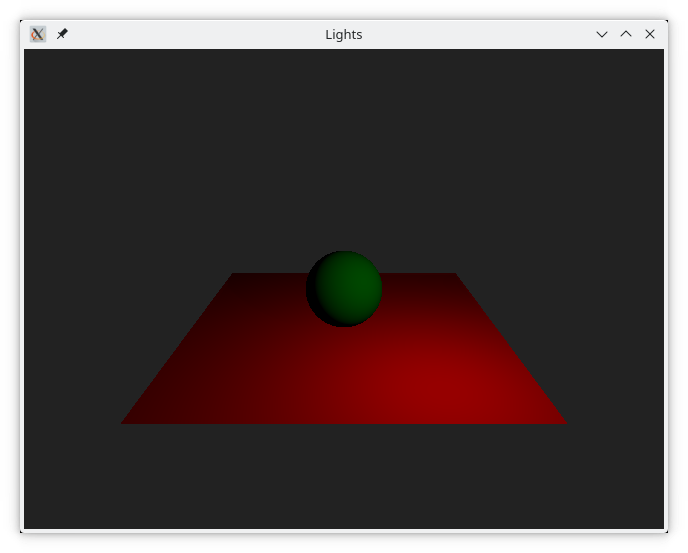

Qt6 QML Book/Qt Quick 3D/基础知识

Etcd raft Based Consistency assurance

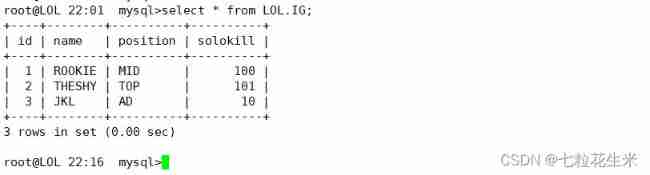

19、 MySQL -- SQL statements and queries

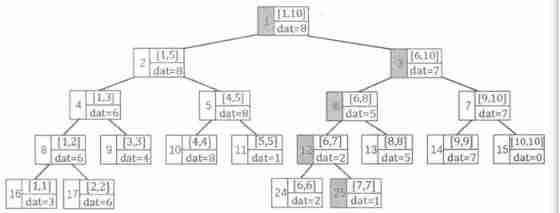

Line segment tree blue book explanation + classic example acwing 1275 Maximum number

如临现场的视觉感染力,NBA决赛直播还能这样看?

Operate BOM objects (key)

你真的知道自己多大了吗?

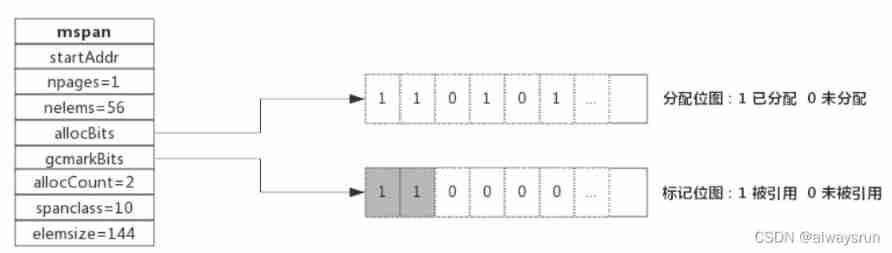

Introduction to golang garbage collection

2.7 format output of values

随机推荐

[effective Objective-C] - block and grand central distribution

Use nodejs+express+mongodb to complete the data persistence project (with modified source code)

In 2021, the global revenue of thick film resistors was about $1537.3 million, and it is expected to reach $2118.7 million in 2028

LabVIEW training

AI enhanced safety monitoring project [with detailed code]

47. Process lock & process pool & Collaboration

The global industrial design revenue in 2021 was about $44360 million, and it is expected to reach $62720 million in 2028. From 2022 to 2028, the CAGR was 5.5%

Haven't expressed the artifact yet? Valentine's Day is coming. Please send her a special gift~

Implementation of stack

2022 high voltage electrician examination and high voltage electrician reexamination examination

Basic knowledge of dictionaries and collections

Day6 merge two ordered arrays

CesiumJS 2022^ 源码解读[7] - 3DTiles 的请求、加载处理流程解析

Refer to some books for the distinction between blocking, non blocking and synchronous asynchronous

Global and Chinese market of liquid antifreeze 2022-2028: Research Report on technology, participants, trends, market size and share

Camera calibration (I): robot hand eye calibration

Cesiumjs 2022 ^ source code interpretation [7] - Analysis of the request and loading process of 3dfiles

Task of gradle learning

18、 MySQL -- index

Interval product of zhinai sauce (prefix product + inverse element)