A Node.js crawler for ancient Chinese books: 16,000 pages crawled, lessons learned, and the project shared

2020-11-06 01:17:00

Preface

While collecting data for earlier research I wrote a few small crawlers, but I wrote them rather casually, and a lot of that code no longer looks reasonable to me. I had some free time recently and originally meant to refactor the old project. In the end I used a weekend to write a new one instead: guwen-spider. It is still a fairly simple crawler: it fetches a page, extracts the data from it, and saves the data to a database. Compared with what I wrote before, I think the difficulty lies in the robustness of the whole program and in its fault-tolerance mechanisms. That showed while I was writing the code yesterday: the main logic was written quickly, and most of my time went into debugging for stability and finding a more sensible way to handle the relationship between the data and the flow control.

Background

The project starts from a first-level page that lists the books. Clicking a book opens a list of its chapters and sections, and clicking a chapter or section opens the page with the actual content.

Overview

GitHub address of this project: guwen-spider (PS: there is an Easter egg at the end ~~ runs away)

Technical details of the project

The project makes heavy use of async functions (standardized in ES2017, often called ES7 at the time), which make the flow of the program easier to follow. For convenience, the well-known async library is used to iterate over the data, so callbacks and hand-written promises are unavoidable. Because the data is processed inside callback functions, passing data around becomes somewhat awkward. The same behavior could also be implemented directly with async/await. One thing I like about the project is that the database operations are wrapped in static methods of a class. As the name suggests, a static method lives on the class itself rather than on each instance, much as prototype methods are shared, so it takes up no extra space per object. The project mainly uses:

- 1 ES2017 async/await for the asynchronous logic.

- 2 The async library from npm for loop traversal and concurrent requests.

- 3 log4js for logging.

- 4 cheerio for DOM operations.

- 5 mongoose to connect to MongoDB for data storage and operations.
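To illustrate the idea of wrapping database operations in static methods, here is a minimal sketch. It is not the project's actual helper: `FakeModel` is a hypothetical in-memory stand-in for a mongoose model, and `queryList` is a name invented for this example. Only `getBookList` and `getCollectionLength` appear as method names in the code shown later.

```javascript
// Hypothetical stand-in for a mongoose model: it only mimics an async find().
class FakeModel {
  constructor(docs) {
    this.docs = docs;
  }

  async find(query = {}) {
    // Return every document whose fields match all key/value pairs in query.
    return this.docs.filter((doc) =>
      Object.keys(query).every((k) => doc[k] === query[k])
    );
  }
}

// Database helper exposed purely through static methods, so callers never
// create an instance: BookHelper.getBookList(model).
class BookHelper {
  // Resolve to the matching documents, or null if the query throws.
  static async queryList(model, query = {}) {
    try {
      return await model.find(query);
    } catch (err) {
      console.error('query failed:', err.message);
      return null;
    }
  }

  static async getBookList(model) {
    return BookHelper.queryList(model, {});
  }

  static async getCollectionLength(model, query = {}) {
    const docs = await BookHelper.queryList(model, query);
    return docs ? docs.length : 0;
  }
}

const books = new FakeModel([
  { key: 'book_1', bookName: 'Book One' },
  { key: 'book_2', bookName: 'Book Two' },
]);

BookHelper.getCollectionLength(books).then((n) => console.log('books:', n));
```

Returning `null` on failure instead of throwing matches the `if (!list)` style of checks used in the crawler code, at the cost of hiding the error from the caller.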

Directory structure

<pre>
├── bin                  // entry points
│   ├── booklist.js      // book list crawling logic
│   ├── chapterlist.js   // chapter list crawling logic
│   ├── content.js       // content crawling logic
│   └── index.js         // program entry point
├── config               // configuration files
├── dbhelper             // database operation helpers
├── logs                 // project logs
├── model                // MongoDB collection models
├── node_modules
├── utils                // utility functions
├── package.json
</pre>

Analysis of the implementation approach

The project is a typical case of multi-level crawling. There are currently three levels: the book list, the chapter list for each book, and the content behind each chapter link. There are two ways to crawl such a structure. One is to go directly from the outer level to the inner level, finishing the inner pages before moving on to the next outer item. The other is to crawl the outer level first and save it to the database, then crawl the links to all the inner chapters based on it and save them as well, and finally query the chapter links from the database and crawl their content. Each approach has advantages and disadvantages, and I tried both. The latter has one advantage: because the three levels are crawled separately, it is easier to save as much related data as possible with each chapter. With the former approach, following the normal logic, you traverse the first-level list and crawl each book's chapter list, then traverse the chapter list and crawl the content. By the time a third-level content unit is ready to be saved, any first-level information it needs has to be passed down through the levels, which gets complicated. Keeping the data separate avoids, to some extent, this unnecessary and complicated data passing.

In practice, the number of ancient books to crawl is not large: only about 180 books, covering the various classics and histories. The book list itself is a small amount of data, about 180 documents. Those 180 books contain about 16,000 chapters in total, so about 16,000 pages have to be visited to crawl the content. Choosing the second approach is therefore reasonable.
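The second approach can be sketched as three stages that each read back what the previous stage saved. This is only an illustration under stated assumptions: `store` is an in-memory stand-in for the MongoDB collections, `fakeSite` replaces real HTTP requests, and all function names here are invented for the example.

```javascript
// In-memory stand-ins for the three MongoDB collections (assumption).
const store = { books: [], chapters: [], contents: [] };

// Hypothetical site data: book key -> chapter URLs, chapter URL -> text.
const fakeSite = {
  books: [
    { key: 'b1', bookName: 'Book One' },
    { key: 'b2', bookName: 'Book Two' },
  ],
  chapters: { b1: ['/b1/1', '/b1/2'], b2: ['/b2/1'] },
  pages: { '/b1/1': 'text 1', '/b1/2': 'text 2', '/b2/1': 'text 3' },
};

// Stage 1: save the book list.
async function crawlBooks() {
  store.books.push(...fakeSite.books);
  return true;
}

// Stage 2: read the books back from the store and save one record per
// chapter link, copying the book fields onto each record.
async function crawlChapters() {
  for (const book of store.books) {
    for (const url of fakeSite.chapters[book.key]) {
      store.chapters.push({ key: book.key, bookName: book.bookName, url });
    }
  }
  return true;
}

// Stage 3: read the chapter records back. Each one already carries the book
// fields, so nothing has to be passed down from stage 1.
async function crawlContents() {
  for (const chapter of store.chapters) {
    store.contents.push({ ...chapter, content: fakeSite.pages[chapter.url] });
  }
  return true;
}

// Run the stages strictly in order and report how many pages were saved.
async function run() {
  await crawlBooks();
  await crawlChapters();
  await crawlContents();
  return store.contents.length;
}
```

Because every stage starts from persisted data, a failed stage can be rerun on its own without repeating the earlier ones.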

Project implementation

The main flow has three methods, bookListInit, chapterListInit and contentListInit, which are the publicly exposed initialization methods for crawling the book list, the chapter lists and the book content respectively. With async functions we can control the order in which these three methods run: once the book list has been crawled and saved to the database, the result is returned to the main program. If that step succeeded, the main program goes on to crawl the chapter lists based on the book list, and the book content is crawled in the same way.

Main entry point

    /**
     * Main entry point of the crawler
     */
    const start = async () => {
      let booklistRes = await bookListInit();
      if (!booklistRes) {
        logger.warn('Failed to crawl the book list, terminating...');
        return;
      }
      logger.info('Book list crawled successfully, now crawling the chapter lists...');

      let chapterlistRes = await chapterListInit();
      if (!chapterlistRes) {
        logger.warn('Failed to crawl the chapter lists, terminating...');
        return;
      }
      logger.info('Chapter lists crawled successfully, now crawling the book content...');

      let contentListRes = await contentListInit();
      if (!contentListRes) {
        logger.warn('Failed to crawl the chapter content, terminating...');
        return;
      }
      logger.info('Book content crawled successfully');
    }

    // Start only if the stage entry points were imported correctly
    if (typeof bookListInit === 'function' && typeof chapterListInit === 'function') {
      // Start crawling
      start();
    }

The three imported methods bookListInit, chapterListInit and contentListInit are defined as follows.

booklist.js

    /**
     * Initialization method. Resolves to true if crawling succeeded, false if it failed.
     */
    const bookListInit = async () => {
      logger.info('Starting to crawl the book list...');
      const pageUrlList = getPageUrlList(totalListPage, baseUrl);
      let res = await getBookList(pageUrlList);
      return res;
    }

chapterlist.js

    /**
     * Initialization entry
     */
    const chapterListInit = async () => {
      const list = await bookHelper.getBookList(bookListModel);
      if (!list) {
        logger.error('Failed to query the book list during initialization');
        // Stop here: without a list, list.length below would throw
        return false;
      }
      logger.info('Starting to crawl the chapter lists, number of books: ' + list.length);
      let res = await asyncGetChapter(list);
      return res;
    };

content.js

    /**
     * Initialization entry
     */
    const contentListInit = async () => {
      // Get the list of books
      const list = await bookHelper.getBookList(bookListModel);
      if (!list) {
        logger.error('Failed to query the book list during initialization');
        return;
      }
      const res = await mapBookList(list);
      if (!res) {
        logger.error('Crawling chapter content: the serial traversal calling getCurBookSectionList() reported an error in its completion callback. The error has been logged, please check the log!');
        return;
      }
      return res;
    }

Thoughts on content crawling

The logic for crawling the book list is simple: a single pass with async.mapLimit is enough to save the data. Crawling the content is, in simplified form, just traversing the chapter list and fetching the content behind each link. In reality there are tens of thousands of links, and from a memory point of view we cannot load them all into one array and iterate over it, so content crawling has to be split into units. The usual approach is to query a fixed number of links at a time and crawl those. Its drawback is that the batches are defined only by count: the items in a batch are unrelated, and with bulk inserts, fault tolerance becomes awkward if something goes wrong. We also wanted each book stored as its own collection. So we use the second approach: crawl and save the content one book at a time. The traversal uses async.mapLimit(list, 1, (series, callback) => {}), which unavoidably involves callbacks and is rather ugly. The second argument of async.mapLimit() sets the maximum number of concurrent operations.

    /*
     * Content crawling steps:
     * Step 1: get the book list, and for one book look up the list of all its chapters.
     * Step 2: traverse the chapter list, fetch the content and save it to the database.
     * Step 3: after saving, go back to step 1 and crawl and save the next book.
     */

    /**
     * Initialization entry
     */
    const contentListInit = async () => {
      // Get the list of books
      const list = await bookHelper.getBookList(bookListModel);
      if (!list) {
        logger.error('Failed to query the book list during initialization');
        return;
      }
      const res = await mapBookList(list);
      if (!res) {
        logger.error('Crawling chapter content: the serial traversal calling getCurBookSectionList() reported an error in its completion callback. The error has been logged, please check the log!');
        return;
      }
      return res;
    }

    /**
     * Traverse the books one at a time and crawl the chapters of each
     * @param {*} list
     */
    const mapBookList = (list) => {
      return new Promise((resolve, reject) => {
        async.mapLimit(list, 1, (series, callback) => {
          let doc = series._doc;
          getCurBookSectionList(doc, callback);
        }, (err, result) => {
          if (err) {
            logger.error('Error during asynchronous traversal of the book list!');
            logger.error(err);
            // Resolve with false rather than reject, so that the
            // `if (!res)` check in contentListInit is actually reached
            resolve(false);
            return;
          }
          resolve(true);
        })
      })
    }

    /**
     * Get the chapter list of a single book, then traverse it to crawl the content
     * @param {*} series
     * @param {*} callback
     */
    const getCurBookSectionList = async (series, callback) => {
      // Wait a random 1-2 seconds between books
      let num = Math.random() * 1000 + 1000;
      await sleep(num);
      let key = series.key;
      const res = await bookHelper.querySectionList(chapterListModel, {
        key: key
      });
      if (!res) {
        logger.error('Failed to get the chapter list of book: ' + series.bookName + ', moving on to the next book!');
        callback(null, null);
        return;
      }
      // Check whether this book's content has already been saved
      const bookItemModel = getModel(key);
      const contentLength = await bookHelper.getCollectionLength(bookItemModel, {});
      if (contentLength === res.length) {
        logger.info('Book: ' + series.bookName + ' is already fully saved in the database, moving on to the next book');
        callback(null, null);
        return;
      }
      await mapSectionList(res);
      callback(null, null);
    }
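To show what the concurrency limit does, and how the same traversal could be written with async/await alone, here is a minimal sketch. It is not the async library's implementation: `mapWithLimit` is a name invented for this example, and `sleep` is one common way to write the helper that the code above awaits (its real definition is not shown in the article).

```javascript
// Resolve after ms milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run worker(item) for every item, with at most `limit` calls in flight.
// Results keep the order of the input array.
async function mapWithLimit(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0;

  // Each runner claims the next unprocessed index until none are left.
  // The claim (next++) happens synchronously, so no index is taken twice.
  async function runner() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  const runners = [];
  for (let i = 0; i < Math.min(limit, items.length); i++) {
    runners.push(runner());
  }
  await Promise.all(runners);
  return results;
}

// With limit = 1 the items are processed strictly one after another,
// as in async.mapLimit(list, 1, ...).
mapWithLimit(['a', 'b', 'c'], 1, async (name) => {
  await sleep(10);
  return name.toUpperCase();
}).then((out) => console.log(out));
```

A worker that rejects makes the whole call reject, so callers that prefer the `if (!res)` style would wrap the call in try/catch and return false.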

Once the data is crawled, how to save it is the next question

Here the data is grouped by key: each time, the links are fetched by key and traversed. The advantage is that the data saved for one book forms a whole. Now consider how to save it:

- 1 Insert the whole book at once. Advantage: fast, since little time is spent on database operations. Disadvantage: some books have hundreds of chapters, so the content of hundreds of pages must be held before inserting, which consumes memory and may make the program unstable.

- 2 Insert each article into the database individually. Advantage: saving as each page is crawled means data is persisted promptly, and even if a later error occurs, earlier chapters need not be saved again. Disadvantage: it is obviously slow; crawling tens of thousands of pages means tens of thousands × N database operations. A buffer that collects a certain number of records and saves them once it is full would also be a good option.
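The buffered variant mentioned at the end of the second option could look roughly like this. It is a sketch under assumptions, not code from the project: `BatchWriter` is a name invented here, and `insertMany` is any async function that persists an array of documents (with mongoose it could wrap `Model.insertMany`).

```javascript
// Collects documents and writes them in batches of `size`.
class BatchWriter {
  constructor(insertMany, size = 50) {
    this.insertMany = insertMany; // async (docs) => void, supplied by the caller
    this.size = size;
    this.buffer = [];
  }

  // Queue one document and flush automatically once the buffer is full.
  async add(doc) {
    this.buffer.push(doc);
    if (this.buffer.length >= this.size) {
      await this.flush();
    }
  }

  // Write whatever is buffered. Call once more after the last add().
  async flush() {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = [];
    await this.insertMany(batch);
  }
}

// Usage: 7 documents with a batch size of 3 produce writes of 3, 3 and 1.
async function demo() {
  const batchSizes = [];
  const writer = new BatchWriter(async (docs) => {
    batchSizes.push(docs.length);
  }, 3);
  for (let i = 1; i <= 7; i++) {
    await writer.add({ section: i });
  }
  await writer.flush();
  return batchSizes;
}
```

The batch size trades memory for speed: a failed write loses at most one batch, and the number of database operations drops by roughly a factor of `size`.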

    /**
     * Traverse all chapters of a single book and call the content crawling method
     * @param {*} list
     */
    const mapSectionList = (list) => {
      return new Promise((resolve, reject) => {
        async.mapLimit(list, 1, (series, callback) => {
          let doc = series._doc;
          getContent(doc, callback)
        }, (err, result) => {
          if (err) {
            logger.error('Error during asynchronous traversal of the chapter list!');
            logger.error(err);
            // Resolve with false rather than reject: the caller awaits this
            // promise without try/catch and must still invoke its callback
            resolve(false);
            return;
          }
          const bookName = list[0].bookName;
          const key = list[0].key;

          // Option 1: save the whole book at once
          saveAllContentToDB(result, bookName, key, resolve);

          // Option 2: each article was already saved individually
          // logger.info(bookName + ' crawled, moving on to the next book...');
          // resolve(true);
        })
      })
    }

Both approaches have advantages and disadvantages, and we tried both. Two collections are prepared for recording errors, errContentModel and errorCollectionModel. When an insert fails, the information is saved to the corresponding collection; either one can be used. The reason for adding collections to hold this data is that it can be reviewed in one place and used for follow-up operations, without reading the logs.

(PS: errorCollectionModel alone would actually be enough. errContentModel has the advantage of keeping the complete chapter information.)

    // Records of failed saves, with the full chapter information
    const errorSpider = mongoose.Schema({
      chapter: String,
      section: String,
      url: String,
      key: String,
      bookName: String,
      author: String,
    })

    // Records of failed saves, keeping only key and bookName
    const errorCollection = mongoose.Schema({
      key: String,
      bookName: String,
    })
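How a failed insert might be turned into a record in the error collection can be sketched as follows. This is an assumption about the flow rather than the project's code: `saveWithErrorRecord`, `saveContent` and `saveError` are hypothetical names, and the two save functions stand in for writes to the content collection and the error collection.

```javascript
// Try to save one chapter. On failure, record the fields needed to retry it.
// saveContent and saveError are async functions supplied by the caller.
async function saveWithErrorRecord(doc, saveContent, saveError) {
  try {
    await saveContent(doc);
    return true;
  } catch (err) {
    // Keep only the fields defined in the errorSpider schema, not the content.
    const { chapter, section, url, key, bookName, author } = doc;
    await saveError({ chapter, section, url, key, bookName, author });
    return false;
  }
}
```

Storing the `url` and `key` makes it possible to re-crawl only the failed chapters later by querying the error collection, instead of searching the logs.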

The content of each book is stored in its own new collection, and the collection is named after the book's key.

Summary

The main difficulty in writing this project was controlling the stability of the program, setting up the fault-tolerance mechanisms, and recording errors. At present the project can basically run through the whole process in one go. There are certainly still many problems in the design, and corrections and discussion are welcome.

Easter egg

After writing this project I built a React-based front-end site for browsing the pages, and a server based on koa2.x. The overall technology stack is React + Redux + Koa2. The front-end and back-end services are deployed separately, and this independence removes the coupling between them: for example, the same server-side code can support not only the web client but also mobile clients and apps. The whole setup is still very simple, but it covers basic querying and browsing. I hope to have time to enrich the project later.

- Project address: guwen-spider

- Corresponding front end, React + Redux + semantic-ui: guwen-react

- Corresponding Node back end, Koa2.2 + mongoose: guwen-node

The project is very simple, but it gave me one more environment for studying and researching development from the front end through to the server side.

That's all.

Participation of this paper Tencent cloud media sharing plan , You are welcome to join us , share .

版权声明

本文为[:::::::]所创,转载请带上原文链接,感谢

边栏推荐

- C language 100 question set 004 - statistics of the number of people of all ages

- Network programming NiO: Bio and NiO

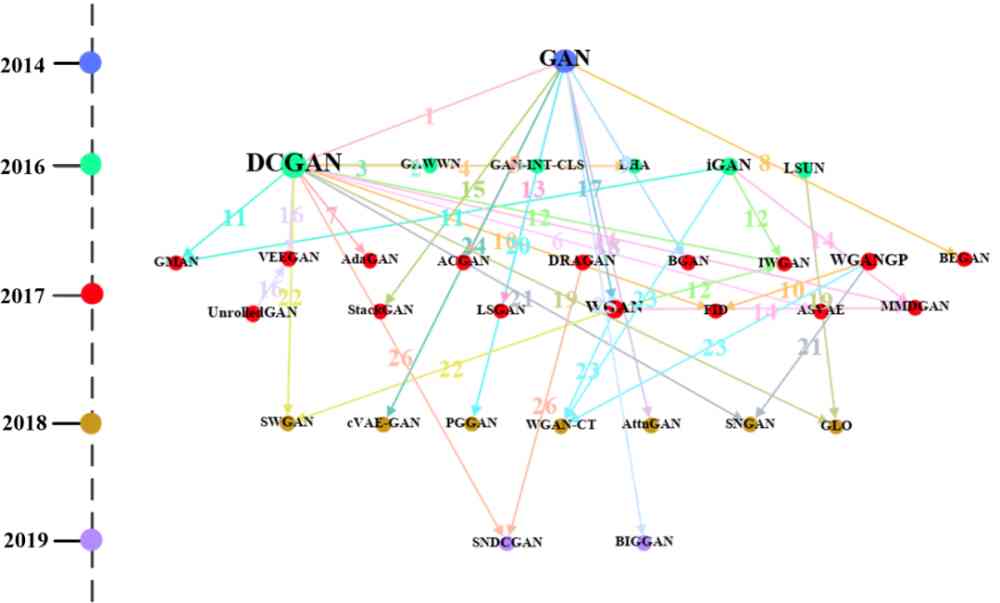

- 中国提出的AI方法影响越来越大,天大等从大量文献中挖掘AI发展规律

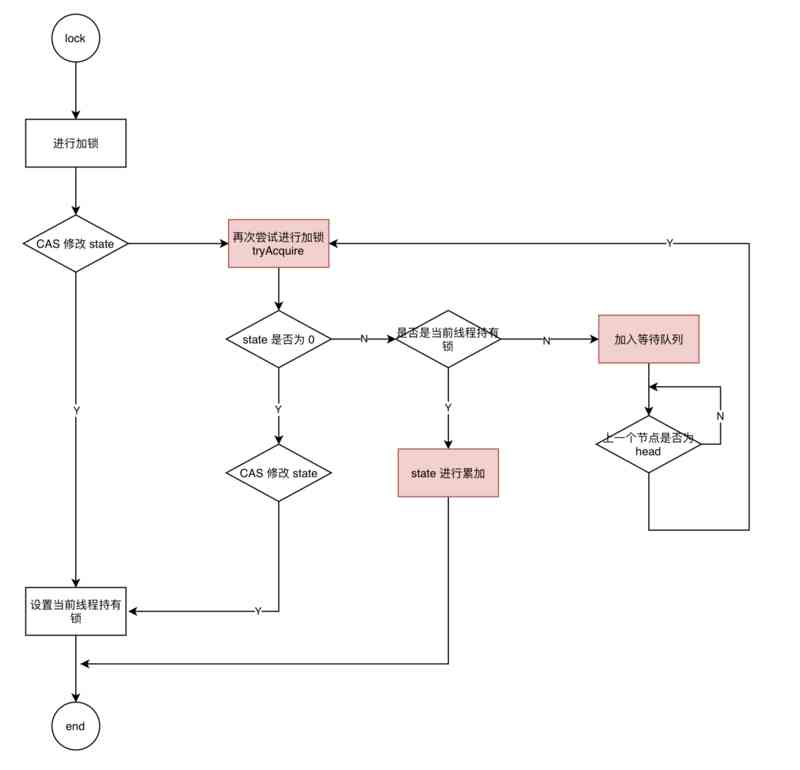

- Tool class under JUC package, its name is locksupport! Did you make it?

- The practice of the architecture of Internet public opinion system

- 事半功倍:在没有机柜的情况下实现自动化

- Filecoin最新动态 完成重大升级 已实现四大项目进展!

- 幽默:黑客式编程其实类似机器学习!

- Cocos Creator 原始碼解讀:引擎啟動與主迴圈

- Existence judgment in structured data

猜你喜欢

随机推荐

Asp.Net Core學習筆記:入門篇

Swagger 3.0 天天刷屏,真的香嗎?

Vue 3 responsive Foundation

DTU连接经常遇到的问题有哪些

Leetcode's ransom letter

技術總監,送給剛畢業的程式設計師們一句話——做好小事,才能成就大事

CCR炒币机器人:“比特币”数字货币的大佬,你不得不了解的知识

加速「全民直播」洪流,如何攻克延时、卡顿、高并发难题?

html

关于Kubernetes 与 OAM 构建统一、标准化的应用管理平台知识!(附网盘链接)

深度揭祕垃圾回收底層,這次讓你徹底弄懂她

至联云解析:IPFS/Filecoin挖矿为什么这么难?

DRF JWT authentication module and self customization

drf JWT認證模組與自定製

速看!互联网、电商离线大数据分析最佳实践!(附网盘链接)

用Keras LSTM构建编码器-解码器模型

This article will introduce you to jest unit test

全球疫情加速互联网企业转型,区块链会是解药吗?

Analysis of ThreadLocal principle

htmlcss