MySQL MHA high availability cluster

2022-07-05 08:13:00 【JackieZhengChina】

I. MHA overview

1.1. What is MHA?

1. MHA (Master High Availability) is a mature software suite for failover and master promotion in MySQL high-availability environments.

2. MHA was created to solve the MySQL single point of failure problem.

3. When a MySQL master fails, MHA can complete an automatic failover within 0 to 30 seconds.

4. During failover, MHA preserves data consistency as far as possible, which is what makes the setup genuinely highly available.

1.2. MHA components

(1) MHA Node (data node)

MHA Node runs on every MySQL server.

(2) MHA Manager (management node)

MHA Manager can be deployed on a dedicated machine to manage several master-slave clusters, or it can be deployed on one of the slave nodes.

MHA Manager monitors the master node. When the master fails, it automatically promotes the slave with the most recent data to be the new master and then repoints all other slaves to the new master. The whole failover process is transparent to the application.

1.3. MHA characteristics

1. During automatic failover, MHA tries to save the binary logs from the failed master so that as little data as possible is lost.

2. Semi-synchronous replication greatly reduces the risk of data loss. If even one slave has received the latest binary log, MHA can apply it to all other slaves, which keeps the data on all nodes consistent.

3. MHA currently supports a one-master, multi-slave architecture and needs at least three servers: one master and two slaves.

II. Preparing the MHA build

2.1. Experiment plan

- MHA architecture: ① install the database ② one master and two slaves ③ build MHA

- Fault simulation: ① the master fails ② the candidate master becomes the new master ③ the failed former master is repaired and rejoins MHA as a slave

III. Building MHA

3.1 Configure master-slave replication

1. Disable the firewall and SELinux

### Run on all four servers

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

2. Set the hostnames of the Master, Slave1, and Slave2 nodes

### On Master

hostnamectl set-hostname Mysql1

su

### On Slave1

hostnamectl set-hostname Mysql2

su

### On Slave2

hostnamectl set-hostname Mysql3

su

3. Add name resolution on Master, Slave1, and Slave2 (append these entries to /etc/hosts)

192.168.40.10 Mysql1

192.168.40.100 Mysql2

192.168.40.30 Mysql3
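The three entries have to be present on every database node, so an idempotent helper can be handy. The following is only a sketch: the function name `add_host_entry` is made up for illustration, and the demo writes to a scratch file rather than to /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "ip name" to a hosts file unless the name is already mapped.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Look for the name as a whole field on any non-comment line.
    if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demo against a scratch file; use /etc/hosts (as root) on the real nodes.
HOSTS_FILE="$(mktemp)"
add_host_entry "$HOSTS_FILE" 192.168.40.10  Mysql1
add_host_entry "$HOSTS_FILE" 192.168.40.100 Mysql2
add_host_entry "$HOSTS_FILE" 192.168.40.30  Mysql3
add_host_entry "$HOSTS_FILE" 192.168.40.10  Mysql1   # repeated call changes nothing
cat "$HOSTS_FILE"
```

Running the same calls again leaves the file unchanged, which makes the step safe to repeat on all three nodes.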

4. Configure master-slave synchronization

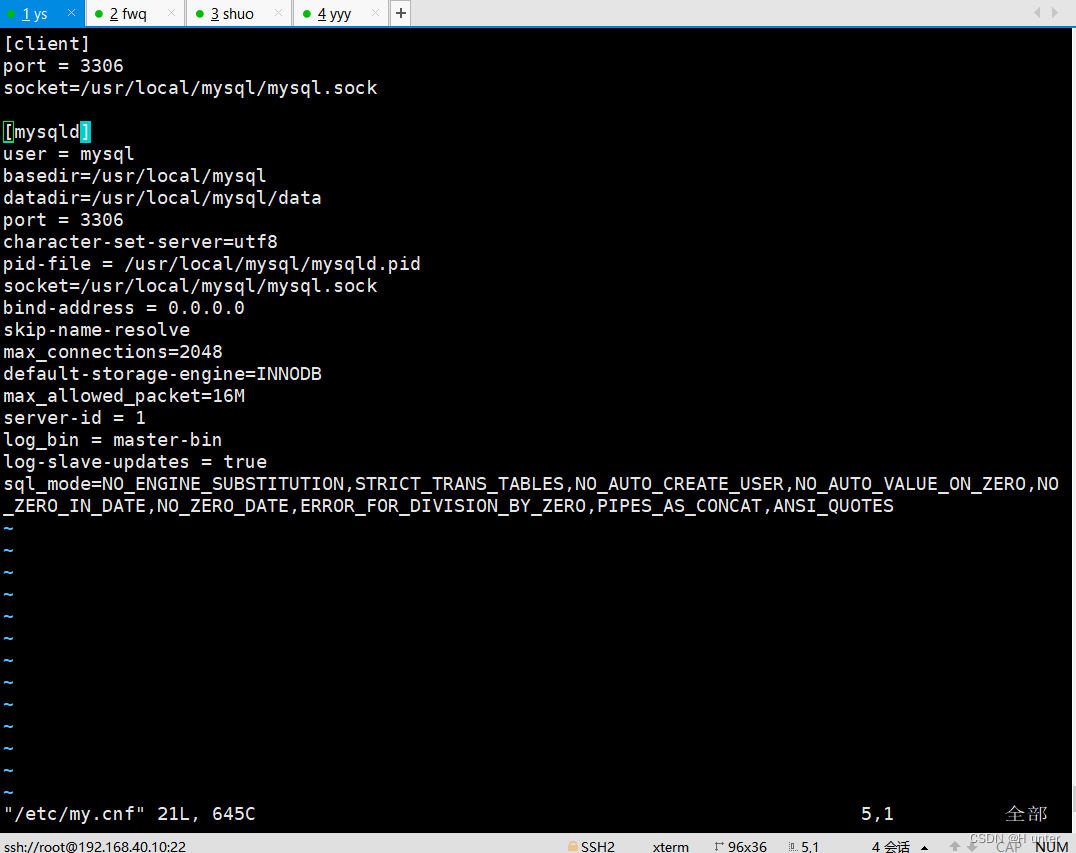

### modify Master、Slave1、Slave2 Node Mysql Master profile /etc/my.cnf

###Master node ###

vim /etc/my.cnf

[mysqld]

server-id = 1

log_bin = master-bin

log-slave-updates = true

systemctl restart mysqld

### Slave1 node ###

vim /etc/my.cnf

server-id = 2 ### server-id must be different on each of the three servers

log_bin = master-bin

relay-log = relay-log-bin

relay-log-index = slave-relay-bin.index

systemctl restart mysqld

### Slave2 node ###

vim /etc/my.cnf

server-id = 3

log_bin = master-bin

relay-log = relay-log-bin

relay-log-index = slave-relay-bin.index

systemctl restart mysqld
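Since replication breaks when two servers share a server-id, it can be worth checking the three configuration files before restarting. This is a sketch only: `get_server_id` and `server_ids_unique` are hypothetical helper names, and the demo uses scratch copies instead of the real /etc/my.cnf files collected from each node.

```shell
#!/usr/bin/env bash
# Print the server-id value from a my.cnf-style file (accepts server-id or server_id).
get_server_id() {
    sed -n 's/^[[:space:]]*server[-_]id[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$1" | head -n 1
}

# Succeed only if every file has a server-id and no two files share one.
server_ids_unique() {
    local f id ids=""
    for f in "$@"; do
        id="$(get_server_id "$f")"
        [ -n "$id" ] || { echo "no server-id in $f" >&2; return 1; }
        case " $ids " in
            *" $id "*) echo "duplicate server-id $id in $f" >&2; return 1 ;;
        esac
        ids="$ids $id"
    done
}

# Demo with scratch files that mimic the three nodes' settings.
tmp="$(mktemp -d)"
printf '[mysqld]\nserver-id = 1\n' > "$tmp/master.cnf"
printf '[mysqld]\nserver-id = 2 ### trailing comment\n' > "$tmp/slave1.cnf"
printf '[mysqld]\nserver-id = 3\n' > "$tmp/slave2.cnf"
server_ids_unique "$tmp/master.cnf" "$tmp/slave1.cnf" "$tmp/slave2.cnf" && echo "server-id values are unique"
```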

5. Create two symbolic links on the Master, Slave1, and Slave2 nodes

### Create them on the master and on both slaves

ln -s /usr/local/mysql/bin/mysql /usr/sbin/

ln -s /usr/local/mysql/bin/mysqlbinlog /usr/sbin/

ls /usr/sbin/mysql*

6. Log in to the database and grant the replication and MHA privileges

################# Run the grants on all of Master, Slave1, and Slave2 ###################

grant replication slave on *.* to 'myslave'@'192.168.40.%' identified by '123456';

### The grant above creates the replication user; the grants below create the user that the MHA manager logs in with

grant all privileges on *.* to 'mha'@'192.168.40.%' identified by 'manager';

grant all privileges on *.* to 'mha'@'Mysql1' identified by 'manager';

grant all privileges on *.* to 'mha'@'Mysql2' identified by 'manager';

grant all privileges on *.* to 'mha'@'Mysql3' identified by 'manager';

# Reload the privilege tables

flush privileges;

Note: the grants must be run on the master and on both slaves.

7. On Master, check the binary log file and position; on Slave1 and Slave2, start synchronization

################# On master ##################

show master status;

############## On slave1 and slave2, start synchronization ############

### master_log_file and master_log_pos must match the output of show master status on the master

change master to master_host='192.168.40.10',master_user='myslave',master_password='123456',master_log_file='master-bin.000001',master_log_pos=1745;

start slave;

### Slave_IO_Running and Slave_SQL_Running should both be Yes

show slave status\G
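Reading the slave status by eye is easy to get wrong, so the check can be scripted. A minimal sketch, assuming the usual `\G` layout with one `Field: value` pair per line; `replication_ok` is a hypothetical name, and the sample text stands in for real server output.

```shell
#!/usr/bin/env bash
# Read "show slave status\G" output on stdin; succeed only if both replication threads run.
replication_ok() {
    awk '
        $1 == "Slave_IO_Running:"  { io  = $2 }
        $1 == "Slave_SQL_Running:" { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Sample output fragment used for the demo.
sample='               Slave_IO_State: Waiting for master to send event
                  Master_Host: 192.168.40.10
             Slave_IO_Running: Yes
            Slave_SQL_Running: Yes'
printf '%s\n' "$sample" | replication_ok && echo "replication threads are running"

# On a real slave the input would come from the client, for example:
#   mysql -uroot -p -e 'show slave status\G' | replication_ok
```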

8. Set both slave nodes to read-only mode

### Run on both slave servers

set global read_only=1;

9. Verify master-slave replication

### On the master, create a database and a table, then insert a row

create database test1;

use test1;

### A single-column table is assumed here; adjust the definition as needed

create table info (name varchar(20));

insert into info values('Xiao Ming');

select * from test1.info;

### Verify on slave1 and slave2

select * from test1.info;

3.2. Install the MHA software

1. Install the MHA dependencies on all servers; install the epel repository first

### Run on the master, slave1, slave2, and mha servers

yum install epel-release --nogpgcheck -y

yum install -y perl-DBD-MySQL \

perl-Config-Tiny \

perl-Log-Dispatch \

perl-Parallel-ForkManager \

perl-ExtUtils-CBuilder \

perl-ExtUtils-MakeMaker \

perl-CPAN

2. Install the MHA packages; the node component must be installed on all servers first

- The required version depends on the operating system; for CentOS 7.4 use version 0.57.

- Install the node component on every server first, and only then install the manager component on the MHA-manager node, because manager depends on node.

## Download the required packages to /opt ##

## Unpack and install the node component on every server ##

cd /opt

tar zxf mha4mysql-node-0.57.tar.gz

cd mha4mysql-node-0.57

perl Makefile.PL

make && make install

Note: install the node component on all four servers.

3. Install the manager component on the MHA-manager node

### Put the required package in /opt

tar zxvf mha4mysql-manager-0.57.tar.gz

cd mha4mysql-manager-0.57/

perl Makefile.PL

make && make install

cd /usr/local/bin/

# After installation, the manager component places several tools in /usr/local/bin, mainly:

masterha_check_ssh         checks MHA's SSH configuration

masterha_check_repl        checks the MySQL replication status

masterha_manager           starts the manager

masterha_check_status      reports the current MHA running state

masterha_master_monitor    detects whether the master is down

masterha_master_switch     controls failover (automatic or manual)

masterha_conf_host         adds or removes configured server entries

masterha_stop              stops the manager

# The node component also installs several scripts in /usr/local/bin (they are normally triggered by the MHA Manager scripts and need no manual operation), mainly:

save_binary_logs           saves and copies the master's binary logs

apply_diff_relay_logs      identifies differing relay log events and applies them to the other slaves

filter_mysqlbinlog         removes unnecessary ROLLBACK events (MHA no longer uses this tool)

purge_relay_logs           purges relay logs (without blocking the SQL thread)

4. Configure passwordless SSH authentication on all servers

### On the manager node, configure passwordless authentication to all database nodes

ssh-keygen -t rsa # press Enter at every prompt

ssh-copy-id 192.168.40.10

ssh-copy-id 192.168.40.100

ssh-copy-id 192.168.40.30

#### The database servers exchange keys with each other ####

### On master

ssh-keygen -t rsa # press Enter at every prompt

ssh-copy-id 192.168.40.100

ssh-copy-id 192.168.40.30

### On slave1

ssh-keygen -t rsa # press Enter at every prompt

ssh-copy-id 192.168.40.10

ssh-copy-id 192.168.40.30

### On slave2

ssh-keygen -t rsa # press Enter at every prompt

ssh-copy-id 192.168.40.10

ssh-copy-id 192.168.40.100

### Passwordless login test ###

### On the manager node

ssh 192.168.40.10

ssh 192.168.40.100

ssh 192.168.40.30

### On the master node

ssh 192.168.40.100

ssh 192.168.40.30

### On the slave1 node

ssh 192.168.40.10

ssh 192.168.40.30

### On the slave2 node

ssh 192.168.40.10

ssh 192.168.40.100
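The key exchange follows one pattern: every database node copies its key to the other two. A small sketch of that pattern follows; `peers_of` and `can_ssh` are hypothetical helper names, and the demo only prints the commands instead of contacting any host.

```shell
#!/usr/bin/env bash
# The three database nodes used throughout this article.
NODES="192.168.40.10 192.168.40.100 192.168.40.30"

# Print every database node except the one given.
peers_of() {
    local self="$1" ip
    for ip in $NODES; do
        [ "$ip" = "$self" ] || echo "$ip"
    done
}

# Succeed if root can log in with keys only; BatchMode makes ssh fail instead of prompting.
can_ssh() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$1" true
}

# Dry run: list the ssh-copy-id commands the master (192.168.40.10) has to execute.
for ip in $(peers_of 192.168.40.10); do
    echo "ssh-copy-id $ip"
done

# After the keys are in place, a check from the same node could look like:
#   for ip in $(peers_of 192.168.40.10); do can_ssh "$ip" || echo "login to $ip failed"; done
```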

5. Operations on the manager node

(1) ### On the manager node, copy the sample scripts to the /usr/local/bin directory

cp -rp /opt/mha4mysql-manager-0.57/samples/scripts /usr/local/bin

# After copying there are four executable files

ll /usr/local/bin/scripts/

master_ip_failover # script that manages the VIP during automatic failover

master_ip_online_change # script that manages the VIP during an online switch

power_manager # script that shuts down the host after a failure

send_report # script that sends an alert after failover

(2) ### Copy the VIP management scripts above to /usr/local/bin; master_ip_failover is used here to manage the VIP and failover

cp /usr/local/bin/scripts/* /usr/local/bin

(3) ### Edit master_ip_failover: delete the existing content, add the following, and adjust the parameters for your environment

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

############################# Added section #########################################

my $vip = '192.168.40.188'; # VIP address

my $brdc = '192.168.40.255'; # broadcast address for the VIP

my $ifdev = 'ens33'; # network interface the VIP is bound to

my $key = '1'; # index of the virtual interface the VIP is bound to

my $ssh_start_vip = "/sbin/ifconfig ens33:$key $vip"; # expands to: /sbin/ifconfig ens33:1 192.168.40.188

my $ssh_stop_vip = "/sbin/ifconfig ens33:$key down"; # expands to: /sbin/ifconfig ens33:1 down

my $exit_code = 0; # default exit status code is 0

#my $ssh_start_vip = "/usr/sbin/ip addr add $vip/24 brd $brdc dev $ifdev label $ifdev:$key;/usr/sbin/arping -q -A -c 1 -I $ifdev $vip;iptables -F;";

#my $ssh_stop_vip = "/usr/sbin/ip addr del $vip/24 dev $ifdev label $ifdev:$key";

##################################################################################

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

exit 0;

}

else {

&usage();

exit 1;

}

}

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

## A simple system call that disable the VIP on the old_master

sub stop_vip() {

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

# Remove the leading comment markers (vim command)

:2,87 s/^#//

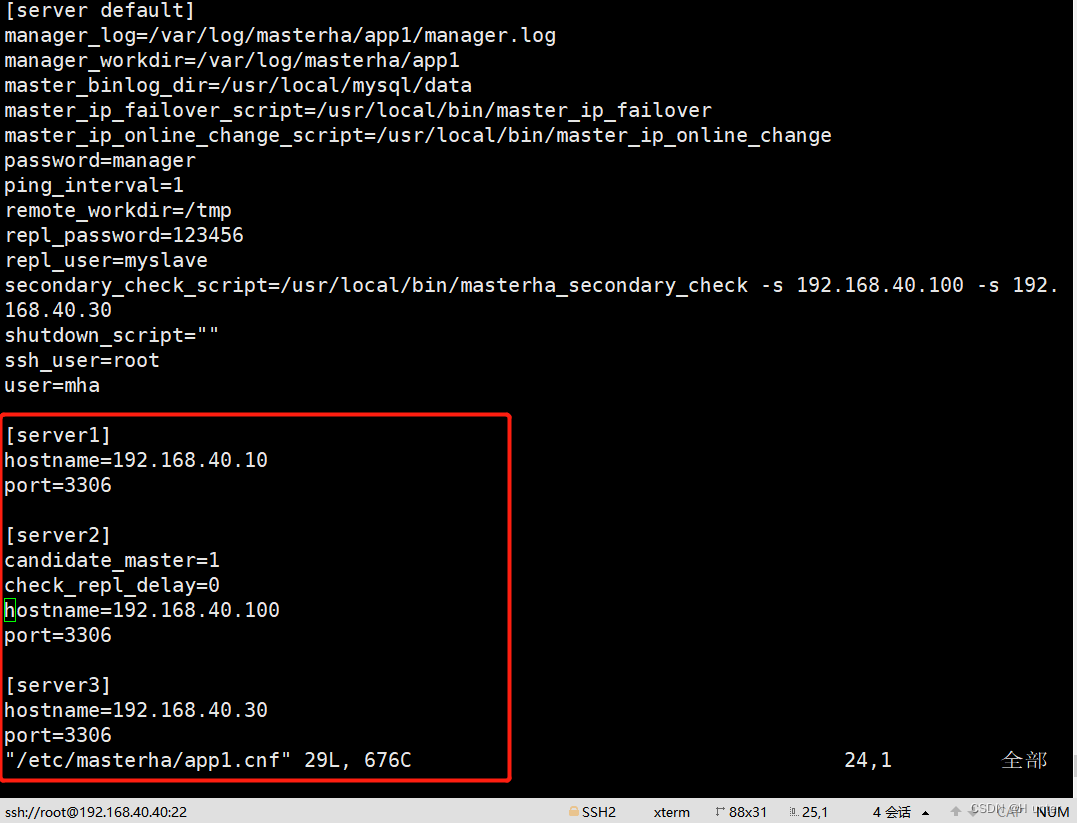

(4) ### Create the MHA directory and copy the configuration file; app1.cnf is used here to manage the MySQL node servers

cd /opt/mha4mysql-manager-0.57/samples/conf/

ls

mkdir /etc/masterha

cp app1.cnf /etc/masterha/

# Edit app1.cnf: delete the original content and add the following

vim /etc/masterha/app1.cnf

[server default]

manager_workdir=/var/log/masterha/app1

master_binlog_dir=/usr/local/mysql/data

manager_log=/var/log/masterha/app1/manager.log

master_ip_failover_script=/usr/local/bin/master_ip_failover

master_ip_online_change_script=/usr/local/bin/master_ip_online_change

password=manager

ping_interval=1

remote_workdir=/tmp

repl_password=123456

repl_user=myslave

secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.40.100 -s 192.168.40.30

shutdown_script=""

ssh_user=root

user=mha

[server1]

hostname=192.168.40.10

port=3306

[server2]

candidate_master=1

check_repl_delay=0

hostname=192.168.40.100

port=3306

[server3]

hostname=192.168.40.30

port=3306

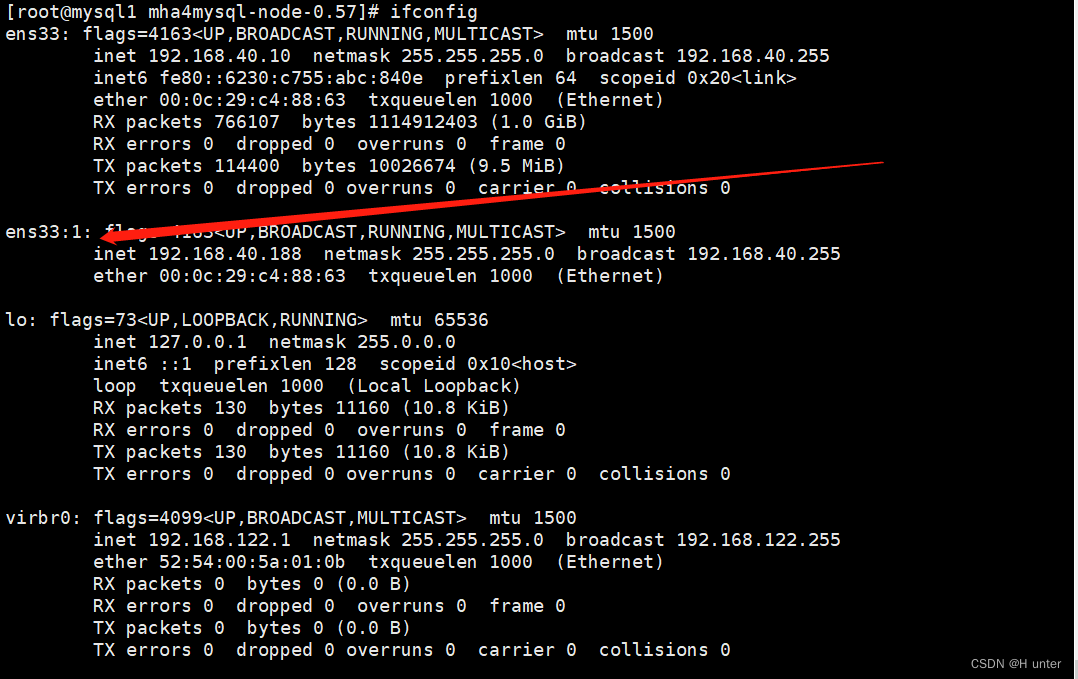
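Hand-edited MHA configuration files easily pick up repeated keys, so a quick lint before starting the manager can help. The sketch below is an assumption-laden convenience, not part of MHA: `dup_keys` is a hypothetical helper, and the demo runs on a scratch file rather than /etc/masterha/app1.cnf.

```shell
#!/usr/bin/env bash
# Print "[section] key" for every key that appears more than once inside one section.
dup_keys() {
    awk '
        /^[ \t]*#/  { next }                      # skip comment lines
        /^[ \t]*$/  { next }                      # skip blank lines
        /^[ \t]*\[/ { section = $0; next }        # remember the current section header
        {
            key = $0
            sub(/=.*/, "", key)                   # keep the text before the first "="
            gsub(/[ \t]/, "", key)
            if (seen[section, key]++ == 1) print section, key
        }
    ' "$1"
}

# Demo: a scratch file with one repeated key in [server default].
cnf="$(mktemp)"
cat > "$cnf" <<'EOF'
[server default]
manager_workdir=/var/log/masterha/app1
manager_log=/var/log/masterha/app1/manager.log
manager_workdir=/var/log/masterha/app1
[server1]
hostname=192.168.40.10
port=3306
[server2]
hostname=192.168.40.100
port=3306
EOF
dup_keys "$cnf"
```

Keys such as hostname and port repeat across the [serverN] sections by design, which is why the check is scoped to a single section.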

(5)### Turn on the virtual node at the master node IP

ifconfig ens33:1 192.168.40.188/24

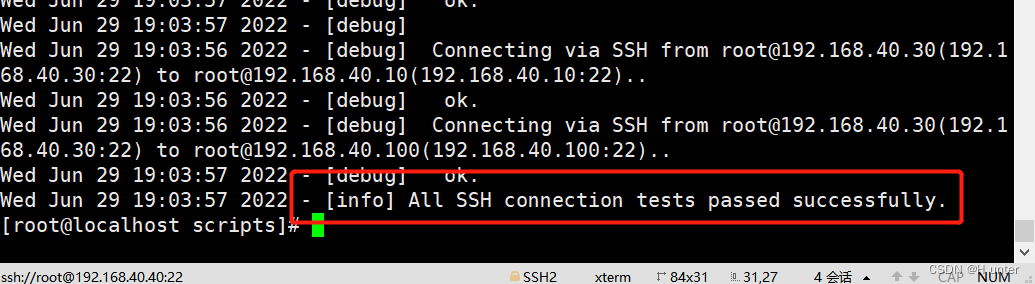

(6)### stay manager Testing on nodes ssh No password authentication , If it's normal, it will output successfully. As shown below

masterha_check_ssh -conf=/etc/masterha/app1.cnf

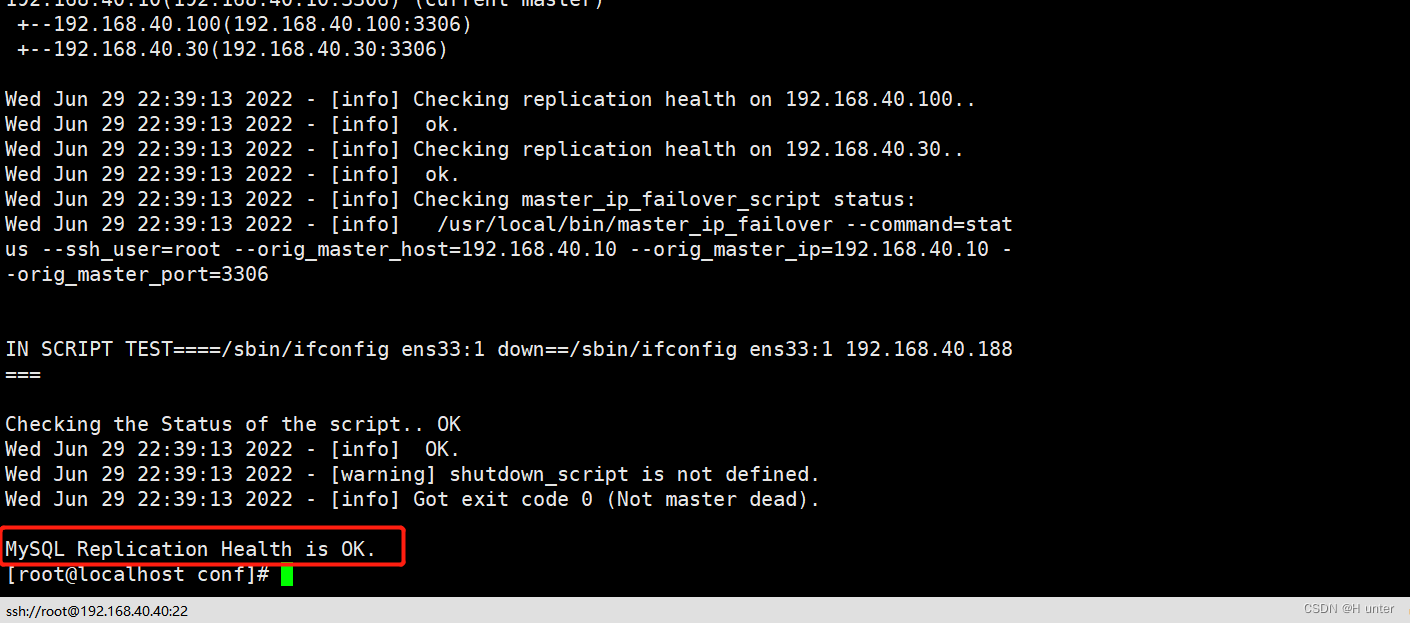

(7)### stay manager Testing on nodes mysql Master-slave connection , Last appearance MySQL Replication Health is OK The words indicate normal . As shown below .

masterha_check_repl -conf=/etc/masterha/app1.cnf

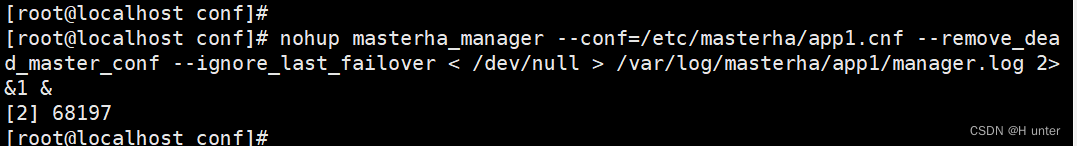

(8)### stay manager Start on the node MHA

nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 &

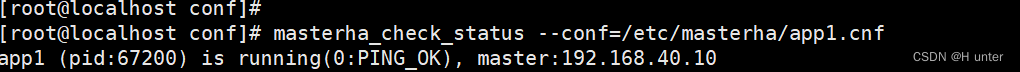

(9)### see MHA state , You can see the current master yes Mysql1 node .

masterha_check_status --conf=/etc/masterha/app1.cnf

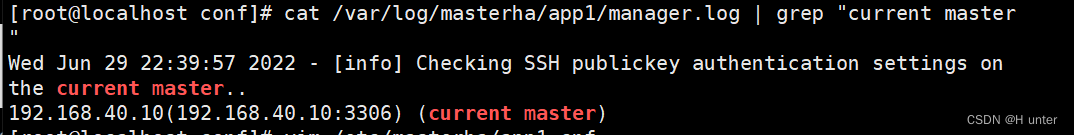

(10)### see MHA journal , Also to see the current master yes 192.168.250.118, As shown below .

cat /var/log/masterha/app1/manager.log | grep "current master"

(11)### see Mysql1 Of VIP Address 192.168.250.118 Whether there is , This VIP The address is not because manager Nodes stop MHA Service and disappear .

ifconfig

// To shut down manager service , You can use the following command .

masterha_stop --conf=/etc/masterha/app1.cnf

Or you can directly use kill process ID The way to turn off .

The configuration file is explained below:

[server default]

manager_log=/var/log/masterha/app1/manager.log # manager log file

manager_workdir=/var/log/masterha/app1 # manager working directory

master_binlog_dir=/usr/local/mysql/data/ # where the master stores its binlogs; this path must match the binlog path configured on the master so that MHA can find them

master_ip_failover_script=/usr/local/bin/master_ip_failover # switchover script used for automatic failover, i.e. the script above

master_ip_online_change_script=/usr/local/bin/master_ip_online_change # switchover script used for a manual switch

password=manager # password of the MHA monitoring user (mha) created earlier

ping_interval=1 # interval in seconds between pings sent to the master; the default is 3 seconds, and failover starts automatically after three attempts without a response

remote_workdir=/tmp # directory on the remote MySQL hosts where binlogs are saved during a switchover

repl_password=123456 # password of the replication user

repl_user=myslave # name of the replication user

report_script=/usr/local/send_report # script that sends an alert after a switchover

secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.40.100 -s 192.168.40.30 # IP addresses of the slave servers used for the secondary check

shutdown_script="" # script that shuts down the failed host after a failure (its main purpose is to power off the host and prevent split-brain; not used here)

ssh_user=root # SSH login user name

user=mha # monitoring user

[server1]

hostname=192.168.40.10

port=3306

[server2]

hostname=192.168.40.100

port=3306

candidate_master=1

# Marks this server as a candidate master. With this parameter set, this slave is promoted to master after a switchover even if it is not the most up-to-date slave in the cluster

check_repl_delay=0

# By default, MHA does not choose a slave as the new master if it is more than 100 MB of relay logs behind the master, because recovering that slave would take a long time. With check_repl_delay=0, MHA ignores replication delay when choosing the new master. This is very useful for a host with candidate_master=1, because it guarantees that the candidate becomes the new master during the switchover

[server3]

hostname=192.168.40.30

port=3306

3.3. fault simulation

### stay manager Monitoring observation logging on the node

tail -f /var/log/masterha/app1/manager.log

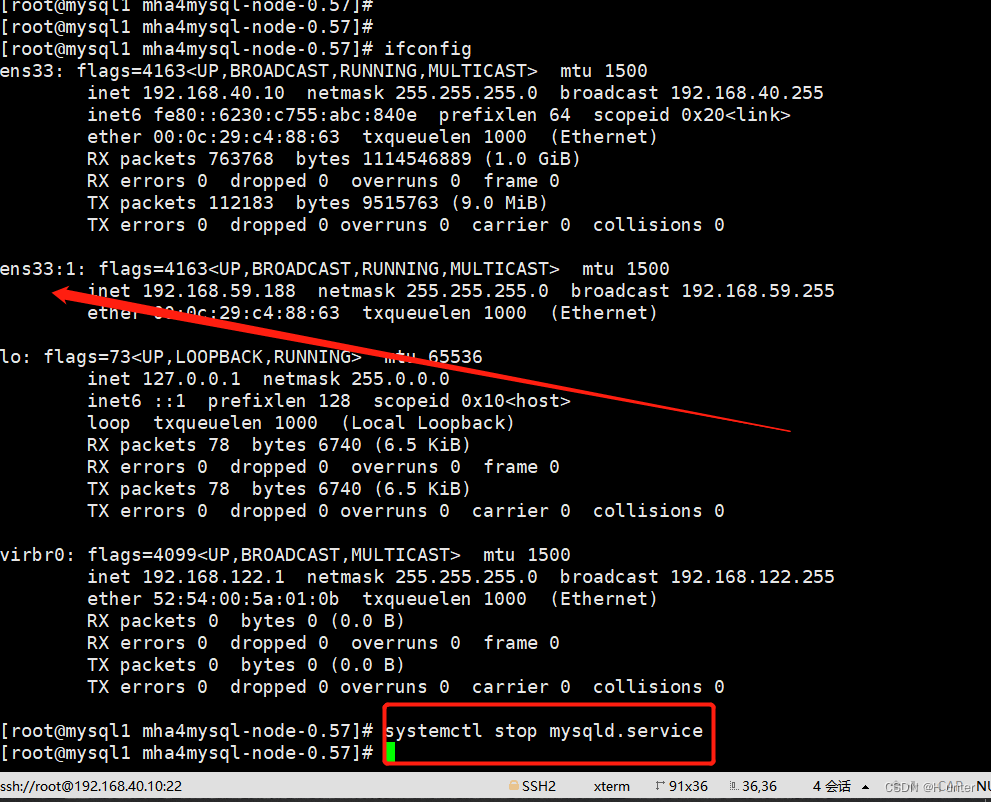

### stay Master node Mysql1 Stop on mysql service

systemctl stop mysqld

or

pkill -9 mysql

### stay slave1,slave2 Check out , After normal automatic switching once ,MHA The process will exit .HMA Will automatically modify app1.cnf The contents of the document , Will be down mysql1 The node to delete .

see mysql2 Take over VIP

ifconfig

How the new master is chosen during failover:

1. Normally the slaves are compared by replication position (position/GTID). If their data differs, the slave closest to the master becomes the candidate.

2. If the data is identical, the candidate is chosen in configuration-file order.

3. If a weight is set (candidate_master=1), the candidate is forced by weight.

(1) By default, a slave that is more than 100 MB of relay logs behind the master is not chosen, even if it has the weight.

(2) With check_repl_delay=0, it is forced to become the new master even if it is far behind.
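The three rules can be written down as a small decision function. This sketch follows the rules exactly as stated in this article, not MHA's actual source code; the function name `pick_new_master` and the four-column input format (name, relay-log lag in bytes, candidate_master, check_repl_delay) are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Input on stdin, one slave per line, in configuration-file order:
#   name  lag_bytes  candidate_master  check_repl_delay
pick_new_master() {
    awk -v limit=$((100 * 1024 * 1024)) '
        {
            name = $1; lag = $2 + 0; cand = $3 + 0; check = $4 + 0
            # Rule 3: the first candidate wins, unless it lags more than 100 MB
            # while the delay check is still enabled.
            if (cand == 1 && forced == "" && (lag <= limit || check == 0)) forced = name
            # Rules 1 and 2: least lag wins; the strict "<" keeps the earlier entry on ties.
            if (best == "" || lag < best_lag) { best = name; best_lag = lag }
        }
        END { print (forced != "" ? forced : best) }
    '
}

# Candidate with a small lag is forced:
printf '%s\n' 'Mysql2 2048 1 0' 'Mysql3 0 0 1' | pick_new_master          # prints Mysql2
# Candidate 200 MB behind with the delay check on is skipped:
printf '%s\n' 'Mysql2 209715200 1 1' 'Mysql3 0 0 1' | pick_new_master     # prints Mysql3
# Same lag, but check_repl_delay=0 forces the candidate anyway:
printf '%s\n' 'Mysql2 209715200 1 0' 'Mysql3 0 0 1' | pick_new_master     # prints Mysql2
```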

3.4. Fault recovery

1. # Repair mysql1

systemctl restart mysqld

2. # Repair master-slave replication

# On the current master Mysql2, check the binary log file and position

show master status;

# On the original master mysql1, start synchronization (use the file and position reported by show master status)

change master to master_host='192.168.40.100',master_user='myslave',master_password='123456',master_log_file='master-bin.000003',master_log_pos=154;

start slave;

show slave status\G
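Copying the file name and position by hand is where this step usually goes wrong, so the statement can be generated from the status output instead. A sketch under assumptions: `build_change_master` is a hypothetical helper, and the input is one line in the form printed by `mysql -N -e 'show master status'` (file name first, position second).

```shell
#!/usr/bin/env bash
# Build a CHANGE MASTER TO statement from one "show master status" result line.
build_change_master() {
    local host="$1" user="$2" pass="$3" status_line="$4" file pos
    file="$(printf '%s\n' "$status_line" | awk '{ print $1 }')"   # binary log file name
    pos="$(printf '%s\n' "$status_line" | awk '{ print $2 }')"    # binary log position
    printf "change master to master_host='%s',master_user='%s',master_password='%s',master_log_file='%s',master_log_pos=%s;\n" \
        "$host" "$user" "$pass" "$file" "$pos"
}

# Demo with the values used in this step.
build_change_master 192.168.40.100 myslave 123456 'master-bin.000003 154'
# prints: change master to master_host='192.168.40.100',master_user='myslave',master_password='123456',master_log_file='master-bin.000003',master_log_pos=154;
```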

3. # On the manager node, edit app1.cnf and add the server1 record back (MHA removed it automatically when it detected the failure)

vi /etc/masterha/app1.cnf

....

secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.40.100 -s 192.168.40.30

....

[server1]

hostname=192.168.40.10

port=3306

[server2]

candidate_master=1

check_repl_delay=0

hostname=192.168.40.100

port=3306

[server3]

hostname=192.168.40.30

port=3306
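Re-adding the removed server block can also be scripted so that it is safe to repeat. This is a sketch only: `has_server` and `add_server` are hypothetical helpers, the caller is responsible for choosing a section name that is not already in use, and the demo edits a scratch file rather than /etc/masterha/app1.cnf.

```shell
#!/usr/bin/env bash
# Succeed if some section of the MHA config already lists this hostname.
has_server() {
    awk -F= -v h="$2" '
        { key = $1; val = $2; gsub(/[ \t]/, "", key); gsub(/[ \t]/, "", val) }
        key == "hostname" && val == h { found = 1 }
        END { exit !found }
    ' "$1"
}

# Append a [section] block for the host unless the host is already present.
add_server() {
    local cnf="$1" section="$2" host="$3"
    has_server "$cnf" "$host" && return 0
    printf '\n[%s]\nhostname=%s\nport=3306\n' "$section" "$host" >> "$cnf"
}

# Demo: the state after failover, where the server1 block has been removed.
cnf="$(mktemp)"
printf '[server2]\nhostname=192.168.40.100\nport=3306\n\n[server3]\nhostname=192.168.40.30\nport=3306\n' > "$cnf"
add_server "$cnf" server1 192.168.40.10
add_server "$cnf" server1 192.168.40.10   # second call is a no-op
cat "$cnf"
```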

4. # Start MHA on the manager node

nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 &

IV. Summary

1. MHA

① Purpose: MySQL high availability and failover.

② Core components: manager starts and stops MHA and monitors the health of the MySQL nodes. node saves as much of the binary log as possible when a failure occurs and carries out the failover (the VIP floats to the new master).

③ Configuration files MHA needs (2):

master_ip_failover: a command-line script that defines VIP-based detection and failover (the VIP moves from the old master to the new master).

app1.cnf: the main MHA configuration file. It defines MHA's working directory and log, the MySQL binary log location, the user and password MHA uses to log in to MySQL, and the account and password the slaves use to synchronize from the master (five items).

④ What MHA does during failover:

(1) MHA checks the master's state several times.

(2) MHA tries several times to save as much of the master's binary log as possible.

(3) Based on the settings in app1.cnf, MHA promotes a slave to master.

(4) MHA then moves the master's VIP to the promoted slave.

(5) After choosing the new master, MHA runs change master on the remaining slaves so that they point to the new master, which keeps the MySQL cluster healthy.

2. MHA troubleshooting checklist

① Create the symbolic links.

② Passwordless (non-interactive) SSH login.

③ Five account grants (three of them are needed only in this test environment).

④ When MHA is started for the first time, the virtual IP has to be added manually.

⑤ Check the configuration files (master_ip_failover, the failover script, and app1.cnf, the main MHA configuration file).

⑥ Install the node component first, then the manager component.

---------------------

Author: H unter

Source: CSDN

Original: https://blog.csdn.net/m0_59579177/article/details/125531603

Copyright notice: this is the author's original article; please include a link to the blog post when reprinting.

Content analysis By:CSDN,CNBLOG One click reprint plugin for blog posts

边栏推荐

- Sizeof (function name) =?

- [trio basic tutorial 18 from introduction to proficiency] trio motion controller UDP fast exchange data communication

- Several important parameters of LDO circuit design and type selection

- Soem EtherCAT source code analysis attachment 1 (establishment of communication operation environment)

- The firmware of the connected j-link does not support the following memory access

- matlab timeserise

- Bluetooth hc-05 pairing process and precautions

- Summary -st2.0 Hall angle estimation

- Use indent to format code

- Altium designer learning (I)

猜你喜欢

Design a clock frequency division circuit that can be switched arbitrarily

Negative pressure generation of buck-boost circuit

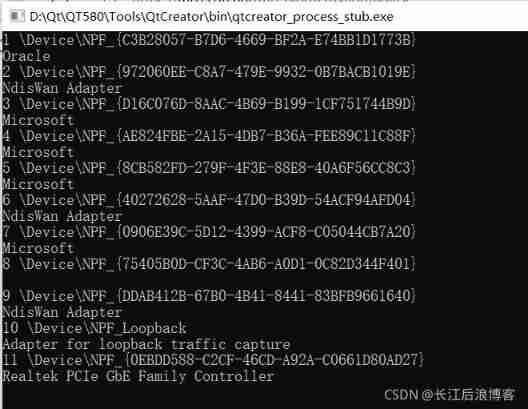

Soem EtherCAT source code analysis attachment 1 (establishment of communication operation environment)

C # joint configuration with Halcon

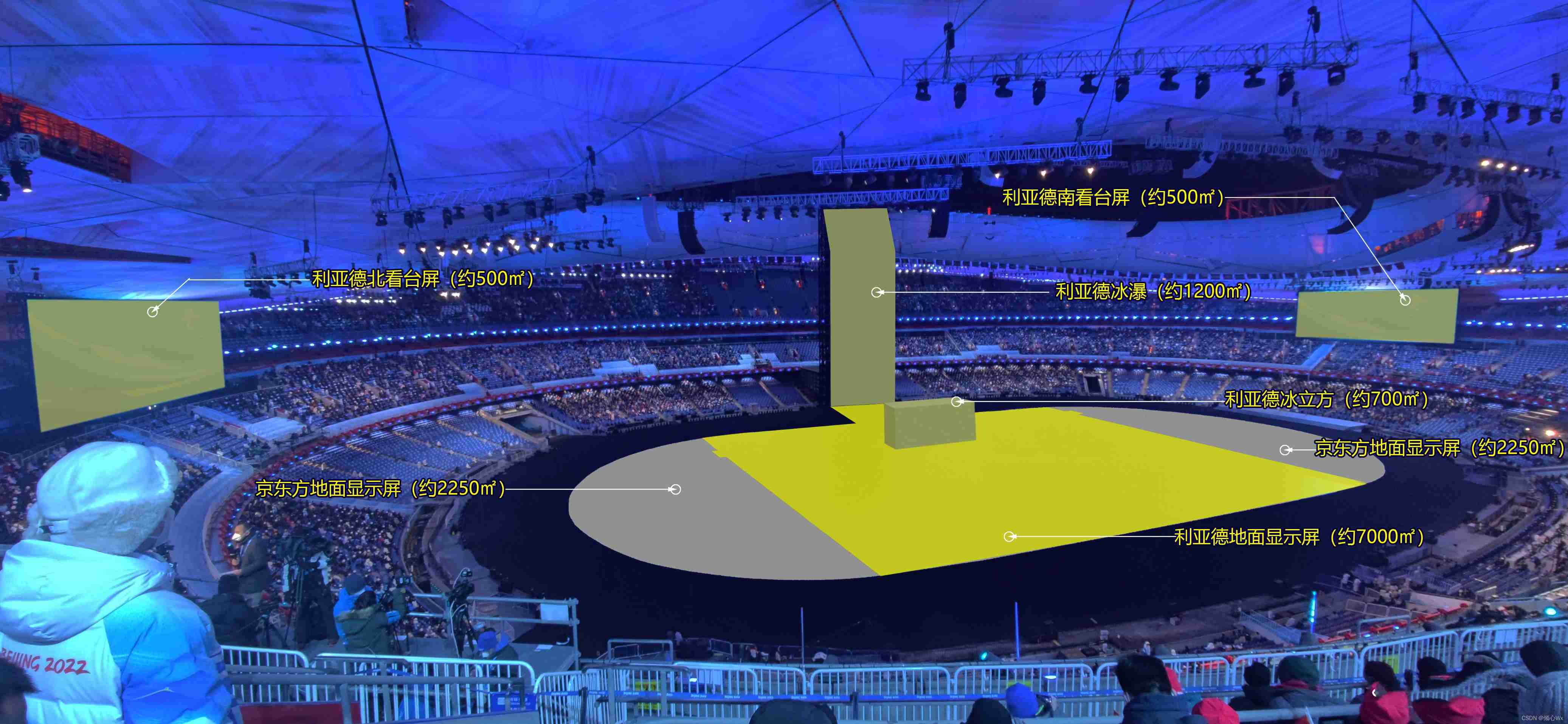

Record the opening ceremony of Beijing Winter Olympics with display equipment

Simple design description of MIC circuit of ECM mobile phone

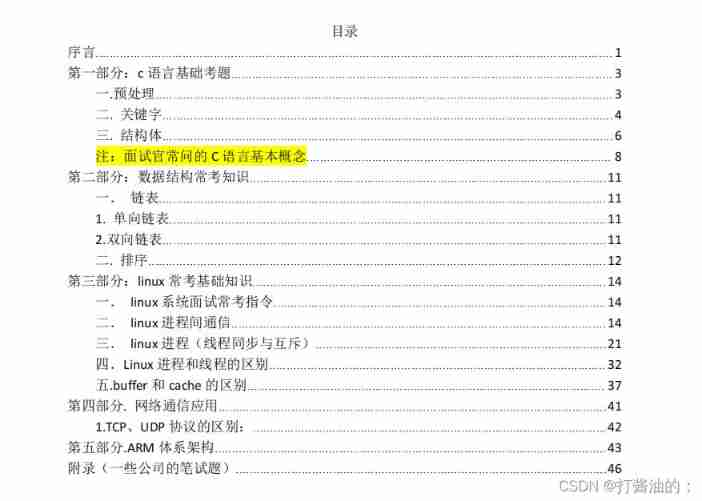

Interview catalogue

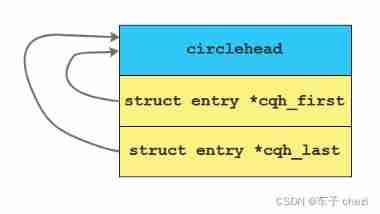

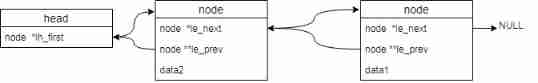

Circleq of linked list

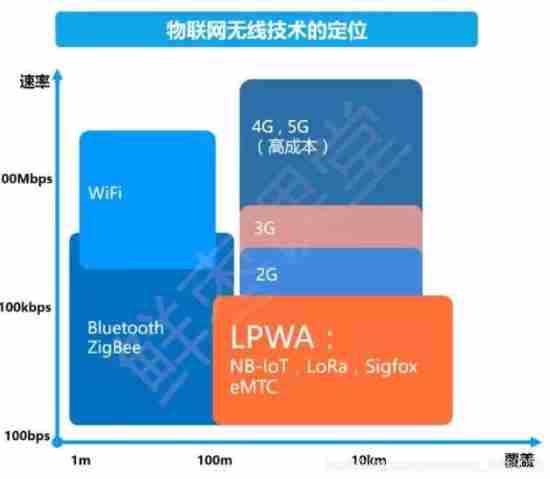

Nb-iot technical summary

List of linked lists

随机推荐

UEFI development learning 6 - creation of protocol

The research found that the cross-border e-commerce customer service system has these five functions!

Fundamentals of C language

On boost circuit

Slist of linked list

My-basic application 2: my-basic installation and operation

[tutorial 15 of trio basic from introduction to proficiency] trio free serial communication

Consul installation

Anonymous structure in C language

UEFI development learning 4 - getting to know variable services

Semiconductor devices (III) FET

Classic application of MOS transistor circuit design (1) -iic bidirectional level shift

Carrier period, electrical speed, carrier period variation

Hardware 1 -- relationship between gain and magnification

Ble encryption details

C WinForm [exit application] - practice 3

[trio basic tutorial 16 from introduction to proficiency] UDP communication test supplement

C WinForm [get file path -- traverse folder pictures] - practical exercise 6

Beijing Winter Olympics opening ceremony display equipment record 3

如何进行导电滑环选型