当前位置:网站首页>The gradient descent method and Newton method are used to calculate the open radical

The gradient descent method and Newton method are used to calculate the open radical

2022-07-27 02:04:00 【Adenialzz】

The gradient descent method and Newton method calculate the open radical

This article will introduce how not to switch , You can only use addition, subtraction, multiplication and division to realize the root sign x The solution of . It mainly introduces gradient descent and Newton method , And give C++ Realization .

Gradient descent method

Ideas / step

- Transformation problem , take x \sqrt{x} x The solution of is transformed into minimizing the objective function : L ( t ) L(t) L(t), L ( t ) = ( t 2 − x ) L(t)=(t^2-x) L(t)=(t2−x) , When L L L Tend to be 0 when , t t t It's what we want ;

- Iterative search makes L L L Smaller t t t ,

- Finally get small enough L L L At the time of the t t t , Even if have to L → 0 L\rightarrow 0 L→0, Get the results t t t

- solve L L L The minimum of , The derivative is 0 The point of

How to iterate

OK, The question now is how to iterate , So as to get as small as possible L L L, So , We want to make every iteration t t t The change of , L L L All towards smaller Direction Change a suitable step .

Determine how to iterate , It is nothing more than to determine each iteration Direction and step .

The most natural idea , We make t t t Move a small step in both directions of the government , Then compare , Which one L L L Smaller , Just move in which direction . namely :

- if L ( t + Δ t ) < L ( t ) L(t+\Delta t)<L(t) L(t+Δt)<L(t) , be t 1 = t + Δ t t_1=t+\Delta t t1=t+Δt;

- if L ( t − Δ t ) < L ( t ) L(t-\Delta t)<L(t) L(t−Δt)<L(t) , be t 1 = t − Δ t t_1=t-\Delta t t1=t−Δt;

Notice the Δ t \Delta t Δt It should be an infinite decimal greater than zero , namely 0 + 0^+ 0+ .

Next, let's make a little change to the above formula :

- if L ( t + Δ t ) − L ( t ) < 0 L(t+\Delta t)-L(t)<0 L(t+Δt)−L(t)<0 , be t 1 = t + Δ t t_1=t+\Delta t t1=t+Δt;

- if L ( t ) − L ( t − Δ t ) > 0 L(t)-L(t-\Delta t)>0 L(t)−L(t−Δt)>0 , be t 1 = t − Δ t t_1=t-\Delta t t1=t−Δt;

Write these two formulas together :

t 1 = t − L ( t + Δ t ) − L ( t ) ∣ L ( t + Δ t ) − L ( t ) ∣ ⋅ Δ t t_1=t-\frac{L(t+\Delta t)-L(t)}{|L(t+\Delta t)-L(t)|}\cdot \Delta t t1=t−∣L(t+Δt)−L(t)∣L(t+Δt)−L(t)⋅Δt

there L ( t + Δ t ) − L ( t ) ∣ L ( t + Δ t ) − L ( t ) ∣ \frac{L(t+\Delta t)-L(t)}{|L(t+\Delta t)-L(t)|} ∣L(t+Δt)−L(t)∣L(t+Δt)−L(t) Used to indicate the sign . Make a little more deformation :

t 1 = t − L ( t + Δ t ) − L ( t ) ∣ L ( t + Δ t ) − L ( t ) ∣ ⋅ Δ t = t − L ( t + Δ t ) − L ( t ) Δ t ∣ L ( t + Δ t ) − L ( t ) Δ t ∣ ⋅ Δ t = t − L ( t + Δ t ) Δ t Δ t ∣ L ( t + Δ t ) − L ( t ) Δ t ∣ = t − α L ′ ( t ) , α = Δ t ∣ L ( t + Δ t ) − L ( t ) Δ t ∣ → 0 + , L ′ ( t ) = L ( t + Δ t ) − L ( t ) Δ t \begin{align} t_1&=t-\frac{L(t+\Delta t)-L(t)}{|L(t+\Delta t)-L(t)|}\cdot \Delta t\\ &=t-\frac{\frac{L(t+\Delta t)-L(t)}{\Delta t}}{|\frac{L(t+\Delta t)-L(t)}{\Delta t}|}\cdot \Delta t\\ &=t-\frac{L(t+\Delta t)}{\Delta t}\frac{\Delta t}{|\frac{L(t+\Delta t)-L(t)}{\Delta t}|}\\ &=t-\alpha L'(t), \ \ \ \alpha=\frac{\Delta t}{|\frac{L(t+\Delta t)-L(t)}{\Delta t}|}\rightarrow 0^+,\ \ \ L'(t)=\frac{L(t+\Delta t)-L(t)}{\Delta t} \end{align} t1=t−∣L(t+Δt)−L(t)∣L(t+Δt)−L(t)⋅Δt=t−∣ΔtL(t+Δt)−L(t)∣ΔtL(t+Δt)−L(t)⋅Δt=t−ΔtL(t+Δt)∣ΔtL(t+Δt)−L(t)∣Δt=t−αL′(t), α=∣ΔtL(t+Δt)−L(t)∣Δt→0+, L′(t)=ΔtL(t+Δt)−L(t)

When a Take infinite hours , Although it must be guaranteed to decline , But the efficiency is too slow

Many functions in daily design , Relatively large steps can be allowed , such as α = 0.01 \alpha = 0.01 α=0.01. The reason is that , Ruobu grew up , Skip to the right place , bring L ( t 1 ) > L ( t 0 ) L(t1) > L(t0) L(t1)>L(t0). Another moment , It is still possible to jump back and make L ( t 2 ) < L ( t 1 ) L(t2) < L(t1) L(t2)<L(t1)

A large step size does not guarantee convergence , But most of the time, it can work well , Therefore , step α \alpha α, We call it learning rate , Usually a relatively small number is given , But not too small .

All in all , The learning rate generally needs to be manually debugged in different model tasks .

Code

float sqrt_grad_decent(float x) {

float t = x / 2;

float L = (t * t - x) * (t * t - x);

float alpha = 0.001;

while ( L > 1e-5 ) {

float delta = 2 * (t * t - x) * 2 * t;

t = t - alpha * delta;

L = (t * t - x) * (t * t - x);

printf("t=%f\n", t);

}

return t;

}

summary

Gradient descent method is by observing local , Algorithm that determines how to adjust . If the function has multiple extreme values , It may fall into local extremum , At this time, the choice of initial point directly affects the convergence result

A large step size may cross the local extreme value to some extent , But it may also cause vibration and lead to non convergence

The choice of step size , You need to find the appropriate value according to the characteristics of the function , If the derivative is particularly large , Then the step size is smaller , Derivative hours , The step length can be large . Otherwise, it is easy to cause convergence problems

There is a kind of Algorithm , You can search a suitable step in a certain range , Make each iteration more stable

Newton method 1

Gradient descent method is often used to solve Function minimum The situation of , Newton's method is often used to solve Function zero The situation of , namely L = 0 L=0 L=0 Root of time equation .

Ideas / step

- Transformation problem , Will solve x \sqrt{x} x Convert to solve L ( t ) = t 2 − x = 0 L(t)=t^2-x=0 L(t)=t2−x=0 Root of time , That is, the zero point of the function

- Iterative search t t t

How to iterate

Use a curve in t 0 t_0 t0 Tangent and x x x The intersection of the axes is taken as t 1 t_1 t1 , To approach the zero of the function . chart / Newton method

Tangent slope , It can also be expressed by derivative .

Consider two coordinate systems : Original coordinate system o 1 o1 o1 , New coordinate system o 2 o2 o2 , among o 2 o2 o2 With o 1 o1 o1 Medium ( x 1 , f ( x 1 ) ) (x_1,f(x_1)) (x1,f(x1)) Origin . It's in o 2 o2 o2 In coordinate system , The red tangent in the figure below can be represented as :

f o 2 ( x ) = f ′ ( x 1 ) x f_{o2}(x)=f'(x_1)x fo2(x)=f′(x1)x

Then the tangent line and x x x Axis intersection point :

f o 2 ( x 2 ) = f ′ ( x 1 ) ( x 2 − x 1 ) = − f ( x 1 ) f_{o2}(x_2)=f'(x_1)(x_2-x_1)=-f(x_1) fo2(x2)=f′(x1)(x2−x1)=−f(x1)

Then there are :

x 2 − x 1 = − f ( x 1 ) f ′ ( x 1 ) x 2 = x 1 − f ( x 1 ) f ′ ( x 1 ) x_2-x_1=-\frac{f(x_1)}{f'(x_1)}\\ x_2=x_1-\frac{f(x_1)}{f'(x_1)} x2−x1=−f′(x1)f(x1)x2=x1−f′(x1)f(x1)

Code

We already know the iterative method through the last section :

t 1 = t − L ( t ) L ′ ( t ) t_1=t-\frac{L(t)}{L'(t)} t1=t−L′(t)L(t)

Code :

float sqrt_newton1(float x) {

float t = x / 2;

float L = t * t - x;

while ( abs(L) > 1e-5 ) {

float dL = 2 * t;

t = t - L / dL;

L = t * t - x;

}

return t;

}

Newton method 2

Ideas

Since Newton's method is to find the zero point of a function , Can we find the zero point of the derivative of the function ? In this way, we can get the extreme value of the function .

And the objective function of gradient descent method L ( t ) = ( t 2 − x ) L(t)=(t^2-x) L(t)=(t2−x) It's the same , And the difference is , Iterations are different t 1 = t − f ′ ( t ) f ′ ′ ( t ) t_1=t-\frac{f'(t)}{f''(t)} t1=t−f′′(t)f′(t), And the step length ( Learning rate ) by 1.

Code

float sqrt_newton2(float x) {

float t = x / 2;

float L = (t * t - x) * (t * t - x);

while ( L > 1e-5 ) {

float dL = 2 * (t * t - x) * 2 * t;

float d2L = 12 * t * t - 4 * x;

t = t - dL / d2L;

L = (t * t - x) * (t * t - x);

}

return t;

}

Ref

边栏推荐

猜你喜欢

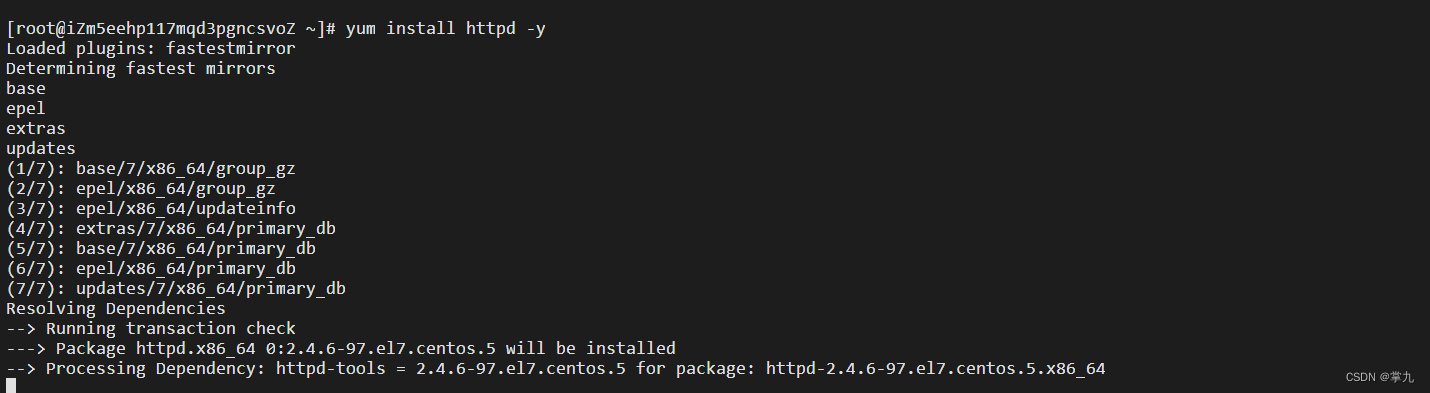

Docter的安装和基础操作

Text to image论文精读GR-GAN:逐步细化文本到图像生成 GRADUAL REFINEMENT TEXT-TO-IMAGE GENERATION

Docker高级篇之Mysql主从复制、Redis集群扩容缩容配置案例详解

![[translation] explicit and implicit batch in tensorrt](/img/17/3f00697d53ff43cd881960849da5f7.png)

[translation] explicit and implicit batch in tensorrt

Domain name analysis and DNS configuration installation

![[untitled]](/img/2a/53327cd39db7871fa780ed599420d0.png)

[untitled]

DF-GAN实验复现——复现DFGAN详细步骤 及使用MobaXtem实现远程端口到本机端口的转发查看Tensorboard

网络与VPC之动手实验

22FTP

使用ECS和OSS搭建个人网盘

随机推荐

超出隐藏显示省略号

ViTGAN:用视觉Transformer训练生成性对抗网络 Training GANs with Vision Transformers

[reprint] GPU compute capability table

Tinyint type map is received and returned as Boolean

QoS quality of service - QoS overview

introduction

【无标题】

解决方案:炼丹师养成计划 Pytorch+DeepLearning遇见的各种报错与踩坑避坑记录(二)

MySQL index

Docker高级篇之Mysql主从复制、Redis集群扩容缩容配置案例详解

IS指标复现 文本生成图像IS分数定量实验全流程复现 Inception Score定量评价实验踩坑避坑流程

FID指标复现踩坑避坑 文本生成图像FID定量实验全流程复现(Fréchet Inception Distance )定量评价实验踩坑避坑流程

指针常量与常量指针详细讲解

Connect mysql detailed graphic operations in ECs docker (all)

Codeforce problem 908 D. new year and arbitrary arrangement (probability DP)

HarmonyOS图像处理应用开发实战直播笔记

Desktop solution summary

使用ECS和OSS搭建个人网盘

MySQL主从复制与读写分离

Text to image论文精读RAT-GAN:文本到图像合成中的递归仿射变换 Recurrent Affine Transformation for Text-to-image Synthesis