当前位置:网站首页>Python Jieba segmentation (stuttering segmentation), extracting words, loading words, modifying word frequency, defining thesaurus

Python Jieba segmentation (stuttering segmentation), extracting words, loading words, modifying word frequency, defining thesaurus

2020-11-06 01:22:00 【Elementary school students in IT field】

List of articles

Reprint please indicate the source

Welcome to join Python Advance quickly QQ Group :867300100

“ stammer ” Chinese word segmentation : Do the best Python Chinese word segmentation component , Word segmentation module jieba, It is python Better use of word segmentation module , Support simplified Chinese , Traditional participle , It also supports custom Thesaurus .

jieba Word segmentation , Extract key words , Custom words .

The principle of stuttering participle

Here's the link

# One 、 Based on the stuttering segmentation, segmentation and keyword extraction

##1、jieba.cut Three patterns of participle

- jieba.cut Method accepts three input parameters : A string that requires a participle ;cut_all Parameter is used to control whether the full mode is adopted ;HMM Parameter to control whether to use

HMM Model

jieba.cut_for_search Method accepts two arguments : A string that requires a participle ; Whether to use HMM Model . This method is suitable for the search engine to build inverted index segmentation , Fine grain

The string to be segmented can be unicode or UTF-8 character string 、GBK character string . Be careful : Direct input is not recommended GBK character string , May be accidentally decoded into UTF-8

jieba.cut as well as jieba.cut_for_search The returned structure is all one iteration generator, have access to for Loop to get each word that comes after the participle (unicode), Or use

jieba.lcut as well as jieba.lcut_for_search Go straight back to list

jieba.Tokenizer(dictionary=DEFAULT_DICT) Create a new custom word splitter , It can be used to use different dictionaries at the same time .jieba.dt Is the default word splitter , All global participle correlation functions are mappings to the participle .

#coding=utf-8

import jieba,math

import jieba.analyse

'''

jieba.cut There are three main modes

# Analyze any comments from a zoo

str_text=" It's been a long time since I came to the harpinara wildlife park , In my memory, I still remember the galaxy where I organized spring outing when I was a child "

# All model cut_all=True

str_quan1=jieba.cut(str_text,cut_all=True)

print(' Full mode participle :{ %d}' % len(list(str_quan1)))

str_quan2=jieba.cut(str_text,cut_all=True)

print("/".join(str_quan2))

# print(str(str_1)) # For one generator use for Loop can get the result of participle

# str_1_len=len(list(str_1)) # Why? ? Here after the execution .join No execution , Please let me know

# Accurate model cut_all=False, The default is

str_jing1=jieba.cut(str_text,cut_all=False)

print(' Precise pattern participle :{ %d}' % len(list(str_jing1)))

str_jing2=jieba.cut(str_text,cut_all=False)

print("/".join(str_jing2))

# Search engine model cut_for_search

str_soso1=jieba.cut_for_search(str_text)

print(' Search engine word segmentation :{ %d}' % len(list(str_soso1)))

str_soso2=jieba.cut_for_search(str_text)

print("/".join(str_soso))

result

Full mode participle :{ 32}

Prefix dict has been built succesfully.

It's /TMD/ Long time / For a long time / Long time / It's been a long time / Didn't come / Ha / skin / Nala / In the wild / Wild animal / vivid / animal / zoo / 了 /// memory / In memory / still / Hours / When I was a child / When / School / organization / Spring Tour / Swim to / Of / The Milky Way / The Milky way / River system

Precise pattern participle :{ 19}

It's /TMD/ For a long time / Didn't come / Harpy / Nala / In the wild / zoo / 了 /,/ In memory / still / When I was a child / School / organization / Spring Tour / Go to / Of / The Milky way

Search engine word segmentation :{ 27}

It's /TMD/ Long time / Long time / For a long time / Didn't come / Harpy / Nala / In the wild / animal / zoo / 了 /,/ memory / In memory / still / Hours / When / When I was a child / School / organization / Spring Tour / Go to / Of / The Milky Way / River system / The Milky way 、

##2、 Keywords extraction 、 Keywords extraction **

import jieba.analyse

’analyse.extract.tags‘

'''

keywords1=jieba.analyse.extract_tags(str_text)

print(' Keywords extraction '+"/".join(keywords1))

keywords_top=jieba.analyse.extract_tags(str_text,topK=3)

print(' key word topk'+"/".join(keywords_to# Sometimes not sure how many keywords to extract , Percentage of total words available

print(' The total number of words {}'.format(len(list(jieba.cut(str_text)))))

total=len(list(jieba.cut(str_text)))

get_cnt=math.ceil(total*0.1) # Rounding up

print(' from %d Remove from %d Word '% (total,get_cnt))

keywords_top1=jieba.analyse.extract_tags(str_text,topK=get_cnt)

print(' key word topk'+"/".join(keywords_top1))''

result :

Keywords extraction TMD/ Harpy / Spring Tour / For a long time / In memory / Nala / The Milky way / Didn't come / zoo / When I was a child / In the wild / School / It's / organization / still

key word topkTMD/ Harpy / Spring Tour

The total number of words 19

from 19 Remove from 2 Word topkTMD/ Harpy 、

##3、 Add custom words and load custom thesaurus **

Add custom words

================# When dealing with ,jieba.add_word

# add_word(word,freq=None,tag=None) and del_word The dictionary can be dynamically modified in the program

# suggest_freq(segment,tune=Ture) Adjustable word frequency , Periods can or can't show

# notes : Automatic word frequency is used HMM The new word discovery function may be invalid

# '''

# str_jing2=jieba.cut(str_text,cut_all=False)

# print('add_word front :'+"/".join(str_jing2))

# # Add custom words

# jieba.add_word(' Harpinara ')

# str_jing3=jieba.cut(str_text,cut_all=False)

# print('add_word after :'+"/".join(str_jing3))

# # Correct word frequency

# jieba.suggest_freq(' Wildlife Park ',tune=True)

# str_jing4=jieba.cut(str_text,cut_all=False)

# print('suggest_freq after :'+"/".join(str_jing4))

#

result :

**add_word front :** It's /TMD/ For a long time / Didn't come / Harpy / Nala / In the wild / zoo / 了 /,/ In memory / still / When I was a child / School / organization / Spring Tour / Go to / Of / The Milky way

add_word after : It's /TMD/ For a long time / no / Come on / Harpinara / In the wild / zoo / 了 /,/ In memory / still / When I was a child / School / organization / Spring Tour / Go to / Of / The Milky way

suggest_freq after : It's /TMD/ For a long time / no / Come on / Harpinara / Wildlife Park / 了 /,/ In memory / still / When I was a child / School / organization / Spring Tour / Go to / Of / The Milky way

Load custom Thesaurus

jieba.load_userdict(filename)#filename Is the file path

Dictionary format and dict.txt equally , One word, one line , Each line is divided into three parts ( Space off ), words Word frequency ( It saves ) The part of speech ( It saves )

Order cannot be reversed , if filename Open for path or binary mode , It needs to be UTF-8

'''

# Definition : Third and fourth grade In the document

jieba.load_userdict('C:\\Users\\lenovo\\Desktop\\ Custom Thesaurus .txt')

str_load=jieba.cut(str_text,cut_all=False)

print('load_userdict after :'+"/".join(str_load))

'''

notes jieba.load_userdict Load custom thesaurus and jieba Initial thesaurus is used together with ,

however , The default initial thesaurus is placed in the installation directory ixia, If you are sure to load thesaurus for a long time , Just replace him

Use the switch function of thesaurus set_dictionary()

Can be jieba Default Thesaurus copy Go to your own directory , Adding , Or find a more complete thesaurus

'''

# Generally in python All for site-packages\jieba\dict.txt

# Simulation demonstration

jieba.set_dictionary('filename')

# After the word segmentation , If we switch the Thesaurus , At this point, the program will initialize

Our lexicon , Instead of loading the default path Thesaurus

Use :

- Install or will jieba The directory is placed in the current directory or site-packages Catalog

Algorithm :

- Efficient word graph scanning based on prefix dictionary , Generate a directed acyclic graph of all possible word formation of Chinese characters in a sentence (DAG)

- Using dynamic programming to find the maximum probability path , Find out the maximum segmentation combination based on word frequency

- For unregistered words , Based on the ability of Chinese characters to form words HMM Model , Used Viterbi Algorithm

Add a custom dictionary

- Developers can specify their own custom dictionaries , To include jieba Words not in the Thesaurus . although jieba Have the ability to recognize new words , But adding new words by yourself can guarantee a higher accuracy

- usage :jieba.load_userdict(file_name)#file_name For file class objects Or custom dictionary path

- Dictionary format : One word, one line : words , Word frequency ( Omission ), The part of speech ( Omission ), Space off , Order cannot be reversed .UTF-8 code .

Keywords extraction :

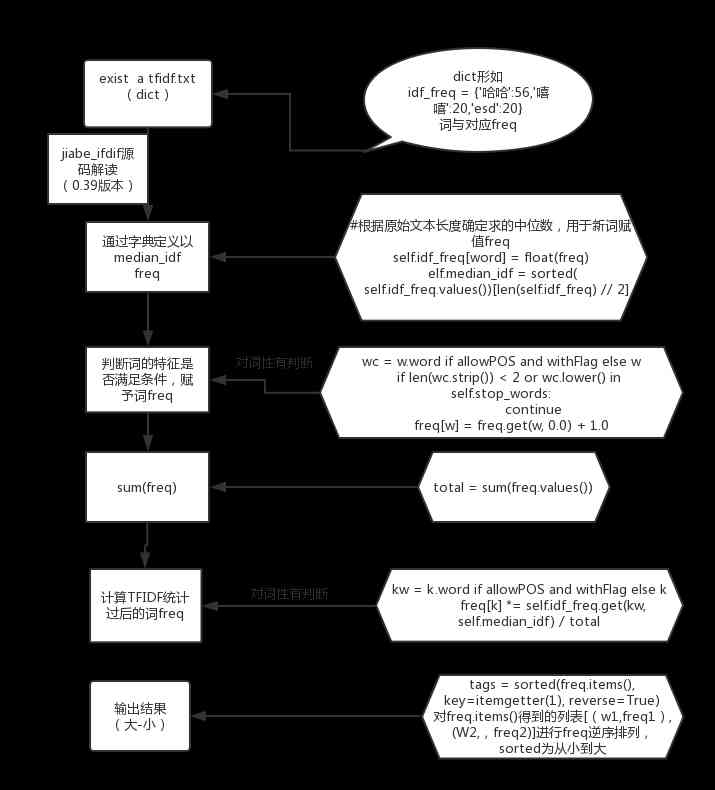

##4、 be based on TF-IDF Algorithm keyword extraction

import jieba.analyse

jieba.analyse.extract_tags(sentence, topK=20, withWeight=False, allowPOS=())

–sentence Is the text to be extracted

–topK To return a few TF/IDF The keyword with the largest weight , The default value is 20

–withWeight Whether to return the keyword weight value together , The default value is False

–allowPOS Only words with specified parts of speech are included , The default value is empty , That is, no screening

jieba.analyse.TFIDF(idf_path=None) newly build TFIDF example ,idf_path by IDF Frequency file

Keyword extraction using reverse file frequency (IDF) Text corpus can be switched to a custom corpus path

usage :jieba.analyse.set_idf_path(file_name) # file_name For custom corpus path

Stop words used in keyword extraction (Stop Words) Text corpus can be switched to a custom corpus path

usage : jieba.analyse.set_stop_words(file_name) # file_name For custom corpus path

##5、 be based on TextRank Keyword extraction of the algorithm

jieba.analyse.textrank(sentence, topK=20, withWeight=False, allowPOS=(‘ns’, ‘n’, ‘vn’, ‘v’)) Use it directly , The interface is the same , Pay attention to the default filtering part of speech .

jieba.analyse.TextRank() New custom TextRank example

– The basic idea :

1, The text of keywords to be extracted is segmented

2, Fixed window size ( The default is 5, adopt span Attribute adjustment ), The co-occurrence of words , Build a diagram

3, Calculate the nodes in the graph PageRank, Notice the undirected weighted graph

about itemgetter() Use reference connection

# Two 、 Commonly used NLP Expand your knowledge (python2.7)

##Part 1. Word frequency statistics 、 null

article = open("C:\\Users\\Luo Chen\\Desktop\\demo_long.txt", "r").read()

words = jieba.cut(article, cut_all = False)

word_freq = {}

for word in words:

if word in word_freq:

word_freq[word] += 1

else:

word_freq[word] = 1

freq_word = []

for word, freq in word_freq.items():

freq_word.append((word, freq))

freq_word.sort(key = lambda x: x[1], reverse = True)

max_number = int(raw_input(u" How many high frequency words are needed ? "))

for word, freq in freq_word[: max_number]:

print word, freq

##Part 2. Manually stop using words

Punctuation 、 Function words 、 Conjunctions are not in the statistical range .

stopwords = []

for word in open("C:\\Users\\Luo Chen\\Desktop\\stop_words.txt", "r"):

stopwords.append(word.strip())

article = open("C:\\Users\\Luo Chen\\Desktop\\demo_long.txt", "r").read()

words = jieba.cut(article, cut_all = False)

stayed_line = ""

for word in words:

if word.encode("utf-8") not in stopwords:

stayed_line += word + " "

print stayed_line

##Part 3. Combine synonyms

List the synonyms , Press down Tab The key to separate , Take the first word as the word to be displayed , The following words are used as synonyms to be replaced , Put a series of synonyms in a line .

here ,“ Beijing ”、“ The capital, ”、“ Beijing ”、“ Peiping city ”、“ The old capital ” For synonyms .

combine_dict = {}

for line in open("C:\\Users\\Luo Chen\\Desktop\\tongyici.txt", "r"):

seperate_word = line.strip().split("\t")

num = len(seperate_word)

for i in range(1, num):

combine_dict[seperate_word[i]] = seperate_word[0]

jieba.suggest_freq(" Peiping city ", tune = True)

seg_list = jieba.cut(" Beijing is the capital of China , The scenery of the capital is very beautiful , Just like the city of Peiping , I love every plant in the old capital .", cut_all = False)

f = ",".join(seg_list)

result = open("C:\\Users\\Luo Chen\\Desktop\\output.txt", "w")

result.write(f.encode("utf-8"))

result.close()

for line in open("C:\\Users\\Luo Chen\\Desktop\\output.txt", "r"):

line_1 = line.split(",")

final_sentence = ""

for word in line_1:

if word in combine_dict:

word = combine_dict[word]

final_sentence += word

else:

final_sentence += word

print final_sentence

##Part 4. Word reference rate

Main steps : participle —— Filter stop words ( A little )—— Substitute synonyms —— Calculate the probability of words appearing in the text .

origin = open("C:\\Users\\Luo Chen\\Desktop\\tijilv.txt", "r").read()

jieba.suggest_freq(" Morning mom ", tune = True)

jieba.suggest_freq(" Big black bull ", tune = True)

jieba.suggest_freq(" The capable ", tune = True)

seg_list = jieba.cut(origin, cut_all = False)

f = ",".join(seg_list)

output_1 = open("C:\\Users\\Luo Chen\\Desktop\\output_1.txt", "w")

output_1.write(f.encode("utf-8"))

output_1.close()

combine_dict = {}

for w in open("C:\\Users\\Luo Chen\\Desktop\\tongyici.txt", "r"):

w_1 = w.strip().split("\t")

num = len(w_1)

for i in range(0, num):

combine_dict[w_1[i]] = w_1[0]

seg_list_2 = ""

for i in open("C:\\Users\\Luo Chen\\Desktop\\output_1.txt", "r"):

i_1 = i.split(",")

for word in i_1:

if word in combine_dict:

word = combine_dict[word]

seg_list_2 += word

else:

seg_list_2 += word

print seg_list_2

freq_word = {}

seg_list_3 = jieba.cut(seg_list_2, cut_all = False)

for word in seg_list_3:

if word in freq_word:

freq_word[word] += 1

else:

freq_word[word] = 1

freq_word_1 = []

for word, freq in freq_word.items():

freq_word_1.append((word, freq))

freq_word_1.sort(key = lambda x: x[1], reverse = True)

for word, freq in freq_word_1:

print word, freq

total_freq = 0

for i in freq_word_1:

total_freq += i[1]

for word, freq in freq_word.items():

freq = float(freq) / float(total_freq)

print word, freq

##Part 5. Extract by part of speech

import jieba.posseg as pseg

word = pseg.cut(" Li Chen is so handsome , And it's powerful , yes “ Big black bull ”, Also a capable person , Or the team's intimate morning mother .")

for w in word:

if w.flag in ["n", "v", "x"]:

print w.word, w.flag

The following is from the Internet collection

3. Keywords extraction

be based on TF-IDF Algorithm keyword extraction

import jieba.analyse

jieba.analyse.extract_tags(sentence, topK=20, withWeight=False, allowPOS=())

sentence Is the text to be extracted

topK To return a few TF/IDF The keyword with the largest weight , The default value is 20

withWeight Whether to return the keyword weight value together , The default value is False

allowPOS Only words with specified parts of speech are included , The default value is empty , That is, no screening

Part of speech for word segmentation can be found in the blog :[ Part of speech reference ](http://blog.csdn.net/HHTNAN/article/details/77650128)

jieba.analyse.TFIDF(idf_path=None) newly build TFIDF example ,idf_path by IDF Frequency file

Code example ( Keywords extraction )

https://github.com/fxsjy/jieba/blob/master/test/extract_tags.py

Keyword extraction using reverse file frequency (IDF) Text corpus can be switched to a custom corpus path

usage : jieba.analyse.set_idf_path(file_name) # file_name For custom corpus path

Custom corpus example :https://github.com/fxsjy/jieba/blob/master/extra_dict/idf.txt.big

Usage examples :https://github.com/fxsjy/jieba/blob/master/test/extract_tags_idfpath.py

Stop words used in keyword extraction (Stop Words) Text corpus can be switched to a custom corpus path

usage : jieba.analyse.set_stop_words(file_name) # file_name For custom corpus path

Custom corpus example :https://github.com/fxsjy/jieba/blob/master/extra_dict/stop_words.txt

Usage examples :https://github.com/fxsjy/jieba/blob/master/test/extract_tags_stop_words.py

The keyword returns a keyword weight value example

Usage examples :https://github.com/fxsjy/jieba/blob/master/test/extract_tags_with_weight.py

be based on TextRank Algorithm keyword extraction

jieba.analyse.textrank(sentence, topK=20, withWeight=False, allowPOS=('ns', 'n', 'vn', 'v')) Use it directly , The interface is the same , Pay attention to the default filtering part of speech .

jieba.analyse.TextRank() New custom TextRank example

Algorithm paper : TextRank: Bringing Order into Texts

The basic idea :

The text of keywords to be extracted is segmented

Fixed window size ( The default is 5, adopt span Attribute adjustment ), The co-occurrence of words , Build a diagram

Calculate the nodes in the graph PageRank, Notice the undirected weighted graph

Examples of use :

see test/demo.py

4. Part of speech tagging

jieba.posseg.POSTokenizer(tokenizer=None) Create a new custom word splitter ,tokenizer Parameter to specify the internal use of jieba.Tokenizer Word segmentation is .jieba.posseg.dt Label the word breaker for the default part of speech .

Mark the part of speech of each word after sentence segmentation , Adopt and ictclas Compatible notation .

Usage examples

import jieba.posseg as pseg

words = pseg.cut(“ I love tian 'anmen square in Beijing ”)

for word, flag in words:

… print(’%s %s’ % (word, flag))

…

I r

Love v

Beijing ns

The tiananmen square ns

-

Parallel word segmentation

principle : After separating the target text by lines , Assign lines of text to multiple Python Parallel word segmentation , And then merge the result , In order to obtain a considerable improvement in the speed of word segmentation

be based on python Self contained multiprocessing modular , Not currently supported Windowsusage :

jieba.enable_parallel(4) # Turn on parallel word segmentation mode , The parameter is the number of parallel processes

jieba.disable_parallel() # Turn off parallel word segmentation modeExample :https://github.com/fxsjy/jieba/blob/master/test/parallel/test_file.py

experimental result : stay 4 nucleus 3.4GHz Linux On the machine , Accurate segmentation of Jin Yong's complete works , To obtain the 1MB/s The speed of , It's a single process version of 3.3 times .

Be careful : Parallel word segmentation only supports default word participators jieba.dt and jieba.posseg.dt.

-

Tokenize: Return the beginning and ending positions of words in the original text

Be careful , Input parameters only accept unicode

The default mode

result = jieba.tokenize(u’ Yonghe Garment Accessories Co., Ltd ’)

for tk in result:

print(“word %s\t\t start: %d \t\t end:%d” % (tk[0],tk[1],tk[2]))

word Yonghe start: 0 end:2

word clothing start: 2 end:4

word Ornaments start: 4 end:6

word Co., LTD. start: 6 end:10

search mode

result = jieba.tokenize(u’ Yonghe Garment Accessories Co., Ltd ’, mode=‘search’)

for tk in result:

print(“word %s\t\t start: %d \t\t end:%d” % (tk[0],tk[1],tk[2]))

word Yonghe start: 0 end:2

word clothing start: 2 end:4

word Ornaments start: 4 end:6

word Co., LTD. start: 6 end:8

word company start: 8 end:10

word Co., LTD. start: 6 end:10

-

ChineseAnalyzer for Whoosh Search engine

quote : from jieba.analyse import ChineseAnalyzer

Usage examples :https://github.com/fxsjy/jieba/blob/master/test/test_whoosh.py

-

Command line participle

Examples of use :python -m jieba news.txt > cut_result.txt

Command line options ( translate ):

Use : python -m jieba [options] filename

Stuttering command line interface .

Fixed parameter :

filename Input file

Optional parameters :

-h, --help Display this help message and exit

-d [DELIM], --delimiter [DELIM]

Use DELIM Separate words , Instead of using the default ’ / '.

If not specified DELIM, Use a space to separate .

-p [DELIM], --pos [DELIM]

Enable part of speech tagging ; If specified DELIM, Between words and parts of speech

Separate it , Otherwise, use _ Separate

-D DICT, --dict DICT Use DICT Instead of the default dictionary

-u USER_DICT, --user-dict USER_DICT

Use USER_DICT As an additional dictionary , Use with default or custom dictionaries

-a, --cut-all Full mode participle ( Part of speech tagging is not supported )

-n, --no-hmm Don't use the hidden Markov model

-q, --quiet Do not output load information to STDERR

-V, --version Display version information and exit

If no filename is specified , Use standard input .

–help Options output :

$> python -m jieba --help

Jieba command line interface.

positional arguments:

filename input file

optional arguments:

-h, --help show this help message and exit

-d [DELIM], --delimiter [DELIM]

use DELIM instead of ’ / ’ for word delimiter; or a

space if it is used without DELIM

-p [DELIM], --pos [DELIM]

enable POS tagging; if DELIM is specified, use DELIM

instead of ‘_’ for POS delimiter

-D DICT, --dict DICT use DICT as dictionary

-u USER_DICT, --user-dict USER_DICT

use USER_DICT together with the default dictionary or

DICT (if specified)

-a, --cut-all full pattern cutting (ignored with POS tagging)

-n, --no-hmm don’t use the Hidden Markov Model

-q, --quiet don’t print loading messages to stderr

-V, --version show program’s version number and exit

If no filename specified, use STDIN instead.

Lazy loading mechanism

jieba Use delay loading ,import jieba and jieba.Tokenizer() Does not immediately trigger dictionary loading , Start loading dictionaries as soon as necessary to build prefix dictionaries . If you want to start by hand jieba, You can also initialize it manually .

import jieba

jieba.initialize() # Manual initialization ( Optional )

stay 0.28 Previous versions cannot specify the path to the main dictionary , With the delayed loading mechanism , You can change the path of the main dictionary :

jieba.set_dictionary(‘data/dict.txt.big’)

Example : https://github.com/fxsjy/jieba/blob/master/test/test_change_dictpath.py

Principle reference :https://blog.csdn.net/u012558945/article/details/79918771

reference :http://blog.csdn.net/xiaoxiangzi222/article/details/53483931

# About stuttering installation failure

First try pip install jieba And conda install jieba To solve the problem . If many attempts fail , So it's not because of stuttering python The official library of ,https://www.lfd.uci.edu/~gohlke/pythonlibs/ At present, there is no whl file 、 Need to download https://pypi.org/project/jieba/#files.jie.zip file , And then decompress it ,

After decompressing, go to the directory of the extracted file ,

Click on the bottom left corner of the computer desktop 【 Start 】—》 function —》 Input : cmd —》 Switch to Jieba directory , such as ,D:\Download\Jieba, Use the following commands in turn :

C:\Users\Administrator>D:

D:>cd D:\Download\jieba-0.35

Observe whether it is under the stuttering list jieba Path , If confirmed, execute , Sometimes you need to enter a directory , After performing

D:\Download\jieba-0.35>python setup.py install

Successful installation .

版权声明

本文为[Elementary school students in IT field]所创,转载请带上原文链接,感谢

边栏推荐

- Jmeter——ForEach Controller&Loop Controller

- 前端都应懂的入门基础-github基础

- PN8162 20W PD快充芯片,PD快充充电器方案

- html

- ES6学习笔记(五):轻松了解ES6的内置扩展对象

- I'm afraid that the spread sequence calculation of arbitrage strategy is not as simple as you think

- 全球疫情加速互联网企业转型,区块链会是解药吗?

- Filecoin的经济模型与未来价值是如何支撑FIL币价格破千的

- Troubleshooting and summary of JVM Metaspace memory overflow

- 向北京集结!OpenI/O 2020启智开发者大会进入倒计时

猜你喜欢

Arrangement of basic knowledge points

PN8162 20W PD快充芯片,PD快充充电器方案

![[JMeter] two ways to realize interface Association: regular representation extractor and JSON extractor](/img/cc/17b647d403c7a1c8deb581dcbbfc2f.jpg)

[JMeter] two ways to realize interface Association: regular representation extractor and JSON extractor

中国提出的AI方法影响越来越大,天大等从大量文献中挖掘AI发展规律

多机器人行情共享解决方案

hadoop 命令总结

What is the side effect free method? How to name it? - Mario

Just now, I popularized two unique skills of login to Xuemei

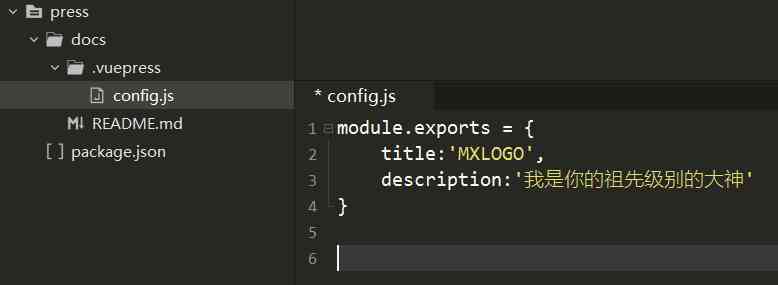

Use of vuepress

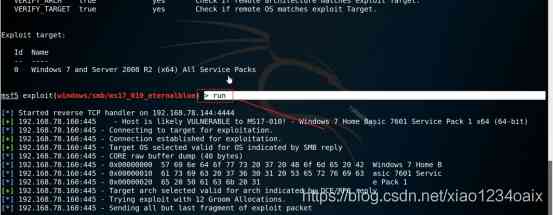

Network security engineer Demo: the original * * is to get your computer administrator rights! 【***】

随机推荐

Python自动化测试学习哪些知识?

Network security engineer Demo: the original * * is to get your computer administrator rights! 【***】

It's so embarrassing, fans broke ten thousand, used for a year!

Asp.Net Core learning notes: Introduction

Polkadot series (2) -- detailed explanation of mixed consensus

[event center azure event hub] interpretation of error information found in event hub logs

What is the difference between data scientists and machine learning engineers? - kdnuggets

High availability cluster deployment of jumpserver: (6) deployment of SSH agent module Koko and implementation of system service management

I'm afraid that the spread sequence calculation of arbitrage strategy is not as simple as you think

中小微企业选择共享办公室怎么样?

Group count - word length

Did you blog today?

人工智能学什么课程?它将替代人类工作?

至联云分享:IPFS/Filecoin值不值得投资?

采购供应商系统是什么?采购供应商管理平台解决方案

“颜值经济”的野望:华熙生物净利率六连降,收购案遭上交所问询

Existence judgment in structured data

Analysis of react high order components

PHP应用对接Justswap专用开发包【JustSwap.PHP】

每个前端工程师都应该懂的前端性能优化总结: