MySQL SQL optimization notes (continuously updated)

2022-07-27 07:11:00 【Tie Zhu】

I. Preface

These are notes on the MySQL optimizations I have run into during development. I record them for my own convenience: every time I finish writing a SQL statement, or before I start one, I read through them. Everything below comes from problems I actually hit and optimized at work. There were countless pitfalls along the way, and maybe that is the only way to get stronger.

II. Main text

1、 Do not apply functions to indexed fields. For example:

$start_time and $lt_end_time are timestamps.

log_time is stored as a formatted date.

select xxx from xx where 1 and unix_timestamp(log_time) between {$start_time} and {$lt_end_time} and drr_weekly = 0

Here the log_time field itself is converted. The SQL is short, but it cannot use the index on log_time, so it executes slowly.

After modification:

select xxx from xx where 1 and log_time between from_unixtime({$start_time}) and from_unixtime({$lt_end_time}) and drr_weekly = 0

Now the index on log_time is used and the SQL runs significantly faster.

The first statement applies a function to the indexed field, so no index is used. Keep this in mind. If you are not familiar with the database, check its structure first and find out which fields have indexes; then you can write SQL more accurately.

2、 For every query with where conditions, make sure the values used in the where fields are not empty. This check should always be there; otherwise the SQL will report an error. Also, sanitize the values read from the database as much as possible: stored data is sometimes not standardized, so handling non-standard values keeps the program stable.

3、 With MySQL's default case-insensitive (_ci) collations, string comparisons in where conditions ignore case. If a query needs to be case sensitive, add an extra modifier to the comparison (binary, for example):

Reference: https://www.cnblogs.com/yeahwell/p/6904310.html
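A minimal sketch of two common ways to force a case-sensitive comparison. The table demo_user and its data are made up for illustration, and the sketch assumes the column uses a case-insensitive collation such as utf8_general_ci:

```sql
-- Hypothetical table; the name column uses a case-insensitive collation.
CREATE TABLE demo_user (
    id   INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    name VARCHAR(32)  NOT NULL
) DEFAULT CHARSET = utf8 COLLATE = utf8_general_ci;

INSERT INTO demo_user (name) VALUES ('Tom'), ('tom');

-- Default behaviour: case is ignored, so both rows match.
SELECT id, name FROM demo_user WHERE name = 'tom';

-- Option 1: compare as a binary string for this query only.
-- Only the row 'tom' matches.
SELECT id, name FROM demo_user WHERE name = BINARY 'tom';

-- Option 2: make the column itself case sensitive with a _bin collation,
-- so every later comparison on it is case sensitive.
ALTER TABLE demo_user
    MODIFY name VARCHAR(32) CHARACTER SET utf8 COLLATE utf8_bin NOT NULL;
```

Option 1 is handy for a one-off query; option 2 fits columns that should always be case sensitive.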

4、 When creating a new table, be sure to add indexes on the fields used for joins, such as user_id. Fields that often appear in where conditions should get an index too. Adding indexes up front saves a lot of trouble later.
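As a sketch of what this looks like in practice, the DDL below declares the indexes together with the table. The table demo_order and its columns are hypothetical:

```sql
-- Hypothetical order table: user_id is used for joins,
-- status often appears in where conditions.
CREATE TABLE demo_order (
    id      INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    user_id INT UNSIGNED NOT NULL,
    status  TINYINT      NOT NULL DEFAULT 0,
    KEY idx_user_id (user_id),
    KEY idx_status (status)
) ENGINE = InnoDB;

-- An index that was forgotten can still be added later,
-- but on a large table this is a much heavier operation.
ALTER TABLE demo_order ADD INDEX idx_user_status (user_id, status);
```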

5、 Never put values obtained from get and post directly into SQL. Parameters must be escaped; otherwise the SQL may break and is open to SQL injection. Convert each value to its expected type: for an int, call intval() on it directly; for a string, escape it with the escape function addslashes() (escaping with the database driver's own function, or binding parameters through a prepared statement, is more reliable than addslashes()). Also check whether the value is empty; if it is, there is no need to run the query at all.

For string escaping, see: https://blog.csdn.net/LJFPHP/article/details/86549582
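Parameter binding can be shown in plain MySQL with a server-side prepared statement. This is only a sketch: demo_account, find_account and @username are hypothetical names, and in PHP the same idea is normally reached through the prepared statements of PDO or mysqli:

```sql
-- Hypothetical table standing in for a real one.
CREATE TABLE demo_account (
    id       INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    username VARCHAR(32)  NOT NULL,
    KEY idx_username (username)
);

-- The statement text is fixed; the ? is a placeholder for the value.
PREPARE find_account FROM
    'SELECT id, username FROM demo_account WHERE username = ?';

-- Stands in for a raw get/post value that contains quotes.
SET @username = 'x'' OR ''1''=''1';

-- The value is passed as data, so it cannot change the structure of the SQL.
EXECUTE find_account USING @username;

DEALLOCATE PREPARE find_account;
```

Because the value never becomes part of the SQL text, the quote characters in it are compared literally instead of being parsed.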

6、 Before creating a table, check the encoding (character set and collation) of the project's existing tables, then keep the new table consistent with them.

For example, suppose the current database uses utf8 (utf8_general_ci). If we pick utf8_unicode_ci for a field or table when creating it, then under MySQL 5.7 strict mode an error is reported when inserting: 1267 Illegal mix of collations (utf8_general_ci,IMPLICIT) and (utf8_unicode_ci,IMPLICIT) for operation

To change the encoding of a table: alter table `table_name` convert to character set utf8
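A short sketch of how the existing encoding can be checked before creating a table. The table demo_new_table is hypothetical, and utf8 / utf8_general_ci are just the values from the example above:

```sql
-- The Collation column shows the default collation of each existing table
-- in the current database.
SHOW TABLE STATUS;

-- Create the new table with the same character set and collation
-- as the existing tables (assumed here to be utf8 / utf8_general_ci).
CREATE TABLE demo_new_table (
    id   INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    name VARCHAR(64)  NOT NULL
) DEFAULT CHARSET = utf8 COLLATE = utf8_general_ci;

-- The Collation column here shows the collation of each individual column.
SHOW FULL COLUMNS FROM demo_new_table;
```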

7、 Each time you finish a feature, run explain on the complex SQL to check whether an index is used and what the Extra column reports, and decide whether it needs optimizing. Generally, an index should be used and type should reach at least range. If Extra contains Using filesort or Using temporary, the SQL needs to be optimized.

For more on explain, see: https://www.cnblogs.com/yycc/p/7338894.html
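A sketch of the check described above, on a hypothetical table demo_log. The actual plan depends on the MySQL version and the data, so no output is shown; the comments only say which columns to read:

```sql
-- Hypothetical log table with an index on log_time.
CREATE TABLE demo_log (
    id       INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    user_id  INT UNSIGNED NOT NULL,
    log_time DATETIME     NOT NULL,
    KEY idx_log_time (log_time)
);

-- Columns worth reading in the result:
--   type  : access type; range or better is the goal, ALL is a full table scan
--   key   : the index actually chosen (NULL means none)
--   rows  : estimated number of rows examined
--   Extra : watch for "Using filesort" and "Using temporary"
EXPLAIN
SELECT user_id, COUNT(*) AS cnt
FROM demo_log
WHERE log_time BETWEEN '2019-05-01 00:00:00' AND '2019-05-31 23:59:59'
GROUP BY user_id
ORDER BY cnt DESC;
```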

============================ Update: 2019-05-10 13:00 ==========================

8、 Know the types of the table's fields and the types of the parameters passed by get/post. In SQL, the parameter type should match the field type; if they differ, the index cannot be used. For example, with a string field, version = 2.0 does not use the index; after changing it to version = '2.0' the types match and the index is used.
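The difference can be seen with explain on a small hypothetical table, demo_app. As a sketch:

```sql
-- Hypothetical table with an indexed string column.
CREATE TABLE demo_app (
    id      INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    version VARCHAR(16)  NOT NULL,
    KEY idx_version (version)
);

INSERT INTO demo_app (version) VALUES ('2.0'), ('2.00'), ('2');

-- Number compared with a string column: every stored value is converted
-- to a number first, so idx_version cannot be used for a lookup.
-- It also matches all three rows, since '2.0', '2.00' and '2' all convert to 2.
EXPLAIN SELECT id FROM demo_app WHERE version = 2.0;

-- String compared with a string column: idx_version can be used,
-- and only the row '2.0' matches.
EXPLAIN SELECT id FROM demo_app WHERE version = '2.0';
```

Besides the index problem, the mismatched comparison can silently return more rows than intended.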

9、 A follow-up to item 7 above

Sometimes the local database holds very little data, and testing with explain is meaningless: when a table is small, MySQL may not choose the index at all, and only picks it once the data reaches a certain volume. So insert some data into the database before testing.

Insert test data (10,000 rows in a loop, for convenient querying):

/*
for ($i = 0; $i < 10000; $i++) {
    $sql = "INSERT INTO `xxxx` ( `buyer_id`, `xxxx`, `xxxx`, `xxxxx`, `transaction_id`, `xxxx`, `pay_money`, `order_money`, `xxxx`, `pay_date`, `remark`, `order_ip`, `xxxx`, `transaction_type`, `server_unique_flag`, `order_time`, `pay_time`) VALUES
        ( 1, 6, 6, '2219F75724AE60AC', '7A1E0AA39766D615', 1, 99.9000, 99.9000, '2019-03-24 23:35:08', '2019-03-25 23:35:08', 'The first record', '192.168.1.223', 'cn', 404, '1', 1553002641, 1553002741)";
    $data = G_FUNCTION::getProjectDb("slave")->createCommand($sql)->execute();
}
*/

10、 Large fields need to be considered when designing tables. Fields that store large data should be split off into another table, or the data stored in them should be trimmed down. In the InnoDB engine (with the COMPACT row format), for large fields such as text and blob, only the first 768 bytes are stored in the data page and the rest goes to overflow pages, so querying these fields touches many pages and hurts SQL performance. The 768-byte prefix exists to make prefix indexes possible; anything beyond it is stored in an extra page, even if it is only one byte over. So the shorter the columns, the better (if varchar is enough, do not use text).

This blog explains the principle: http://www.cnblogs.com/chenpingzhao/p/6719258.html

Why this slows SQL down: MySQL I/O works in units of pages, so unneeded data (the large fields) is read into memory together with the data that is actually needed. Large fields take far more memory than small ones, which lowers memory utilization and causes more random reads. In short, the bottleneck is that large fields stored in data pages waste memory and bring too many random reads.

I record this because many of the slow-SQL cases described online are caused by large fields like these, so it is worth optimizing when the table is created.

Further reading: for an introduction to InnoDB page storage, see: https://blog.csdn.net/voidccc/article/details/40077329
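One way to split a large field into its own table, as a sketch. The tables demo_article and demo_article_body are hypothetical:

```sql
-- Narrow table: small, frequently queried columns only.
CREATE TABLE demo_article (
    id         INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    title      VARCHAR(128) NOT NULL,
    created_at DATETIME     NOT NULL
) ENGINE = InnoDB;

-- Wide table: the large field lives here, one row per article.
CREATE TABLE demo_article_body (
    article_id INT UNSIGNED NOT NULL PRIMARY KEY,
    body       TEXT         NOT NULL
) ENGINE = InnoDB;

-- List pages read only the narrow table, so its pages hold many rows.
SELECT id, title, created_at
FROM demo_article
ORDER BY id DESC
LIMIT 20;

-- The large field is read only when a single article is opened.
SELECT a.title, b.body
FROM demo_article AS a
JOIN demo_article_body AS b ON b.article_id = a.id
WHERE a.id = 1;
```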

11、 Create a new table whose structure and content come from the data queried from another table

create table table2 select * from table1;
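One thing to watch: create table ... select copies the columns and the data, but not the indexes of the source table. The sketch below shows a variant that keeps them; table1's columns and the name table3 are hypothetical:

```sql
-- Hypothetical source table with a primary key and a secondary index.
CREATE TABLE table1 (
    id      INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    user_id INT UNSIGNED NOT NULL,
    KEY idx_user_id (user_id)
);

-- Copies columns and rows, but table2 has no primary key and no idx_user_id.
CREATE TABLE table2 SELECT * FROM table1;

-- Copies the full definition, indexes included, then fills in the rows.
CREATE TABLE table3 LIKE table1;
INSERT INTO table3 SELECT * FROM table1;

-- Compare the two definitions.
SHOW CREATE TABLE table2;
SHOW CREATE TABLE table3;
```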

12、 InnoDB uses a clustered index: the primary key is organized into a B+ tree and the row data is stored in the leaf nodes. A lookup on the primary key with a condition such as "where id = 14" follows the B+ tree search algorithm to the matching leaf node and gets the row data there. (A primary key lookup searches one index.)

A search on the Name column takes two steps. First, search the secondary index B+ tree for Name and read the corresponding primary key from its leaf node. Second, use that primary key to run another B+ tree search in the primary index, reaching the leaf node that holds the whole row. (A lookup on other fields traverses two B+ trees.)
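The one-step and two-step lookups can be sketched on a hypothetical table, demo_person. The last query goes slightly beyond the text above: it shows that the second step can be skipped when the secondary index already contains every requested column (a covering index):

```sql
CREATE TABLE demo_person (
    id   INT UNSIGNED     NOT NULL PRIMARY KEY,
    name VARCHAR(32)      NOT NULL,
    age  TINYINT UNSIGNED NOT NULL,
    KEY idx_name (name)
) ENGINE = InnoDB;

-- One B+ tree search: the clustered index leaf holds the whole row.
SELECT * FROM demo_person WHERE id = 14;

-- Two B+ tree searches: idx_name yields the primary key,
-- then the clustered index is searched for the full row.
SELECT * FROM demo_person WHERE name = 'Tom';

-- One search again: idx_name stores name plus the primary key id,
-- so no lookup in the clustered index is needed.
-- EXPLAIN reports this as "Using index" in the Extra column.
SELECT id, name FROM demo_person WHERE name = 'Tom';
```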

13、 Must the order by field be unique?

Answer: yes. If the order by field is not unique, for example the sort field is time, then rows that share the same time have no fixed order, and data may go missing (for example between pages of a limit query). So in this case, write the SQL as: xxxx order by time desc,id desc;

Also note: order by time,id desc; is equivalent to order by time asc,id desc, because sorting is ascending by default.

If this is unclear, see: https://blog.csdn.net/tsxw24/article/details/44994835
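A sketch of the pagination case, using a hypothetical table demo_event. The page size of 10 is arbitrary:

```sql
CREATE TABLE demo_event (
    id     INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    `time` DATETIME     NOT NULL,
    KEY idx_time_id (`time`, id)
);

-- Risky: rows with the same time can come back in a different order
-- on each query, so a row may show up on two pages or on none.
SELECT id, `time` FROM demo_event ORDER BY `time` DESC LIMIT 0, 10;
SELECT id, `time` FROM demo_event ORDER BY `time` DESC LIMIT 10, 10;

-- Stable: id breaks the ties, so the overall order is fully determined.
SELECT id, `time` FROM demo_event ORDER BY `time` DESC, id DESC LIMIT 0, 10;
SELECT id, `time` FROM demo_event ORDER BY `time` DESC, id DESC LIMIT 10, 10;
```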

============================ Update: 2019-06-02 20:00 ==========================

1、 For SQL executed inside a loop: if you really cannot move the SQL out of the loop, check the loop condition first and run the logic only for the rows that meet it, skipping the rest, so that no iteration is wasted. For example, if you start by looping over 10 records and some of them do not need processing, the work spent on those is wasted performance.

2、 A subquery without a where condition is a full scan, which is very expensive, so copy the outer where conditions into the subquery. Keep the original outer where conditions as well; otherwise duplicate values in the key field can produce duplicate rows.

3、 group by can also group by multiple fields, which sometimes works wonders. Finally, check that the query result is accurate: when using group by, if a selected field is neither a grouping field nor aggregated, its value is picked arbitrarily; it is not the maximum or minimum you might expect.

4、 A single SQL statement is generally faster than several. A single statement is also easier to optimize and maintain, so before defining a function, first try to do the complex operation in one statement. Several statements inevitably mean a loop, and with large data volumes loops are even more costly.

5、 If the amount of data to process is large, start with limit 0,500 so that each query handles only 500 rows, then continue with limit 500,500 and so on.

6、 To test in where whether a field is null, is_master = null finds nothing; it must be written as is_master is null.

7、 When the SQL contains a subquery, put order by and limit inside the subquery to reduce the amount of data it returns. Joining the main table against the subquery's result set is then more efficient.
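The null comparison from item 6 above can be tried directly. A small sketch on a hypothetical table, demo_server:

```sql
CREATE TABLE demo_server (
    id        INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    is_master TINYINT      NULL
);

INSERT INTO demo_server (is_master) VALUES (1), (0), (NULL);

-- "= NULL" evaluates to NULL, never to true, so no row is returned.
SELECT id FROM demo_server WHERE is_master = NULL;

-- "IS NULL" returns the one row whose is_master is NULL.
SELECT id FROM demo_server WHERE is_master IS NULL;

-- MySQL's null-safe equality operator behaves like IS NULL here.
SELECT id FROM demo_server WHERE is_master <=> NULL;
```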

======================== Update: 2019-12-01 =============================

1、 When building a table, create fields such as created_at and updated_at from the start. As the business grows, it is normal to need business operations based on creation time and modification time; adding the fields later is troublesome and may cause other problems. Take the post time on CSDN blogs as an example: every time a post is updated, its publication time becomes the current time. Why? Because only the modification time was recorded, not the creation time...
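A sketch of letting MySQL maintain both fields. The table demo_post is hypothetical, and DATETIME columns with these defaults assume MySQL 5.6.5 or later:

```sql
CREATE TABLE demo_post (
    id         INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    title      VARCHAR(128) NOT NULL,
    -- Set once when the row is inserted.
    created_at DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP,
    -- Set on insert and refreshed automatically on every update.
    updated_at DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP
                        ON UPDATE CURRENT_TIMESTAMP
);

INSERT INTO demo_post (title) VALUES ('first draft');

-- Only title is written; updated_at changes, created_at stays as it was.
UPDATE demo_post SET title = 'final version' WHERE id = 1;

SELECT id, title, created_at, updated_at FROM demo_post;
```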

2、 When the SQL uses an in(str) condition, check that str actually has a value. Without the check, an empty str makes the SQL report an error. Even if business logic makes an empty str very unlikely, this check should still be added.

3、 Make good use of MySQL functions. For example, when a field may be null and we want it to default to 1, use:

coalesce(f.city_level,1): when city_level has a value, that value is shown as usual; when it is null, the result is 1.

What the function does: coalesce(a,b,c) returns the first non-null value among its arguments a, b, c. For example, coalesce(1,null,null) returns 1 and coalesce(null,null,2) returns 2.

Where it applies: in a left or right join, unmatched rows come back with null fields. This function gives those fields a default value.
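The left join case can be sketched as follows. The tables demo_shop and demo_city and their data are hypothetical; the alias f follows the expression above:

```sql
CREATE TABLE demo_shop (
    id      INT UNSIGNED NOT NULL PRIMARY KEY,
    city_id INT UNSIGNED NOT NULL
);

CREATE TABLE demo_city (
    id         INT UNSIGNED NOT NULL PRIMARY KEY,
    city_level TINYINT      NOT NULL
);

INSERT INTO demo_shop (id, city_id) VALUES (1, 10), (2, 99);
INSERT INTO demo_city (id, city_level) VALUES (10, 3);

-- Shop 1 matches city 10 and gets city_level 3.
-- Shop 2 has no matching city, so f.city_level is NULL and coalesce returns 1.
SELECT s.id, COALESCE(f.city_level, 1) AS city_level
FROM demo_shop AS s
LEFT JOIN demo_city AS f ON f.id = s.city_id;
```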

4、 Inserting in a loop: batch insertion by splicing the SQL

$sqlpre = "INSERT IGNORE INTO xxx(xxx,xxx) VALUES ";
$sqlVal = "";
$i = 0;
foreach ($arrDevice as $k => $v) {
    // Placeholder values; real values must be escaped before being spliced in.
    $sqlVal .= "('xxx','xxx'),";
    $i++;
    if ($i >= 500) {
        // Strip the trailing comma and insert the batch of 500 rows.
        $sql = $sqlpre . substr($sqlVal, 0, -1);
        Yii::$app->db->createCommand($sql)->execute();
        $sqlVal = '';
        $i = 0;
    }
}
// Insert whatever is left over from the last, incomplete batch.
if ($sqlVal !== '') {
    $sql = $sqlpre . substr($sqlVal, 0, -1);
    Yii::$app->db->createCommand($sql)->execute();
}

This is for batch insertion inside a loop: keep a counter and insert once every 500 rows, instead of paying the cost of inserting one row at a time. Rows left over after the loop are inserted in a final statement. The 500 here is only an example; for how many rows to insert each time, see my other blog post: MySQL bulk insert: how many rows per insert is most efficient?

======================== Update: 2020-01-20 =============================

1、 Use INT UNSIGNED to store IPv4 addresses, not char(15)

2、 Use varchar(20) to store phone numbers, not an integer type

Explanation:

(1) Country codes may contain characters such as +, - and (), for example +86

(2) Phone numbers are never used in arithmetic

(3) varchar supports fuzzy queries, for example like '138%'

3、 Keep the number of indexes on a single table within 5. Under high-concurrency internet workloads, too many indexes hurt write performance

4、 A composite index should not contain more than 5 fields

Explanation: if 5 fields cannot narrow the row range substantially, there is probably something wrong with the design

Source: the MySQL "military regulations" for building tables
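Rules 1 and 2 above can be sketched with MySQL's built-in INET_ATON() and INET_NTOA() functions. The table demo_login and its data are hypothetical:

```sql
CREATE TABLE demo_login (
    id    INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,
    ip    INT UNSIGNED NOT NULL,   -- IPv4 stored as a 4-byte integer
    phone VARCHAR(20)  NOT NULL,   -- keeps "+", "-" and "()" intact
    KEY idx_phone (phone)
);

-- INET_ATON() converts the dotted string to an integer on the way in.
INSERT INTO demo_login (ip, phone)
VALUES (INET_ATON('192.168.1.223'), '+8613800000000');

-- INET_NTOA() converts it back for display; the prefix match on phone
-- is the kind of fuzzy query an integer column could not support.
SELECT INET_NTOA(ip) AS ip, phone
FROM demo_login
WHERE phone LIKE '+86138%';
```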

Every time I update this post, I review these optimization records from the beginning, and every time I learn something new. It's amazing!

end

边栏推荐

- 从技术原理看元宇宙的可能性:Omniverse如何“造”火星

- deepsort源码解读(一)

- (转帖)eureka、consul、nacos的对比2

- Matlab drawing (ultra detailed)

- Details of cross entropy loss function in pytorch

- AI: play games in your spare time - earn it a small goal - [Alibaba security × ICDM 2022] large scale e-commerce map of risk commodity inspection competition

- vscode运行命令报错:标记“&&”不是此版本中的有效语句分隔符。

- Leetcode series (I): buying and selling stocks

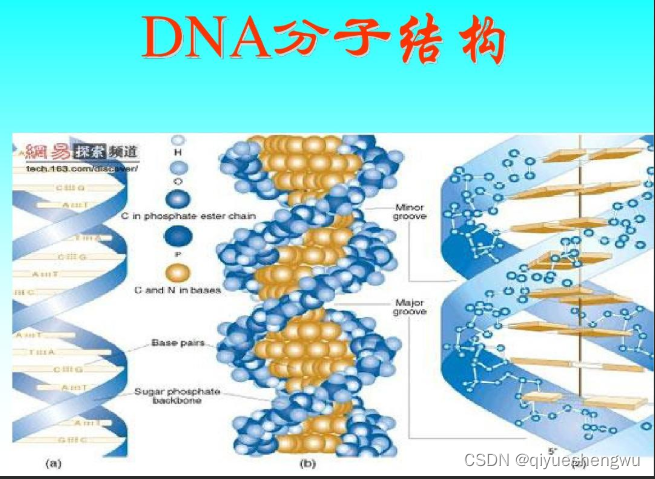

- DNA modified zinc oxide | DNA modified gold nanoparticles | DNA coupled modified carbon nanomaterials

- DNA科研实验应用|环糊精修饰核酸CD-RNA/DNA|环糊精核酸探针/量子点核酸探针

猜你喜欢

Working principle analysis of deepsort

DNA科研实验应用|环糊精修饰核酸CD-RNA/DNA|环糊精核酸探针/量子点核酸探针

Reasoning speed of model

Why can cross entropy loss be used to characterize loss

脱氧核糖核酸DNA修饰氧化锌|DNA修饰纳米金颗粒|DNA偶联修饰碳纳米材料

DNA修饰贵金属纳米颗粒|脱氧核糖核酸DNA修饰纳米金(科研级)

Mysql database

Analysis of online and offline integration mode of o2o E-commerce

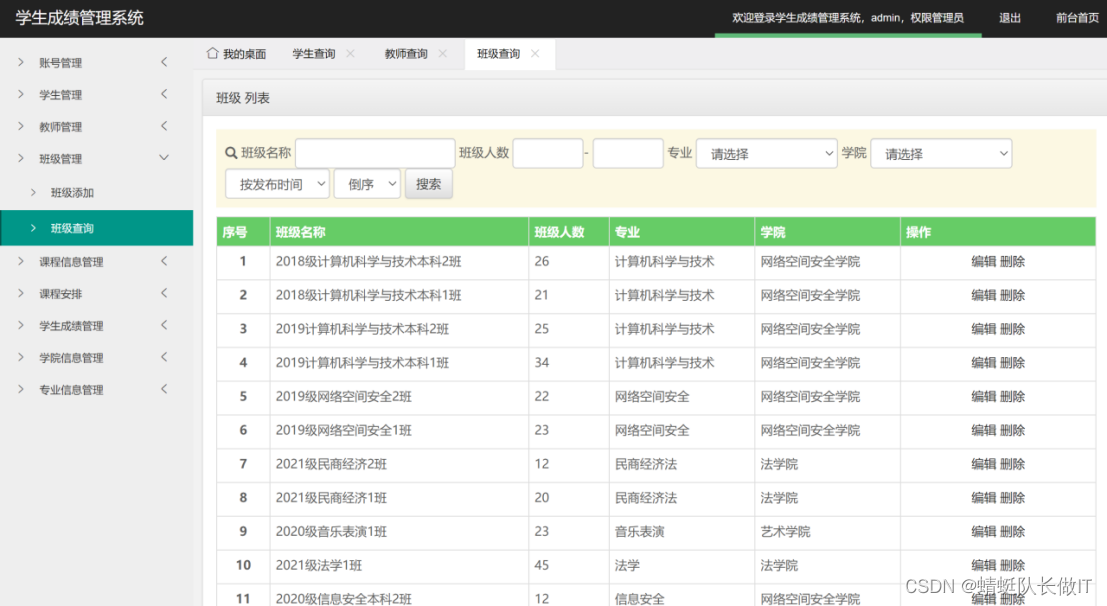

Student achievement management system based on SSM

Hospital reservation management system based on SSM

随机推荐

CdS quantum dots modified DNA | CDs DNA QDs | near infrared CdS quantum dots coupled DNA specification information

Day012 application of one-dimensional array

Cyclegan parsing

火狐浏览器,访问腾讯云服务器的时候,出现建立安全连接失败的问题。

[unity URP] the code obtains the universalrendererdata of the current URP configuration and dynamically adds the rendererfeature

内部类--看这篇就懂啦~

Significance of NVIDIA SMI parameters

Iotdb C client release 0.13.0.7

工控用Web组态软件比组态软件更高效

Digital image processing -- Chapter 3 gray scale transformation and spatial filtering

DNA coupled PbSe quantum dots | near infrared lead selenide PbSe quantum dots modified DNA | PbSe DNA QDs

Shell编程的规范和变量

Summary of APP launch in vivo application market

Student status management system based on SSM

仿真模型简单介绍

DNA偶联PbSe量子点|近红外硒化铅PbSe量子点修饰脱氧核糖核酸DNA|PbSe-DNA QDs

用typescript实现排序-递增

About the new features of ES6

Mysql database

C#时间相关操作