The difference between iteration count and information entropy

2022-07-27 10:57:00 【Black elm】

| A | 5 | 7 | 2 | 4 | 3 | 9 | 1 | 6 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 5402.955 | 7822.01 | 8358.603 | 11983.15 | 12572.23 | 13346.79 | 23558.45 | 25605.5 | 27905.07 |

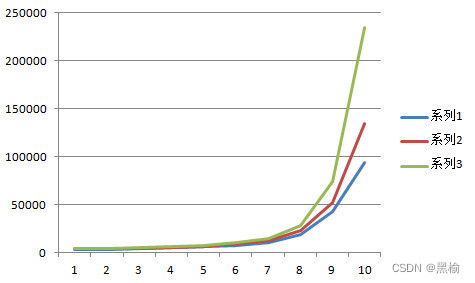

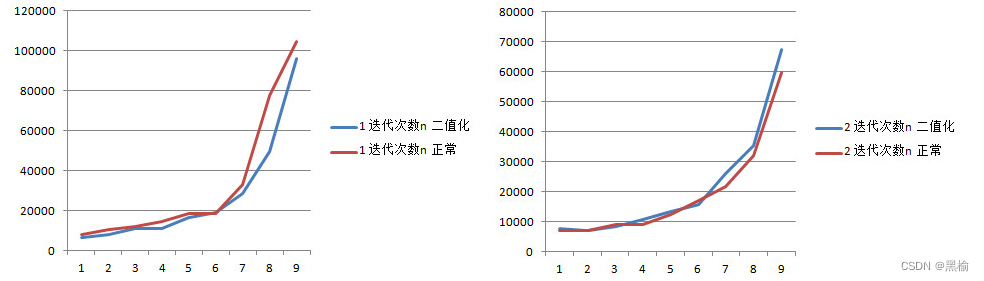

Use a neural network to classify two classes, A and B. Let A be the MNIST digit 0 and let B be each of the MNIST digits 1-9 in turn. Fix the convergence error, count the number of iterations for each pair, and sort the counts; this places the digits along a number line. The order is

5 7 2 4 3 9 1 6 8

Does this ordering depend on the information entropy of the images in B? The answer should be no:

| A | 5 | 7 | 2 | 4 | 3 | 9 | 1 | 6 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 5402.955 | 7822.01 | 8358.603 | 11983.15 | 12572.23 | 13346.79 | 23558.45 | 25605.5 | 27905.07 |

| A | 7 | 4 | 5 | 6 | 9 | 2 | 8 | 0 | 3 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 9568.94 | 9577.513 | 10137.68 | 10241.39 | 10721.14 | 11792.54 | 16861 | 23558.45 | 35671.24 |

| A | 5 | 8 | 0 | 6 | 1 | 7 | 9 | 4 | 3 |
|---|---|---|---|---|---|---|---|---|---|
| 2 | 7100.643 | 7658.015 | 8358.603 | 9360.106 | 11792.54 | 12555.62 | 13772.15 | 19984.86 | 33389.61 |

| A | 6 | 5 | 0 | 4 | 8 | 7 | 9 | 2 | 1 |
|---|---|---|---|---|---|---|---|---|---|
| 3 | 8136.266 | 11703.08 | 12572.23 | 15199.52 | 17015.68 | 17331.39 | 19919.65 | 33389.61 | 35671.24 |

| A | 5 | 8 | 7 | 6 | 1 | 0 | 3 | 9 | 2 |
|---|---|---|---|---|---|---|---|---|---|
| 4 | 5689.266 | 6106.347 | 7572.704 | 9020.96 | 9577.513 | 11983.15 | 15199.52 | 18523.66 | 19984.86 |

| A | 6 | 0 | 4 | 8 | 9 | 2 | 7 | 1 | 3 |
|---|---|---|---|---|---|---|---|---|---|
| 5 | 5362.608 | 5402.955 | 5689.266 | 6116.397 | 6794.688 | 7100.643 | 8617.161 | 10137.68 | 11703.08 |

| A | 5 | 3 | 8 | 7 | 4 | 9 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|---|---|
| 6 | 5362.608 | 8136.266 | 8626.678 | 8983.447 | 9020.96 | 9044.211 | 9360.106 | 10241.39 | 25605.5 |

| A | 8 | 4 | 0 | 5 | 6 | 1 | 2 | 3 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 7 | 7073.432 | 7572.704 | 7822.01 | 8617.161 | 8983.447 | 9568.94 | 12555.62 | 17331.39 | 20211.46 |

| A | 4 | 5 | 9 | 7 | 2 | 6 | 1 | 3 | 0 |
|---|---|---|---|---|---|---|---|---|---|
| 8 | 6106.347 | 6116.397 | 6966.322 | 7073.432 | 7658.015 | 8626.678 | 16861 | 17015.68 | 27905.07 |

| A | 5 | 8 | 6 | 1 | 0 | 2 | 4 | 3 | 7 |
|---|---|---|---|---|---|---|---|---|---|
| 9 | 6794.688 | 6966.322 | 9044.211 | 10721.14 | 13346.79 | 13772.15 | 18523.66 | 19919.65 | 20211.46 |

The reason is that if the classification origin is not 0 but one of the other digits, the ordering of the classification objects is completely different. The information entropy of a single image cannot change when the classification origin changes. So how can we explain the apparent contradiction that the order of the iteration counts is unrelated to information entropy?
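The change in ordering can be checked directly from the tables. The sketch below copies the rows for origins 0 and 5 and compares the orderings over the digits both origins were paired with; the names `ITERATIONS`, `ordering`, and `shared_ordering` are illustrative and not from the original post.

```python
# Iteration counts copied from the tables for origins 0 and 5.
# ITERATIONS[a][b] is the count for classification origin a and object b.
ITERATIONS = {
    0: {5: 5402.955, 7: 7822.01, 2: 8358.603, 4: 11983.15, 3: 12572.23,
        9: 13346.79, 1: 23558.45, 6: 25605.5, 8: 27905.07},
    5: {6: 5362.608, 0: 5402.955, 4: 5689.266, 8: 6116.397, 9: 6794.688,
        2: 7100.643, 7: 8617.161, 1: 10137.68, 3: 11703.08},
}


def ordering(origin):
    """Digits paired with `origin`, sorted by ascending iteration count."""
    counts = ITERATIONS[origin]
    return sorted(counts, key=counts.get)


def shared_ordering(origin_a, origin_b):
    """Both orderings, restricted to digits that appear under both origins."""
    common = set(ITERATIONS[origin_a]) & set(ITERATIONS[origin_b])
    return ([d for d in ordering(origin_a) if d in common],
            [d for d in ordering(origin_b) if d in common])


if __name__ == "__main__":
    from_0, from_5 = shared_ordering(0, 5)
    print("origin 0:", from_0)
    print("origin 5:", from_5)
    print("same order:", from_0 == from_5)
```

If the ordering were a property of each object alone, the two lists would match; with the table data they do not.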

Hypothesis 1: Two identical objects cannot be divided into two classes; the corresponding number of classification iterations is infinite.

Inference 1: Under the same convergence error, the larger the number of iterations, the smaller the difference between the two objects.
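Hypothesis 1 and Inference 1 can be illustrated with a toy model. The sketch below is not the network used for the tables: it assumes a single logistic neuron trained by plain gradient descent on two input vectors, and the function name, learning rate, and loss threshold are all illustrative choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def iterations_to_converge(a, b, target_loss=0.1, lr=0.5, max_iter=100_000):
    """Count gradient steps until one logistic neuron separates a from b.

    Vector a is labeled 1 and vector b is labeled 0. Returns None if the
    cross-entropy loss never drops below target_loss within max_iter steps.
    """
    weights = [0.0] * len(a)
    bias = 0.0
    samples = [(a, 1.0), (b, 0.0)]
    for step in range(max_iter):
        loss = 0.0
        grad_w = [0.0] * len(a)
        grad_b = 0.0
        for x, y in samples:
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            # Clamp the prediction so the logarithms stay finite.
            p = min(max(sigmoid(z), 1e-12), 1.0 - 1e-12)
            loss -= (y * math.log(p) + (1.0 - y) * math.log(1.0 - p)) / len(samples)
            for i, xi in enumerate(x):
                grad_w[i] += (p - y) * xi / len(samples)
            grad_b += (p - y) / len(samples)
        if loss < target_loss:
            return step  # number of updates applied before convergence
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return None


if __name__ == "__main__":
    for gap in (2.0, 1.0, 0.5):
        print(gap, iterations_to_converge([gap], [-gap]))
    # Identical inputs: the loss stays at ln 2, so the threshold is never met.
    print(iterations_to_converge([1.0], [1.0], max_iter=1000))
```

In this toy setting the count grows as the two inputs move closer together, and identical inputs never reach the threshold, which matches the hypothesis that the iteration count for identical objects is unbounded.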

Under this hypothesis, the number of iterations depends on the difference between the classification origin and the classification object. It reflects an interaction between two objects, not the behavior of either one alone. The origin and the object therefore jointly determine the number of iterations; it does not depend on the classification object alone, so the information entropy of the classification object by itself does not explain the differences in iteration counts.

Monotonicity: the higher the probability of an event, the lower the information entropy it carries.
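The monotonicity property can be made concrete with the standard self-information formula, I(p) = -log2(p). The post does not state a formula, so using this one is an assumption, and the function name is illustrative.

```python
import math


def self_information(p):
    """Information, in bits, carried by an event of probability p (0 < p <= 1)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    return -math.log2(p)


if __name__ == "__main__":
    # More probable events carry less information.
    for p in (0.25, 0.5, 1.0):
        print(p, self_information(p))
```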

Alternatively, apply the monotonicity property of information entropy: the more similar two images are, the smaller the uncertainty of each pixel, the more probable this form is as an input to the neural network, and the lower the information entropy of the two images taken as a whole. The number of iterations is therefore inversely proportional to the information entropy of the classification origin and the classification object taken as a whole.

Consequently, the reciprocal of the number of iterations is a measure of the information entropy of the classification object and the classification origin taken as a whole.
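As a small illustration, the reciprocals can be computed from the row for origin 0. This is only a sketch of the proposed measure applied to the table data; the name `reciprocal_measure` is illustrative, and no scaling or units are implied by the post.

```python
# Iteration counts for classification origin 0, copied from the first table.
ITERATIONS_FROM_0 = {
    5: 5402.955, 7: 7822.01, 2: 8358.603, 4: 11983.15, 3: 12572.23,
    9: 13346.79, 1: 23558.45, 6: 25605.5, 8: 27905.07,
}


def reciprocal_measure(counts):
    """Map each digit to 1 / iteration count for its pairing with the origin."""
    return {digit: 1.0 / n for digit, n in counts.items()}


if __name__ == "__main__":
    scores = reciprocal_measure(ITERATIONS_FROM_0)
    for digit in sorted(scores, key=scores.get, reverse=True):
        print(digit, f"{scores[digit]:.3e}")
```

Sorting by the reciprocal in descending order reproduces the ordering 5 7 2 4 3 9 1 6 8 given for origin 0.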
