[note] logistic regression

2022-07-27 14:07:00 【Sprite.Nym】

1. Overview of logistic regression

(1) The purpose of logistic regression: classification.

Logistic regression is commonly used to solve classification problems, especially binary classification.

(2) The process of logistic regression: regression.

The output is a continuous value between 0 and 1, representing the probability that the event occurs (the class probability).

(3) Threshold: classification is completed by comparing the probability with a threshold.

For example, compute the probability of default; if it is greater than 0.5, the borrower is classified as a bad customer.
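
The thresholding step can be sketched in a few lines of Python. The function name `classify_borrower` and the sample probabilities are hypothetical, chosen only to illustrate the rule; the 0.5 threshold comes from the default example.

```python
def classify_borrower(default_probability, threshold=0.5):
    """Return 1 (bad customer) if the predicted default probability is
    strictly greater than the threshold, otherwise 0 (good customer)."""
    return 1 if default_probability > threshold else 0


# Hypothetical predicted default probabilities for three borrowers.
probabilities = [0.12, 0.50, 0.87]
labels = [classify_borrower(p) for p in probabilities]
print(labels)  # [0, 0, 1]
```

Note that a probability of exactly 0.5 is not "greater than 0.5", so it falls on the good-customer side in this sketch.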

2. Logistic regression model

In a binary classification problem the label is either yes or no (1 or 0). If we fit the data with linear regression, its output is not confined to the range 0 to 1, so the prediction is hard to interpret as a probability. If we fit with a piecewise function instead, the function is not continuous, so the prediction is still not the continuous value between 0 and 1 that we want.

The solution is to combine linear regression with the sigmoid function, forming a nested (composite) function.

The sigmoid function has an S-shaped graph and maps any real input to a value between 0 and 1.

The nested function feeds the output of the linear regression into the sigmoid function, so the final output is always between 0 and 1.
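
As a rough illustration, the nested function can be written in Python as follows. This is a sketch under assumptions: the linear part is taken to be $z = w^T x + b$ and the sigmoid is taken in its standard form $\sigma(z) = 1 / (1 + e^{-z})$. The symbols `w`, `b`, `x` and the numeric values are not from the original note.

```python
import math


def sigmoid(z):
    """Standard sigmoid 1 / (1 + exp(-z)), returning a value between 0 and 1.

    The two branches are algebraically identical; splitting on the sign of z
    avoids an overflow in math.exp for large negative z.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_probability(x, w, b):
    """Nested function: the linear regression output z is passed to sigmoid."""
    z = sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
    return sigmoid(z)


# Hypothetical weights, bias and feature vector, for illustration only.
w = [0.8, -0.4]
b = 0.1
x = [1.5, 2.0]
print(predict_probability(x, w, b))  # sigmoid(0.5), roughly 0.62
```

Whatever the linear part returns, the result stays between 0 and 1, which is what lets it be read as a class probability.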

3. The loss function of logistic regression

If we directly substitute the sigmoid output for $\hat y$ in the loss function of linear regression and try to minimize it, we find that the resulting function is not convex. Logistic regression therefore uses a different loss function.

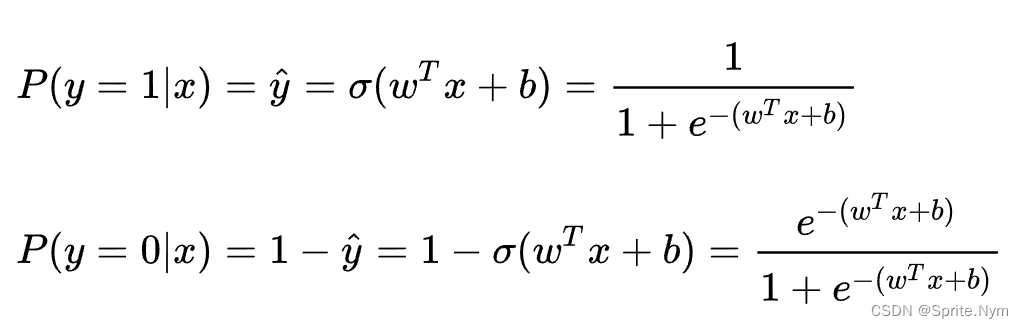
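
The non-convexity can be checked numerically. The sketch below assumes the linear regression loss in question is the squared error, and uses a hypothetical one-weight model $\hat y = \sigma(w x)$ with a single sample $x = 1$, $y = 1$. A convex function can never be higher at the midpoint of an interval than the average of its values at the two endpoints, so one violation is enough to show that the loss is not convex.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def squared_error(w, x=1.0, y=1.0):
    """Squared error of the one-weight model y_hat = sigmoid(w * x)."""
    return (sigmoid(w * x) - y) ** 2


# Endpoints and midpoint of an interval in weight space (arbitrary choice).
w_left, w_right = -6.0, -2.0
w_mid = (w_left + w_right) / 2.0

loss_mid = squared_error(w_mid)
loss_avg = (squared_error(w_left) + squared_error(w_right)) / 2.0

# Convexity would require loss_mid <= loss_avg.
print(loss_mid > loss_avg)  # True: the convexity condition is violated
```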

(1) The probability of $y$ in the classification problem

By convention, $\hat y = P(y=1 \mid x)$, therefore:

when $y=1$, $P(y \mid x) = \hat y$

when $y=0$, $P(y \mid x) = 1 - \hat y$

Merging the two cases gives:

$$P(y \mid x) = \hat y^{\,y} (1-\hat y)^{(1-y)}$$
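
A quick way to convince yourself that the merged expression reproduces both cases is to evaluate it for $y = 1$ and $y = 0$. The function name `label_probability` and the value 0.8 are hypothetical, used only for this check.

```python
def label_probability(y, y_hat):
    """P(y | x) for a binary label y in {0, 1}, given y_hat = P(y = 1 | x)."""
    return (y_hat ** y) * ((1.0 - y_hat) ** (1 - y))


y_hat = 0.8  # hypothetical predicted probability of class 1

# For y = 1 the second factor has exponent 0, leaving y_hat.
print(label_probability(1, y_hat) == y_hat)        # True
# For y = 0 the first factor has exponent 0, leaving 1 - y_hat.
print(label_probability(0, y_hat) == 1.0 - y_hat)  # True
```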

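(2) Estimating the model parameters by maximum likelihood

For a training set of $m$ independent samples $(x^{(i)}, y^{(i)})$, the likelihood function is:

$$L = \prod_{i=1}^{m} P(y^{(i)} \mid x^{(i)}) = \prod_{i=1}^{m} \left(\hat y^{(i)}\right)^{y^{(i)}} \left(1-\hat y^{(i)}\right)^{(1-y^{(i)})}$$

The log-likelihood function is:

$$\log L = \sum_{i=1}^{m} \left[ y^{(i)} \log \hat y^{(i)} + (1-y^{(i)}) \log\left(1-\hat y^{(i)}\right) \right]$$

Maximizing the likelihood function yields the best parameter values. Maximizing the log-likelihood is equivalent to minimizing its negative, so for the whole training set the cost function can be defined as the negative average log-likelihood:

$$J = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log \hat y^{(i)} + (1-y^{(i)}) \log\left(1-\hat y^{(i)}\right) \right]$$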
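
A minimal Python sketch of the log-likelihood, and of a cost function built from it, follows. It takes the logarithm of the merged probability $\hat y^{\,y}(1-\hat y)^{(1-y)}$ and sums it over the samples. Defining the cost as the negative average of that sum is an assumption (a common choice), and the function names and sample values are hypothetical.

```python
import math


def log_likelihood(y_true, y_pred):
    """Sum of log P(y | x) over all samples.

    Each prediction p must lie strictly between 0 and 1,
    otherwise math.log raises a ValueError.
    """
    return sum(
        y * math.log(p) + (1 - y) * math.log(1.0 - p)
        for y, p in zip(y_true, y_pred)
    )


def cost(y_true, y_pred):
    """Negative average log-likelihood over the training set (assumed form)."""
    return -log_likelihood(y_true, y_pred) / len(y_true)


# Hypothetical labels and two sets of predicted probabilities.
y_true = [1, 0, 1, 0]
confident_and_right = [0.9, 0.1, 0.8, 0.2]
mostly_wrong = [0.4, 0.6, 0.3, 0.7]

# Predictions that agree with the labels have higher likelihood, so lower cost.
print(cost(y_true, confident_and_right) < cost(y_true, mostly_wrong))  # True
```

Because the cost is the negated log-likelihood, the parameters that minimize it are the same ones that maximize the likelihood.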

边栏推荐

- Brief tutorial for soft exam system architecture designer | system design

- Positive mask, negative mask, wildcard

- 592. Fraction addition and subtraction

- 592. 分数加减运算

- Application layer World Wide Web WWW

- SQL tutorial: introduction to SQL aggregate functions

- 致尚科技IPO过会:年营收6亿 应收账款账面价值2.7亿

- Recommended collection, confusing knowledge points of PMP challenge (2)

- 【2022-07-25】

- 知识关联视角下金融证券知识图谱构建与相关股票发现

猜你喜欢

NoSQL -- three theoretical cornerstones of NoSQL -- cap -- Base -- final consistency

![[training day4] anticipating [expected DP]](/img/66/35153a9aa77e348cae042990b55b1c.png)

[training day4] anticipating [expected DP]

Lighting 5g in the lighthouse factory, Ningde era is the first to explore the way made in China

灵活易用所见即所得的可视化报表

![[luogu_p5431] [template] multiplicative inverse 2 [number theory]](/img/e0/a710e22e28cc1ffa23666658f9ba13.png)

[luogu_p5431] [template] multiplicative inverse 2 [number theory]

Small program completion work wechat campus laundry small program graduation design finished product (2) small program function

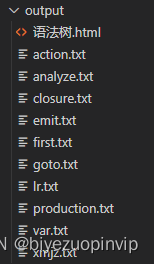

基于C语言的LR1编译器设计

Matlab digital image processing experiment 2: single pixel spatial image enhancement

Utnet hybrid transformer for medical image segmentation

Wechat campus laundry applet graduation design finished product of applet completion work (3) background function

随机推荐

Onnxruntime [reasoning framework, which users can easily use to run an onnx model]

Real image denoising based on multi-scale residual dense blocks and block connected cascaded u-net

[training day3] delete [simulation]

NFT 的 10 种实际用途

基于STM32的自由度云台运动姿态控制系统

小程序毕设作品之微信校园洗衣小程序毕业设计成品(6)开题答辩PPT

Lighting 5g in the lighthouse factory, Ningde era is the first to explore the way made in China

Chapter3 data analysis of the U.S. general election gold offering project

Some key information about Max animation (shift+v)

阿里最新股权曝光:软银持股23.9% 蔡崇信持股1.4%

Realize the basic operations such as the establishment, insertion, deletion and search of linear tables based on C language

JWT login expiration - automatic refresh token scheme introduction

西测测试深交所上市:年营收2.4亿募资9亿 市值47亿

Weice biological IPO meeting: annual revenue of 1.26 billion Ruihong investment and Yaohe medicine are shareholders

面试八股文之·TCP协议

NoSQL -- three theoretical cornerstones of NoSQL -- cap -- Base -- final consistency

Zoom, translation and rotation of OpenCV image

ONNXRuntime【推理框架,用户可以非常便利的用其运行一个onnx模型】

Cesium region clipping, local rendering

Pure C handwriting thread pool