当前位置:网站首页>Applied practical skills of deep reinforcement learning

Applied practical skills of deep reinforcement learning

2022-07-29 10:54:00 【CHH3213】

List of articles

Reference material

- 《 Deep reinforcement learning 》 Book number 18 Chapter

This blog is summarized from 《 Deep reinforcement learning 》 Book number 18 Chapter .

1. How to apply deep reinforcement learning

In intensive learning , Because the basic process of reinforcement learning requires agents to learn from reward signals rather than labels in the dynamic process of interacting with the environment , This is different from supervised learning .

The reward function in reinforcement learning may only contain incomplete or partial information , And agents use bootstrapping ( Bootstrapping) When learning methods, we often pursue a changing goal .

Besides , More than one deep neural network is often used in deep reinforcement learning , Especially in those higher or recently proposed methods . This makes the deep reinforcement learning algorithm may be unstable and sensitive to hyperparameters .

Reinforcement learning can be used in continuous decision-making problems , And this kind of problem can usually use Markov ( Markov) Process to describe or approximate . A prediction task with labeled data usually does not need reinforcement learning algorithm , The supervised learning method may be more direct and effective .

Reinforcement learning tasks usually include at least two key elements :

- Environmental Science , Used to provide dynamic processes and reward signals ;

- agent , Controlled by a strategy , This strategy is obtained through intensive learning and training .

The application of deep reinforcement learning algorithm has the following stages .

1.1 Simple test phase

It is necessary to use a model with high confidence in its correctness and accuracy , Including reinforcement learning algorithm .

If it is a new task , Use it to explore the environment ( Even use a random strategy ) Or gradually verify the extension that will be made on the final model , Instead of directly using a complex model .

need Experiment quickly to detect Possible problems in the basic settings of environment and model , Or at least familiarize yourself with the task to be solved , This will give you some inspiration in the later process , Sometimes, some extreme situations that need to be considered will be exposed .

1.2 Quick configuration phase

- The model settings should be quickly tested , To assess the possibility of its success . If there is an error , Visualize the learning process as much as possible , And use some statistical variables when you can't get the potential relationship directly from the number ( variance 、 mean value 、 Average difference 、 Minima, etc ).

1.3 Deployment training phase

- After carefully confirming the correctness of the model , You can start large-scale deployment training .

- Because deep reinforcement learning often requires a large number of samples to train for a long time , It is recommended to use parallel training 、 Use cloud server ( If you don't have a server yourself ) etc. , To speed up the large-scale training of the final model .

2. Implementation phase

Implement some basic reinforcement learning algorithms from scratch .

Properly implement the details of the paper . When you implement these methods , Don't over fit to the details of the paper , Instead, understand why the author chooses to use these techniques in these specific situations .

If you solve a specific task , First explore the environment .

Check the details of the environment , Including the amount of observation and the nature of the action , Such as dimension 、 range 、 Continuous or discrete value type . If the value of environmental observations is within a large effective range or unknown range , Then its value should be normalized . such as , If you use Tanh perhaps Sigmoid As an activation function , Larger input values may saturate the nodes of the first hidden layer , After the training starts, it will lead to smaller gradient value and slower learning speed .

Select an appropriate output activation function for each network .

An appropriate output activation function should be selected for the action network according to the environment . such as , Common image ReLU It may work well for hidden layers in terms of calculation time and convergence performance , However, it may not be appropriate for the action output range with negative values . It is best to match the range of policy output value with the action value range of the environment , For example, for the action range (−1, 1) Use... In the output layer Tanh Activation function .

Start with simple examples and gradually increase the complexity .

= Start with the dense reward function ==.

The design of reward function can affect the convexity of optimization problem in the learning process , Therefore, we should start with a smooth and dense reward function .

Choose the right network structure .

- For deep reinforcement learning , Neural network depth is usually not too deep , exceed 5 Layer neural networks are not particularly common in reinforcement learning applications . This is due to the computational complexity of reinforcement learning algorithm itself .

- In supervised learning , If the network is large enough compared to the data , It can be over fitted to the data set , In deep reinforcement learning , It may only slowly converge or even diverge , This is because of the strong correlation between exploration and utilization .

- The choice of network size is often based on the environment state space and action space . A discrete environment with dozens of state action combinations may use a tabular method , Or a single-layer or two-layer neural network .

- For the structure of the network , Multilayer perceptron is very common in the literature ( Multi-Layer Perceptrons, MLPs)、 Convolutional neural networks ( CNNs) And recurrent neural networks ( RNNs).

- A low dimensional vector input can be processed by a multilayer perceptron , Vision based strategies often require a convolutional neural network backbone to extract information in advance , Or train with reinforcement learning algorithm , Or use other computer vision methods for pre training . There are other situations , For example, low dimensional vector input and high dimensional image input are used together , In practice, it is usually used to extract the backbone of features from high-dimensional input and then connect it in parallel with other low-dimensional inputs . Cyclic neural networks can be used in environments that are not completely observable or non Markov processes , The best action choice depends not only on the current state , And depend on the previous state .

Be familiar with the nature of the reinforcement learning algorithm you use .

- for instance , image PPO or TRPO Trust region based methods of classes may require large batch size To ensure the progress of security strategy . For these trust region methods , We usually expect the strategy to show steady progress , Instead of a sudden big drop in some positions on the learning curve . TRPO The equal trust region method requires a larger batch size The reason is that , It needs to be approximated by conjugate gradients Fisher Information matrix , This is calculated based on the batch samples currently sampled . If batch size Too small or biased , This approximation may cause problems , And lead to Fisher Information matrix ( Or reverse Hessian The product of ) The inaccuracy of the approximation makes the learning performance decline . therefore , In practice , Algorithm TRPO and PPO Medium batch size Need to be increased , Until the agent has stable and progressive learning performance .TRPO Sometimes it cannot be well extended to large-scale networks or deep convolutional neural networks and cyclic Neural Networks .

- DDPG Algorithms are usually considered to be sensitive to hyperparameters , Although it has proved effective for many continuous action space tasks . When applied to large-scale or real-world tasks , This sensitivity will be more significant . such as , Although in a simple simulation test environment, a thorough super parameter search can finally find an optimal performance effect , However, due to time and resource constraints, the learning process in the real world may not allow this kind of hyperparametric search , therefore DDPG Compared with others TRPO or SAC The algorithm may not work well . On the other hand , Even though DDPG The algorithm was originally designed to solve tasks with continuous value actions , This does not mean that it cannot work with discrete value actions . If you try to apply it to tasks with discrete value actions , Then you need to use some extra skills , For example, use a larger t It's worth it Sigmoid(tx) Output the activation function and prune it to binary output , We must also ensure that the truncation error is relatively small , Or you can use it directly Gumbel-Softmax Technique to change the distribution of deterministic output into one category . Other algorithms can also have similar processing .

Normalized value processing .

- Normalize the value of the reward function by scaling rather than changing the mean , And standardize the predicted target value of the value function in the same way .

- The scaling of the reward function is based on the batch samples sampled in the training . Only do value scaling ( That is, divide by the standard deviation ) Without mean shift ( Subtract the statistical mean to get the zero mean ) The reason is that , Mean shift may affect the willingness of agents to survive . This is actually related to the sign of the whole reward function , And this conclusion only applies to your use “ Done” Signal situation . Actually , If it doesn't work in advance “ Done” Signal to terminate the segment , Then you can use the mean shift .

- Consider one of the following , If the agent experiences a segment , and “ Done=True” The signal occurs within the maximum segment length , So if we think that agents are still alive , Then this “ Done” The reward value after the signal is actually 0. If these are 0 The reward value of is generally higher than the previous reward value ( That is, the previous reward value is basically negative ), Then intelligent experience tends to end the segment as early as possible , To maximize the rewards in the whole clip . contrary , If the previous reward function is basically positive , Intelligent experience choice “ live ” Take longer . If we adopt the mean shift method for the reward value , It will break the agent's willingness to survive in the above situation , Thus, the agent will not choose to survive longer even when the reward value is basically positive , And this will affect the performance in training .

- The goal of the normalized value function is similar . for instance , Some are based on DQN The average of the algorithm Q The value will unexpectedly increase during the learning process , And this is from the maximization optimization formula for Q Caused by overestimation of value . Normalization target Q Value can alleviate this problem , Or use other techniques such as Double Q-Learning.

Notice the divergence between the reward function and the final goal .

- Reinforcement learning is often used for a specific task with an ultimate goal , Usually, it is necessary to design a reward function that is consistent with the final goal to facilitate agent learning .

- In this sense , Reward function is a quantitative form of goal , This also means that they may be two different things . In some cases, there will be differences between them . Because an reinforcement learning agent can over fit to the reward function you set for the task , And you may find that the final strategy of training is different from what you expect in achieving the final goal . One of the most likely reasons for this is the divergence between the reward function and the ultimate goal .

- In most cases , It is easy for the reward function to tend to the final task goal , But designing a reward function is consistent with the ultimate goal in all extreme cases , Is not mediocre . What you should do is to minimize such differences , To ensure that the reward function you design can smoothly help the agent achieve the ultimate real goal .

Non Markov case .

- Non Markov decision processes and partially observable Markov processes ( POMDP) The difference is sometimes subtle . such as , If one is in Atari Pong The state in the game is defined as containing the position and speed information of the ball at the same time ( Suppose the ball moves without acceleration ), But the observation quantity only has the position , Then the environment is POMDP Not a non Markov process . However , Pong The state of the game is usually considered as the static frame of each time step , Then the current state only contains the position of the ball without all the information that the agent can make the optimal action choice , such as , The speed and direction of the ball will also affect the optimal action . So in this case, it is a non Markov environment . One way to provide speed and direction information is to use historical states , This violates the processing method under Markov process .

- stay DQN In the original , Use Stack frames In an approximate way MDP To solve Pong Mission . If we treat all stacked frames as a single state , And it is assumed that the stacked frame can contain all the information to make the optimal action choice , Then this task actually still follows the Markov process assumption .

- Cyclic neural network or higher LSTM The method can also be used in the case of decision-making based on historical memory , To solve the problem of non Markov process .

3. Training and commissioning stage

Initialization is important .

- Deep reinforcement learning methods are usually either online strategies ( On-Policy) Method update the strategy with samples in each segment , Or use offline strategy ( Off-Policy) Dynamic playback cache in ( Replay Buffer), This cache contains samples of diversity over time .

- This makes deep reinforcement learning different from supervised learning , Supervised learning is learning from a fixed data set , Therefore, the order of learning samples is not particularly important . However , In intensive learning , The initialization of a policy can affect the scope of possible subsequent exploration , And decide the subsequent samples stored in the cache or the samples directly used for updating , Therefore, it will affect the whole learning performance .

- Starting with a random strategy will lead to a greater probability of having more samples , This is good for the beginning of training . But with the convergence and progress of the strategy , The scope of exploration gradually narrowed , And close to the trajectory generated by the current strategy . For the initialization of weight parameters , Generally speaking, use more advanced methods such as Xavier initialization Or orthogonal initialization Will be better , In this way, gradient disappearance or gradient explosion can be avoided , And have a relatively stable learning performance for most deep learning situations .

Use multiple random seeds and calculate the average value to reduce randomness .

- Fixed random seeds can be used to reproduce the learning process . Use random seeds and get the average value of the learning curve , It can reduce the possibility of getting wrong conclusions caused by the randomness of deep reinforcement learning in experimental comparison . Usually use more random seeds , The more reliable the experimental results are , But it also increases the experimental time . Based on experience , We use different random seeds 3 To 5 A relatively reliable result can be obtained from this experiment , But the more, the better .

Balance CPU and GPU Calculate resources to speed up training .

- This tip is actually about finding and solving the bottleneck problem in training speed .

- Better use of computing resources on limited computers , Reinforcement learning is more complex than supervised learning .

- In supervised learning , CPU It is often used for data reading, writing and preprocessing , and GPU For forward reasoning and back propagation .

- However , Because the reasoning process in reinforcement learning always involves the interaction with the environment , The device that calculates the gradient needs to match the computing power of the device that interacts with the processing environment , Otherwise, it will be a waste of exploration or utilization .

- In reinforcement learning , CPU It is often used in the process of interactive sampling with the environment , This may involve a lot of computation for some complex simulation systems . GPU It is used for forward reasoning and back propagation to update the network . In the process of deploying large-scale training , Should check CPU and GPU Calculate resource utilization , Avoid threads or processes sleeping .

- about GPU Overuse , More sampling threads or processes can be used to interact with the environment .

- about CPU Overuse , You can reduce the number of distributed sampling threads , Or increase the number of distributed update threads , Increase the number of update iterations in the algorithm , For offline update, increase the batch size, etc .

visualization .

- If you can't see the potential relationship directly from the value , It should be visualized as much as possible . such as , Sometimes due to the unstable nature of the reinforcement learning process , The reward function may have a lot of jitter , In this case, it may be necessary to draw a moving average curve of the reward value to understand whether the agent has made progress in training .

Smooth the learning curve .

- The process of reinforcement learning can be very unstable . It is often unreliable to draw conclusions directly from an unprocessed learning curve , We usually use moving average 、 Convolution kernel, etc. to smooth the learning curve , And choose a suitable window length .

Question your algorithm implementation .

- Just after the code implementation , It doesn't work , It's very common , And then , It is important to debug the code patiently .

- The correctness of algorithm implementation always precedes the fine adjustment of a relatively good result , therefore , We should consider fine-tuning the super parameters on the premise of ensuring the correctness of the implementation .

边栏推荐

- 【Unity,C#】Character键盘输入转向与旋转

- 浅谈安科瑞灭弧式智慧用电在养老机构的应用

- 聊聊性能测试环境搭建

- How can agile development reduce cognitive bias in collaboration| Agile way

- Conference OA project - my approval

- 「PHP基础知识」使用数组保存数据

- KRYSTAL:审计数据中基于知识图的战术攻击发现框架

- The heavy | open atomic school source activity was officially launched

- factoextra:多元统计方法的可视化PCA

- Start from scratch blazor server (3) -- add cookie authorization

猜你喜欢

Kunlunbase instruction manual (II) best practices for peer-to-peer deployment

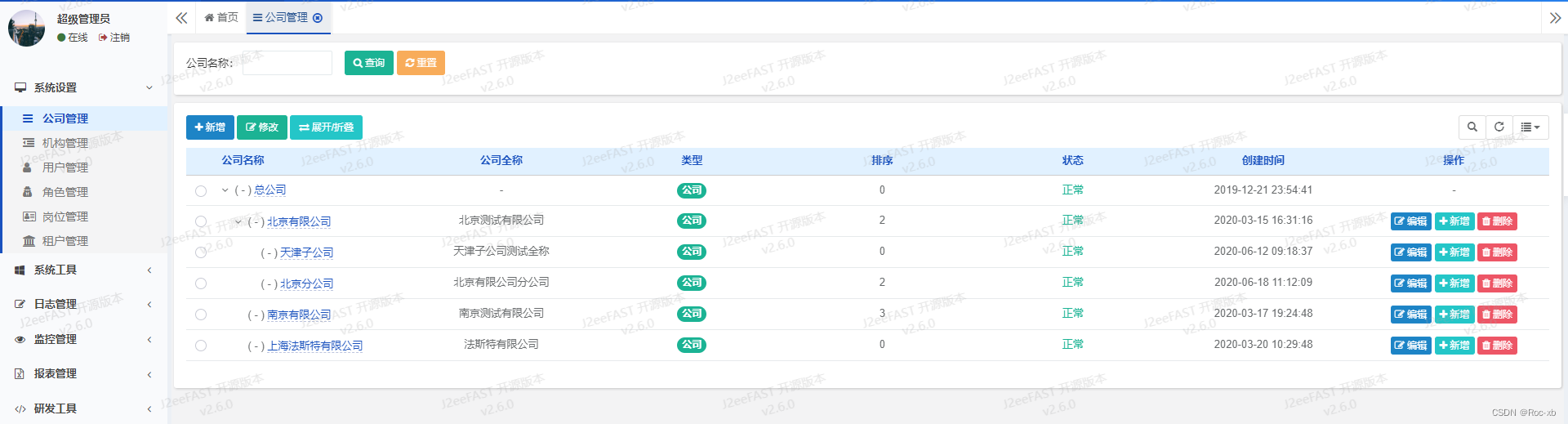

若依集成minio实现分布式文件存储

leetcode-位运算

Factoextra: visual PCA of multivariate statistical methods

基于flask写的一个小商城mall项目

Zhou Hongyi: 360 is the largest secure big data company in the world

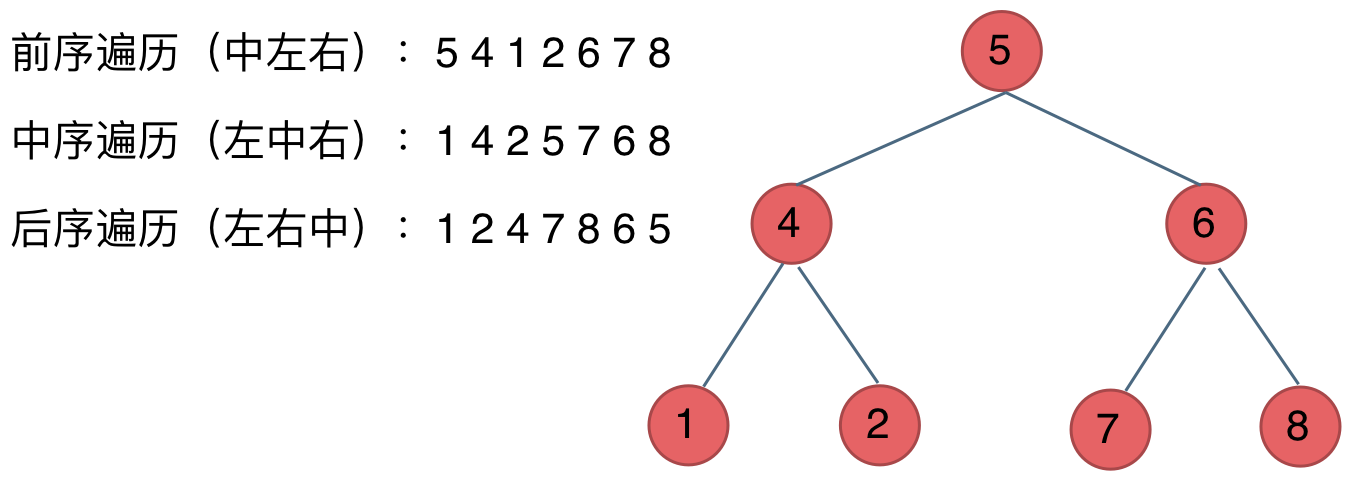

一文搞懂什么是二叉树(二叉树的种类、遍历方式、定义)

How to realize the function of adding watermark

If distributed file storage is realized according to integrated Minio

Pyqt5 rapid development and practice 6.6 qformlayout & 6.7 nested layout & 6.8 qsplitter

随机推荐

QWidget、QDialog、QMainWindow 的异同点

【图像检测】基于灰度图像的积累加权边缘检测方法研究附matlab代码

Spark高效数据分析02、基础知识13篇

Summer 2022 software innovation laboratory training JDBC

Start from scratch blazor server (3) -- add cookie authorization

『知识集锦』一文搞懂mysql索引!!(建议收藏)

使用R包skimr汇总统计量的优美展示

QT基本工程的解析

js两个数组对象进行合并去重

2022cuda summer training camp Day6 practice

3道软件测试面试题,能全答对的人不到10%!你会几个?

Detailed arrangement of JVM knowledge points (long text warning)

牛客网刷题

专访 | 阿里巴巴首席技术官程立:云 + 开源共同形成数字世界的可信基础

How to realize the function of adding watermark

基于flask实现的mall商城---用户模块

Atomic operation of day4 practice in 2022cuda summer training camp

报表控件FastReport与StimulSoft功能对比

R language Monte Carlo method and average method are used to calculate the definite integral. Considering the random point casting method, the confidence is 0.05, and the requirement is ϵ= 0.01, numbe

Watch the open source summit first | quick view of the sub Forum & Activity agenda on July 29