
PyTorch notes

2022-06-12 11:53:00 【Bewitch one】

This post records some PyTorch methods that I have used but don't use often, listed in alphabetical order.

C

1. nn.ChannelShuffle()

Function: rearranges the channel order of the input feature map. If the input feature map has shape (B, C, H, W), the output also has shape (B, C, H, W), but the channels along the C dimension are reordered. The reordering is deterministic, not random: the C channels are split into groups and channels from different groups are interleaved. The parameter groups (int) sets the number of groups the channels are divided into; C must be divisible by groups.

import torch
import torch.nn as nn

channel_shuffle = nn.ChannelShuffle(2)  # groups=2

# integer-valued entries make the new channel order easy to see
x = torch.arange(1, 17, dtype=torch.float32).view(1, 4, 2, 2)
print(x)
''' [[[[1, 2], [3, 4]], [[5, 6], [7, 8]], [[9, 10], [11, 12]], [[13, 14], [15, 16]]]] '''

output = channel_shuffle(x)
print(output)
''' [[[[1, 2], [3, 4]], [[9, 10], [11, 12]], [[5, 6], [7, 8]], [[13, 14], [15, 16]]]] '''
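The reordering above can be reproduced by hand. A minimal sketch of the usual channel-shuffle recipe (as used in ShuffleNet): reshape to (B, groups, C // groups, H, W), swap the two channel axes, then flatten back. The helper name channel_shuffle_manual is hypothetical, and the comparison assumes a PyTorch version that ships nn.ChannelShuffle.

```python
import torch
import torch.nn as nn


def channel_shuffle_manual(x: torch.Tensor, groups: int) -> torch.Tensor:
    """Hypothetical helper: interleave channels across groups, ShuffleNet-style."""
    b, c, h, w = x.shape
    assert c % groups == 0, "C must be divisible by groups"
    # (B, C, H, W) -> (B, groups, C // groups, H, W)
    x = x.view(b, groups, c // groups, h, w)
    # swap the group axis with the per-group channel axis
    x = x.transpose(1, 2).contiguous()
    # flatten back to (B, C, H, W)
    return x.view(b, c, h, w)


x = torch.arange(1, 17, dtype=torch.float32).view(1, 4, 2, 2)
manual = channel_shuffle_manual(x, groups=2)
builtin = nn.ChannelShuffle(2)(x)

# for C=4, groups=2 the new channel order is [0, 2, 1, 3],
# so the first element of each output channel is 1, 9, 5, 13
print(manual[0, :, 0, 0])
print(torch.equal(manual, builtin))
```

With groups=2 the four channels form the grid [[0, 1], [2, 3]]; transposing it gives [[0, 2], [1, 3]], which is exactly the order seen in the output above.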

D

1. nn.Dropout(p=float)

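Purpose of Dropout: it helps prevent overfitting. During training, each element of the input is zeroed with probability p and the remaining elements are scaled by 1/(1-p); in evaluation mode (after model.eval()) Dropout passes the input through unchanged. It is typically applied after fully connected layers.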

import torch.nn as nn
dropout = nn.Dropout(p=0.3)  # each element is zeroed with probability 0.3 during training
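A small sketch of how nn.Dropout behaves in training versus evaluation mode. It checks PyTorch's documented behavior: in train mode each element is zeroed with probability p and the survivors are scaled by 1 / (1 - p); after eval() the layer returns its input unchanged. The seed and tensor size are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed so the random mask is reproducible
p = 0.3
dropout = nn.Dropout(p=p)
x = torch.ones(10_000)

dropout.train()  # modules start in train mode; made explicit here
y_train = dropout(x)
kept = y_train[y_train != 0]
# surviving elements are scaled by 1 / (1 - p)
print(torch.allclose(kept, torch.full_like(kept, 1 / (1 - p))))
zero_fraction = (y_train == 0).float().mean().item()
print(zero_fraction)  # close to p

dropout.eval()  # dropout is disabled at inference time
y_eval = dropout(x)
print(torch.equal(y_eval, x))
```

The 1 / (1 - p) scaling keeps the expected value of each activation the same in training and inference, which is why no rescaling is needed after eval().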

P

1. nn.Parameter()

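Besides the weights of convolutional and fully connected layers, some other operations in a neural network need extra parameters that are learned and trained together with the rest of the network, for example the weight parameters in an attention mechanism or the positional embedding in a Vision Transformer. Wrapping a tensor in nn.Parameter and assigning it as an attribute of an nn.Module registers it as a learnable parameter: it requires gradients by default, appears in module.parameters(), and is therefore updated by the optimizer.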

# Example: the position embedding in ViT (inside a module's __init__;
# num_patches + 1 leaves room for the class token)

self.pos_embedding = nn.Parameter(torch.randn(1, num_patches + 1, dim))
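To make the fragment above self-contained, here is a minimal sketch of a module that owns a learnable position embedding. The class name PatchPositionEmbedding and the sizes (num_patches=196, dim=768, i.e. a 224x224 image split into 16x16 patches) are hypothetical choices for illustration; the point is that assigning an nn.Parameter to a module attribute registers it automatically.

```python
import torch
import torch.nn as nn


class PatchPositionEmbedding(nn.Module):
    """Hypothetical module: adds a learnable position embedding to a token sequence."""

    def __init__(self, num_patches: int, dim: int):
        super().__init__()
        # one extra slot for the class token prepended to the patch tokens, as in ViT
        self.pos_embedding = nn.Parameter(torch.randn(1, num_patches + 1, dim))

    def forward(self, tokens: torch.Tensor) -> torch.Tensor:
        # tokens: (B, num_patches + 1, dim); the (1, N, dim) embedding broadcasts over B
        return tokens + self.pos_embedding


module = PatchPositionEmbedding(num_patches=196, dim=768)
print([name for name, _ in module.named_parameters()])  # ['pos_embedding']
print(module.pos_embedding.requires_grad)               # True

tokens = torch.zeros(2, 197, 768)
out = module(tokens)
print(out.shape)  # torch.Size([2, 197, 768])
```

Because the embedding is registered, passing module.parameters() to an optimizer such as torch.optim.Adam is enough for it to be trained along with the rest of the network.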

边栏推荐

- Architecture training module 7

- Lambda and filter, index of list and numpy array, as well as various distance metrics, concatenated array and distinction between axis=0 and axis=1

- 35. search insertion position

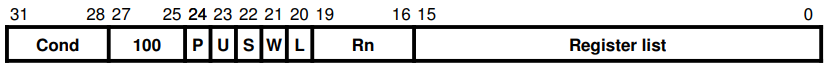

- Jump instruction of arm instruction set

- QT based travel query and simulation system

- Compiling Draco library on Windows platform

- ioremap

- LeetCode 497. 非重叠矩形中的随机点(前缀和+二分)

- 进程的创建和回收

- Thirty one items that affect the weight of the store. Come and see if you've been hit

猜你喜欢

Socket implements TCP communication flow

Record the pits encountered when using JPA

7-5 复数四则运算

ARM处理器模式与寄存器

Batch load/store instructions of arm instruction set

PDSCH 相关

6.6 RL:MDP及奖励函数

A.前缀极差

Index in MySQL show index from XXX the meaning of each parameter

M-arch (fanwai 10) gd32l233 evaluation -spi drive DS1302

随机推荐

6.6 Convolution de séparation

文件夹目录结构自动生成

Promise controls the number of concurrent requests

Spark common encapsulation classes

Why is there no traffic after the launch of new products? How should new products be released?

Spark常用封装类

Record the pits encountered when using JPA

6.6 分离卷积

标品和非标品如何选品,选品的重要性,店铺怎样布局

視頻分類的類間和類內關系——正則化

How to determine the relationship between home page and search? Relationship between homepage and search

First understand the onion model, analyze the implementation process of middleware, and analyze the source code of KOA Middleware

Multiplication instruction of arm instruction set

ARM指令集之跳转指令

[QNX hypervisor 2.2 user manual] 4.1 method of building QNX hypervisor system

如何确定首页和搜索之间的关系呢?首页与搜索的关系

Socket implements TCP communication flow

FPGA Development - Hello_ World routine

为什么新品发布上架之后会没有流量,新品应该怎么发布?

IP地址管理