当前位置:网站首页>Torch Cookbook

Torch Cookbook

2022-07-06 21:16:00 【Love CV】

This code is based on PyTorch 1.0 edition , The following packages are needed

import collections

import os

import shutil

import tqdm

import numpy as np

import PIL.Image

import torch

import torchvision1. Basic configuration

Check PyTorch edition

torch.__version__ # PyTorch version

torch.version.cuda # Corresponding CUDA version

torch.backends.cudnn.version() # Corresponding cuDNN version

torch.cuda.get_device_name(0) # GPU typeto update PyTorch

PyTorch Will be installed in anaconda3/lib/python3.7/site-packages/torch/ Under the table of contents .

conda update pytorch torchvision -c pytorchFix random seeds

torch.manual_seed(0)

torch.cuda.manual_seed_all(0)Specifies that the program runs on a specific GPU obstruct

Specify the environment variable... On the command line

CUDA_VISIBLE_DEVICES=0,1 python train.pyOr specify... In the code

os.environ['CUDA_VISIBLE_DEVICES'] = '0,1'To determine if there is CUDA Support

torch.cuda.is_available()Set to cuDNN benchmark Pattern

Benchmark Mode will speed up the calculation , But because of the randomness in the calculation , The feedforward results of each network are slightly different .

torch.backends.cudnn.benchmark = TrueIf you want to avoid this kind of result fluctuation , Set up

torch.backends.cudnn.deterministic = Trueeliminate GPU Storage

Sometimes Control-C After suspension of operation GPU Storage not released in time , It needs to be cleared manually . stay PyTorch The interior can

torch.cuda.empty_cache()Or at the command line, you can use ps Find the program PID, Reuse kill End the process

ps aux | grep python

kill -9 [pid]Or directly reset those that are not cleared GPU

nvidia-smi --gpu-reset -i [gpu_id]2. Tensor processing

Tensor basic information

tensor.type() # Data type

tensor.size() # Shape of the tensor. It is a subclass of Python tuple

tensor.dim() # Number of dimensions.Data type conversion

# Set default tensor type. Float in PyTorch is much faster than double.

torch.set_default_tensor_type(torch.FloatTensor)

# Type convertions.

tensor = tensor.cuda()

tensor = tensor.cpu()

tensor = tensor.float()

tensor = tensor.long()torch.Tensor And np.ndarray transformation

# torch.Tensor -> np.ndarray.

ndarray = tensor.cpu().numpy()

# np.ndarray -> torch.Tensor.

tensor = torch.from_numpy(ndarray).float()

tensor = torch.from_numpy(ndarray.copy()).float() # If ndarray has negative stridetorch.Tensor And PIL.Image transformation

PyTorch The tensor in the defaults to N×D×H×W The order of , And the data range is [0, 1], It needs to be transposed and normalized .

# torch.Tensor -> PIL.Image.

image = PIL.Image.fromarray(torch.clamp(tensor * 255, min=0, max=255

).byte().permute(1, 2, 0).cpu().numpy())

image = torchvision.transforms.functional.to_pil_image(tensor) # Equivalently way

# PIL.Image -> torch.Tensor.

tensor = torch.from_numpy(np.asarray(PIL.Image.open(path))

).permute(2, 0, 1).float() / 255

tensor = torchvision.transforms.functional.to_tensor(PIL.Image.open(path)) # Equivalently waynp.ndarray And PIL.Image transformation

# np.ndarray -> PIL.Image.

image = PIL.Image.fromarray(ndarray.astypde(np.uint8))

# PIL.Image -> np.ndarray.

ndarray = np.asarray(PIL.Image.open(path))Extract values from a tensor that contains only one element

This counts during training loss It's especially useful in the process of change . Otherwise, it will accumulate the calculation chart , send GPU More and more storage .

value = tensor.item()Tensor deformation

Tensor deformation is often used to input the convolution features into the fully connected layer . comparison torch.view,torch.reshape It can automatically handle the discontinuous input tensor .

tensor = torch.reshape(tensor, shape)Out of order

tensor = tensor[torch.randperm(tensor.size(0))] # Shuffle the first dimensionFlip horizontal

PyTorch I won't support it tensor[::-1] This negative step operation , Horizontal flipping can be realized by tensor index .

# Assume tensor has shape N*D*H*W.

tensor = tensor[:, :, :, torch.arange(tensor.size(3) - 1, -1, -1).long()]Copy tensor

There are three ways of copying , Corresponding to different needs .

# Operation | New/Shared memory | Still in computation graph |

tensor.clone() # | New | Yes |

tensor.detach() # | Shared | No |

tensor.detach.clone()() # | New | No |Splicing tensor

Be careful torch.cat and torch.stack The difference is that torch.cat Stitching along a given dimension , and torch.stack One dimension will be added . For example, if the parameter is 3 individual 10×5 Tensor ,torch.cat The result is 30×5 Tensor , and torch.stack The result is 3×10×5 Tensor .

tensor = torch.cat(list_of_tensors, dim=0)

tensor = torch.stack(list_of_tensors, dim=0)Convert integer tags to unique heat (one-hot) code

PyTorch The tags in the default from 0 Start .

N = tensor.size(0)

one_hot = torch.zeros(N, num_classes).long()

one_hot.scatter_(dim=1, index=torch.unsqueeze(tensor, dim=1), src=torch.ones(N, num_classes).long())Get non-zero / Zero element

torch.nonzero(tensor) # Index of non-zero elements

torch.nonzero(tensor == 0) # Index of zero elements

torch.nonzero(tensor).size(0) # Number of non-zero elements

torch.nonzero(tensor == 0).size(0) # Number of zero elementsJudge that two tensors are equal

torch.allclose(tensor1, tensor2) # float tensor

torch.equal(tensor1, tensor2) # int tensorTensor expansion

# Expand tensor of shape 64*512 to shape 64*512*7*7.

torch.reshape(tensor, (64, 512, 1, 1)).expand(64, 512, 7, 7)Matrix multiplication

# Matrix multiplication: (m*n) * (n*p) -> (m*p).

result = torch.mm(tensor1, tensor2)

# Batch matrix multiplication: (b*m*n) * (b*n*p) -> (b*m*p).

result = torch.bmm(tensor1, tensor2)

# Element-wise multiplication.

result = tensor1 * tensor2Calculate the distance between two sets of data

# X1 is of shape m*d, X2 is of shape n*d.

dist = torch.sqrt(torch.sum((X1[:,None,:] - X2) ** 2, dim=2))3. Model definition

Convolution layer

The most common convolution layer configuration is

conv = torch.nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=1, padding=1, bias=True)

conv = torch.nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=1, padding=0, bias=True)If the roll up layer configuration is complex , It is not convenient to calculate the output size , The following visualization tools can be used to assist

Convolution Visualizer

https://ezyang.github.io/convolution-visualizer/index.html

GAP(Global average pooling) layer

gap = torch.nn.AdaptiveAvgPool2d(output_size=1)Bilinear convergence (bilinear pooling)[1]

X = torch.reshape(N, D, H * W) # Assume X has shape N*D*H*W

X = torch.bmm(X, torch.transpose(X, 1, 2)) / (H * W) # Bilinear pooling

assert X.size() == (N, D, D)

X = torch.reshape(X, (N, D * D))

X = torch.sign(X) * torch.sqrt(torch.abs(X) + 1e-5) # Signed-sqrt normalization

X = torch.nn.functional.normalize(X) # L2 normalizationMulti card synchronization BN(Batch normalization)

When using torch.nn.DataParallel Run the code on multiple GPU On the card ,PyTorch Of BN Layer default operation is to calculate the mean value and standard deviation of data on each card independently , Sync BN Use all the data on the card to calculate BN The mean and standard deviation of layers , Ease when batch size (batch size) Compare the situation when the mean value and standard deviation are not estimated correctly , It is an effective skill to improve performance in the task of target detection .

Synchronized-BatchNorm-PyTorch

https://github.com/vacancy/Synchronized-BatchNorm-PyTorch

Now? PyTorch Officials have supported synchronization BN operation

sync_bn = torch.nn.SyncBatchNorm(num_features, eps=1e-05, momentum=0.1, affine=True,

track_running_stats=True)All of the existing network BN Change layer to synchronization BN layer

def convertBNtoSyncBN(module, process_group=None):

'''Recursively replace all BN layers to SyncBN layer.

Args:

module[torch.nn.Module]. Network

'''

if isinstance(module, torch.nn.modules.batchnorm._BatchNorm):

sync_bn = torch.nn.SyncBatchNorm(module.num_features, module.eps, module.momentum,

module.affine, module.track_running_stats, process_group)

sync_bn.running_mean = module.running_mean

sync_bn.running_var = module.running_var

if module.affine:

sync_bn.weight = module.weight.clone().detach()

sync_bn.bias = module.bias.clone().detach()

return sync_bn

else:

for name, child_module in module.named_children():

setattr(module, name) = convert_syncbn_model(child_module, process_group=process_group))

return modulesimilar BN moving average

If you want to achieve something similar BN Sliding average operation , stay forward Function to use in place (inplace) The operation assigns a value to the sliding average .

class BN(torch.nn.Module)

def __init__(self):

...

self.register_buffer('running_mean', torch.zeros(num_features))

def forward(self, X):

...

self.running_mean += momentum * (current - self.running_mean)Calculate the overall parameters of the model

num_parameters = sum(torch.numel(parameter) for parameter in model.parameters())similar Keras Of model.summary() Output model information

pytorch-summary

https://github.com/sksq96/pytorch-summary

Model weight initialization

Be careful model.modules() and model.children() The difference between :model.modules() Will iterate through all the sub layers of the model , and model.children() Only one layer under the model will be traversed .

# Common practise for initialization.

for layer in model.modules():

if isinstance(layer, torch.nn.Conv2d):

torch.nn.init.kaiming_normal_(layer.weight, mode='fan_out',

nonlinearity='relu')

if layer.bias is not None:

torch.nn.init.constant_(layer.bias, val=0.0)

elif isinstance(layer, torch.nn.BatchNorm2d):

torch.nn.init.constant_(layer.weight, val=1.0)

torch.nn.init.constant_(layer.bias, val=0.0)

elif isinstance(layer, torch.nn.Linear):

torch.nn.init.xavier_normal_(layer.weight)

if layer.bias is not None:

torch.nn.init.constant_(layer.bias, val=0.0)

# Initialization with given tensor.

layer.weight = torch.nn.Parameter(tensor)Part of the layer uses the pre training model

Note that if the saved model is torch.nn.DataParallel, The current model also needs to be torch.nn.DataParallel.torch.nn.DataParallel(model).module == model.

model.load_state_dict(torch.load('model,pth'), strict=False)Will be in GPU The saved model is loaded into CPU

model.load_state_dict(torch.load('model,pth', map_location='cpu'))4. Data preparation 、 Feature extraction and fine tuning

Image fragmentation (image shuffle)/ Regional confusion mechanism (region confusion mechanism,RCM)[2]

# X is torch.Tensor of size N*D*H*W.

# Shuffle rows

Q = (torch.unsqueeze(torch.arange(num_blocks), dim=1) * torch.ones(1, num_blocks).long()

+ torch.randint(low=-neighbour, high=neighbour, size=(num_blocks, num_blocks)))

Q = torch.argsort(Q, dim=0)

assert Q.size() == (num_blocks, num_blocks)

X = [torch.chunk(row, chunks=num_blocks, dim=2)

for row in torch.chunk(X, chunks=num_blocks, dim=1)]

X = [[X[Q[i, j].item()][j] for j in range(num_blocks)]

for i in range(num_blocks)]

# Shulle columns.

Q = (torch.ones(num_blocks, 1).long() * torch.unsqueeze(torch.arange(num_blocks), dim=0)

+ torch.randint(low=-neighbour, high=neighbour, size=(num_blocks, num_blocks)))

Q = torch.argsort(Q, dim=1)

assert Q.size() == (num_blocks, num_blocks)

X = [[X[i][Q[i, j].item()] for j in range(num_blocks)]

for i in range(num_blocks)]

Y = torch.cat([torch.cat(row, dim=2) for row in X], dim=1)Get the basic information of video data

import cv2

video = cv2.VideoCapture(mp4_path)

height = int(video.get(cv2.CAP_PROP_FRAME_HEIGHT))

width = int(video.get(cv2.CAP_PROP_FRAME_WIDTH))

num_frames = int(video.get(cv2.CAP_PROP_FRAME_COUNT))

fps = int(video.get(cv2.CAP_PROP_FPS))

video.release()TSN Each paragraph (segment) Sample a video [3]

K = self._num_segments

if is_train:

if num_frames > K:

# Random index for each segment.

frame_indices = torch.randint(

high=num_frames // K, size=(K,), dtype=torch.long)

frame_indices += num_frames // K * torch.arange(K)

else:

frame_indices = torch.randint(

high=num_frames, size=(K - num_frames,), dtype=torch.long)

frame_indices = torch.sort(torch.cat((

torch.arange(num_frames), frame_indices)))[0]

else:

if num_frames > K:

# Middle index for each segment.

frame_indices = num_frames / K // 2

frame_indices += num_frames // K * torch.arange(K)

else:

frame_indices = torch.sort(torch.cat((

torch.arange(num_frames), torch.arange(K - num_frames))))[0]

assert frame_indices.size() == (K,)

return [frame_indices[i] for i in range(K)]extract ImageNet The convolution characteristics of a certain layer in the pre training model

# VGG-16 relu5-3 feature.

model = torchvision.models.vgg16(pretrained=True).features[:-1]

# VGG-16 pool5 feature.

model = torchvision.models.vgg16(pretrained=True).features

# VGG-16 fc7 feature.

model = torchvision.models.vgg16(pretrained=True)

model.classifier = torch.nn.Sequential(*list(model.classifier.children())[:-3])

# ResNet GAP feature.

model = torchvision.models.resnet18(pretrained=True)

model = torch.nn.Sequential(collections.OrderedDict(

list(model.named_children())[:-1]))

with torch.no_grad():

model.eval()

conv_representation = model(image)extract ImageNet The convolution characteristics of the pre training model

class FeatureExtractor(torch.nn.Module):

"""Helper class to extract several convolution features from the given

pre-trained model.

Attributes:

_model, torch.nn.Module.

_layers_to_extract, list<str> or set<str>

Example:

>>> model = torchvision.models.resnet152(pretrained=True)

>>> model = torch.nn.Sequential(collections.OrderedDict(

list(model.named_children())[:-1]))

>>> conv_representation = FeatureExtractor(

pretrained_model=model,

layers_to_extract={'layer1', 'layer2', 'layer3', 'layer4'})(image)

"""

def __init__(self, pretrained_model, layers_to_extract):

torch.nn.Module.__init__(self)

self._model = pretrained_model

self._model.eval()

self._layers_to_extract = set(layers_to_extract)

def forward(self, x):

with torch.no_grad():

conv_representation = []

for name, layer in self._model.named_children():

x = layer(x)

if name in self._layers_to_extract:

conv_representation.append(x)

return conv_representationOther pre training models

pretrained-models.pytorch

https://github.com/Cadene/pretrained-models.pytorch

Fine tune the full connection layer

model = torchvision.models.resnet18(pretrained=True)

for param in model.parameters():

param.requires_grad = False

model.fc = nn.Linear(512, 100) # Replace the last fc layer

optimizer = torch.optim.SGD(model.fc.parameters(), lr=1e-2, momentum=0.9, weight_decay=1e-4)Fine tune the full connection layer with a large learning rate , Small learning rate, fine-tuning volume layer

model = torchvision.models.resnet18(pretrained=True)

finetuned_parameters = list(map(id, model.fc.parameters()))

conv_parameters = (p for p in model.parameters() if id(p) not in finetuned_parameters)

parameters = [{'params': conv_parameters, 'lr': 1e-3},

{'params': model.fc.parameters()}]

optimizer = torch.optim.SGD(parameters, lr=1e-2, momentum=0.9, weight_decay=1e-4)5. model training

Common training and validation data preprocessing

among ToTensor The operation will PIL.Image Or in the shape of H×W×D, The range of values is [0, 255] Of np.ndarray Convert to shape D×H×W, The range of values is [0.0, 1.0] Of torch.Tensor.

train_transform = torchvision.transforms.Compose([

torchvision.transforms.RandomResizedCrop(size=224,

scale=(0.08, 1.0)),

torchvision.transforms.RandomHorizontalFlip(),

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize(mean=(0.485, 0.456, 0.406),

std=(0.229, 0.224, 0.225)),

])

val_transform = torchvision.transforms.Compose([

torchvision.transforms.Resize(256),

torchvision.transforms.CenterCrop(224),

torchvision.transforms.ToTensor(),

torchvision.transforms.Normalize(mean=(0.485, 0.456, 0.406),

std=(0.229, 0.224, 0.225)),

])Train the basic code framework

for t in epoch(80):

for images, labels in tqdm.tqdm(train_loader, desc='Epoch %3d' % (t + 1)):

images, labels = images.cuda(), labels.cuda()

scores = model(images)

loss = loss_function(scores, labels)

optimizer.zero_grad()

loss.backward()

optimizer.step()The marks are smooth (label smoothing)[4]

for images, labels in train_loader:

images, labels = images.cuda(), labels.cuda()

N = labels.size(0)

# C is the number of classes.

smoothed_labels = torch.full(size=(N, C), fill_value=0.1 / (C - 1)).cuda()

smoothed_labels.scatter_(dim=1, index=torch.unsqueeze(labels, dim=1), value=0.9)

score = model(images)

log_prob = torch.nn.functional.log_softmax(score, dim=1)

loss = -torch.sum(log_prob * smoothed_labels) / N

optimizer.zero_grad()

loss.backward()

optimizer.step()Mixup[5]

beta_distribution = torch.distributions.beta.Beta(alpha, alpha)

for images, labels in train_loader:

images, labels = images.cuda(), labels.cuda()

# Mixup images.

lambda_ = beta_distribution.sample([]).item()

index = torch.randperm(images.size(0)).cuda()

mixed_images = lambda_ * images + (1 - lambda_) * images[index, :]

# Mixup loss.

scores = model(mixed_images)

loss = (lambda_ * loss_function(scores, labels)

+ (1 - lambda_) * loss_function(scores, labels[index]))

optimizer.zero_grad()

loss.backward()

optimizer.step()L1 Regularization

l1_regularization = torch.nn.L1Loss(reduction='sum')

loss = ... # Standard cross-entropy loss

for param in model.parameters():

loss += lambda_ * torch.sum(torch.abs(param))

loss.backward()Don't do the bias L2 Regularization / Weight attenuation (weight decay)

bias_list = (param for name, param in model.named_parameters() if name[-4:] == 'bias')

others_list = (param for name, param in model.named_parameters() if name[-4:] != 'bias')

parameters = [{'parameters': bias_list, 'weight_decay': 0},

{'parameters': others_list}]

optimizer = torch.optim.SGD(parameters, lr=1e-2, momentum=0.9, weight_decay=1e-4)Gradient cut (gradient clipping)

torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=20)Calculation Softmax The accuracy of the output

score = model(images)

prediction = torch.argmax(score, dim=1)

num_correct = torch.sum(prediction == labels).item()

accuruacy = num_correct / labels.size(0)Visual model feed forward calculation chart

pytorchviz

https://github.com/szagoruyko/pytorchviz

Visual learning curve

Yes Facebook self-developed Visdom and Tensorboard( Still in the experimental stage ) Two choices .

facebookresearch/visdom

https://github.com/facebookresearch/visdom

Tensorboard

https://pytorch.org/docs/stable/tensorboard.html

# Example using Visdom.

vis = visdom.Visdom(env='Learning curve', use_incoming_socket=False)

assert self._visdom.check_connection()

self._visdom.close()

options = collections.namedtuple('Options', ['loss', 'acc', 'lr'])(

loss={'xlabel': 'Epoch', 'ylabel': 'Loss', 'showlegend': True},

acc={'xlabel': 'Epoch', 'ylabel': 'Accuracy', 'showlegend': True},

lr={'xlabel': 'Epoch', 'ylabel': 'Learning rate', 'showlegend': True})

for t in epoch(80):

tran(...)

val(...)

vis.line(X=torch.Tensor([t + 1]), Y=torch.Tensor([train_loss]),

name='train', win='Loss', update='append', opts=options.loss)

vis.line(X=torch.Tensor([t + 1]), Y=torch.Tensor([val_loss]),

name='val', win='Loss', update='append', opts=options.loss)

vis.line(X=torch.Tensor([t + 1]), Y=torch.Tensor([train_acc]),

name='train', win='Accuracy', update='append', opts=options.acc)

vis.line(X=torch.Tensor([t + 1]), Y=torch.Tensor([val_acc]),

name='val', win='Accuracy', update='append', opts=options.acc)

vis.line(X=torch.Tensor([t + 1]), Y=torch.Tensor([lr]),

win='Learning rate', update='append', opts=options.lr)Get the current learning rate

# If there is one global learning rate (which is the common case).

lr = next(iter(optimizer.param_groups))['lr']

# If there are multiple learning rates for different layers.

all_lr = []

for param_group in optimizer.param_groups:

all_lr.append(param_group['lr'])Learning rate decline

# Reduce learning rate when validation accuarcy plateau.

scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode='max', patience=5, verbose=True)

for t in range(0, 80):

train(...); val(...)

scheduler.step(val_acc)

# Cosine annealing learning rate.

scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=80)

# Reduce learning rate by 10 at given epochs.

scheduler = torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[50, 70], gamma=0.1)

for t in range(0, 80):

scheduler.step()

train(...); val(...)

# Learning rate warmup by 10 epochs.

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda t: t / 10)

for t in range(0, 10):

scheduler.step()

train(...); val(...)Save and load breakpoints

Note that in order to be able to resume training , We need to keep the state of both the model and optimizer , And the current number of training rounds .

# Save checkpoint.

is_best = current_acc > best_acc

best_acc = max(best_acc, current_acc)

checkpoint = {

'best_acc': best_acc,

'epoch': t + 1,

'model': model.state_dict(),

'optimizer': optimizer.state_dict(),

}

model_path = os.path.join('model', 'checkpoint.pth.tar')

torch.save(checkpoint, model_path)

if is_best:

shutil.copy('checkpoint.pth.tar', model_path)

# Load checkpoint.

if resume:

model_path = os.path.join('model', 'checkpoint.pth.tar')

assert os.path.isfile(model_path)

checkpoint = torch.load(model_path)

best_acc = checkpoint['best_acc']

start_epoch = checkpoint['epoch']

model.load_state_dict(checkpoint['model'])

optimizer.load_state_dict(checkpoint['optimizer'])

print('Load checkpoint at epoch %d.' % start_epoch)Computational accuracy 、 Precision rate (precision)、 Recall rate (recall)

# data['label'] and data['prediction'] are groundtruth label and prediction

# for each image, respectively.

accuracy = np.mean(data['label'] == data['prediction']) * 100

# Compute recision and recall for each class.

for c in range(len(num_classes)):

tp = np.dot((data['label'] == c).astype(int),

(data['prediction'] == c).astype(int))

tp_fp = np.sum(data['prediction'] == c)

tp_fn = np.sum(data['label'] == c)

precision = tp / tp_fp * 100

recall = tp / tp_fn * 1006. Model test

Calculate the precision of each category (precision)、 Recall rate (recall)、F1 And overall indicators

import sklearn.metrics

all_label = []

all_prediction = []

for images, labels in tqdm.tqdm(data_loader):

# Data.

images, labels = images.cuda(), labels.cuda()

# Forward pass.

score = model(images)

# Save label and predictions.

prediction = torch.argmax(score, dim=1)

all_label.append(labels.cpu().numpy())

all_prediction.append(prediction.cpu().numpy())

# Compute RP and confusion matrix.

all_label = np.concatenate(all_label)

assert len(all_label.shape) == 1

all_prediction = np.concatenate(all_prediction)

assert all_label.shape == all_prediction.shape

micro_p, micro_r, micro_f1, _ = sklearn.metrics.precision_recall_fscore_support(

all_label, all_prediction, average='micro', labels=range(num_classes))

class_p, class_r, class_f1, class_occurence = sklearn.metrics.precision_recall_fscore_support(

all_label, all_prediction, average=None, labels=range(num_classes))

# Ci,j = #{y=i and hat_y=j}

confusion_mat = sklearn.metrics.confusion_matrix(

all_label, all_prediction, labels=range(num_classes))

assert confusion_mat.shape == (num_classes, num_classes)Write various results into a spreadsheet

import csv

# Write results onto disk.

with open(os.path.join(path, filename), 'wt', encoding='utf-8') as f:

f = csv.writer(f)

f.writerow(['Class', 'Label', '# occurence', 'Precision', 'Recall', 'F1',

'Confused class 1', 'Confused class 2', 'Confused class 3',

'Confused 4', 'Confused class 5'])

for c in range(num_classes):

index = np.argsort(confusion_mat[:, c])[::-1][:5]

f.writerow([

label2class[c], c, class_occurence[c], '%4.3f' % class_p[c],

'%4.3f' % class_r[c], '%4.3f' % class_f1[c],

'%s:%d' % (label2class[index[0]], confusion_mat[index[0], c]),

'%s:%d' % (label2class[index[1]], confusion_mat[index[1], c]),

'%s:%d' % (label2class[index[2]], confusion_mat[index[2], c]),

'%s:%d' % (label2class[index[3]], confusion_mat[index[3], c]),

'%s:%d' % (label2class[index[4]], confusion_mat[index[4], c])])

f.writerow(['All', '', np.sum(class_occurence), micro_p, micro_r, micro_f1,

'', '', '', '', ''])7. PyTorch Other matters needing attention

Model definition

It is recommended that there be layers and confluences with parameters (pooling) Layer using torch.nn Module definition , The activation function directly uses torch.nn.functional.torch.nn Module and torch.nn.functional The difference is that ,torch.nn Module in the calculation of the underlying call torch.nn.functional, but torch.nn The module includes the parameters of this layer , You can also deal with training and testing two network states . Use torch.nn.functional Pay attention to the network state , Such as

def forward(self, x):

...

x = torch.nn.functional.dropout(x, p=0.5, training=self.training)model(x) Pre use model.train() and model.eval() Switch network status .

Do not need to calculate the gradient of the code block with torch.no_grad() Include .model.eval() and torch.no_grad() The difference is that ,model.eval() It is to switch the network to the test state , for example BN And random deactivation (dropout) Use different calculation methods during training and testing .torch.no_grad() It's closing PyTorch The automatic derivation mechanism of tensor , To reduce storage usage and accelerate Computing , The result obtained cannot be carried out loss.backward().

torch.nn.CrossEntropyLoss The input of does not need to go through Softmax.torch.nn.CrossEntropyLoss Equivalent to torch.nn.functional.log_softmax + torch.nn.NLLLoss.

loss.backward() Pre use optimizer.zero_grad() Clear the cumulative gradient .optimizer.zero_grad() and model.zero_grad() The effect is the same .

PyTorch Performance and debugging

torch.utils.data.DataLoader Try to set pin_memory=True, For very small data sets such as MNIST Set up pin_memory=False It's faster .num_workers We need to find the fastest value in the experiment .

use del Delete unused intermediate variables in time , save GPU Storage .

Use inplace Operation can save GPU Storage , Such as

x = torch.nn.functional.relu(x, inplace=True)Besides , You can also use torch.utils.checkpoint In forward propagation, only some intermediate results are retained to save GPU Storage use , The content required in back propagation is calculated from the nearest intermediate results .

Reduce CPU and GPU Data transfer between . For example, if you want to know a epoch Each of them mini-batch Of loss And accuracy , First, accumulate them in GPU Medium one epoch After the end of the transmission back together CPU It's better than every one mini-batch All once GPU To CPU The transmission is faster .

Use semi precision floating point numbers half() There will be a certain speed increase , Specific efficiency depends on GPU model . It is necessary to be careful of the stability problems caused by low numerical accuracy .

Use... Often assert tensor.size() == (N, D, H, W) As a means of debugging , Make sure that the tensor dimension is consistent with what you envision .

Except for tags y Outside , Try to use less one-dimensional tensor , Use n*1 Instead of , It can avoid some unexpected calculation results of one-dimensional tensor .

It takes time to count all parts of the code

with torch.autograd.profiler.profile(enabled=True, use_cuda=False) as profile:

...

print(profile)Or run... On the command line

python -m torch.utils.bottleneck main.py边栏推荐

- Is it profitable to host an Olympic Games?

- PG basics -- Logical Structure Management (transaction)

- 正则表达式收集

- The use method of string is startwith () - start with XX, endswith () - end with XX, trim () - delete spaces at both ends

- OAI 5g nr+usrp b210 installation and construction

- Le langage r visualise les relations entre plus de deux variables de classification (catégories), crée des plots Mosaiques en utilisant la fonction Mosaic dans le paquet VCD, et visualise les relation

- SAP Fiori应用索引大全工具和 SAP Fiori Tools 的使用介绍

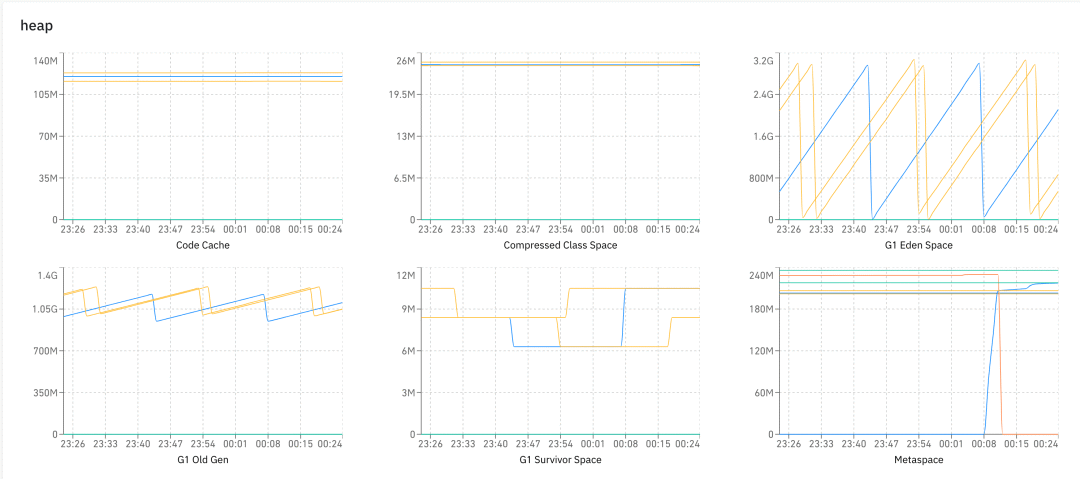

- The biggest pain point of traffic management - the resource utilization rate cannot go up

- Hardware development notes (10): basic process of hardware development, making a USB to RS232 module (9): create ch340g/max232 package library sop-16 and associate principle primitive devices

- How do I remove duplicates from the list- How to remove duplicates from a list?

猜你喜欢

Common English vocabulary that every programmer must master (recommended Collection)

1500萬員工輕松管理,雲原生數據庫GaussDB讓HR辦公更高效

Study notes of grain Mall - phase I: Project Introduction

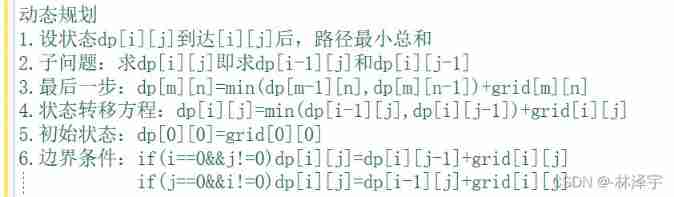

966 minimum path sum

Seven original sins of embedded development

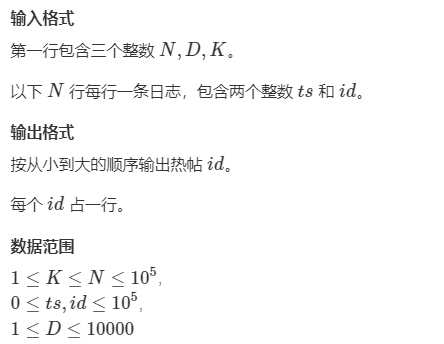

【滑动窗口】第九届蓝桥杯省赛B组:日志统计

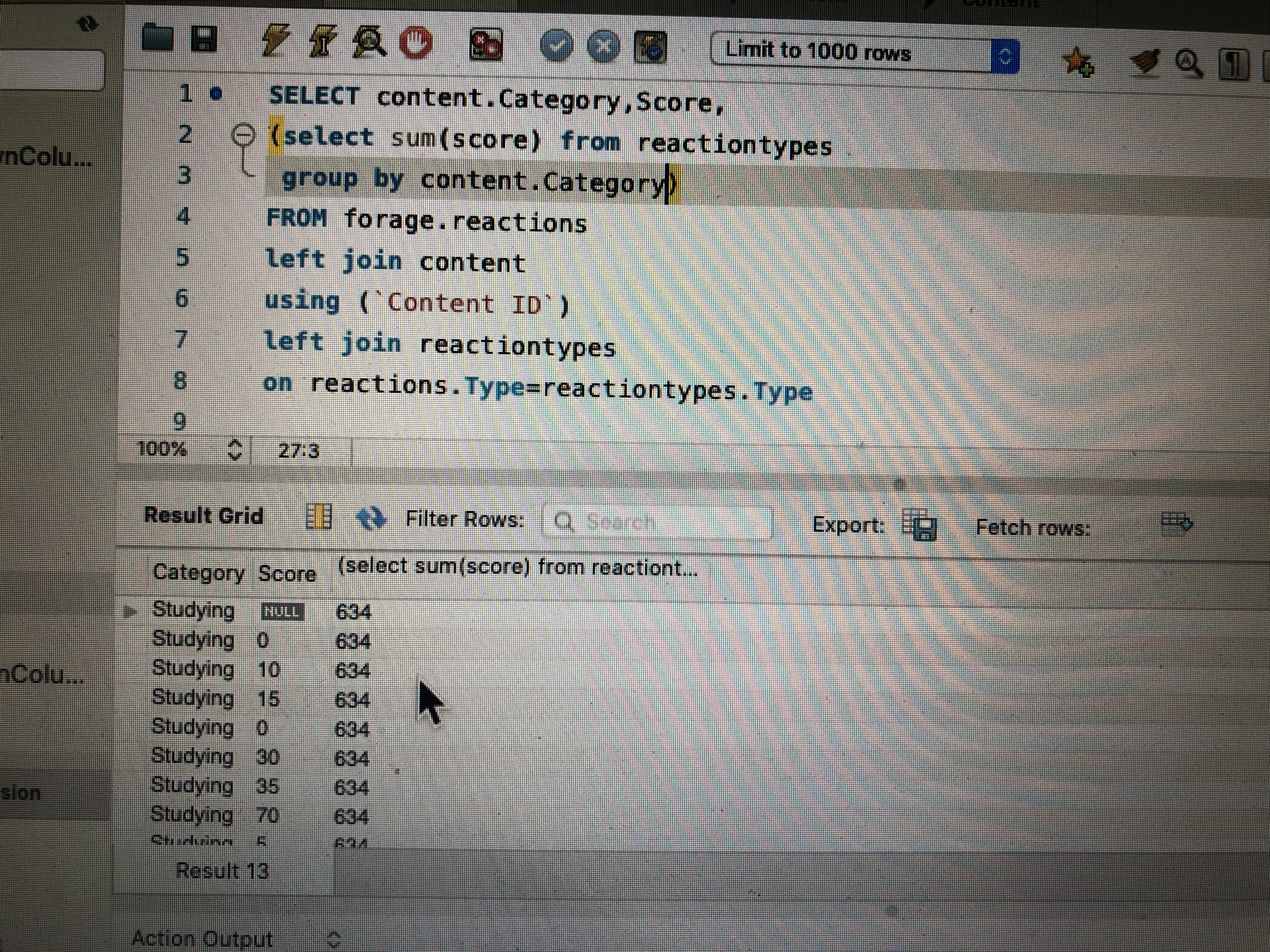

What is the problem with the SQL group by statement

Aike AI frontier promotion (7.6)

Performance test process and plan

监控界的最强王者,没有之一!

随机推荐

SAP Fiori应用索引大全工具和 SAP Fiori Tools 的使用介绍

Yyds dry goods count re comb this of arrow function

Interviewer: what is the internal implementation of ordered collection in redis?

OSPF多区域配置

SDL2来源分析7:演出(SDL_RenderPresent())

代理和反向代理

js通过数组内容来获取数组下标

【OpenCV 例程200篇】220.对图像进行马赛克处理

过程化sql在定义变量上与c语言中的变量定义有什么区别

【深度学习】PyTorch 1.12发布,正式支持苹果M1芯片GPU加速,修复众多Bug

Select data Column subset in table R [duplicate] - select subset of columns in data table R [duplicate]

Study notes of grain Mall - phase I: Project Introduction

js中,字符串和数组互转(二)——数组转为字符串的方法

Why do job hopping take more than promotion?

@Detailed differences among getmapping, @postmapping and @requestmapping, with actual combat code (all)

KDD 2022 | 通过知识增强的提示学习实现统一的对话式推荐

Thinking about agile development

Common English vocabulary that every programmer must master (recommended Collection)

Swagger UI tutorial API document artifact

C # use Oracle stored procedure to obtain result set instance