
Crawler text data cleaning

2022-07-31 01:38:00 【In the sea fishing】

def filter_chars(text):

"""过滤无用字符 :param text: 文本 """

# Find all non-Chinese in the text,English and numeric characters

add_chars = set(re.findall(r'[^\u4e00-\u9fa5a-zA-Z0-9]', text))

extra_chars = set(r"""!!¥$%*()()-——【】::“”";;'‘’,.?,.?、""")

add_chars = add_chars.difference(extra_chars)

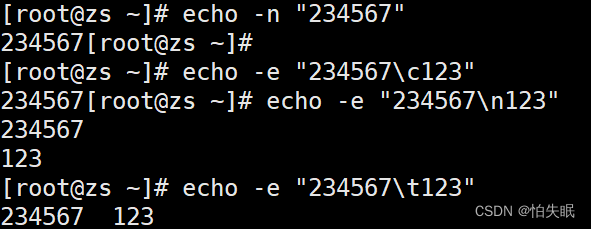

# tab 是/t

# Replace special character combinations

text = re.sub('{IMG:.?.?.?}', '', text)

text = re.sub(r'<!--IMG_\d+-->', '', text)

text = re.sub('(https?|ftp|file)://[-A-Za-z0-9+&@#/%?=~_|!:,.;]+[-A-Za-z0-9+&@#/%=~_|]', '', text) # Filter URLs

text = re.sub('<a[^>]*>', '', text).replace("</a>", "") # 过滤a标签

text = text.replace("</P>", "")

text = text.replace("nbsp;", "")

text = re.sub('<P[^>]*>', '', text, flags=re.IGNORECASE).replace("</p>", "") # 过滤P标签

text = re.sub('<strong[^>]*>', ',', text).replace("</strong>", "") # 过滤strong标签

text = re.sub('<br>', ',', text) # 过滤br标签

text = re.sub('www.[-A-Za-z0-9+&@#/%?=~_|!:,.;]+[-A-Za-z0-9+&@#/%=~_|]', '', text).replace("()", "") # 过滤www开头的网址

text = re.sub(r'\s', '', text) # 过滤不可见字符

text = re.sub('Ⅴ', 'V', text)

# 清洗

for c in add_chars:

text = text.replace(c, '')

return text
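The core of the function is the whitelist step: collect the characters that fall outside the Chinese, letter, and digit ranges, subtract the punctuation that should survive, and delete the rest. The following is a minimal sketch of that step in isolation. The helper name `strip_unlisted_chars`, the shortened `KEEP_PUNCTUATION` set, and the sample string are hypothetical and chosen only for illustration; they are not part of the original post.

```python
import re

# Hypothetical, shortened whitelist of punctuation to keep (assumption for this sketch).
KEEP_PUNCTUATION = set(",.?!")


def strip_unlisted_chars(text):
    """Remove every character that is not Chinese, alphanumeric, or whitelisted."""
    # Distinct characters outside the Chinese / English letter / digit ranges.
    candidates = set(re.findall(r'[^\u4e00-\u9fa5a-zA-Z0-9]', text))
    # Characters that are not explicitly whitelisted will be removed.
    to_remove = candidates - KEEP_PUNCTUATION
    for c in to_remove:
        text = text.replace(c, '')
    return text


sample = "数据清洗★ data, cleaning~ 2022!"
print(strip_unlisted_chars(sample))  # prints: 数据清洗data,cleaning2022!
```

Because the removal set is built from the input itself, the loop only issues replacements for characters that actually occur in the text. Note that spaces are removed as well, since whitespace is not in the whitelist.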
