
Unity shader global fog effect

2022-07-28 17:07:00 【Morita Rinko】

Depth texture

A depth texture stores high-precision depth values in the range [0,1], and these values are usually nonlinear.

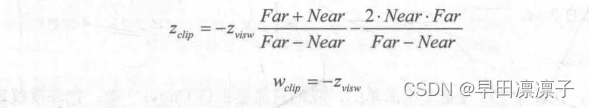

Depth value calculation

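During vertex transformation, a vertex ends up in clip space and, after the perspective divide, in normalized device coordinates (NDC). In the OpenGL-style convention used here, NDC is a cube spanning [-1,1] on every axis, so the z component in NDC directly gives a depth value $z_{ndc}$ in [-1,1].

Once we have $z_{ndc}$, we map it to [0,1]:

$$d = 0.5 \cdot z_{ndc} + 0.5$$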
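To see why the stored value is nonlinear, the mapping from a view-space distance to d can be evaluated numerically. This is a minimal Python sketch of the math only (not Unity code). It assumes the OpenGL-style perspective matrix with the camera looking down -z; the near and far values are arbitrary examples.

```python
def depth_buffer_value(distance, near, far):
    """Map a positive view-space distance to the [0,1] depth value.

    Assumes an OpenGL-style perspective matrix: the camera looks down -z,
    so the view-space z of a visible point is -distance.
    """
    z_view = -distance
    # Third and fourth rows of the perspective matrix applied to (x, y, z_view, 1)
    z_clip = -z_view * (far + near) / (far - near) - 2.0 * near * far / (far - near)
    w_clip = -z_view
    z_ndc = z_clip / w_clip        # perspective divide, result in [-1, 1]
    return 0.5 * z_ndc + 0.5       # remap to [0, 1]


near, far = 0.3, 1000.0
for distance in (0.3, 1.0, 10.0, 100.0, 500.0, 1000.0):
    print(f"distance {distance:7.1f} -> depth {depth_buffer_value(distance, near, far):.6f}")
```

With these values, a point only 10 units away already maps to a depth of about 0.97. Most of the [0,1] range is spent close to the camera.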

Reading the depth value

In Unity we don't need to compute depth values ourselves. We can read them from the depth texture.

First, set the camera's depthTextureMode from a script, for example camera.depthTextureMode |= DepthTextureMode.Depth; (the script later in this post does this in OnEnable).

Once this is set, a shader can access the depth texture through the _CameraDepthTexture variable.

To handle platform differences when sampling the depth texture, use the SAMPLE_DEPTH_TEXTURE macro:

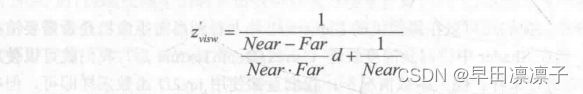

The sampled depth value is nonlinear, so it has to be linearized.

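We know the perspective projection matrix that transforms view space into clip space (OpenGL-style convention):

$$
M_{persp} = \begin{bmatrix} \frac{\cot(FOV/2)}{Aspect} & 0 & 0 & 0 \\ 0 & \cot(FOV/2) & 0 & 0 \\ 0 & 0 & -\frac{Far+Near}{Far-Near} & -\frac{2 \cdot Near \cdot Far}{Far-Near} \\ 0 & 0 & -1 & 0 \end{bmatrix}
$$

Transforming a view-space point $(x, y, z_{view}, 1)$ into clip space, we get:

$$
z_{clip} = -z_{view}\,\frac{Far+Near}{Far-Near} - \frac{2 \cdot Near \cdot Far}{Far-Near}, \qquad w_{clip} = -z_{view}
$$

Next, perform the perspective divide:

$$
z_{ndc} = \frac{z_{clip}}{w_{clip}} = \frac{Far+Near}{Far-Near} + \frac{2 \cdot Near \cdot Far}{(Far-Near)\,z_{view}}
$$

Substituting $z_{ndc} = 2d - 1$ (the inverse of $d = 0.5 \cdot z_{ndc} + 0.5$) and solving for $z_{view}$ gives the expression:

$$
z_{view} = \frac{1}{\frac{Far-Near}{Near \cdot Far}\,d - \frac{1}{Near}}
$$

The camera looks down the negative z axis in view space, so visible points have negative $z_{view}$. Taking the opposite gives a positive depth:

$$
z'_{view} = -z_{view} = \frac{1}{\frac{Near-Far}{Near \cdot Far}\,d + \frac{1}{Near}}
$$

Dividing by $Far$ maps it to a linear depth in [0,1]:

$$
z_{01} = \frac{z'_{view}}{Far} = \frac{1}{\frac{Near-Far}{Near}\,d + \frac{Far}{Near}}
$$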
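The linearization can be sanity-checked by mapping a distance to a depth value and then inverting it. This is a minimal Python sketch of the math only. It assumes the same OpenGL-style convention with the camera looking down -z; near and far are arbitrary example values.

```python
def buffer_depth(eye_depth, near, far):
    """Forward mapping: positive view-space distance -> [0,1] depth value."""
    z_view = -eye_depth                                    # camera looks down -z
    z_ndc = (far + near) / (far - near) + 2.0 * near * far / ((far - near) * z_view)
    return 0.5 * z_ndc + 0.5


def eye_depth_from_buffer(d, near, far):
    """Inverse mapping: [0,1] depth value -> positive view-space distance z'_view."""
    return 1.0 / ((near - far) / (near * far) * d + 1.0 / near)


def linear01_from_buffer(d, near, far):
    """The same distance divided by far, i.e. a linear depth in [0,1]."""
    return 1.0 / ((near - far) / near * d + far / near)


near, far = 0.3, 1000.0
for dist in (0.3, 2.0, 50.0, 500.0, 1000.0):
    d = buffer_depth(dist, near, far)
    print(dist, d, eye_depth_from_buffer(d, near, far), linear01_from_buffer(d, near, far))
```

The inversion loses some precision as d approaches 1, because the denominator becomes a difference of two nearly equal numbers. This is one reason far-away depth values are less precise.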

In practice, Unity provides built-in functions for this conversion:

LinearEyeDepth converts a depth texture sample into a linear depth in view space, measured along the camera's viewing direction.

Linear01Depth converts a depth texture sample into a linear depth in [0,1]: the view-space depth divided by the far-plane distance.
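Unity documents _ZBufferParams, for a conventional (non-reversed) depth buffer, as x = 1 - Far/Near, y = Far/Near, z = x/Far, w = y/Far. LinearEyeDepth and Linear01Depth evaluate 1/(z·d + w) and 1/(x·d + y) respectively. The Python sketch below reproduces that arithmetic outside Unity to show it matches the hand derivation. On platforms with a reversed depth buffer, Unity fills _ZBufferParams with different values, and this sketch does not model that case.

```python
def zbuffer_params(near, far):
    """_ZBufferParams for a non-reversed depth buffer: (x, y, z, w)."""
    x = 1.0 - far / near
    y = far / near
    return x, y, x / far, y / far


def linear_eye_depth(d, params):
    # Mirrors LinearEyeDepth: 1 / (_ZBufferParams.z * d + _ZBufferParams.w)
    _, _, z, w = params
    return 1.0 / (z * d + w)


def linear01_depth(d, params):
    # Mirrors Linear01Depth: 1 / (_ZBufferParams.x * d + _ZBufferParams.y)
    x, y, _, _ = params
    return 1.0 / (x * d + y)


p = zbuffer_params(0.3, 1000.0)
print(linear_eye_depth(0.0, p), linear_eye_depth(1.0, p))   # near and far distances
print(linear01_depth(0.0, p), linear01_depth(1.0, p))       # near/far ratio and 1
```

Expanding the parameters shows the link: z = 1/Far - 1/Near = (Near - Far)/(Near·Far) and w = 1/Near, which are exactly the coefficients in the expression for z'_view.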

Global fog effect

Result

Figure: global fog with noise

Implementation idea

We use the depth value to reconstruct the world-space position of each pixel, and then use that position to simulate the global fog.

- First, interpolate the view-frustum corner rays in image space to get the direction from the camera to the pixel.

- Multiply the interpolated ray by the linear view-space depth to get the offset of the point from the camera.

- Add this offset to the camera's world-space position to obtain the world coordinates of the point.

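The code is as follows:

float3 worldPos = _WorldSpaceCameraPos + linearDepth * i.interpolatedRay.xyz;

_WorldSpaceCameraPos is a built-in shader variable, and linearDepth is obtained from the depth texture with LinearEyeDepth.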
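LinearEyeDepth returns the depth along the camera's forward axis, not the straight-line distance. The ray therefore has to be scaled so that its forward component is 1; then camera position + depth × ray lands exactly on the surface point. This is a tiny Python sketch of that reconstruction step using plain tuples. The camera position, forward axis and surface point are made-up example values.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reconstruct_world_pos(camera_pos, linear_depth, interpolated_ray):
    """worldPos = _WorldSpaceCameraPos + linearDepth * interpolatedRay, per component."""
    return tuple(c + linear_depth * r for c, r in zip(camera_pos, interpolated_ray))


# Hypothetical setup: camera at (0, 1, -5) looking along world +z.
camera_pos = (0.0, 1.0, -5.0)
forward = (0.0, 0.0, 1.0)
point = (2.0, 0.5, 7.0)                      # a surface point seen by some pixel

offset = tuple(p - c for p, c in zip(point, camera_pos))
eye_depth = dot(offset, forward)             # depth along forward, as LinearEyeDepth gives
ray = tuple(o / eye_depth for o in offset)   # scaled so that dot(ray, forward) == 1
print(eye_depth, ray, reconstruct_world_pos(camera_pos, eye_depth, ray))
```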

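Computing interpolatedRay

interpolatedRay is obtained by interpolating four specific vectors, one for each corner of the near clip plane.

First, we compute the vectors along the up and right directions of the near clip plane:

$$halfHeight = Near \cdot \tan\left(\frac{FOV}{2}\right)$$

$$toTop = camera.up \cdot halfHeight, \qquad toRight = camera.right \cdot halfHeight \cdot aspect$$

Using these known vectors, we express the vectors from the camera to the four corners:

$$TL = camera.forward \cdot Near + toTop - toRight, \qquad TR = camera.forward \cdot Near + toTop + toRight$$

$$BL = camera.forward \cdot Near - toTop - toRight, \qquad BR = camera.forward \cdot Near - toTop + toRight$$

The depth value is measured along the camera's forward axis, not as the straight-line distance to the point. Consider a point on the ray through the top-left corner whose linear depth is $depth$. By similar triangles, its distance from the camera is:

$$dist = \frac{|TL|}{Near} \cdot depth$$

We extract the scale factor. The view frustum is symmetric, so all four corner vectors have the same length and one factor serves every corner:

$$scale = \frac{|TL|}{Near} = \frac{|TR|}{Near} = \frac{|BL|}{Near} = \frac{|BR|}{Near}$$

The vectors corresponding to the four corners are then:

$$Ray_{TL} = \frac{TL}{|TL|} \cdot scale, \quad Ray_{TR} = \frac{TR}{|TR|} \cdot scale, \quad Ray_{BL} = \frac{BL}{|BL|} \cdot scale, \quad Ray_{BR} = \frac{BR}{|BR|} \cdot scale$$

Interpolating these four vectors across the screen gives interpolatedRay.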
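The corner-ray construction can be checked outside Unity. Below is a minimal Python sketch that mirrors the C# script's math with plain tuples; the camera basis, FOV, near plane and aspect are made-up example values. Explicit bilinear interpolation stands in for the rasterizer's interpolation across the full-screen quad. Each scaled corner vector equals corner / Near, so every interpolated ray has a forward component of exactly 1. That is why multiplying it by the linear eye depth gives the correct offset.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def corner_rays(forward, right, up, fov_deg, near, aspect):
    """Mirror of the script: returns rays in row order (BL, BR, TR, TL)."""
    half_height = near * math.tan(math.radians(fov_deg) * 0.5)
    to_right = tuple(c * half_height * aspect for c in right)
    to_top = tuple(c * half_height for c in up)

    def corner(sx, sy):  # sx, sy in {-1, +1}: left/right, bottom/top
        return tuple(f * near + sy * t + sx * r for f, t, r in zip(forward, to_top, to_right))

    scale = math.sqrt(sum(c * c for c in corner(-1, +1))) / near   # |TL| / Near
    order = ((-1, -1), (+1, -1), (+1, +1), (-1, +1))               # BL, BR, TR, TL
    return [tuple(c * scale for c in normalize(corner(sx, sy))) for sx, sy in order]


def interpolate_ray(rays, u, v):
    """Bilinear interpolation; (u, v) in [0,1]^2 with v = 0 at the bottom."""
    bl, br, tr, tl = rays
    bottom = tuple((1 - u) * a + u * b for a, b in zip(bl, br))
    top = tuple((1 - u) * a + u * b for a, b in zip(tl, tr))
    return tuple((1 - v) * a + v * b for a, b in zip(bottom, top))


forward, right, up = (0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
rays = corner_rays(forward, right, up, fov_deg=60.0, near=0.3, aspect=16 / 9)
for u, v in ((0.0, 0.0), (0.5, 0.5), (0.25, 0.8)):
    ray = interpolate_ray(rays, u, v)
    print((u, v), ray, sum(a * b for a, b in zip(ray, forward)))
```

The single scale factor taken from the top-left corner works only because the default projection is symmetric. An off-center projection would need a per-corner factor.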

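Computing the fog

There are three common formulas for the fog factor f, where z is the distance from the camera:

- Linear: $f = \frac{d_{max} - |z|}{d_{max} - d_{min}}$, where $d_{min}$ and $d_{max}$ are the minimum and maximum distances affected by the fog.

- Exponential: $f = e^{-d \cdot |z|}$, where $d$ controls the fog density.

- Exponential squared: $f = e^{-(d \cdot |z|)^2}$.

This example instead uses a linear fog based on the world-space height y, $f = \frac{H_{end} - y}{H_{end} - H_{start}}$, and uses a noise texture to make the fog uneven.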
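The three distance-based formulas are easy to compare side by side. This is a short Python sketch of them; the distances, range and density are arbitrary example values. In the usual convention, for example OpenGL's fixed-function fog, f is the weight of the original color, so f = 1 means no fog and the result is typically clamped to [0,1]. The height fog in this post's shader instead uses its factor as the weight of the fog color.

```python
import math


def fog_linear(z, d_min, d_max):
    """Linear fog factor: (d_max - |z|) / (d_max - d_min)."""
    return (d_max - abs(z)) / (d_max - d_min)


def fog_exp(z, density):
    """Exponential fog factor: e^(-density * |z|)."""
    return math.exp(-density * abs(z))


def fog_exp2(z, density):
    """Exponential-squared fog factor: e^(-(density * |z|)^2)."""
    return math.exp(-(density * abs(z)) ** 2)


def clamp01(f):
    return min(max(f, 0.0), 1.0)


for z in (0.0, 10.0, 30.0, 60.0):
    print(z, clamp01(fog_linear(z, 10.0, 50.0)), fog_exp(z, 0.05), fog_exp2(z, 0.05))
```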

Script

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

// PostEffectsBase is a helper base class (not shown in this post)
// that provides CheckShaderAndCreateMaterial.
public class FogWithNoise : PostEffectsBase
{
    public Shader fogShader;
    private Material fogMaterial;

    public Material material
    {
        get
        {
            fogMaterial = CheckShaderAndCreateMaterial(fogShader, fogMaterial);
            return fogMaterial;
        }
    }

    private Camera myCamera;

    public Camera camera
    {
        get
        {
            if (myCamera == null)
            {
                myCamera = GetComponent<Camera>();
            }
            return myCamera;
        }
    }

    private Transform myCameraTransform;

    public Transform cameraTransform
    {
        get
        {
            if (myCameraTransform == null)
            {
                myCameraTransform = camera.transform;
            }
            return myCameraTransform;
        }
    }

    // Fog density
    [Range(0.0f, 3.0f)]
    public float fogDensity = 1.0f;

    // Fog color
    public Color fogColor = Color.white;

    // World-space height where the fog starts
    public float fogStart = 0.0f;

    // World-space height where the fog ends
    public float fogEnd = 2.0f;

    // Noise texture
    public Texture noiseTexture;

    // Scroll speed of the noise texture along x
    [Range(-0.5f, 0.5f)]
    public float fogXSpeed = 0.1f;

    // Scroll speed of the noise texture along y
    [Range(-0.5f, 0.5f)]
    public float fogYSpeed = 0.1f;

    // How strongly the noise affects the fog; 0 means the fog is not affected by noise
    [Range(0.0f, 3.0f)]
    public float noiseAmount = 1.0f;

    // Ask the camera to generate a depth texture
    private void OnEnable()
    {
        camera.depthTextureMode |= DepthTextureMode.Depth;
    }

    private void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        if (material != null)
        {
            // Matrix that stores the four corner rays, one per row
            Matrix4x4 frustumCorners = Matrix4x4.identity;

            // Camera parameters needed for the calculation
            float fov = camera.fieldOfView;
            float near = camera.nearClipPlane;
            float aspect = camera.aspect;

            // Half the height of the near clip plane
            float halfHeight = near * Mathf.Tan(fov * 0.5f * Mathf.Deg2Rad);
            // Vectors from the center of the near plane to its right and top edges
            Vector3 toRight = cameraTransform.right * halfHeight * aspect;
            Vector3 toTop = cameraTransform.up * halfHeight;

            // Top left
            Vector3 topLeft = cameraTransform.forward * near + toTop - toRight;
            // Scale factor |TL| / near, shared by all four corners of a symmetric frustum
            float scale = topLeft.magnitude / near;
            topLeft.Normalize();
            topLeft *= scale;

            // Top right
            Vector3 topRight = cameraTransform.forward * near + toRight + toTop;
            topRight.Normalize();
            topRight *= scale;

            // Bottom left
            Vector3 bottomLeft = cameraTransform.forward * near - toTop - toRight;
            bottomLeft.Normalize();
            bottomLeft *= scale;

            // Bottom right
            Vector3 bottomRight = cameraTransform.forward * near + toRight - toTop;
            bottomRight.Normalize();
            bottomRight *= scale;

            // Store the rays in the order the shader expects: BL, BR, TR, TL
            frustumCorners.SetRow(0, bottomLeft);
            frustumCorners.SetRow(1, bottomRight);
            frustumCorners.SetRow(2, topRight);
            frustumCorners.SetRow(3, topLeft);

            // Pass the properties; the name must match the shader's _FrustumCornersRay
            material.SetMatrix("_FrustumCornersRay", frustumCorners);
            // Not used by the shader below
            material.SetMatrix("_ViewProjectionInverseMatrix", (camera.projectionMatrix * camera.worldToCameraMatrix).inverse);
            material.SetFloat("_FogDensity", fogDensity);
            material.SetColor("_FogColor", fogColor);
            material.SetFloat("_FogStart", fogStart);
            material.SetFloat("_FogEnd", fogEnd);
            material.SetTexture("_NoiseTex", noiseTexture);
            material.SetFloat("_FogXSpeed", fogXSpeed);
            material.SetFloat("_FogYSpeed", fogYSpeed);
            material.SetFloat("_NoiseAmount", noiseAmount);

            Graphics.Blit(source, destination, material);
        }
        else
        {
            Graphics.Blit(source, destination);
        }
    }
}
```

Shader

```hlsl
Shader "Custom/Chapter15-FogWithNoise"
{
    Properties
    {
        _MainTex ("Base (RGB)", 2D) = "white" {}
        _FogDensity ("Fog Density", Float) = 1.0
        _FogColor ("Fog Color", Color) = (1, 1, 1, 1)
        _FogStart ("Fog Start", Float) = 0.0
        _FogEnd ("Fog End", Float) = 1.0
        _NoiseTex ("Noise Texture", 2D) = "white" {}
        _FogXSpeed ("Fog Horizontal Speed", Float) = 0.1
        _FogYSpeed ("Fog Vertical Speed", Float) = 0.1
        _NoiseAmount ("Noise Amount", Float) = 1
    }
    SubShader
    {
        CGINCLUDE

        #include "UnityCG.cginc"

        sampler2D _MainTex;
        half4 _MainTex_TexelSize;
        half _FogDensity;
        fixed4 _FogColor;
        half _FogStart;
        half _FogEnd;
        sampler2D _NoiseTex;
        half _FogXSpeed;
        half _FogYSpeed;
        half _NoiseAmount;
        sampler2D _CameraDepthTexture;
        float4x4 _FrustumCornersRay;

        struct v2f
        {
            float4 pos : SV_POSITION;
            // Texture coordinates for the screen image and the noise texture
            half2 uv : TEXCOORD0;
            // Texture coordinates for the depth texture
            half2 uv_depth : TEXCOORD1;
            // Corner ray, interpolated across the screen
            float4 interpolatedRay : TEXCOORD2;
        };

        v2f vert(appdata_img v)
        {
            v2f o;
            o.pos = UnityObjectToClipPos(v.vertex);
            o.uv = v.texcoord;
            o.uv_depth = v.texcoord;

            #if UNITY_UV_STARTS_AT_TOP
            if (_MainTex_TexelSize.y < 0)
            {
                o.uv_depth.y = 1 - o.uv_depth.y;
            }
            #endif

            // Determine which corner this vertex belongs to (0 = BL, 1 = BR, 2 = TR, 3 = TL)
            int index = 0;
            if (v.texcoord.x < 0.5 && v.texcoord.y < 0.5) {
                index = 0;
            } else if (v.texcoord.x > 0.5 && v.texcoord.y < 0.5) {
                index = 1;
            } else if (v.texcoord.x > 0.5 && v.texcoord.y > 0.5) {
                index = 2;
            } else if (v.texcoord.x < 0.5 && v.texcoord.y > 0.5) {
                index = 3;
            }

            #if UNITY_UV_STARTS_AT_TOP
            if (_MainTex_TexelSize.y < 0)
            {
                // The image is flipped vertically: swap bottom and top corners
                index = 3 - index;
            }
            #endif

            // Fetch the direction vector for this corner from _FrustumCornersRay
            o.interpolatedRay = _FrustumCornersRay[index];
            return o;
        }

        fixed4 frag(v2f i) : SV_Target
        {
            // Linear depth in view space
            float linearDepth = LinearEyeDepth(SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, i.uv_depth));
            // Reconstructed world-space position
            float3 worldPos = _WorldSpaceCameraPos + linearDepth * i.interpolatedRay.xyz;

            // Scroll offset of the noise texture
            float2 speed = _Time.y * float2(_FogXSpeed, _FogYSpeed);
            // Sample the noise texture and remap it to [-0.5, 0.5] * _NoiseAmount
            float noise = (tex2D(_NoiseTex, i.uv + speed).r - 0.5) * _NoiseAmount;

            // Linear fog factor from the world-space height y
            float fogDensity = (_FogEnd - worldPos.y) / (_FogEnd - _FogStart);
            // Scale by the density, perturb it with the noise, and clamp to [0, 1]
            fogDensity = saturate(fogDensity * _FogDensity * (1 + noise));

            fixed4 finalColor = tex2D(_MainTex, i.uv);
            // Blend the original color toward the fog color by the fog factor
            finalColor.rgb = lerp(finalColor.rgb, _FogColor.rgb, fogDensity);
            return finalColor;
        }

        ENDCG

        Pass
        {
            ZTest Always Cull Off ZWrite Off

            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            ENDCG
        }
    }
    FallBack Off
}
```
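For a single pixel, the fog part of the fragment shader reduces to a few lines of arithmetic. The sketch below reproduces it in Python with hand-written helpers that behave like HLSL's saturate and lerp. The colors, heights and the noise sample are made-up inputs; the noise sample stands in for tex2D(_NoiseTex, ...).r. It illustrates the math only and does not replace the shader.

```python
def saturate(x):
    """Clamp to [0, 1], like HLSL saturate."""
    return min(max(x, 0.0), 1.0)


def lerp(a, b, t):
    """Component-wise a + (b - a) * t, like HLSL lerp."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def fog_pixel(color, fog_color, world_y, fog_start, fog_end,
              fog_density, noise_sample, noise_amount):
    """Mirror of frag(): height fog perturbed by a noise sample in [0, 1]."""
    noise = (noise_sample - 0.5) * noise_amount
    f = (fog_end - world_y) / (fog_end - fog_start)
    f = saturate(f * fog_density * (1.0 + noise))
    return lerp(color, fog_color, f)


original = (0.2, 0.4, 0.6)
white = (1.0, 1.0, 1.0)
for y in (-1.0, 0.0, 1.0, 2.0, 3.0):
    print(y, fog_pixel(original, white, y, 0.0, 2.0, 1.0, 0.5, 1.0))
```

Without the saturate, pixels above the fog end would get a negative factor and pixels below the fog start a factor above 1. lerp would then extrapolate past both colors.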

边栏推荐

- Easypoi --- excel file export

- Simple addition, deletion, modification and query of commodity information

- [deep learning]: day 5 of pytorch introduction to project practice: realize softmax regression from 0 to 1 (including source code)

- 结构化设计的概要与原理--模块化

- parseJson

- Comprehensively design an oppe homepage -- after sales service of the page

- Games101-assignment05 ray tracing - rays intersect triangles

- TCP handshake, waving, time wait connection reset and other records

- 我该如何理解工艺

- Alibaba cloud - Wulin headlines - site building expert competition

猜你喜欢

Unity shader transparent effect

Re13: read the paper gender and racial stereotype detection in legal opinion word embeddings

Text filtering skills

【深度学习】:《PyTorch入门到项目实战》第二天:从零实现线性回归(含详细代码)

PostgreSQL weekly news - July 20, 2022

Ruoyi's solution to error reporting after integrating flyway

ERROR: transport library not found: dt_ socket

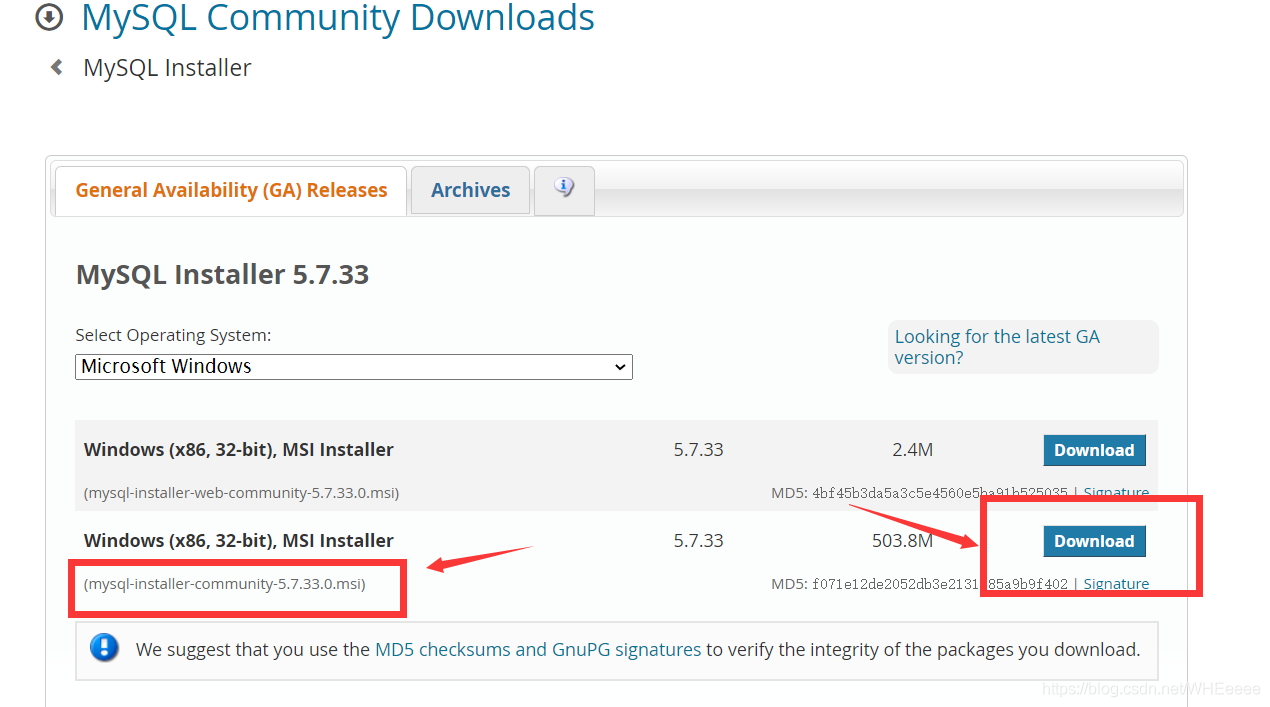

MySQL installation tutorial

【深度学习】:《PyTorch入门到项目实战》第九天:Dropout实现(含源码)

负整数及浮点数的二进制表示

随机推荐

Interesting kotlin 0x08:what am I

[deep learning]: day 5 of pytorch introduction to project practice: realize softmax regression from 0 to 1 (including source code)

SUSE CEPH add nodes, reduce nodes, delete OSD disks and other operations – storage6

Unity shader transparent effect

Efficiency comparison of three methods for obtaining timestamp

Huawei mate 40 series exposure: large curvature hyperboloid screen, 5nm kylin 1020 processor! There will also be a version of Tianji 1000+

Unity3d simple implementation of water surface shader

Oracle table partition

Technology sharing | MySQL shell customized deployment MySQL instance

[deep learning]: day 1 of pytorch introduction to project practice: data operation and automatic derivation

技术分享 | MySQL Shell 定制化部署 MySQL 实例

Ugui learning notes (VI) get the information of the clicked UI

HTAP是有代价的

Is smart park the trend of future development?

MD5 encryption verification

Re10: are we really making much progress? Revisiting, benchmarking, and refining heterogeneous gr

mysql 最大建议行数2000w,靠谱吗?

Implementation of transfer business

Easypoi --- excel file export

【深度学习】:《PyTorch入门到项目实战》第一天:数据操作和自动求导