
Multi-camera data collection based on Kinect Azure (II)

2022-06-22 07:02:00 【GaryW666】


In Multi-camera data acquisition based on Kinect Azure (I), I used a dual-camera setup as an example to introduce multi-camera data acquisition with Kinect Azure. The process has four parts: data collection, device synchronization, device calibration, and data fusion. That article showed how to acquire depth data, color data, and colored point cloud data. This article continues with device synchronization.

As usual, here are the reference links first:

https://docs.microsoft.com/zh-cn/azure/Kinect-dk/multi-camera-sync

https://github.com/microsoft/Azure-Kinect-Sensor-SDK/blob/develop/examples/green_screen/MultiDeviceCapturer.h

The ultimate goal of multi-camera data acquisition is to fuse the data into a good point cloud model, and device synchronization is the most basic requirement for that. Synchronization means making every device capture data at the same moment; only when the devices are precisely synchronized can the point clouds from the different devices be fused cleanly. There are two kinds of synchronization: intra-device and inter-device. Intra-device synchronization refers to synchronizing a device's depth sensor with its color sensor, and it is configured through the depth_delay_off_color_usec property. Inter-device synchronization can be done in hardware or in software; for the hardware method, see the first link above. This article focuses on how to synchronize two devices in software.

I. Preparation

1. Configure which device is the master and which is the subordinate (the wired_sync_mode setting);

2. Start all subordinate devices first, then start the master device (both of these points are covered in the previous article of this series);

3. Set subordinate_delay_off_master_usec to 0 on the devices;

4. Set depth_delay_off_color_usec to 80 on one device and -80 on the other. To prevent the depth sensors of multiple devices from interfering with each other, their depth captures must be offset from one another by 160 μs or more; with a subordinate delay of 0, these values place the two depth captures 80 - (-80) = 160 μs apart;

5. For precise timing, the color camera's exposure time must be set manually, because automatic exposure causes synchronized devices to drift out of sync faster. Use k4a_device_set_color_control to switch the exposure time to manual mode, and call k4a_device_set_color_control again to switch the power-line frequency control to manual mode as well. The values I use are the same as those in the example linked above.
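The timing arithmetic in steps 3 and 4 can be checked with a short sketch. It only models plain numbers and does not call the Azure Kinect SDK; DeviceTiming and depth_captures_separated are hypothetical names, and the 160 μs minimum is the figure quoted above. A device's depth sensor fires at subordinate_delay_off_master_usec + depth_delay_off_color_usec relative to the master's color capture.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Sequence

MIN_DEPTH_SPACING_USEC = 160  # minimum spacing between depth captures, from the text


@dataclass
class DeviceTiming:
    """Hypothetical holder for the two timing fields set in steps 3 and 4."""
    name: str
    subordinate_delay_off_master_usec: int
    depth_delay_off_color_usec: int

    def depth_offset_usec(self) -> int:
        # When this device's depth sensor fires, relative to the master's color capture.
        return self.subordinate_delay_off_master_usec + self.depth_delay_off_color_usec


def depth_captures_separated(devices: Sequence[DeviceTiming]) -> bool:
    """True if every pair of devices fires its depth sensor at least 160 us apart."""
    return all(
        abs(a.depth_offset_usec() - b.depth_offset_usec()) >= MIN_DEPTH_SPACING_USEC
        for a, b in combinations(devices, 2)
    )


devices = [
    DeviceTiming("master", subordinate_delay_off_master_usec=0, depth_delay_off_color_usec=80),
    DeviceTiming("subordinate", subordinate_delay_off_master_usec=0, depth_delay_off_color_usec=-80),
]
for d in devices:
    print(f"{d.name}: depth at {d.depth_offset_usec():+d} us")
print("separated:", depth_captures_separated(devices))
```

A third device with depth_delay_off_color_usec = 0 would sit only 80 μs from each of the other two and fail this check, so with more devices the offsets have to be spread further apart.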

II. Implementation and code

Synchronization is achieved by comparing image timestamps and recapturing on whichever device lags. The idea is as follows:

1. The master and subordinate devices each capture one frame, and the color image is taken from each capture (depth images also work);

2. Use k4a_image_get_device_timestamp_usec to read the timestamp of each image;

3. Calculate the expected timestamp:

Expected timestamp = master image timestamp + subordinate_delay_off_master_usec

(if depth images are used, also add the subordinate device's depth_delay_off_color_usec);

4. Compare the subordinate image's timestamp with the expected timestamp, where difference = subordinate timestamp - expected timestamp:

1) If the difference is less than the negative of the threshold, the subordinate's timestamp lags behind: the subordinate captures again, its new timestamp is read, and the process returns to step 3;

2) If the difference is greater than the threshold, the master's timestamp lags behind: the master captures again, its new timestamp is read, and the process returns to step 3;

3) If the absolute value of the difference is within the threshold, the two devices are synchronized.

When synchronizing on color images, the threshold is set to 100 μs; when synchronizing on depth images, it is set to 260 μs.
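Steps 3 and 4, together with the two thresholds, can be expressed as two small functions. This is a sketch on plain integer timestamps in microseconds (the unit k4a_image_get_device_timestamp_usec reports); it does not call the SDK, and expected_timestamp_usec, compare and SyncAction are hypothetical names.

```python
from enum import Enum

COLOR_THRESHOLD_USEC = 100  # threshold when syncing on color images (from the text)
DEPTH_THRESHOLD_USEC = 260  # threshold when syncing on depth images (from the text)


class SyncAction(Enum):
    RECAPTURE_SUBORDINATE = "subordinate lags"
    RECAPTURE_MASTER = "master lags"
    SYNCED = "synchronized"


def expected_timestamp_usec(master_ts_usec: int, subordinate_delay_off_master_usec: int,
                            depth_delay_off_color_usec: int = 0, use_depth: bool = False) -> int:
    """Step 3: expected subordinate timestamp derived from the master image timestamp."""
    expected = master_ts_usec + subordinate_delay_off_master_usec
    if use_depth:
        # Only when comparing depth images: add the subordinate's depth/color offset.
        expected += depth_delay_off_color_usec
    return expected


def compare(sub_ts_usec: int, expected_usec: int, threshold_usec: int) -> SyncAction:
    """Step 4: decide which device, if any, has to capture again."""
    diff = sub_ts_usec - expected_usec
    if diff < -threshold_usec:
        return SyncAction.RECAPTURE_SUBORDINATE
    if diff > threshold_usec:
        return SyncAction.RECAPTURE_MASTER
    return SyncAction.SYNCED  # |diff| <= threshold


master_ts = 1_000_000  # made-up master color timestamp, in microseconds
expected = expected_timestamp_usec(master_ts, subordinate_delay_off_master_usec=0)
print(compare(1_000_050, expected, COLOR_THRESHOLD_USEC).name)  # SYNCED
print(compare(966_667, expected, COLOR_THRESHOLD_USEC).name)    # RECAPTURE_SUBORDINATE
```

A difference exactly equal to the threshold counts as synchronized here; the text leaves that boundary case open, so treat it as a choice of this sketch.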

At this point the two devices are synchronized, but they may still drift out of sync later (although I have not observed this so far). So, to keep the program correct, it is safer to call the synchronization function every time a frame is collected. Also be careful: every image obtained inside the synchronization function must be released promptly (k4a_image_release, and k4a_capture_release for captures), otherwise memory usage will explode once this runs in a loop!!! Don't ask me how I know…
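The per-frame loop and the release rule can be illustrated with a small simulation. FakeDevice and get_synchronized_pair are hypothetical; no SDK calls are made, and the live_frames counter only mimics the bookkeeping that k4a_image_release and k4a_capture_release take care of in real code. The frame period and start offsets are made-up numbers.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple

THRESHOLD_USEC = 100  # color-image threshold quoted in the text


@dataclass
class FakeDevice:
    """Hypothetical stand-in for a k4a device: hands out pre-made device timestamps."""
    timestamps: Iterator[int]
    live_frames: int = 0  # frames acquired but not yet released

    def capture(self) -> int:
        # Plays the role of acquiring a capture/image from the real device.
        self.live_frames += 1
        return next(self.timestamps)

    def release(self) -> None:
        # Plays the role of k4a_image_release / k4a_capture_release.
        self.live_frames -= 1


def get_synchronized_pair(master: FakeDevice, sub: FakeDevice,
                          subordinate_delay_off_master_usec: int = 0) -> Tuple[int, int]:
    """Recapture on whichever device lags until the timestamps agree.

    Every discarded frame is released immediately; the returned pair is
    still held and must be released by the caller.
    """
    m_ts, s_ts = master.capture(), sub.capture()
    while True:
        diff = s_ts - (m_ts + subordinate_delay_off_master_usec)
        if diff < -THRESHOLD_USEC:   # subordinate lags: drop its frame and capture again
            sub.release()
            s_ts = sub.capture()
        elif diff > THRESHOLD_USEC:  # master lags: drop its frame and capture again
            master.release()
            m_ts = master.capture()
        else:
            return m_ts, s_ts


PERIOD = 33_333  # roughly one frame at 30 fps, in microseconds (assumed)
master = FakeDevice(iter(range(0, 10 * PERIOD, PERIOD)))
# Made-up scenario: the subordinate starts two frames behind, with a 40 us offset.
sub = FakeDevice(iter(range(-2 * PERIOD + 40, 10 * PERIOD, PERIOD)))

pairs: List[Tuple[int, int]] = []
for _ in range(3):  # synchronize on every frame
    pairs.append(get_synchronized_pair(master, sub))
    # ... fuse the synchronized frames here ...
    master.release()
    sub.release()

print(pairs)
print(master.live_frames, sub.live_frames)  # 0 0: nothing leaked
```

Dropping either release call inside the loop makes live_frames grow by one per iteration, which is the simulated version of the memory blow-up described above.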

边栏推荐

- Vector of relevant knowledge of STL Standard Template Library

- 编程题:移除数组中的元素(JS实现)

- ROS Qt环境搭建

- 《数据安全实践指南》- 数据采集安全实践-数据分类分级

- Introduction to 51 single chip microcomputer - matrix key

- The journey of an operator in the framework of deep learning

- golang調用sdl2,播放pcm音頻,報錯signal arrived during external code execution。

- [fundamentals of machine learning 01] blending, bagging and AdaBoost

- OpenGL - Draw Triangle

- [out of distribution detection] deep analog detection with outlier exposure ICLR '19

猜你喜欢

Detailed tutorial on connecting MySQL with tableau

【实习】跨域问题

How can we effectively alleviate anxiety? See what ape tutor says

ROS Qt环境搭建

![[meta learning] classic work MAML and reply (Demo understands meta learning mechanism)](/img/e5/ea68e197834ddcfe10a14e631c68d6.jpg)

[meta learning] classic work MAML and reply (Demo understands meta learning mechanism)

一个算子在深度学习框架中的旅程

What exactly is the open source office of a large factory like?

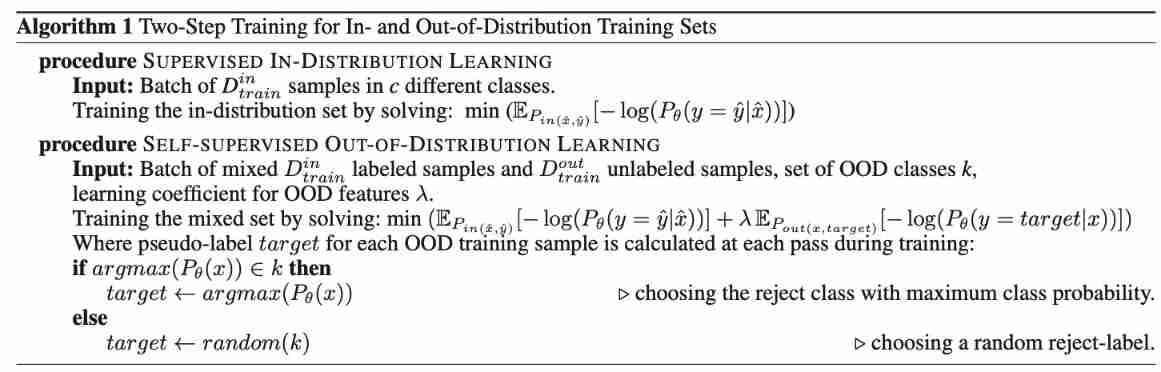

Self supervised learning for general out of distribution detection AAAI '20

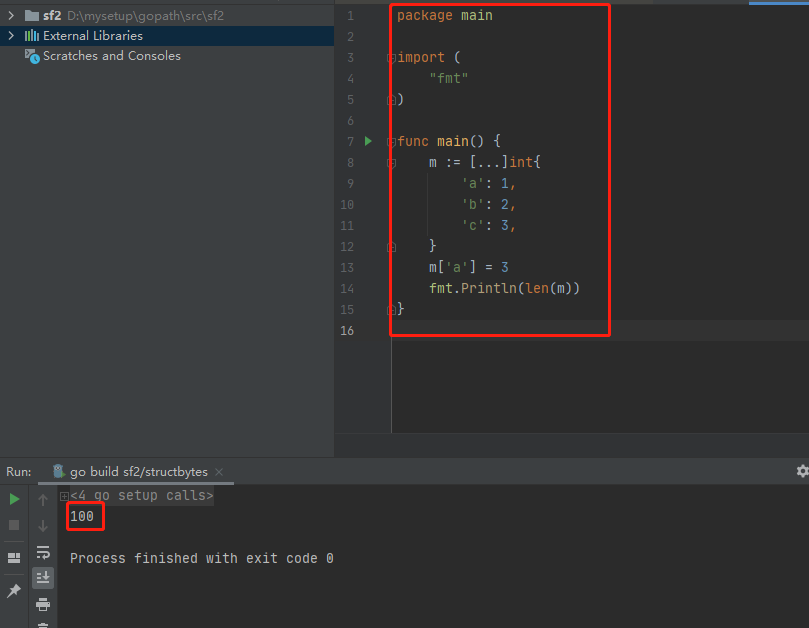

June 21, 2022: golang multiple choice question, what does the following golang code output? A:3; B:4; C:100; D: Compilation failed. package main import ( “fmt“ ) func

Training penetration range 02 | 3-star VH LLL target | vulnhub range node1

随机推荐

How to learn 32-bit MCU

【GAN】《ENERGY-BASED GENERATIVE ADVERSARIAL NETWORKS》 ICLR‘17

Five common SQL interview questions

Tpflow V6.0.6 正式版发布

Don't throw away the electric kettle. It's easy to fix!

Introduction to 51 Single Chip Microcomputer -- the use of Keil uvision4

迪进面向ConnectCore系统模块推出Digi ConnectCore语音控制软件

JDBC查询结果集,结果集转化成表

cookie的介绍和使用

[out of distribution detection] learning confidence for out of distribution detection in neural networks arXiv '18

Cesium loading 3D tiles model

In 2022, which industry will graduates prefer when looking for jobs?

Py之Optbinning:Optbinning的简介、安装、使用方法之详细攻略

[rust notes] 01 basic types

Databricks from open source to commercialization

[meta learning] classic work MAML and reply (Demo understands meta learning mechanism)

流程引擎解决复杂的业务问题

Difference between grail layout and twin wing layout

OpenGL - Textures

Up sampling and down sampling (notes, for personal use)