当前位置:网站首页>Multiple linear regression (sklearn method)

Multiple linear regression (sklearn method)

2022-07-05 08:50:00 【Python code doctor】

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn import svm

from sklearn.metrics import accuracy_score

from sklearn.preprocessing import StandardScaler

# SVR LinearSVR Return to

# SVC LinearSVC classification

# technological process

# 1. get data

data = pd.read_csv('./data.csv')

# 2. Data exploration

# print(data.columns)

# print(data.describe())

# 3. Data cleaning

# Characteristics are divided into 3 Group

features_mean = list(data.columns[2:12]) # Average data

features_se = list(data.columns[12:22]) # Standard deviation data

# ID Column delete

data.drop('id',axis=1,inplace=True)

# take B Benign replace with 0,M Malignant replace with 1

data['diagnosis'] = data['diagnosis'].map({

'M':1,'B':0})

print(data.head(5))

# 4. feature selection

# Purpose Dimension reduction

sns.countplot(data['diagnosis'],label='Count')

plt.show()

# Heat map features_mean Correlation between fields

corr = data[features_mean].corr()

plt.figure(figsize=(14,14))

sns.heatmap(corr,annot=True)

plt.show()

# feature selection Average this group 10--→6

features_remain = ['radius_mean', 'texture_mean', 'smoothness_mean', 'compactness_mean', 'symmetry_mean','fractal_dimension_mean']

# model training

# extract 30% Data as a test set

train,test = train_test_split(data,test_size=0.3)

train_x = train[features_mean]

train_y = train['diagnosis']

test_x = test[features_mean]

test_y = test['diagnosis']

# Data normalization

ss = StandardScaler()

train_X = ss.fit_transform(train_x)

test_X = ss.transform(test_x)

# establish svm classifier

model = svm.SVC()

# Parameters

# kernel Kernel function selection

# 1.linear Linear kernel function When the data is linearly separable

# 2.poly Polynomial kernel function Mapping data from low dimensional space to high dimensional space But there are many parameters , The amount of calculation is relatively large

# 3.rbf Gaussian kernel Map samples to high-dimensional space Less parameters Good performance Default

# 4.sigmoid sigmoid Kernel function In the mapping of snake spirit network SVM Realize multilayer neural network

# c Penalty coefficient of objective function

# gamma Coefficient of kernel function The default is the reciprocal of the sample feature number

# Training data

model.fit(train_x,train_y)

# 6. Model to evaluate

pred = model.predict(test_x)

print(' Accuracy rate :',accuracy_score(test_y,pred))

边栏推荐

猜你喜欢

随机推荐

Digital analog 2: integer programming

Illustration of eight classic pointer written test questions

Guess riddles (11)

资源变现小程序添加折扣充值和折扣影票插件

Basic number theory - factors

Use arm neon operation to improve memory copy speed

多元线性回归(梯度下降法)

asp.net(c#)的货币格式化

12、动态链接库,dll

kubeadm系列-01-preflight究竟有多少check

图解八道经典指针笔试题

C language data type replacement

An enterprise information integration system

287. 寻找重复数-快慢指针

[Niuke brush questions day4] jz55 depth of binary tree

Warning: retrying occurs during PIP installation

GEO数据库中搜索数据

猜谜语啦(5)

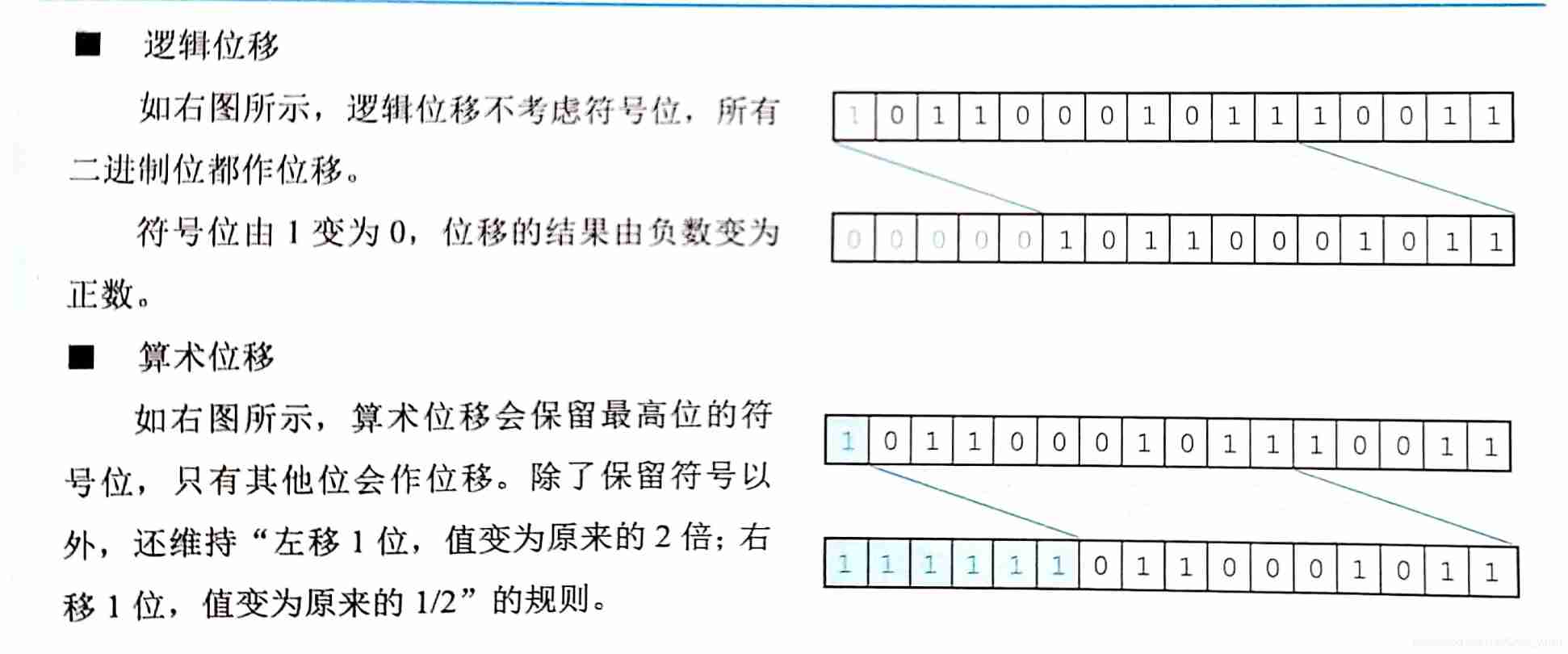

Bit operation related operations

Dynamic dimensions required for input: input, but no shapes were provided. Automatically overriding

![[牛客网刷题 Day4] JZ55 二叉树的深度](/img/f7/ca8ad43b8d9bf13df949b2f00f6d6c.png)