TruNet: Short Videos Generation from Long Videos via Story-Preserving Truncation (paper translation)

2022-06-12 06:16:00 【Caicaicaicaicaicai】

TruNet: Short Videos Generation from Long Videos via Story-Preserving Truncation

In this work, we introduce a new problem, named story-preserving long video truncation, that requires an algorithm to automatically truncate a long-duration video into multiple short and attractive sub-videos, each containing an unbroken story. This differs from traditional video highlight detection or video summarization in that each sub-video is required to maintain a coherent and integral story, which is becoming particularly important for resource-production video sharing platforms such as YouTube, Facebook, TikTok and Kwai. To address the problem, we collect and annotate a new large video truncation dataset, named TruNet, which contains 1470 videos with on average 11 short stories per video. With the new dataset, we further develop and train a neural architecture for video truncation that consists of two components: a Boundary Aware Network (BAN) and a Fast-Forward Long Short-Term Memory (FF-LSTM). We first use the BAN to generate high-quality temporal proposals by jointly considering frame-level attractiveness and boundaryness. We then apply the FF-LSTM, which tends to capture high-order dependencies among a sequence of frames, to decide whether a temporal proposal is a coherent and integral story. We show that our proposed framework outperforms existing approaches for the story-preserving long video truncation problem in both quantitative measures and a user study. The dataset is available for public academic research usage at https://ai.baidu.com/broad/download.

1. Introduction

Short-form video sharing platforms such as TikTok and Kwai are becoming increasingly popular, which creates a demand for generating short-form videos. Users prefer to spend their time on more compact short-form videos, while high-quality videos such as reality shows and TV series are usually long (e.g. > 1 hour). In this context, developing new algorithms that can truncate long videos into short, attractive and unbroken stories is of special interest.

Although much progress has been made in video highlight detection and video summarization [32, 33, 6, 7, 5], many of these methods focus on producing a coherent whole story in the final combined highlight, and do not require each sub-video to contain an unbroken story. The problem of story-preserving long video truncation is still not well studied due to the limitations of existing datasets. Obviously, story integrity or completeness is a crucial measurement for short-form videos in this scenario, but it is not yet considered in existing video highlight datasets. For example, a climactic fight fragment is interesting and important enough to be a ground-truth keyshot in SumMe [6] and TVSum [25], but as an individual short-form video, it has to include the beginning and the ending parts to clarify the cause and effect of the story. On the other hand, ActivityNet [16] provides action intervals with accurate temporal boundaries, but its data distribution is different from what is required for short-form video production from a massive long video database. As Table 1 shows, the average video length of ActivityNet is too short (< 2 minutes), such that the average story number per video is only 1.2 and the average story length is only 0.8 minutes.

In this paper, we collect a new large dataset, named TruNet, which contains 1470 videos with a total of 2101 hours and an average video length longer than 1 hour. It covers a wide range of popular topics including variety shows, reality shows, talk shows and TV series. TruNet provides on average 11 short stories per long video, and each story is annotated with accurate temporal boundaries. Figure 1(a) shows a sample video from TruNet. The video is a variety show that contains 9 song and dance performances. The 9 short stories are indicated in different colors, and the third one is shown in Figure 1(b) with a higher temporal resolution.

With the new dataset, we further develop a baseline neural architecture for story-preserving long video truncation that consists of two components: a Boundary Aware Network (BAN) for proposal generation and a Fast-Forward Long Short-Term Memory (FF-LSTM) for story integrity classification. Unlike previous state-of-the-art methods [30], whose proposal generation depends only on actionness, BAN uses additional frame-level boundaryness to generate proposals and achieves higher precision when the number of proposals is small. Unlike a traditional LSTM for sequence modeling, FF-LSTM [39] introduces fast-forward connections to a stack of LSTM layers to encourage stable and effective backpropagation in the deep recurrent topology, which leads to an obvious performance improvement in our video story classification. To the best of our knowledge, this is the first time that FF-LSTM has been used for modeling sequences in the video domain.

In summary, our contributions are threefold: (1) We introduce a new practical problem in video truncation, story-preserving long video truncation, which requires truncating a long video into multiple short-form videos, each preserving a story. (2) We collect and annotate a new large dataset for studying this problem, which can become a complementary source to existing video datasets. (3) We propose a baseline framework that involves a new temporal proposal generation module and a new sequence modeling module, with better performance compared to traditional methods. We will also release the dataset for public academic research usage.

2. Related Work

2.1. Video Dataset

Here we briefly review typical video datasets that are related to our work. The SumMe dataset [6] consists of 25 videos covering 3 categories. The length of the videos ranges from about 1 to 6 minutes, and 5% to 15% of the frames are extracted as the summary of a video. Similarly, the TVSum dataset [25] contains 50 videos from 10 categories. The video duration is between 2 and 10 minutes, and at most 5% of the frames are selected as keyshots. Compared with SumMe and TVSum, our proposed TruNet dataset focuses on the story-preserving long video truncation problem, such that each summary is an integral short-form video with accurate temporal boundaries.

On the other hand, although THUMOS14 [12] and ActivityNet [16] also provide temporal boundaries, their data distributions are not suitable for our problem. Taking ActivityNet as an example, its average video length is too short and the average action number per video is only 1.2. Whether the daily activities collected by ActivityNet are suitable for video sharing is another consideration. In contrast, our proposed TruNet focuses on collecting high-quality long videos, such that the average short story number per video can be up to 11. The video topics in TruNet are also chosen to be suitable for sharing on video sharing platforms.

2.2. Video Summarization

Video summarization has been a long-standing problem in computer vision and multimedia. Previous studies typically treat it as extracting keyshots [32, 1, 17, 29, 13, 14], video skims [33, 31, 37, 6, 7, 20, 21], storyboards [5], timelapses [15], montages [26], and video synopses [22]. An exhaustive review is beyond the scope of this paper. We refer the reader to [28] for a survey of early works on video summarization. Compared with previous approaches, this paper proposes story-preserving long video truncation, which formulates the video summarization problem in a different way. Each truncated short-form video should be an attractive and unbroken short story, such that it can be used on video sharing platforms.

2.3. Temporal Action Localization

Our work is also related to temporal action localization. Early methods [27, 11, 34] relied on hand-crafted features and sliding window search. Using ConvNet-based features such as C3D [24] and two-stream CNN [2, 35] achieves both higher efficiency and better performance than hand-crafted features. [3, 4, 30] focused on using stronger network structures to generate high-quality temporal proposals. Structured temporal modeling [38] and 1D temporal convolution [18] have also proved important for boosting the performance of temporal action localization. While conceptually similar, our proposed model is novel in that it generates high-quality temporal proposals by jointly considering frame-level attractiveness and boundaryness, which helps the truncated short-form videos remain unbroken. BSN [19] is also a boundary-sensitive framework, but it is designed for temporal action proposals, and its proposals are generated from frame-level action-start and action-end confidence scores. In contrast, our BAN generates proposals according to story-start, story-end and storyness scores at the group-of-frames level to suit the extremely long input.

3. The TruNet Dataset

The story-preserving long video truncation problem is not well studied due to the lack of publicly available datasets. Therefore, we construct the TruNet dataset to quantitatively evaluate the proposed framework.

3.1. Dataset Setup

Considering that the truncated short-form stories from long videos should be suitable for sharing on video sharing platforms, we choose four types of long videos: variety shows, reality shows, talk shows and TV series. Most videos in TruNet are downloaded from the video website iQIYI.com, which has a large quantity of high-quality long videos. Crowdsourced annotation based on a carefully designed annotation tool is then applied after data collection. Each annotation worker is trained by annotating a small number of videos, and can participate in the formal annotation only after passing the training program. During the annotation task, the worker is asked to 1) watch the whole video; 2) annotate the temporal boundaries of short stories; 3) adjust the boundaries. Each long video is annotated by multiple workers for quality assurance, and the annotations are finally reviewed by several experts. We randomly split the dataset into three parts: a training set with 1241 videos, a validation set with 115 videos and a testing set with 114 videos. The validation set is not used throughout our experiments.

3.2. Dataset Statistics

The TruNet dataset consists of 1470 long videos with a duration of 80 minutes on average and 2101 hours in total. A long video contains 11 stories on average, and the total number of manually labeled stories is 16891. The average duration of a short story is 3 minutes. Figure 2 compares TruNet with existing video summarization and temporal action localization datasets such as SumMe, TVSum, ActivityNet and THUMOS14. As can be seen, TruNet has the largest total video length and average proposal duration.

We show a group of statistics of the TruNet dataset in Figure 3. The video length distribution is shown in Figure 3(a). The distribution of the number of stories in each long video is shown in Figure 3(b). The distribution of story length is shown in Figure 3(c). The distribution of the ratio of story region length to long video length is shown in Figure 3(d).

4. Methodology

The mathematical formulation of the story-preserving long video truncation problem is similar to that of temporal action localization. The training dataset can be represented as $\tau = \{V_i = (\{u_t^i\}_{t=1}^{T_i}, \{s_{i,j}, e_{i,j}\}_{j=1}^{l_i})\}_{i=1}^{N}$, where the frame-level feature $u_t^i$ comes from long video $V_i$, which corresponds to a set of ground-truth sliced short-form videos. $(s_{i,j}, e_{i,j})$ are the beginning and ending indexes of the $j$th interval of $V_i$. $N$ is the number of training videos, $T_i$ is the number of frames of $V_i$, and $l_i$ is the number of intervals of $V_i$.
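
The notation above maps naturally onto a small data structure. The following is a minimal sketch of one way to hold a training item in memory; the class name `LongVideo`, its field names, and the use of 0-based inclusive frame indexes are illustrative assumptions and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class LongVideo:
    """One training item V_i (hypothetical container, not from the paper)."""

    features: List[List[float]]     # u_t^i: one feature vector per frame, T_i in total
    stories: List[Tuple[int, int]]  # (s_ij, e_ij): assumed 0-based, inclusive frame indexes

    @property
    def num_frames(self) -> int:
        """T_i, the number of frames."""
        return len(self.features)

    @property
    def num_stories(self) -> int:
        """l_i, the number of annotated story intervals."""
        return len(self.stories)

    def validate(self) -> None:
        """Check that every interval is well ordered and lies inside the video."""
        for start, end in self.stories:
            if not 0 <= start <= end < self.num_frames:
                raise ValueError(
                    f"interval ({start}, {end}) is outside 0..{self.num_frames - 1}"
                )


# The training set tau is then simply a list of N such videos.
train_set = [
    LongVideo(features=[[0.0, 1.0] for _ in range(10)], stories=[(0, 3), (5, 9)]),
    LongVideo(features=[[0.5, 0.5] for _ in range(6)], stories=[(1, 4)]),
]
for video in train_set:
    video.validate()
```

Here `len(train_set)` plays the role of $N$, and `num_frames` and `num_stories` correspond to $T_i$ and $l_i$.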

Figure 4. An architecture overview of the proposed framework which contains three components: feature extraction, temporal proposal generation and sequential structure modeling. Multi-modal features of frames are extracted and concatenated in the first component. In the second component, a boundary aware network is used to predict attractiveness and boundaryness for each frame. A dilated merge algorithm is then carried out to generate proposals. In the third component, the sequential structure of each proposal is modeled by FF-LSTM, which outputs the classification confidence score together with the refined boundaries.

Figure 4 illustrates the architecture of the proposed framework, which includes three major components: feature extraction, temporal proposal generation and sequential structure modeling. Given an input long video, RGB-based 2D convolutional features and audio features are extracted and concatenated. In the temporal proposal generation component, the features are fed into the BAN to predict the attractiveness and boundaryness of each frame. A dilated merge algorithm is then carried out to generate temporal proposals according to the frame-level attractiveness and boundaryness scores. In the sequential structure modeling component, the sequential structure of each proposal is modeled by FF-LSTM, which outputs the classification confidence score of the proposal together with the refined boundaries.

4.1. Boundary Aware Network

A novel boundary aware network (BAN) is proposed in this paper to provide high-quality story proposals. As Figure 4 shows, BAN takes the features of 7 consecutive frames as the input of an LSTM layer. The output of the LSTM is average-pooled, and a linear layer is used to predict probability scores for four categories: within story, background, story beginning boundary and story ending boundary. The label of the center frame is decided by the category with the largest score. We regard a frame sequence as a story candidate if every frame in the sequence belongs to the within story category. A simple dilated merge algorithm is carried out to merge adjacent story candidates separated by a small distance (5 frames). Non-Maximal Suppression (NMS) is carried out to reduce redundancy and generate the final proposals.
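
The post-processing steps described above (labeling each frame by its top-scoring category, collecting runs of within story frames, merging nearby runs, and NMS) can be sketched in plain Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the category order, the function names, the reading of "distance" as the number of frames between two candidates, the NMS threshold of 0.5, and the existence of a per-proposal score are all assumptions.

```python
from typing import List, Sequence, Tuple

# Assumed category order of the four BAN outputs (not specified in the text).
WITHIN, BACKGROUND, BEGIN, END = 0, 1, 2, 3

Interval = Tuple[int, int]  # inclusive (start, end) frame indexes


def label_frames(scores: Sequence[Sequence[float]]) -> List[int]:
    """Give each frame the category with the largest score."""
    return [max(range(len(row)), key=row.__getitem__) for row in scores]


def story_candidates(labels: Sequence[int]) -> List[Interval]:
    """Return maximal runs in which every frame is labeled WITHIN."""
    runs, start = [], None
    for t, label in enumerate(labels):
        if label == WITHIN and start is None:
            start = t
        elif label != WITHIN and start is not None:
            runs.append((start, t - 1))
            start = None
    if start is not None:
        runs.append((start, len(labels) - 1))
    return runs


def dilated_merge(cands: Sequence[Interval], max_gap: int = 5) -> List[Interval]:
    """Merge neighbouring candidates separated by at most max_gap frames."""
    merged: List[Interval] = []
    for start, end in sorted(cands):
        if merged and start - merged[-1][1] - 1 <= max_gap:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def temporal_iou(a: Interval, b: Interval) -> float:
    """Intersection over union of two inclusive frame intervals."""
    inter = min(a[1], b[1]) - max(a[0], b[0]) + 1
    if inter <= 0:
        return 0.0
    union = (a[1] - a[0] + 1) + (b[1] - b[0] + 1) - inter
    return inter / union


def nms(proposals: Sequence[Interval], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[Interval]:
    """Greedy NMS: keep the best-scoring proposal, drop heavy overlaps, repeat."""
    order = sorted(range(len(proposals)), key=lambda k: scores[k], reverse=True)
    kept: List[int] = []
    for k in order:
        if all(temporal_iou(proposals[k], proposals[m]) <= iou_threshold for m in kept):
            kept.append(k)
    return [proposals[k] for k in kept]
```

A single merge pass produces non-overlapping intervals, so NMS only has an effect when overlapping proposals are pooled from more than one source; the text does not say how the pooled set or its scores are obtained, which is why `scores` is left as an input here.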

Unlike previous popular methods [30, 3, 4, 38] that depend only on actionness for proposal generation, BAN uses additional frame-level boundaryness to generate proposals. A comparison between the actionness score of TAG [30] (the upper row) and the proposed BAN (the middle row) is shown in Figure 5. For TAG, we show actionness scores larger than 0.5. For BAN, we show the maximum scores among the three foreground categories in different colors. The ground-truth proposal intervals are shown in the bottom row. As can be seen, the score curve of BAN is smoother than that of TAG, and its boundaries better match the ground-truth proposal intervals.

4.2. FF-LSTM

We first briefly review the LSTM, which serves as the basis of sequential structure modeling. Long Short-Term Memory (LSTM) [10] is an enhanced version of the recurrent neural network (RNN) with a set of memory cells $c$. The computation of LSTM can be written as

where $t$ is the time step, $x$ is the input, $[z_i, z_g, z_c, z_o]$ is a concatenation of four vectors of equal size, $h$ is the output of $c$, $b_i$, $b_g$ and $b_o$ are the input gate, forget gate and output gate respectively, $\sigma_i$, $\sigma_g$ and $\sigma_o$ are the input, forget and output activation functions respectively, and $W_f$, $W_h$, $W_g$ and $W_o$ are learnable parameters. The computation of (1) can be equivalently split into two consecutive steps: the hidden block $f^t = W_f x^t$ and the recurrent block $(h^t, c^t) = \mathrm{LSTM}(f^t, h^{t-1}, c^{t-1})$.
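
To make the split into a hidden block and a recurrent block concrete, here is a minimal NumPy sketch. It assumes a standard LSTM cell with sigmoid gates, a tanh candidate, and no bias or peephole terms; the paper's Equation (1) is not reproduced in this copy and may differ in detail (for instance, it lists additional parameters $W_g$ and $W_o$). The function names and weight shapes are illustrative assumptions.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def hidden_block(W_f, x_t):
    """f^t = W_f x^t: a linear projection of the input, with no recurrence."""
    return W_f @ x_t


def recurrent_block(f_t, h_prev, c_prev, W_h):
    """(h^t, c^t) = LSTM(f^t, h^{t-1}, c^{t-1}) for a standard LSTM cell (assumed form)."""
    z = f_t + W_h @ h_prev                   # pre-activations [z_i, z_g, z_c, z_o]
    z_i, z_g, z_c, z_o = np.split(z, 4)      # four vectors of equal size
    b_i, b_g, b_o = sigmoid(z_i), sigmoid(z_g), sigmoid(z_o)  # input, forget, output gates
    c_t = b_g * c_prev + b_i * np.tanh(z_c)  # update the memory cell
    h_t = b_o * np.tanh(c_t)                 # h is the gated output of c
    return h_t, c_t


def run_lstm(W_f, W_h, xs):
    """Run one LSTM layer over a sequence xs of shape (T, D); returns outputs of shape (T, H).

    Assumed shapes: W_f is (4H, D) and W_h is (4H, H).
    """
    hidden = W_h.shape[1]
    h, c = np.zeros(hidden), np.zeros(hidden)
    outputs = []
    for x_t in xs:
        h, c = recurrent_block(hidden_block(W_f, x_t), h, c, W_h)
        outputs.append(h)
    return np.stack(outputs)
```

Keeping `hidden_block` separate from `recurrent_block` is what later allows a connection to be added between the hidden blocks of adjacent layers without touching the recurrence.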

A straightforward deep LSTM can be constructed by directly stacking multiple LSTM layers. Suppose $\mathrm{LSTM}_k$ is the $k$-th LSTM layer; then:

Figure 6(b) illustrates a deep LSTM with three stacked layers. As can be seen, the input of the hidden block is the output of the recurrent block of the previous layer. In FF-LSTM, a fast-forward connection is added to connect the hidden blocks of adjacent layers. The added connections build a fast path that contains neither non-linear activations nor recurrent computations, so that information and gradients can be propagated easily. Figure 6(a) illustrates an FF-LSTM with three layers, and the computation of a deep FF-LSTM can be expressed as:

Suppose the multi-layer FF-LSTM receives a proposal range $\{p_{i,s}, p_{i,e}\}$ from video $V_i$. The hidden block of the first FF-LSTM layer can then be calculated as $\{f_1^t = W_{f_1} u_i^{t+p_{i,s}}\}_{t=0}^{p_{i,e}-p_{i,s}}$.
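The difference between plain stacking and fast-forward connections can be sketched as follows. This is a hedged illustration, not the authors' implementation: it assumes that the hidden block of layer $k$ takes the concatenation of the previous layer's recurrent output and hidden-block output, and the helper `deep_step` and the stand-in `blocked` recurrent block are hypothetical names introduced only for this example.

```python
def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def deep_step(x_t, weights, recurrent_blocks, states, fast_forward):
    """Run one time step through all layers.

    weights[k] is the hidden-block matrix of layer k. recurrent_blocks[k]
    maps (f, state) -> (h, new_state) and stands in for the LSTM recurrent
    block. Returns the top-layer h, the top-layer f and the new states.
    """
    f = matvec(weights[0], x_t)  # first hidden block sees the raw input
    h, state = recurrent_blocks[0](f, states[0])
    new_states = [state]
    for k in range(1, len(weights)):
        # Plain stacking feeds only h from the layer below. The fast-forward
        # variant (assumed form) also feeds f, which gives a path with no
        # non-linear activation and no recurrence.
        inp = h + f if fast_forward else h  # list concatenation
        f = matvec(weights[k], inp)
        h, state = recurrent_blocks[k](f, states[k])
        new_states.append(state)
    return h, f, new_states

# Toy check of the fast path: a stand-in recurrent block that outputs zeros.
blocked = lambda f, state: ([0.0] * len(f), state)
identity = [[1.0, 0.0], [0.0, 1.0]]
sum_h_and_f = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
x = [1.0, 2.0]

_, f_stacked, _ = deep_step(x, [identity, identity], [blocked, blocked], [None, None], False)
_, f_ff, _ = deep_step(x, [identity, sum_h_and_f], [blocked, blocked], [None, None], True)
print(f_stacked)  # the input signal is lost once the recurrent block blocks it
print(f_ff)       # the input still reaches layer 2 through the fast-forward path
```

Passing the recurrent block in as a callable keeps the sketch focused on the wiring between hidden blocks; any LSTM cell with the same `(f, state) -> (h, new_state)` shape could be substituted.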

On top of the topmost FF-LSTM layer, a max-pooling layer is used to obtain a global representation of the proposal. A binary classifier for story/background classification is computed on this global representation. We calculate the intersection-over-union (IoU) between each proposal and the ground-truth stories: a proposal is regarded as a positive sample if its maximum IoU is larger than 0.7, and as a negative sample if its IoU is less than 0.3. A boundary regressor is also computed on the max-pooled global representation. The multi-task loss over a training proposal $p_{i,k} = \{p_{i,k,s}, p_{i,k,e}\}$ can be written as:

where $c_{i,k}$ is the label of the proposal and $P(c_{i,k} \mid p_{i,k})$ is the classification score defined by the multi-layer FF-LSTM, max-pooling and the binary classifier. $\lambda$ is a balancing weight parameter, and $\mathbb{1}_{c_{i,k}=1}$ means that the second term is active only when the label of the proposal is 1.
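The labelling rule and the structure of the multi-task loss can be sketched in a few lines. Several parts are assumptions made for illustration: proposals whose maximum IoU falls between the two thresholds are simply ignored, the classification term is a cross-entropy, the regression term is a smooth L1 penalty on the two boundary offsets, and all function names are hypothetical. Only the thresholds (0.7 and 0.3), the indicator on positive labels and the weight $\lambda$ come from the text.

```python
import math

def temporal_iou(a, b):
    """IoU of two temporal segments given as (start, end)."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0

def label_proposal(proposal, stories, pos_thr=0.7, neg_thr=0.3):
    """Return 1 (story), 0 (background) or None (assumed: not used)."""
    best = max((temporal_iou(proposal, s) for s in stories), default=0.0)
    if best > pos_thr:
        return 1
    if best < neg_thr:
        return 0
    return None

def smooth_l1(d):
    """Assumed regression penalty; the text does not name one."""
    d = abs(d)
    return 0.5 * d * d if d < 1.0 else d - 0.5

def multitask_loss(p_story, label, pred_offsets, target_offsets, lam=5.0):
    """Classification loss plus lam times the regression loss for positives."""
    prob = p_story if label == 1 else 1.0 - p_story
    cls_loss = -math.log(max(prob, 1e-12))  # clamp to avoid log(0)
    reg_loss = 0.0
    if label == 1:  # indicator: regression counts only for story proposals
        reg_loss = sum(smooth_l1(p - t)
                       for p, t in zip(pred_offsets, target_offsets))
    return cls_loss + lam * reg_loss

stories = [(0.0, 9.0), (40.0, 60.0)]
for proposal in [(0.0, 10.0), (8.0, 30.0), (0.0, 5.0)]:
    print(proposal, label_proposal(proposal, stories))
```

Because of the indicator, a background proposal contributes only the classification term, whatever its predicted boundary offsets are.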

5. Experiments

5.1. Implementation Details

Because the dataset is too large to process directly, we pre-process the videos and extract frame-level features. We decode the videos at 1 frame per second (FPS) and extract two kinds of features: the “pool5” feature of ResNet-50 [8] trained on ImageNet [23] and a convolutional audio feature [9].

We train the BAN using stochastic gradient descent (SGD) with a momentum of 0.9, 70 epochs, a weight decay of 0.0005 and a mini-batch size of 256 on four K40 GPUs. One epoch means that all training samples are passed through once. All parameters are randomly initialized. The learning rate is set to 0.001; we tried reducing it during training but found no benefit. The sampling ratio of the four categories (within story, background, beginning boundary and ending boundary) is 6 : 6 : 1 : 1. To enable boundary regression in the temporal structure modeling stage, we augment the BAN-generated proposals by extending their beginning and ending boundaries, similar to [38].

We train a 5-layer FF-LSTM using SGD with a momentum of 0.9, 40 epochs, a weight decay of 0.0008 and a mini-batch size of 256. The learning rate is kept at 0.001 throughout training. Positive and negative proposals are sampled with a ratio of 1 : 3. The balancing parameter λ is set to 5.

5.2. Evaluation Metrics

For story localization, we report the mean average precision (mAP) of different methods at three IoU thresholds, $\{0.5, 0.7, 0.9\}$. We also report the average mAP over the thresholds [0.5 : 0.05 : 0.95]. To evaluate the quality of the generated temporal proposals, we report the average recall vs. average number of retrieved proposals (AR-AN) curve defined in [4].
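As a rough illustration of the threshold-averaged metric, the sketch below computes a non-interpolated average precision for a single video at one IoU threshold and then averages it over the ten thresholds from 0.5 to 0.95. The matching protocol (greedy, score-ordered, each ground-truth story matched at most once) is an assumption, the function names are hypothetical, and the toy segments are made up for the example; the actual evaluation code may differ.

```python
def temporal_iou(a, b):
    """IoU of two temporal segments given as (start, end)."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(preds, gts, thr):
    """preds: list of (start, end, score); gts: list of (start, end)."""
    matched = set()
    true_positives = 0
    precisions = []
    ranked = sorted(preds, key=lambda p: -p[2])
    for rank, (start, end, _) in enumerate(ranked, 1):
        # Pick the unmatched ground-truth story with the highest IoU >= thr.
        best_j, best_iou = None, thr
        for j, gt in enumerate(gts):
            iou = temporal_iou((start, end), gt)
            if j not in matched and iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j is not None:
            matched.add(best_j)
            true_positives += 1
            precisions.append(true_positives / rank)
    return sum(precisions) / len(gts) if gts else 0.0

# IoU thresholds 0.50, 0.55, ..., 0.95
thresholds = [round(0.5 + 0.05 * k, 2) for k in range(10)]

gts = [(0.0, 10.0), (20.0, 30.0)]
preds = [(0.0, 10.0, 0.9), (21.2, 30.0, 0.8), (50.0, 60.0, 0.7)]
ap_per_threshold = [average_precision(preds, gts, t) for t in thresholds]
print(sum(ap_per_threshold) / len(thresholds))
```

Averaging over strict thresholds such as 0.9 and 0.95 rewards proposals whose boundaries are tight, which is where boundary refinement matters most.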

5.3. Ablation Studies

To study the effectiveness of the proposed BAN, we compare it with sliding window search (SW), KTS [21] and TAG [30]. The comparison results are summarized in Figure 6. The average recall of the proposed BAN is significantly higher than that of the other methods when the number of proposals is small, but because of its selection criterion it cannot generate as many proposals as the others. Since TAG and BAN are highly complementary, merging their proposals yields a substantially better curve.

Table 2 summarizes the ablation study results for temporal proposal generation and sequential structure modeling, showing that both components are crucial for the final performance. FF-LSTM consistently beats LSTM across the different proposal generation methods. We observe that methods with a training step (TAG and BAN) generate much better proposals than heuristic ones (sliding window and KTS). Using BAN proposals alone is slightly better than using TAG proposals alone, but because TAG can generate a larger number of proposals, BAN and TAG are highly complementary. As shown in Table 2, merging them brings a clear improvement over either one individually.

Table 3 summarizes the ablation study results for different features. As can be seen, the RGB and audio features are highly complementary, and dropping either feature clearly decreases the performance. Another interesting phenomenon is that using the audio feature alone achieves better performance than using the RGB feature alone. We conjecture that this is because audio patterns change rapidly at video story boundaries, especially in variety programs.

Table 4 summarizes the ablation study results for different deep recurrent models used in sequential structure modeling. Directly stacking multiple LSTM layers leads to a performance drop because of convergence difficulty. In contrast, using a deeper FF-LSTM obtains noteworthy performance gains. A 3-layer FF-LSTM increases the mAP by 3.9 over a 1-layer LSTM, and a 5-layer FF-LSTM further increases the mAP by 0.9. We also tried FF-LSTMs with more than 5 layers, but found only marginal improvements.

Table 5 summarizes the ablation study results for the regression loss. Adding the regression loss improves the mAP by 3.0. Although BAN has already addressed the story boundaries when generating proposals, our FF-LSTM component can capture high-order dependencies among frames and refine the boundaries further.

5.4. Quantitative Comparison Results

We compare our proposed framework with state-of-the-art video summarization methods, including vsLSTM [36] and HD-VS [33]. We had to re-implement vsLSTM and HD-VS to make them suitable for our formulation, because the completeness of the storytelling is essential in story-preserving long video truncation but is not considered in previous video summarization papers.

For vsLSTM, we use the merged TAG and BAN proposals as the input of vsLSTM, which replaces the FF-LSTM and serves as the basis of temporal modeling for regression and classification. For HD-VS, we use the merged TAG and BAN proposals as the input of a 5-layer FF-LSTM, and the cross-entropy loss is replaced with the deep ranking loss proposed in HD-VS. The results are summarized in Table 6. As can be seen, the proposed framework outperforms all previous methods in all cases by a large margin.

5.5. User-Study Results

We finally conduct a subjective evaluation to compare the quality of the generated short video story summaries. 100 volunteers of different genders, education backgrounds and ages are asked to complete the following steps independently:

- Watch 18 randomly selected long videos.

- For each long video, watch the short video story summaries generated by the proposed framework, vsLSTM [36] and HD-VS [33].

- For each long video, choose one of the video story summaries as the best one.

The answers of the 100 volunteers are aggregated, and the selection ratios of the three methods are summarized in Table 7. The summaries generated by the proposed framework receive 46.4% of the votes, more than vsLSTM (40.8%) and HD-VS (12.8%).

6. Conclusions

In this paper, we propose a new story-preserving video truncation problem that requires algorithms to truncate long videos into short, attractive, and unbroken stories. This problem is particularly important for resource production in video sharing platforms. We collect and annotate a new large dataset, TruNet, and propose a novel framework that combines BAN and FF-LSTM to address this problem.

边栏推荐

- Unity3d display FPS script

- Single channel picture reading

- Explanation of sensor flicker/banding phenomenon

- Leetcode 第 80 场双周赛题解

- 【思维方法】之第一性原理

- Leetcode sword finger offer (Second Edition) complete version of personal questions

- JS预解析

- Sensor bringup 中的一些问题总结

- Leetcode-1663. Minimum string with given value

- How to split a row of data into multiple rows in Informix database

猜你喜欢

Analysis of memory management mechanism of (UE4 4.26) UE4 uobject

Houdini terrain creation

EBook list page

肝了一個月的 DDD,一文帶你掌握

cv2.fillPoly coco annotator segment坐标转化为mask图像

Android studio mobile development creates a new database and obtains picture and text data from the database to display on the listview list

为什么联合索引是最左匹配原则?

MNIST handwritten data recognition by CNN

夜神模擬器adb查看log

Word vector training based on nnlm

随机推荐

相机图像质量概述

Un mois de DDD hépatique.

Leetcode-1535. Find the winner of the array game

Leetcode-717. 1-bit and 2-bit characters (O (1) solution)

Une explication du 80e match bihebdomadaire de leetcode

Information content security experiment of Harbin Institute of Technology

Univariate linear regression model

为什么联合索引是最左匹配原则?

Using hidden Markov model to mark part of speech

In unity3d, billboard effect can be realized towards another target

Summary of some problems in sensor bring up

Word vector training based on nnlm

Redis queue

Why don't databases use hash tables?

Bert use

Leetcode-553. Optimal division

MySQL master-slave, 6 minutes to master

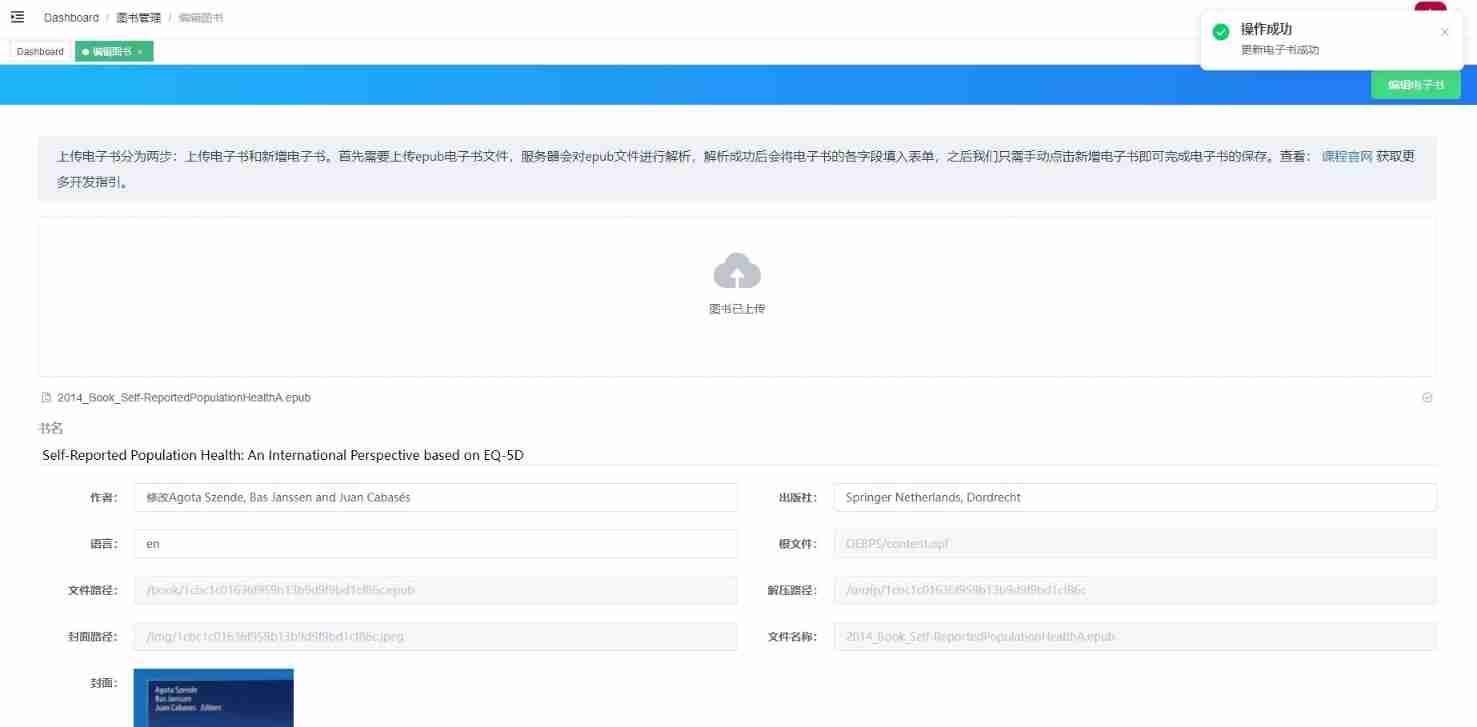

EBook upload

[PowerShell] command line output and adding system environment variables

Leetcode-1043. Separate arrays for maximum sum