
MySQL master-slave, 6 minutes to master

2022-06-12 05:52:00 【Louzai】

Hello everyone, I'm Louzai!

Today's topic is actually very basic, but it covers several scenarios that are critical under high concurrency and that you cannot avoid. They also come up often in interviews.

There are plenty of articles on this topic online. I originally wanted to repost one directly, but none felt suitable: they were either not concise enough or too thin, so I decided to write one myself.

Although the content is basic, I am sticking to my usual writing style: after consulting many excellent blog posts, I want to write an article that is easy to understand without sacrificing completeness.

Preface

Let's first look at the normal query flow:

First query Redis. If the key is found, return it directly; if not, query the DB.

If the DB query succeeds, write the data back to Redis; if the DB has no such data, return directly.
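The flow above can be sketched roughly as follows. This is only an illustration under stated assumptions: the class name `CacheAsideReader` is hypothetical, and two in-memory maps stand in for Redis and the DB so the sketch runs without any external service.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical sketch: two in-memory maps stand in for Redis and the database.
class CacheAsideReader {
    final Map<String, String> cache = new HashMap<>(); // stand-in for Redis
    final Map<String, String> db = new HashMap<>();    // stand-in for the DB
    int dbQueries = 0;                                  // counts how often the DB is hit

    Optional<String> get(String key) {
        // 1. Query the cache first; on a hit, return directly.
        String cached = cache.get(key);
        if (cached != null) {
            return Optional.of(cached);
        }
        // 2. Cache miss: query the DB.
        dbQueries++;
        String fromDb = db.get(key);
        if (fromDb != null) {
            // 3. DB hit: write the value back to the cache.
            cache.put(key, fromDb);
        }
        // 4. DB miss: return directly, nothing is cached.
        return Optional.ofNullable(fromDb);
    }
}
```

Note that a key missing from both maps increments `dbQueries` on every call, because step 4 never writes anything back to the cache.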

Cache penetration

Definition: when the requested data exists in neither the cache nor the database, the database query returns nothing and, for fault tolerance, the empty result is not saved to the cache. Every such request therefore goes to the database. This is called cache penetration.

The red line in the flow chart marks the cache penetration scenario: it occurs when the queried key exists in neither the cache nor the DB.

Imagine an interface that queries product information. If a malicious user sends requests with product IDs that do not exist, at very high instantaneous concurrency, your DB will probably go down.

Your first reaction may be to validate the input parameters (for example with a regular expression) and filter out invalid requests. That's right! But is there a better solution?

Caching null values

When the database returns a null value, we can write that null value to the cache. To keep it from occupying the cache for long, give it a short expiration time, for example 3~5 minutes.

This approach has a problem, though: when a large number of invalid requests penetrate, the cache fills up with null entries. If the cache space runs out, entries that were already cached (such as user information) get evicted, which lowers the cache hit rate. So this approach requires evaluating the cache capacity first.

If caching null values is not an option, consider using a Bloom filter.
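A minimal sketch of caching null values with a short expiration time might look like this. Everything here is an assumption made for illustration: the class `NullCachingReader`, the `<null>` marker string, the 30-minute TTL for real values, and the in-memory maps that stand in for Redis and the DB. With a real Redis client, the null marker would typically be written with a short TTL instead.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: in-memory maps stand in for Redis and the DB.
class NullCachingReader {
    static final String NULL_MARKER = "<null>";       // placeholder stored for missing keys
    static final long NULL_TTL_MS = 3 * 60 * 1000L;   // short TTL for null entries (3 minutes)
    static final long VALUE_TTL_MS = 30 * 60 * 1000L; // assumed TTL for real values

    final Map<String, String> db = new HashMap<>();
    final Map<String, String> cacheValues = new HashMap<>();
    final Map<String, Long> cacheExpiry = new HashMap<>();
    int dbQueries = 0;

    // nowMs is passed in so that expiry can be exercised without sleeping.
    String get(String key, long nowMs) {
        Long expiresAt = cacheExpiry.get(key);
        if (expiresAt != null && nowMs < expiresAt) {
            // Cache hit: translate the marker back into "no data".
            String cached = cacheValues.get(key);
            return NULL_MARKER.equals(cached) ? null : cached;
        }
        // Cache miss or expired entry: query the DB and cache the result,
        // including the "not found" result, which gets the shorter TTL.
        dbQueries++;
        String fromDb = db.get(key);
        cacheValues.put(key, fromDb == null ? NULL_MARKER : fromDb);
        cacheExpiry.put(key, nowMs + (fromDb == null ? NULL_TTL_MS : VALUE_TTL_MS));
        return fromDb;
    }
}
```

Repeated requests for the same non-existent key within the null TTL are answered from the cache, but each distinct invalid key still adds one cache entry, which is the capacity concern described above.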

Bloom filter

A Bloom filter consists of a binary array of configurable length N and a configurable number M of hash functions. Put simply and crudely, it is a Hash Map.

The principle is quite simple. Take an element key=#3: if the hash algorithm yields the value 9, then position 9 of the Hash Map is set to 1 to indicate that data exists at that position. In the figure, 0 means no data and 1 means data exists.

This leads to a conclusion: in the Hash Map, data that is marked does not necessarily exist, but data that is not marked definitely does not exist.

Why does "marked data not necessarily exist"? Because of hash collisions!

Say the Hash Map has length 100 and you have 101 requests. If you are incredibly lucky, the first 100 requests are spread evenly over the 100 positions, so every position in your Hash Map is marked 1.

When the 101st request arrives, a hash collision is 100% certain. Although this key has never been requested, its position is already marked 1, so the Bloom filter does not intercept it.

To reduce false positives, you can increase the length of the Hash Map and choose a better hashing scheme, for example hashing the key multiple times.
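To make the idea concrete, here is a minimal Bloom filter sketch. It is not a production implementation: the class `SimpleBloomFilter` is hypothetical, and the two simple polynomial hashes combined by double hashing are an assumption chosen for brevity, not a recommended hash function.

```java
import java.nio.charset.StandardCharsets;
import java.util.BitSet;

// Hypothetical sketch of a Bloom filter backed by a bit array.
class SimpleBloomFilter {
    private final BitSet bits;
    private final int size;      // N: number of bits in the array
    private final int hashCount; // M: number of hash functions

    SimpleBloomFilter(int size, int hashCount) {
        this.bits = new BitSet(size);
        this.size = size;
        this.hashCount = hashCount;
    }

    // Derives the i-th bit position from two base hashes (double hashing).
    private int position(String key, int i) {
        int h1 = 0;
        int h2 = 0;
        for (byte b : key.getBytes(StandardCharsets.UTF_8)) {
            h1 = 31 * h1 + b;
            h2 = 131 * h2 + b;
        }
        return Math.floorMod(h1 + i * h2, size);
    }

    // Marks every position computed for the key as 1.
    void add(String key) {
        for (int i = 0; i < hashCount; i++) {
            bits.set(position(key, i));
        }
    }

    // false: the key was definitely never added; true: it may have been added.
    boolean mightContain(String key) {
        for (int i = 0; i < hashCount; i++) {
            if (!bits.get(position(key, i))) {
                return false;
            }
        }
        return true;
    }
}
```

With a deliberately tiny filter such as `new SimpleBloomFilter(1, 3)`, adding any single key marks the only bit, so every later lookup reports "may exist". That is the collision case described above taken to the extreme, and it shows why a longer array reduces false positives.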

Besides hash collisions, the Bloom filter can cause a fatal problem: a failed Bloom filter update.

For example, a product ID is requested for the first time. Since it exists in the DB, it needs to be marked in the Hash Map, but the marking fails because of a network problem. The next time this product ID is requested, the request is intercepted by the Bloom filter. Suppose this is the inventory of a best-selling Double 11 product: there are clearly 100,000 items in stock, yet you report that the inventory does not exist. Your boss may well say "You don't need to come in tomorrow."

So if you use a Bloom filter, you must ensure that every update to the Hash Map succeeds 100% of the time, for example through asynchronous updates and retries. This approach therefore carries some implementation cost and risk.

Cache breakdown

Definition: a hotspot cache entry expires at some moment just as a large number of concurrent requests arrive. These requests then put enormous pressure on the database. This is called cache breakdown.

The flow chart is the same as for "cache penetration", but the starting point here is "a hotspot cache entry expires at some moment". For example, when the cache for a very popular product suddenly expires, all the traffic hits the DB directly, causing an excessive number of database requests at that moment. The emphasis is on instantaneity.

There are 2 main solutions:

Distributed lock: only the first thread that acquires the lock queries the database and then populates the cache. Of course, each thread that acquires the lock must first check whether the cache already has the value. In high-concurrency scenarios I don't recommend distributed locks, since they hurt query efficiency;

Never expire: for some hotspot caches, we can set them to never expire, which keeps the cache stable. Note, however, that the hotspot cache must be updated promptly after the data changes, otherwise query results will be wrong. For example, popular products are preheated first and taken offline later.

There is also the "cache renewal" approach: if a cache entry expires after 30 minutes, you can run a scheduled task every 20 minutes to renew it. This approach feels neither fish nor fowl to me, so it is for reference only.
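The lock-based solution, including the re-check of the cache after acquiring the lock, can be sketched as follows. This is a single-process illustration only: a `ReentrantLock` stands in for a real distributed lock, and the class `HotKeyLoader` and its `queryDb` method are hypothetical names.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch: a local ReentrantLock stands in for a distributed lock.
class HotKeyLoader {
    final Map<String, String> cache = new ConcurrentHashMap<>(); // stand-in for Redis
    final AtomicInteger dbQueries = new AtomicInteger();         // counts DB hits
    private final ReentrantLock lock = new ReentrantLock();

    // Stand-in for the expensive database query.
    private String queryDb(String key) {
        dbQueries.incrementAndGet();
        return "value-of-" + key;
    }

    String get(String key) {
        String cached = cache.get(key);
        if (cached != null) {
            return cached;
        }
        lock.lock();
        try {
            // Re-check: another thread may have rebuilt the cache while we waited.
            cached = cache.get(key);
            if (cached != null) {
                return cached;
            }
            // Only the first thread to get here queries the DB and fills the cache.
            String value = queryDb(key);
            cache.put(key, value);
            return value;
        } finally {
            lock.unlock();
        }
    }
}
```

However many threads call `get` for the same expired hot key at once, only one of them runs `queryDb`. The others block on the lock, which is the query-efficiency cost mentioned above.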

Cache avalanche

Definition: a large number of cache entries expire at the same time within a short period, so a large number of requests query the database directly. This puts enormous pressure on the database and, in severe cases, can bring it down. This is called cache avalanche.

If "cache breakdown" is individual resistance, then "cache avalanche" is a collective uprising. So when does a cache avalanche happen?

A large number of cache entries expire at the same time within a short period;

The cache service goes down, causing a large-scale cache failure at that moment.

So what are the solutions?

Add random time to the cache: add a random offset when setting the expiration time, such as 0~60s, which largely prevents a large number of entries from expiring at the same moment;

Distributed lock: add a distributed lock so that the first request loads the data into the cache, and only then can other requests proceed;

Rate limiting and degradation: reduce the request traffic through rate limiting and degradation strategies;

Cluster deployment: deploy Redis as a cluster with a master-slave strategy, so that when the master node goes down, traffic switches to a slave node and the service stays available.

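Example of adding random time to the cache:

// Base expiration time of the cache entry, in seconds (10 minutes)
int exTime = 10 * 60;
// Random number generator (java.util.Random) used to add jitter
Random random = new Random();
// Set the entry with a random extra 0~999 seconds so that keys do not all expire together
jedis.setex(cacheKey, exTime + random.nextInt(1000), value);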

「Article collection」

Collection index: Louzai's selected original articles

Why it's recommended: more than 100 original articles, written over nearly 2 years of persistence. Whether you are a beginner or a seasoned expert, you can always find something suited to your level.

To believe everything in books is worse than having no books at all. My ability is limited, so omissions and mistakes are inevitable. If you find a bug or have a better suggestion, criticism and corrections are welcome. Thank you very much.

边栏推荐

- Login authentication filter

- [road of system analyst] collection of wrong topics in software engineering chapters

- 从传统网络IO 到 IO多路复用

- China Aquatic Fitness equipment market trend report, technical innovation and market forecast

- China embolic coil market trend report, technical innovation and market forecast

- Wireshark filter rule

- Legal liabilities to be borne by the person in charge of the branch

- jpg格式与xml格式文件分离到不同的文件夹

- XML参数架构,同一MTK SW版本兼容两套不同的音频参数

- Lock and reentrankload

猜你喜欢

Simple introduction to key Wizard

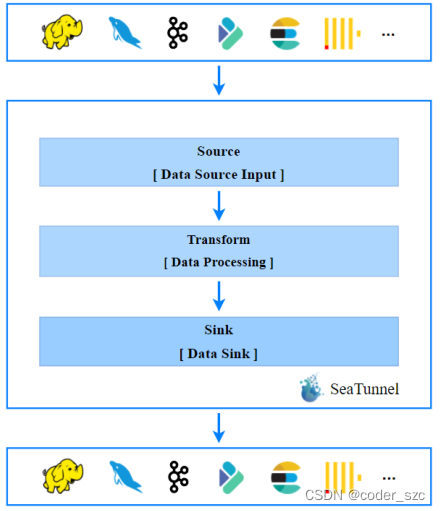

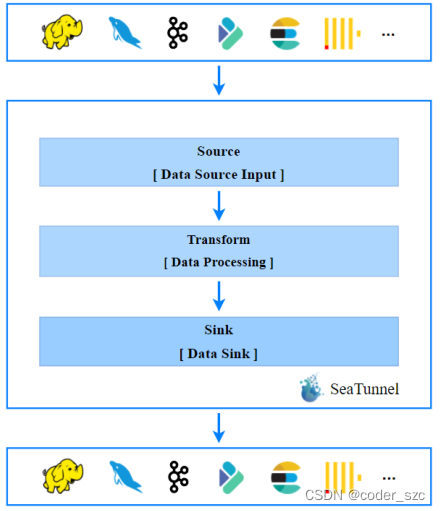

Data integration framework seatunnel learning notes

MySQL 主从,6 分钟带你掌握

数据集成框架SeaTunnel学习笔记

Wireshark filter rule

Word frequency statistics using Jieba database

Beginning is an excellent emlog theme v3.1, which supports emlog Pro

Review notes of naturallanguageprocessing based on deep learning

How Wireshark decrypts WiFi data packets

Details of FPGA syntax

随机推荐

Laravel8 authentication login

Stack and queue classic interview questions

jpg格式与xml格式文件分离到不同的文件夹

Leetcode simple problem: converting an integer to the sum of two zero free integers

Database Experiment 2: data update

Conversion of Halcon 3D depth map to 3D image

肝了一个月的 DDD,一文带你掌握

MySQL 主从,6 分钟带你掌握

AddUser add user and mount hard disk

[untitled]

Halcon 用点来拟合平面

Project management and overall planning

C language - how to define arrays

[Speech] 如何根据不同国家客制化ring back tone

Market trend report, technical innovation and market forecast of Chinese stump crusher

China embolic coil market trend report, technical innovation and market forecast

yolov5

Beginning is an excellent emlog theme v3.1, which supports emlog Pro

Json-c common APIs

nrf52832--官方例程ble_app_uart添加led特性,实现电脑uart和手机app控制开发板led开和关