
DDTL: Distant Domain Transfer Learning

2022-08-04 01:40:00 【A moment of loss】

The DDTL problem: the target domain can be completely different from the source domain.

1、A selective learning algorithm (SLA) is proposed, with a supervised autoencoder or a supervised convolutional autoencoder as the base model for handling different types of input.

2、The SLA algorithm gradually selects useful unlabeled data from the intermediate domains as a bridge, in order to break down the large distribution gap when transferring knowledge between two distant domains.

Transfer learning: a family of learning methods that borrow knowledge from a source domain to enhance learning in a target domain.

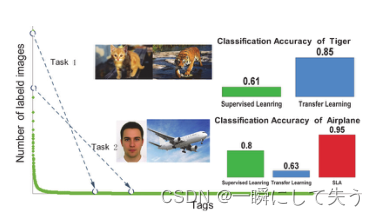

Task 1: transfer knowledge between cat and tiger images. The transfer learning algorithm achieves better performance than some supervised learning algorithms.

Task 2: transfer knowledge between face and airplane images. The transfer learning algorithm fails, since its performance is worse than that of the supervised learning algorithm. When the SLA algorithm is applied, however, better performance is obtained.

1、DDTL problem definition

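Labeled source domain data of size $n_S$: $\mathcal{S} = \{(x_S^1, y_S^1), \ldots, (x_S^{n_S}, y_S^{n_S})\}$;

Labeled target domain data of size $n_T$: $\mathcal{T} = \{(x_T^1, y_T^1), \ldots, (x_T^{n_T}, y_T^{n_T})\}$;

A mixture of unlabeled data from multiple intermediate domains: $\mathcal{I} = \{x_I^1, \ldots, x_I^{n_I}\}$.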

A domain corresponds to a concept or category in a particular classification problem, such as recognizing faces or airplanes in images.

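Problem statement: assume that the classification problems in both the source and target domains are binary, and that all data points lie in the same feature space. Let $p_S(x)$, $p_S(y|x)$ and $p_S(x, y)$ be the marginal, conditional and joint distributions of the source domain data; let $p_T(x)$, $p_T(y|x)$ and $p_T(x, y)$ be the corresponding three distributions for the target domain; and let $p_I(x)$ be the marginal distribution of the intermediate domains. In the DDTL problem:

$p_T(x) \neq p_S(x)$;

$p_T(x) \neq p_I(x)$;

$p_T(y|x) \neq p_S(y|x)$.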

Goal: use the unlabeled data in the intermediate domains to build a bridge between the source and target domains, which are originally far apart, and transfer supervised knowledge from the source domain across that bridge to train an accurate classifier for the target domain.

Note: not all data in the intermediate domains are expected to be similar to the source domain data; some may be very different. Simply using all intermediate data to build the bridge may therefore fail.

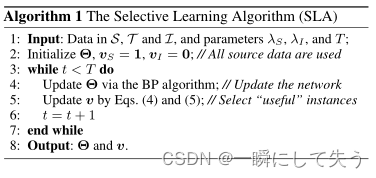

2、SLA: Selective Learning Algorithm

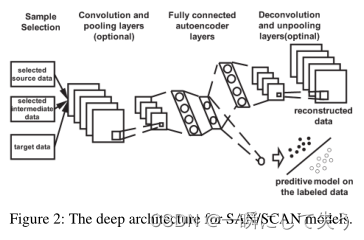

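2.1 Autoencoders and their variants

An autoencoder is an unsupervised feedforward neural network with an input layer, one or more hidden layers, and an output layer. It usually consists of two processes: encoding and decoding.

Input: $x$;

Encoding function: $f_e(\cdot)$ encodes the input, mapping it to a hidden representation;

Decoding function: $f_d(\cdot)$ decodes the hidden representation to reconstruct $x$.

The process of the autoencoder can be summarized as:

Encoding: $h = f_e(x)$;

Decoding: $\hat{x} = f_d(h)$.

Here $\hat{x}$ is the reconstructed input, an approximation to the original input $x$. The pair of encoding and decoding functions is learned by minimizing the reconstruction error over all training data, i.e.,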

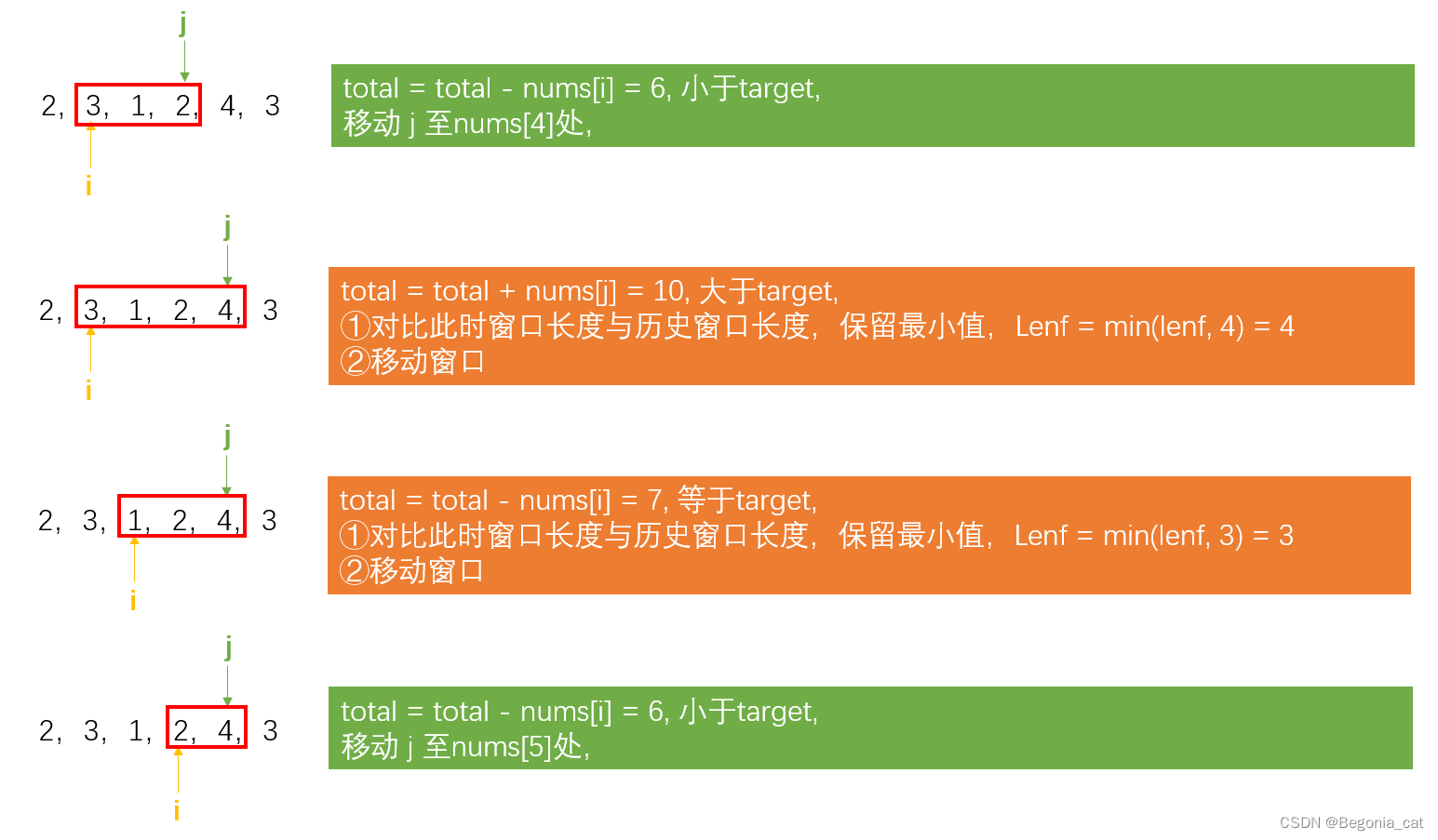

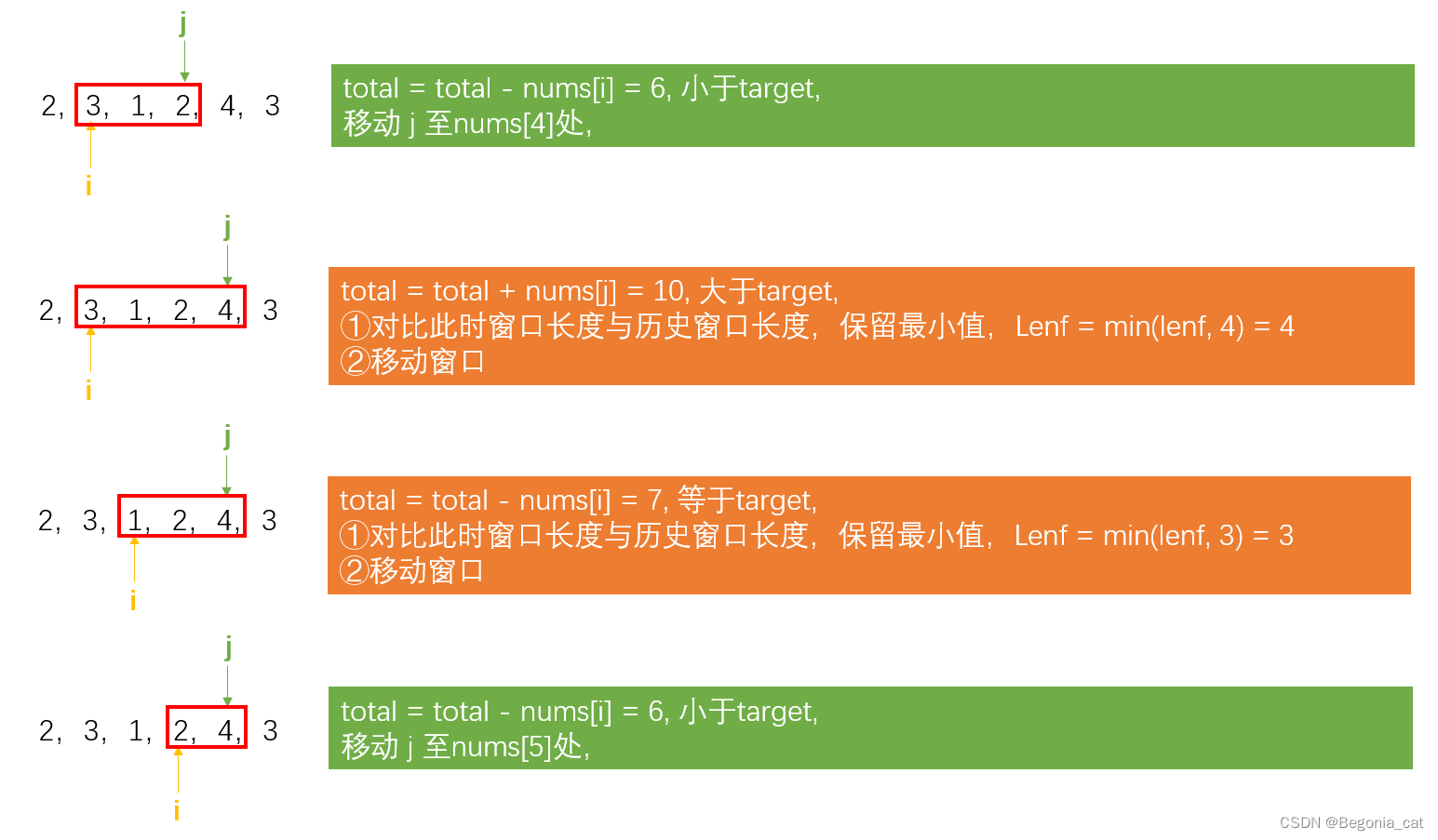
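The encode/decode/reconstruct cycle described above can be illustrated with a small sketch. It assumes purely linear encoding and decoding maps with hand-picked toy weights (`W_e` and `W_d` are hypothetical names, not taken from the original); a real autoencoder would use nonlinear layers and learn the weights by gradient descent.

```python
def matvec(W, x):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def encode(x, W_e):
    """Map an input to its hidden representation (linear here for simplicity)."""
    return matvec(W_e, x)

def decode(h, W_d):
    """Map a hidden representation back to input space (linear here)."""
    return matvec(W_d, h)

def reconstruction_error(x, x_hat):
    """Squared Euclidean distance between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def total_error(data, W_e, W_d):
    """Sum of reconstruction errors over all training points."""
    return sum(reconstruction_error(x, decode(encode(x, W_e), W_d)) for x in data)

# Toy weights (assumption): keep the first two coordinates, drop the third.
W_e = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
W_d = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
data = [[1.0, 2.0, 3.0], [4.0, 5.0, 0.0]]

print(total_error(data, W_e, W_d))
```

The second point lies entirely in the subspace the toy encoder keeps, so it is reconstructed exactly; the first loses its third coordinate, which is the only source of error.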

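2.2 Instance selection by reconstruction error

In practice, because the source domain and the target domain are far apart, only part of the source domain data may be useful for the target domain, and the same holds for the intermediate domains. Therefore, to select useful instances from the intermediate domains and to remove source domain instances that are irrelevant to the target domain, a pair of encoding and decoding functions is learned by minimizing the reconstruction error on the selected instances in the source and intermediate domains and on all instances in the target domain. The objective function to be minimized is formulated as follows:

$$\mathcal{J}_1(f_e, f_d, v_S, v_I) = \frac{1}{n_S}\sum_{i=1}^{n_S} v_S^i \|\hat{x}_S^i - x_S^i\|_2^2 + \frac{1}{n_I}\sum_{j=1}^{n_I} v_I^j \|\hat{x}_I^j - x_I^j\|_2^2 + \frac{1}{n_T}\sum_{i=1}^{n_T} \|\hat{x}_T^i - x_T^i\|_2^2 + R(v_S, v_I)$$

where $n_S$, $n_I$ and $n_T$ are the numbers of source, intermediate and target instances, and $\hat{x}$ denotes the reconstruction of $x$.

$v_S^i$, $v_I^j \in \{0, 1\}$: selection indicators for the $i$-th instance in the source domain and the $j$-th instance in the intermediate domains. When the value is 1, the corresponding instance is selected; otherwise it is not.

$R(v_S, v_I)$: a regularization term on $v_S$ and $v_I$ that rules out the trivial solution in which all values of $v_S$ and $v_I$ are set to zero. It is defined as:

$$R(v_S, v_I) = -\frac{\lambda_S}{n_S}\sum_{i=1}^{n_S} v_S^i - \frac{\lambda_I}{n_I}\sum_{j=1}^{n_I} v_I^j$$

Minimizing this term encourages selecting as many instances as possible from the source and intermediate domains. The two regularization parameters $\lambda_S$ and $\lambda_I$ control the importance of this regularization term.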

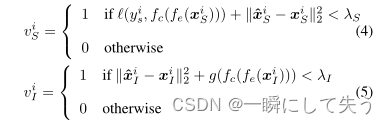
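To make the role of the selection indicators and the regularizer concrete, here is a minimal sketch of such a selective reconstruction objective. It assumes the per-instance reconstruction errors are already computed, that indicators are 0/1 lists, and that the regularizer subtracts a weighted fraction of selected instances; the names `lam_src` and `lam_mid` are hypothetical stand-ins for the two regularization parameters.

```python
def selective_objective(err_src, err_mid, err_tgt, v_src, v_mid, lam_src, lam_mid):
    """Reconstruction error on selected source/intermediate instances and on
    all target instances, plus a regularizer that rewards selection."""
    n_s, n_i, n_t = len(err_src), len(err_mid), len(err_tgt)
    src_term = sum(v * e for v, e in zip(v_src, err_src)) / n_s
    mid_term = sum(v * e for v, e in zip(v_mid, err_mid)) / n_i
    tgt_term = sum(err_tgt) / n_t  # target instances are always used
    reg = -lam_src * sum(v_src) / n_s - lam_mid * sum(v_mid) / n_i
    return src_term + mid_term + tgt_term + reg

# Toy per-instance reconstruction errors (made-up numbers for illustration).
err_src, err_mid, err_tgt = [1.0, 4.0], [2.0, 2.0], [3.0]

# Without the regularizer, deselecting everything gives the lowest value.
none_selected = selective_objective(err_src, err_mid, err_tgt, [0, 0], [0, 0], 0.0, 0.0)
some_selected = selective_objective(err_src, err_mid, err_tgt, [1, 0], [1, 1], 0.0, 0.0)

# With a sufficiently large regularization weight, selecting instances pays off.
some_selected_reg = selective_objective(err_src, err_mid, err_tgt, [1, 0], [1, 1], 5.0, 5.0)
none_selected_reg = selective_objective(err_src, err_mid, err_tgt, [0, 0], [0, 0], 5.0, 5.0)
```

In this toy setting the all-zero indicators win when the regularization weights are zero, which is exactly the degenerate solution the regularization term is meant to rule out.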

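2.3 Incorporation of auxiliary information

Auxiliary information is incorporated when learning the hidden representations for the different domains.

Source and target domains: the data labels can be used as auxiliary information;

Intermediate domains: no label information is available.

The predictions on the intermediate domains are therefore treated as auxiliary information, and the prediction confidence is used to guide the learning of the hidden representations. Specifically, auxiliary information is incorporated into learning by minimizing the following function:

$$\mathcal{J}_2(f_c, f_e, f_d) = \frac{1}{n_S}\sum_{i=1}^{n_S} v_S^i\, \ell(y_S^i, f_c(h_S^i)) + \frac{1}{n_T}\sum_{i=1}^{n_T} \ell(y_T^i, f_c(h_T^i)) + \frac{1}{n_I}\sum_{j=1}^{n_I} v_I^j\, g(f_c(h_I^j))$$

where $\ell(\cdot, \cdot)$ is the classification loss and $h$ is the hidden representation of an instance.

$f_c(\cdot)$: the classification function, which outputs classification probabilities;

$g(\cdot)$: the entropy function, defined as $g(z) = -z \ln z - (1 - z)\ln(1 - z)$, where $0 \le z \le 1$;

$g(\cdot)$ is used to select instances with high prediction confidence in the intermediate domains.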

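2.4 Overall objective function

The final objective function of DDTL is:

$$\min_{\Theta, v} \mathcal{J} = \mathcal{J}_1 + \mathcal{J}_2, \quad \text{s.t. } v_S^i, v_I^j \in \{0, 1\}$$

where $v = \{v_S, v_I\}$ and $\Theta$ denotes all the parameters of the functions $f_c(\cdot)$, $f_e(\cdot)$ and $f_d(\cdot)$.

The objective is optimized with the block coordinate descent (BCD) method: in each iteration, the variables in each block are optimized in turn while the other variables are held fixed.

In the source domain, instances with low reconstruction error and low training loss are selected; in the intermediate domains, instances with low reconstruction error and high prediction confidence are selected.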
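The indicator-update block of such an alternating scheme can be sketched as follows. This is an illustration under stated assumptions, not the authors' exact procedure: with the network parameters held fixed, each binary indicator enters the objective linearly, so if the regularizer rewards each selected instance by a fixed weight (`lam_src` and `lam_mid`, hypothetical names), the best choice is to select an instance exactly when its per-instance cost is below that weight.

```python
def update_indicators(costs, lam):
    """Select an instance (1) when its cost is below the threshold lam, else 0."""
    return [1 if c < lam else 0 for c in costs]

def select_source(recon_err, cls_loss, lam_src):
    """Source instances: cost = reconstruction error + classification loss."""
    costs = [r + l for r, l in zip(recon_err, cls_loss)]
    return update_indicators(costs, lam_src)

def select_intermediate(recon_err, pred_entropy, lam_mid):
    """Intermediate instances: cost = reconstruction error + prediction entropy
    (low entropy corresponds to high prediction confidence)."""
    costs = [r + g for r, g in zip(recon_err, pred_entropy)]
    return update_indicators(costs, lam_mid)

# Made-up per-instance quantities for illustration.
v_src = select_source([0.1, 2.0], [0.2, 0.1], lam_src=1.0)
v_mid = select_intermediate([0.1, 0.1], [0.05, 0.69], lam_mid=0.5)
```

In a full loop this step would alternate with a parameter-update step (for example, a few epochs of gradient descent on the network with the indicators frozen) until the selection stops changing.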

The deep learning architecture is shown in the figure below.

3、Summary

This paper studies a new problem, DDTL, in which the source domain and the target domain are far apart but can be connected through some intermediate domains. To solve the DDTL problem, the SLA algorithm is proposed, which gradually selects unlabeled data from the intermediate domains to connect the two distant domains.

边栏推荐

- Variable string

- Continuing to invest in product research and development, Dingdong Maicai wins in supply chain investment

- 天地图坐标系转高德坐标系 WGS84转GCJ02

- OpenCV如何实现Sobel边缘检测

- FeatureNotFound( bs4.FeatureNotFound: Couldn't find a tree builder with the features you requested:

- Please refer to dump files (if any exist) [date].dump, [date]-jvmRun[N].dump and [date].dumpstream.

- nodejs安装及环境配置

- 【正则表达式】笔记

- The 600MHz band is here, will it be the new golden band?

- nodejs install multi-version version switching

猜你喜欢

如何用C语言代码实现商品管理系统开发

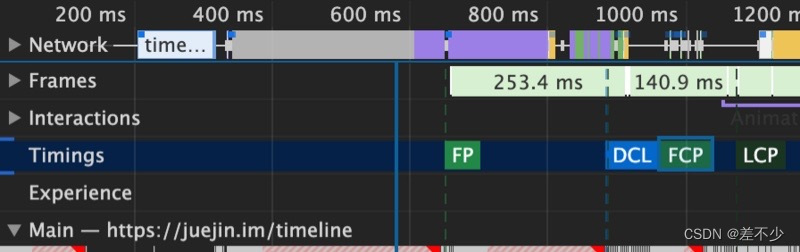

计算首屏时间

typescript58 - generic classes

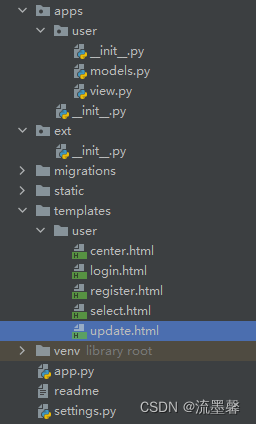

Flask Framework Beginner-06-Add, Delete, Modify and Check the Database

![[store mall project 01] environment preparation and testing](/img/78/415b18a26fdc9e6f59b59ba0a00c4f.png)

[store mall project 01] environment preparation and testing

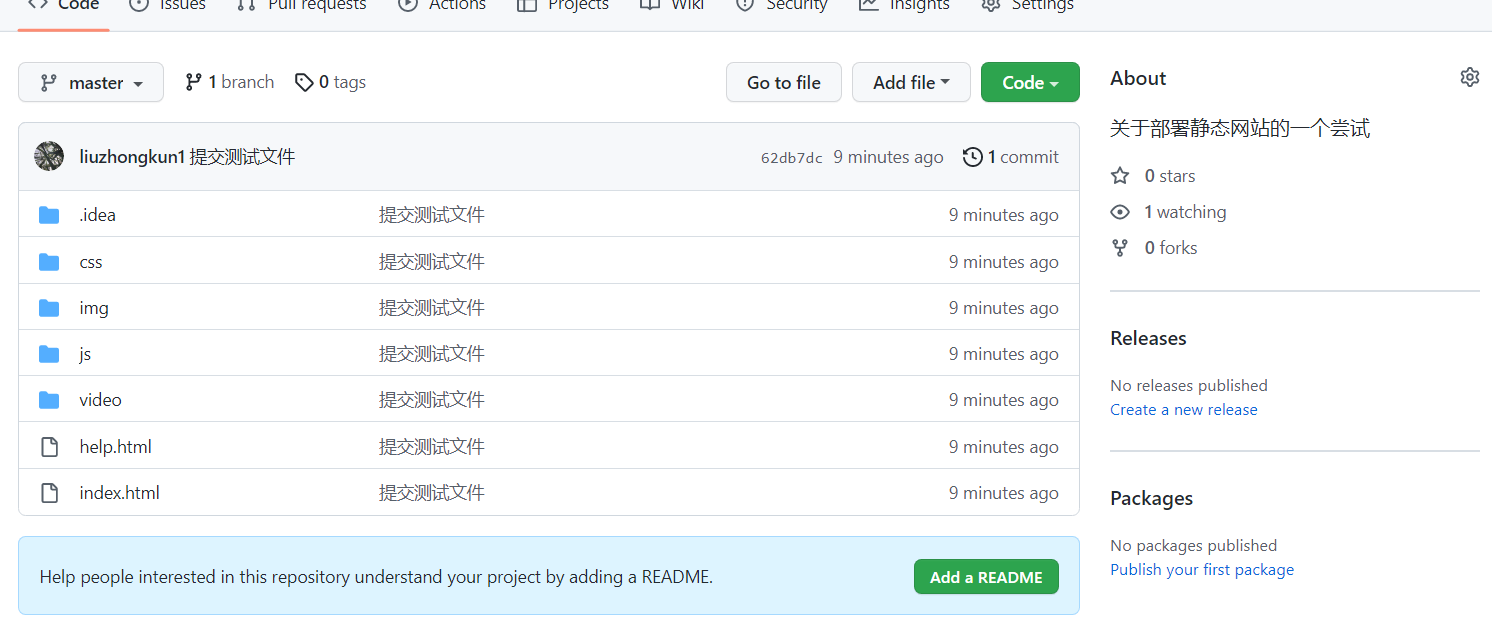

Quickly build a website with static files

安全至上:落地DevSecOps最佳实践你不得不知道的工具

Array_Sliding window | leecode brushing notes

Continuing to pour money into commodities research and development, the ding-dong buy vegetables in win into the supply chain

数组_滑动窗口 | leecode刷题笔记

随机推荐

MySQL回表指的是什么

计算首屏时间

2022 China Computing Power Conference released the excellent results of "Innovation Pioneer"

Multithreading JUC Learning Chapter 1 Steps to Create Multithreading

简单的线性表的顺序表示实现,以及线性表的链式表示和实现、带头节点的单向链表,C语言简单实现一些基本功能

2022 中国算力大会发布“创新先锋”优秀成果

Continuing to invest in product research and development, Dingdong Maicai wins in supply chain investment

FeatureNotFound( bs4.FeatureNotFound: Couldn't find a tree builder with the features you requested:

Continuing to invest in product research and development, Dingdong Maicai wins in supply chain investment

GNSS【0】- 专题

KunlunBase 1.0 发布了!

LDO investigation

appium软件自动化测试框架

实例036:算素数

Flask Framework Beginner-06-Add, Delete, Modify and Check the Database

Use of lombok annotation @RequiredArgsConstructor

Apache DolphinScheduler新一代分布式工作流任务调度平台实战-中

【虚拟化生态平台】虚拟化平台搭建

HBuilderX的下载安装和创建/运行项目

nodejs安装及环境配置

的

的 ;

; 的

的 ;

; .

. 、

、 和

和 are the source domain data

are the source domain data ,

, ,

, ;

; is the marginal distribution of the intermediate domain.在DDTL问题中:

is the marginal distribution of the intermediate domain.在DDTL问题中: ;

; ;

; .

. ;

; Encode it to map it to a hidden representation;

Encode it to map it to a hidden representation; Decode to reconstructx.

Decode to reconstructx. ;

; .

. is an approximation to the original inputxthe reconstructed input.By minimizing the reconstruction error on all training data,即

is an approximation to the original inputxthe reconstructed input.By minimizing the reconstruction error on all training data,即

、

、 ∈ {0,1}:

∈ {0,1}: :

: 和

和 Regularization term on ,通过将

Regularization term on ,通过将

和

和 Controls the importance of this regularization term.

Controls the importance of this regularization term.

:is the classification function that outputs the classification probability;

:is the classification function that outputs the classification probability; ,其中0≤ z≤ 1;

,其中0≤ z≤ 1; 用于选择

用于选择

;Θ表示函数

;Θ表示函数

selected to have low reconstruction error and low training loss.

selected to have low reconstruction error and low training loss.