
Kuangshen Shuo (狂神说) Redis notes

2022-07-06 04:44:00 【RainHey】

Contents

- NoSQL overview

- Redis introduction

- Basic Redis commands

- Basic data types

- Three special data types

- Basic Redis transaction operations

- Implementing optimistic locking with Redis

- Jedis

- Spring Boot integration with Redis

- redis.conf

- Persistence: RDB

- Persistence: AOF

- Redis publish/subscribe

- Redis cluster

- Sentinel mode

- Cache penetration and avalanche

NoSQL overview

Why use NoSQL

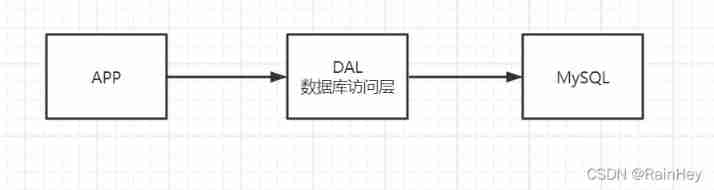

The single-machine MySQL era

In the 1990s, a website generally did not receive much traffic, so a single database was enough. As the number of users grew, the following problems appeared:

- The data volume became too large for one machine to hold

- The data's indexes (B+ trees) no longer fit in one machine's memory

- The request volume (mixed reads and writes) became more than one machine could handle

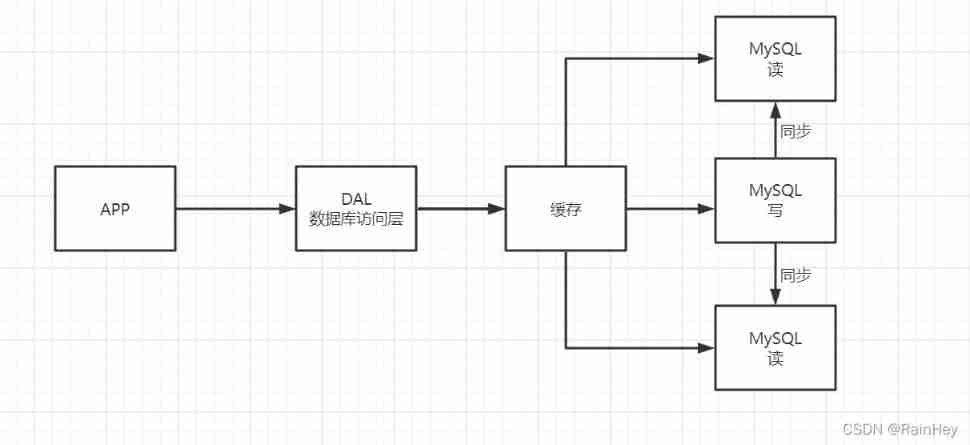

Memcached( cache )+MySQL+ Split Vertically

Website 80% It's all about reading , It's very troublesome to query the database every time ! So we want to reduce the pressure on the database , We can use caching to ensure efficiency !

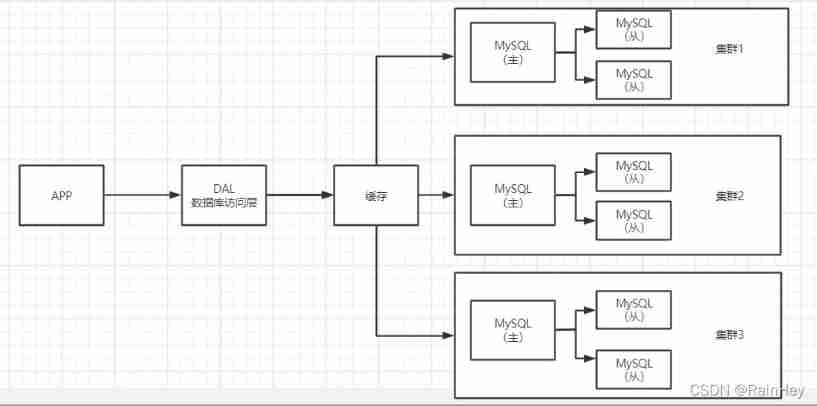

Sub database and sub table + Horizontal split + MySQL colony

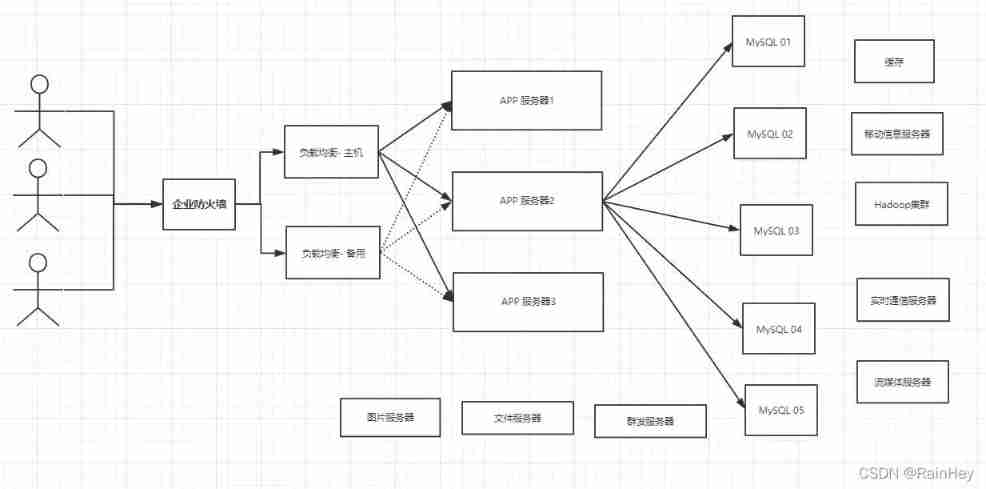

Current basic Internet projects

User's personal information , Social networks , Location . User generated data , User logs and so on exploded !

And that's where we need to use it NoSQL Database ,Nosql Can handle the above situation very well !

What is NoSQL

NoSQL = Not Only SQL

Relational database: tables made of rows and columns; all data in the same table has the same structure

Non-relational database: data is stored without a fixed format, and the database can scale out horizontally

NoSQL characteristics

- Easy to scale (there are no relationships between data items, so it is easy to extend)

- Large data volumes with high performance (Redis can perform about 80,000 writes and 110,000 reads per second; a NoSQL cache works at the record level, which is a fine-grained cache, so performance is relatively high)

- Diverse data types (there is no need to design the database schema in advance; you can use it as you go)

Redis introduction

What is Redis

Redis (Remote Dictionary Server) is a remote dictionary service

It is an open-source key-value database written in ANSI C. It supports network access, can run purely in memory or with persistence, keeps a log-style record of changes, and provides APIs for many languages

Data is cached in memory. Redis periodically writes updated data to disk, or appends modification operations to an append-only log file, and implements master-slave replication on top of this

Redis is an open-source (BSD-licensed) in-memory data structure store that can be used as a database, a cache, and a message broker. It supports strings, hashes, lists, sets, sorted sets, bitmaps, HyperLogLogs, and more. It has built-in replication, Lua scripting, LRU eviction, transactions, and different levels of on-disk persistence. It provides high availability through Redis Sentinel and automatic partitioning through Redis Cluster

Features

A variety of data types

Persistence

Clustering

Transactions

…

Single thread

Redis executes commands on a single thread (since Redis 6, optional I/O threads can handle network reads and writes, but command execution remains single-threaded). Redis keeps all data in memory, so operating on it with a single thread is the most efficient approach: multithreading incurs CPU context switches, which are expensive, and an in-memory system is most efficient when there is no context switching. Repeated reads and writes all happen on the same CPU, so when the data lives in memory, a single thread is the best fit

Linux Lower installation

Download installation package

redis-6.2.6.tar.gzUpload to Linux,

/usr/local/softwareCatalogGet into

/usr/local/softwareCatalog , decompressiontar -zxvf redis-6.2.6.tar.gzEnter the unzip file

cd redis-6.2.6Installation environment

yum install gcc-c++perform

makeAnd then executemake installredis Default installation path /usr/local/bin, Entering this directory will redis Copy the configuration file of to /usr/local/bin/rainhey_config

[[email protected] bin]# cp ../software/redis-6.2.6/redis.conf ./rainhey_configmodify rainhey_config Profile under , modify

daemonize yesStart with the modified configuration file redis,

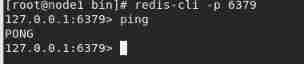

[[email protected] bin]# redis-server rainhey_config/redis.confOpen client connection server,

redis-cli -p 6379test

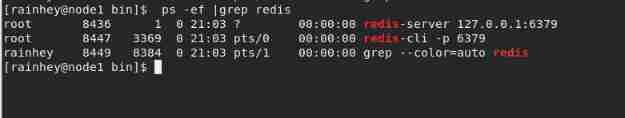

see Redis Is the process on

close Redis service

Basic Redis commands

See the official documentation for the complete list of commands

Database

select 3 // Switch databases with select. Redis has 16 databases by default (numbered 0-15) and uses database 0 by default

dbsize // Number of keys in the current database

Setting values

set name rainhey // Store a key-value pair

get name // Get the value

keys * // List all keys

Clearing

flushdb // Clear the current database

flushall // Clear all databases

Checking existence

exists name // Check whether the key exists; returns 1 if it exists, 0 if not

Moving

move name 1 // Move the key from the current database to database 1

Setting expiration

expire age 20 // Set the key's expiration time (in seconds); the key is removed automatically after it expires

ttl age // Check the key's remaining time to live

Type

type name // Check the key's type

Basic data types

String

Append

append name test // Append the string "test" to the value of key name; if the key does not exist, this is equivalent to set

Length

strlen name // Get the length of the string value stored at the key

Increment and decrement

incr view // Increment view by 1

decr view // Decrement view by 1

incrby view 10 // Increment view by 10

decrby view 11 // Decrement view by 11

Ranges

getrange test 0 4 // Get the substring at indexes [0,4]; both ends are inclusive, and [0,-1] means the whole string

setrange test 1 *** // Overwrite part of the string: 1 is the index where replacement starts, *** is the replacement value

Expiration

setex key1 20 "hello" // setex (set with expire): set the value with a 20-second expiration

Set if not exists

setnx key2 "hello" // setnx (set if not exist): set the value only if the key does not exist

Batch operations

mset k1 v1 k2 v2 k3 v3 // Set multiple values

mget k1 k2 k3 // Get multiple values

msetnx k1 v1 k4 v4 // Set the values only if none of the keys exist; otherwise set nothing

Objects

set user:1 {name:zhangsan,age:3} // Set the user:1 object, storing its value as a JSON string

mset user:1:name zhangsan user:1:age 55 // The key is cleverly designed here as user:{id}:{field}

Get and set

getset key2 v2 // Get the old value first, then set the new one

List

Most list commands start with L (commands that work on the right end, such as rpush and rpop, start with R)

Adding: lpush pushes onto the head of the list, like pushing onto a stack, while rpush appends to the tail, like entering a queue. Reading starts from the head, so the elements added with lpush come first, followed by those added with rpush

lpush list one // Add an element to the head of list

rpush list four // Add an element to the tail of list

Ranges

lrange list 0 -1 // Get all values from list

Removing from the left and right

lpop list // Remove the first element of list

rpop list // Remove the last element of list

rpoplpush list list1 // Remove the last element of list and lpush it onto list1

Getting by index

lindex list 1 // Get the value at index 1 of list

List length

llen list // Get the length of list

Remove

lrem list 2 four // Remove two occurrences of the value four from list

Trim

ltrim list 0 3 // Keep only the elements at indexes [0,3] of list

Replace

lset list 0 one // Replace the value at the given index

Insert

linsert list before world other // Insert other before world

Set

A Redis Set is an unordered collection of strings. Members are unique, so a set cannot contain duplicate data

Redis sets are implemented with hash tables, so adding, removing, and looking up members are all O(1)

sadd myset hello1 // Add a member

smembers myset // List the members

sismember myset hello1 // Check whether hello1 is a member of myset

scard myset // Get the number of elements in myset

srem myset hello1 // Remove hello1

srandmember myset 2 // Return two random members

spop myset 1 // Randomly remove the given number of elements

smove myset1 myset2 one // Move one from myset1 to myset2

sdiff set1 set2 // Difference: the members of set1 that are not in set2

sinter set1 set2 // Intersection

sunion set1 set2 // Union

Hash

Map aggregate ,key-map, At this time, the value is map Set , The nature and string No big difference

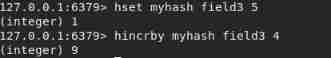

hset myhash field1 rainhey // Save key value pairs

hget myhash field1 // Value

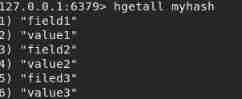

hmset myhash field1 value1 field2 value2 // Batch storage of key value pairs

hmget myhash field1 field2 // Batch values

hgetall myhash // Get all key value pairs

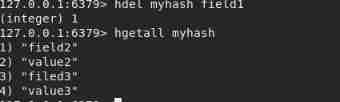

hdel myhash field1 // Delete key, Corresponding value No more.

hlen myhash // Check the logarithm of key value pairs

hkeys myhash // Get all key value pairs key

hvals myhash // Get all key value pairs value

hincrby myhash field3 4 //field3 Corresponding value plus 4

hsetnx myhash field4 hello // There is no creation , There is a problem that cannot be set

Hash More suitable for object storage ,Sring More suitable for string storage !

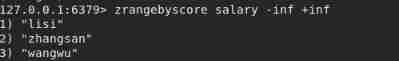

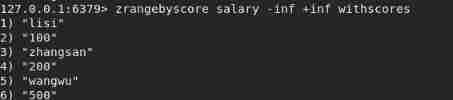

Zset (sorted set)

A zset adds a score to each value of a set: set k1 v1 becomes zset k1 score v1

zadd myzset 1 one // Add one member

zadd myzset 3 three 4 four // Add multiple members

zrange myzset 0 -1 // Get the members, sorted from smallest to largest score

zrangebyscore salary -inf +inf // Sort by score, ascending

zrangebyscore salary -inf +inf withscores // Sort by score and include the scores

zrem salary lisi // Remove the specified member

zcard salary // Get the number of members

zrevrange salary 0 -1 // Sort from largest to smallest

zcount myzset 1 3 // Get the number of members with a score between 1 and 3

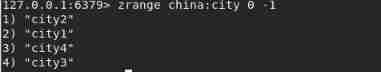

Three special data types

Geospatial Location

There are only six commands

- Effective longitude from -180 C to 180 degree .

- Effective latitude from -85.05112878 C to 85.05112878 degree

geoadd china:city 114.878872 30.459422 beijing // Add location , Parameters key longitude dimension name

geoadd china:city 116.413384 39.910925 guangzhou 79.920212 37.118336 hetian // Multifocal

geopos china:city beijing // Get the latitude and longitude of a place

geopos china:city shanghai beijing // Multifocal

distance

- m Expressed in meters

- km Expressed in kilometers

- mi In miles .

- ft In feet

geodist china:city shanghai beijing // Calculated distance

geodist china:city shanghai beijing km // km In units of

georadius china:city 118 30 500 km // Find in longitude and latitude 118 30 For the center of a circle ,500km A city with a radius

georadius china:city 119 30 200 km withdist // Find and take the distance

georadius china:city 119 30 200 km withcoord // Find and bring the longitude and latitude coordinates

georadius china:city 119 30 200 km withcoord withdist count 2 // Find and bring the coordinate distance 、 Limit the number of searches

eoradiusbymember china:city city3 100 km // Find other members whose members are center points

geohash china:city city1 city2 city3 // The command returns 11 A character geohash character string , Convert two-dimensional longitude and latitude into one-dimensional string , The closer the string is, the closer the distance is

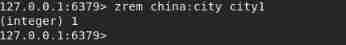

Geo The underlying implementation principle of is Zset, So you can also Zset Command to operate Geo
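To make the geodist distance calculation above more concrete, here is a minimal Java sketch of a great-circle (haversine) distance on a spherical Earth. The Redis documentation describes GEODIST as assuming a perfect sphere; the class name, method name, and the exact radius constant below are assumptions for illustration, not Redis code.

```java
/** Illustrative sketch only: haversine distance between two longitude/latitude points. */
final class GeoDistanceSketch {
    // Earth radius in meters; assumed here to match the constant Redis uses.
    static final double EARTH_RADIUS_METERS = 6372797.560856;

    /** Arguments follow the geoadd order: longitude first, then latitude (degrees). */
    static double distanceMeters(double lon1, double lat1, double lon2, double lat2) {
        double phi1 = Math.toRadians(lat1);
        double phi2 = Math.toRadians(lat2);
        double deltaPhi = phi2 - phi1;
        double deltaLambda = Math.toRadians(lon2 - lon1);
        // Haversine term for the central angle between the two points
        double a = Math.pow(Math.sin(deltaPhi / 2), 2)
                + Math.cos(phi1) * Math.cos(phi2) * Math.pow(Math.sin(deltaLambda / 2), 2);
        // Clamp to 1.0 to guard against rounding just above the asin domain
        return 2 * EARTH_RADIUS_METERS * Math.asin(Math.min(1.0, Math.sqrt(a)));
    }
}
```

Dividing the result by 1000 gives kilometers, which corresponds to passing km to geodist.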

View all elements

Remove elements

HyperLogLog cardinality statistics

Cardinality: the number of distinct elements in a data set

pfadd myset a b c d e f g h i j // Add elements

pfadd myset2 i j k c v z h

pfcount myset // Count the distinct elements

pfmerge myset3 myset myset2 // Merge myset and myset2 into myset3

If some counting error is acceptable (Redis documents a standard error of about 0.81%), HyperLogLog is a good choice.

If no error is acceptable, use a set or your own data type.

Bitmap

Anything that has only two states can be recorded with a bitmap, which operates on individual binary bits

setbit sign 2 0 // 2 is the bit offset, 0 is the state at that position

getbit sign 6 // Get the state at offset 6

bitcount sign // Count the bits of sign that are set to 1
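The same idea can be sketched in plain Java with java.util.BitSet. This is only an in-memory analogy for setbit, getbit, and bitcount; the class and method names are hypothetical and it does not talk to Redis.

```java
import java.util.BitSet;

/** Illustrative sketch: a weekly sign-in record kept as one bit per day. */
final class SignInSketch {
    private final BitSet days = new BitSet(7); // bit i = day i of the week

    /** Like setbit sign offset 0|1. */
    void setBit(int offset, boolean signedIn) {
        days.set(offset, signedIn);
    }

    /** Like getbit sign offset. */
    boolean getBit(int offset) {
        return days.get(offset);
    }

    /** Like bitcount sign: the number of bits set to 1. */
    int bitCount() {
        return days.cardinality();
    }
}
```

One bit per state keeps the record very compact, which is why bitmaps suit two-state data such as daily sign-ins.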

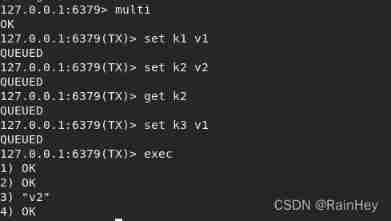

Redis Basic transaction operations

Redis The nature of business : A collection of commands , All commands of a transaction are serialized , Execute in sequence during transaction execution

Redis A single command remains atomic , But transactions don't guarantee atomicity

Redis Transactions have no concept of isolation levels

All commands are not executed directly in the transaction , Only after issuing the execution command can it be executed

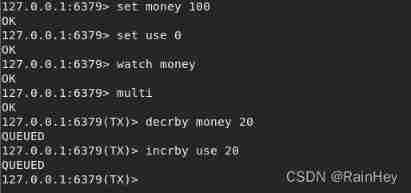

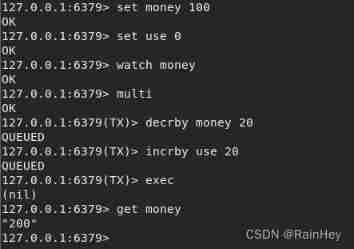

- Open transaction multi

- Order to join the team

- Perform transactions exec

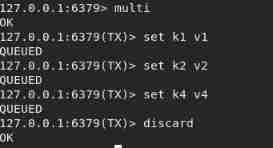

discard Give up the business , None of the commands in the queue will be executed

If there is a compiled exception in a command in the transaction , Then all commands will not be executed ; If a command in a transaction has a runtime exception ( Such as 1/0), Then other commands can be executed normally

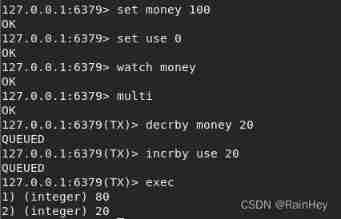

Redis Achieve optimistic lock

Pessimistic locking : I think it's going to go wrong all the time , Whatever you do, you'll lock it

Optimism lock : I don't think anything will go wrong at any time , No locks , When updating the data, judge whether someone has modified the data during this period

watch key Monitor the specified data , It's like optimistic locking

Normal execution

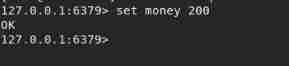

Under abnormal conditions , Start a client simulation queue jumping thread

Threads 1: Not yet implemented exec

The thread 2: Changed the value

Threads 1 perform : The result is null, The transaction was not executed

If transaction execution fails , Unlock unwatch Get the latest value , Then lock the transaction
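The watch / exec / retry cycle described above can be sketched in plain Java as a version check. This is an in-memory analogy under stated assumptions, not a Redis client: the class WatchedCounter, its version field, and the spend helper are all hypothetical names.

```java
/** Illustrative sketch: optimistic locking on a single value via a version number. */
final class WatchedCounter {
    private long value;
    private long version; // bumped on every write, similar to Redis flagging a watched key as modified

    WatchedCounter(long initial) {
        this.value = initial;
    }

    synchronized long get() {
        return value;
    }

    /** Like WATCH: remember the version observed at watch time. */
    synchronized long watch() {
        return version;
    }

    /** A plain write from any other client. */
    synchronized void set(long newValue) {
        value = newValue;
        version++;
    }

    /** Like EXEC: apply the write only if nobody has written since watch(). */
    synchronized boolean exec(long watchedVersion, long newValue) {
        if (watchedVersion != version) {
            return false; // comparable to EXEC returning nil
        }
        value = newValue;
        version++;
        return true;
    }

    /** Retry loop: watch again, recompute from the latest value, and try once more. */
    static long spend(WatchedCounter money, long amount) {
        while (true) {
            long seen = money.watch();
            long updated = money.get() - amount;
            if (money.exec(seen, updated)) {
                return updated;
            }
        }
    }
}
```

No lock is held between watch() and exec(); a conflicting write is only detected at exec time, which is the defining property of optimistic locking.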

Jedis

Jedis is the Java connection tool officially recommended by Redis: middleware for operating Redis from Java

- Import dependencies

    <dependency>
        <groupId>redis.clients</groupId>
        <artifactId>jedis</artifactId>
        <version>4.1.1</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.72</version>
    </dependency>

- Code example

    import com.alibaba.fastjson.JSONObject;
    import redis.clients.jedis.Jedis;
    import redis.clients.jedis.Transaction;

    public class Test {
        public static void main(String[] args) {
            JSONObject jsonObject = new JSONObject();
            jsonObject.put("hello", "world");
            jsonObject.put("name", "rainhey");
            String result = jsonObject.toJSONString();

            // 1. Create a Jedis object
            Jedis jedis = new Jedis("192.168.0.100", 6379);
            jedis.auth("123456");

            // 2. The Jedis methods correspond to the commands covered earlier
            Transaction multi = jedis.multi();
            try {
                multi.set("user1", result);
                multi.set("user2", result);
                multi.exec();
            } catch (Exception e) {
                // Abandon the transaction if queuing or executing fails
                multi.discard();
                e.printStackTrace();
            } finally {
                System.out.println(jedis.get("user1"));
                System.out.println(jedis.get("user2"));
                jedis.close();
            }
        }
    }

Springboot Integrate Redis

Quick start

Springboot 2.x after , Originally used Jedis By Lettuce Replace

Jedis: Using direct connection , If multiple threads operate , It's not safe . If you want to avoid insecurity , Use jedis pool Connection pool ! More like BIO Pattern

Lettuce: use netty, Instances can be shared across multiple threads , There is no thread unsafe situation ! Can reduce thread data , More like NIO Pattern

- Import dependence

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

- configure connections

spring.redis.host=192.168.0.100

spring.redis.port=6379

spring.redis.password=123456

Pay attention to some connection pool related configurations , Be sure to use Lettuce The connection pool

- test

@SpringBootTest

class RedisSpringApplicationTests {

@Autowired

private RedisTemplate redisTemplate;

@Test

void contextLoads() {

/* In addition to the basic operations , Common methods can be through redisTemplate operation , Things like business and basic CRUD redisTemplate. opsForValue: Operation string , similar String opsForList: operation list opsForSet() opsForZSet() opsForHash() opsForGeo() opsForHyperLogLog()*/

redisTemplate.opsForValue().set("mykey", "rainhey");

System.out.println(redisTemplate.opsForValue().get("mykey"));

/* Some commands passed Redis Connection object operation of * */

/*RedisConnection connection = redisTemplate.getConnectionFactory().getConnection(); connection.flushAll(); connection.flushDb();*/

}

}

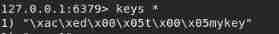

stay Redis Garbled code is found when viewing data in , This is related to the serialization of storage objects , See the source code Redis By default JDK Serialization mode , At this time Redis The data you see is garbled

Serialization

Java provides an object serialization mechanism in which an object can be represented as a sequence of bytes containing the object's data, information about the object's type, and the types of the data stored in the object. After a serialized object is written to a file, it can be read back from the file and deserialized; that is, the type information, the object's data, and the data types it contains can be used to recreate the object in memory
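The round trip described above can be sketched with the JDK's own object streams. The class and method names here are hypothetical, and the sketch works on an in-memory byte array rather than a file or Redis.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

/** Illustrative sketch: JDK serialization to bytes and back. */
final class SerializationSketch {
    /** Hypothetical value class; it must implement Serializable to be written. */
    static final class Person implements Serializable {
        private static final long serialVersionUID = 1L;
        final String name;
        final int age;

        Person(String name, int age) {
            this.name = name;
            this.age = age;
        }
    }

    /** Serialize: object -> byte sequence (data plus type information). */
    static byte[] toBytes(Serializable object) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(object);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    /** Deserialize: byte sequence -> a new object in memory. */
    static Object fromBytes(byte[] bytes) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

The byte sequence starts with the binary stream header 0xAC 0xED, which is not printable text. That is one reason keys and values written with JDK serialization look garbled when inspected in redis-cli.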

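When the class does not implement Serializable, convert the object to a JSON string before storing it:

    public class User {
        private String name;
        private int age;

        public User(String name, int age) {
            this.name = name;
            this.age = age;
        }

        // Getters let Jackson read the fields
        public String getName() { return name; }
        public int getAge() { return age; }
    }

    @Test
    void test() throws JsonProcessingException {
        User user = new User("rainhey", 3);
        // Serialize: convert to a JSON string before storing
        String s = new ObjectMapper().writeValueAsString(user);
        redisTemplate.opsForValue().set("user", s);
        System.out.println(redisTemplate.opsForValue().get("user"));
    }

To store the object directly, the class must implement Serializable:

    public class User implements Serializable {
        private String name;
        private int age;

        public User(String name, int age) {
            this.name = name;
            this.age = age;
        }
    }

    @Test
    void test() {
        User user = new User("rainhey", 3);
        // No manual JSON conversion here; the object itself is passed to the template
        redisTemplate.opsForValue().set("user", user);
        System.out.println(redisTemplate.opsForValue().get("user"));
    }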

Customizing RedisTemplate

    @Configuration
    public class RedisConfig {

        // Modeled on the source code: define our own redisTemplate to override the default one

        // Injecting through the method parameter reported an error, so inject with @Resource
        @Resource
        RedisConnectionFactory redisConnectionFactory;

        @Bean
        public RedisTemplate<String, Object> redisTemplate() {
            // For convenience in development, String keys are generally used
            RedisTemplate<String, Object> template = new RedisTemplate<>();
            template.setConnectionFactory(redisConnectionFactory);

            // Serialization settings: the RedisSerializer factory methods return the serializers

            // Keys and hash keys use String serialization
            template.setKeySerializer(RedisSerializer.string());
            template.setHashKeySerializer(RedisSerializer.string());

            // Values and hash values use Jackson serialization
            template.setValueSerializer(RedisSerializer.json());
            template.setHashValueSerializer(RedisSerializer.json());

            template.afterPropertiesSet();
            return template;
        }
    }

Custom Redis utility class

Having to call .opsForXxx on RedisTemplate every time slows development down, so the commonly used public APIs are extracted and wrapped in a utility class, and Redis is then operated indirectly through that class

Reference blogs for the utility class:

https://www.cnblogs.com/zeng1994/p/03303c805731afc9aa9c60dbbd32a323.html

https://www.cnblogs.com/zhzhlong/p/11434284.html

redis.conf

- The configuration file is case-insensitive

- Network

bind 127.0.0.1 -::1 // Bound IP addresses

protected-mode no // Whether protected mode is enabled

port 6379 // Port

- General

daemonize yes // Run as a daemon

pidfile /var/run/redis_6379.pid // When running in the background as a daemon, a PID file must be specified

# Logging

# Specify the server verbosity level.

# This can be one of:

# debug (a lot of information, useful for development/testing)

# verbose (many rarely useful info, but not a mess like the debug level)

# notice (moderately verbose, what you want in production probably) // For production environments

# warning (only very important / critical messages are logged)

loglevel notice // Log level

logfile "" // Log output file

databases 16 // Number of databases

always-show-logo no // Whether to show the logo at startup

- Snapshotting

Persistence: when a given number of operations occur within a given time, the data is persisted to a file (.rdb or .aof)

Redis is an in-memory database; without persistence, the data is lost

# save 3600 1 // Persist if at least 1 key changed within 3600 s

# save 300 100

# save 60 10000

stop-writes-on-bgsave-error yes // Whether to stop accepting writes after a persistence error

rdbcompression yes // Whether to compress the rdb file; this costs some CPU

rdbchecksum yes // Whether to verify the rdb file with a checksum when saving it

dir ./ // Directory where the rdb file is stored

- REPLICATION

replicaof <masterip> <masterport> // Configure the master's IP and port; equivalent to the slaveof command

masterauth <master-password> // Configure the master's password

- SECURITY

requirepass 123456 // Set the password

- CLIENTS

maxclients 10000 // Maximum number of clients

maxmemory <bytes> // Maximum memory Redis may use

maxmemory-policy noeviction // Policy applied once memory reaches the limit

Policies; set one with, for example: config set maxmemory-policy volatile-lru

1. volatile-lru: apply LRU only to keys that have an expiration set

2. allkeys-lru: evict keys using the LRU algorithm across all keys

3. volatile-random: randomly evict keys that have an expiration set

4. allkeys-random: randomly evict any key

5. volatile-ttl: evict the keys that are closest to expiring

6. noeviction: never evict; return an error instead (the default)
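To illustrate what an LRU policy such as allkeys-lru does, here is a small Java sketch built on LinkedHashMap in access order. It is an exact LRU over a fixed entry count, whereas Redis uses an approximated LRU bounded by maxmemory, so treat the class name and the maxEntries limit as assumptions for illustration only.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative sketch: evict the least recently used entry once the cache is full. */
final class LruCacheSketch<K, V> extends LinkedHashMap<K, V> {
    private static final long serialVersionUID = 1L;
    private final int maxEntries; // hypothetical stand-in for maxmemory

    LruCacheSketch(int maxEntries) {
        super(16, 0.75f, true); // true = iterate in access order, oldest access first
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        // Called after every put: drop the least recently used entry when over the limit
        return size() > maxEntries;
    }
}
```

With a limit of 2, putting k1 and k2, reading k1, and then putting k3 evicts k2, because k1 was used more recently.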

- APPEND ONLY MODE (AOF configuration)

appendonly no // Disabled by default; rdb persistence is used by default

appendfilename "appendonly.aof" // Name of the persistence file

# appendfsync always // Synchronize on every modification

appendfsync everysec // Synchronize once per second; up to one second of data may be lost

# appendfsync no // Do not synchronize explicitly

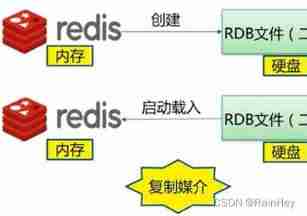

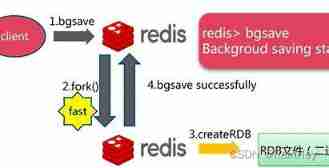

Persistence: RDB

RDB writes a snapshot of the in-memory data set to disk at specified intervals; on recovery, the snapshot file is read directly into memory

The file saved by RDB is dump.rdb; the name can be changed in the configuration file

How RDB works

- Redis calls fork, so there is both a parent and a child process

- The child process writes the data to a temporary RDB file

- When the child finishes writing the new RDB file, Redis replaces the original RDB file with the new one and deletes the old RDB file
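The write-to-a-temporary-file-then-replace pattern in the steps above can be sketched in Java as follows. The key=value line format, the class name, and the method names are assumptions made for this sketch; the real RDB file is a binary format, and Redis takes its snapshot through fork rather than by copying a map.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.Map;
import java.util.TreeMap;

/** Illustrative sketch: snapshot to a temporary file, then swap it into place. */
final class SnapshotSketch {
    /** Keys and values must not contain '=' or line breaks in this simplified format. */
    static void save(Map<String, String> data, Path target) {
        // Copy first so later writes do not change this snapshot
        Map<String, String> frozen = new TreeMap<>(data);
        StringBuilder body = new StringBuilder();
        frozen.forEach((key, value) -> body.append(key).append('=').append(value).append('\n'));
        try {
            Path directory = target.toAbsolutePath().getParent();
            Path temp = Files.createTempFile(directory, "temp-", ".rdb");
            Files.write(temp, body.toString().getBytes(StandardCharsets.UTF_8));
            // Only a fully written file ever replaces the previous snapshot
            Files.move(temp, target, StandardCopyOption.REPLACE_EXISTING);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Recovery: read the snapshot file straight back into memory. */
    static Map<String, String> load(Path source) {
        Map<String, String> data = new TreeMap<>();
        try {
            for (String line : Files.readAllLines(source, StandardCharsets.UTF_8)) {
                int split = line.indexOf('=');
                data.put(line.substring(0, split), line.substring(split + 1));
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return data;
    }
}
```

Writing to a temporary file first means a crash in the middle of a save leaves the previous snapshot intact.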

Trigger mechanisms

- When a save rule is satisfied, an RDB snapshot is triggered automatically

- Running the flushall command also triggers an RDB snapshot

- Shutting down Redis also generates an rdb file automatically

After any of these triggers, an rdb file is generated automatically

Recovery

Place the rdb file in the Redis startup directory; when Redis starts, it automatically checks for dump.rdb and restores the data from it

Advantages and disadvantages

Advantages:

- Suitable for large-scale data recovery

- Suitable when the requirements for data integrity are not strict

Disadvantages:

- Snapshots are taken at intervals, so if Redis goes down unexpectedly, the changes made since the last snapshot are lost

- Forking the process takes up a certain amount of memory

Persistence: AOF

AOF saves its data in the appendonly.aof file

It is disabled by default; you need to enable it yourself in the configuration file

How it works

Every executed write command is recorded (read commands are not recorded). On recovery, all commands in the file are executed again
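The record-then-replay idea can be sketched in Java with an in-memory command log. The class, its two write commands, and the list standing in for appendonly.aof are all assumptions for illustration; the real AOF stores commands in the Redis protocol format on disk.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Illustrative sketch: log every write command, then rebuild state by replaying the log. */
final class AofSketch {
    private final Map<String, String> data = new HashMap<>();
    private final List<String[]> log; // hypothetical stand-in for appendonly.aof

    AofSketch(List<String[]> log) {
        this.log = log;
    }

    void set(String key, String value) {
        log.add(new String[] {"set", key, value}); // writes are recorded
        data.put(key, value);
    }

    void del(String key) {
        log.add(new String[] {"del", key});
        data.remove(key);
    }

    String get(String key) {
        return data.get(key); // reads are not recorded
    }

    /** Recovery: execute every recorded command again, in order. */
    static AofSketch recover(List<String[]> savedLog) {
        AofSketch store = new AofSketch(new ArrayList<>());
        for (String[] command : savedLog) {
            if (command[0].equals("set")) {
                store.set(command[1], command[2]);
            } else if (command[0].equals("del")) {
                store.del(command[1]);
            }
        }
        return store;
    }
}
```

Because every write is kept, the log grows with the number of operations rather than with the size of the data.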

Repair

If the AOF file is corrupted, Redis cannot start normally and the aof file must be repaired. Redis provides the tool redis-check-aof --fix; once the aof file has been repaired, Redis can start normally

redis-check-aof --fix appendonly.aof // Repair the aof file

Advantages and disadvantages

Advantages

- Synchronizing on every change gives better file integrity

- Synchronizing once per second may lose up to one second of data

- Never synchronizing gives the highest efficiency

Disadvantages

- As data files, aof files are much larger than rdb files, and recovery is slower than with rdb

- aof also runs slower than rdb, so the default Redis configuration uses rdb persistence

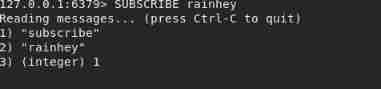

Redis Publish subscribe

| command | describe |

|---|---|

PSUBSCRIBE pattern [pattern..] | Subscribe to one or more channels that match the given pattern |

PUNSUBSCRIBE pattern [pattern..] | Unsubscribe from one or more channels that match the given mode |

PUBSUB subcommand [argument[argument]] | View subscription and publishing system status |

PUBLISH channel message | Publish a message to a designated channel |

SUBSCRIBE channel [channel..] | Subscribe to a given channel or channels |

UNSUBSCRIBE channel [channel..] | Unsubscribe from one or more channels |

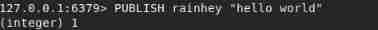

- Start a client subscription channel , Auto monitor

- Start a client to send messages

- The subscriber receives the message

principle

Every Redis The server processes all maintain a... That represents the state of the server redis.h/redisServer structure , Structural pubsub_channels Property is a dictionary , This dictionary is used to save subscription channel information , among , The key of the dictionary is the channel being subscribed , The value of a dictionary is a linked list , All clients subscribing to this channel are saved in the linked list

Client subscription , It is linked to the end of the linked list of the corresponding channel , Unsubscribe is to remove the client node from the list
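The dictionary-of-linked-lists structure described above can be sketched in Java as follows. This is an in-memory analogy, not the Redis C implementation; the Client class and the method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;

/** Illustrative sketch: channel name -> linked list of subscribed clients. */
final class PubSubSketch {
    /** Hypothetical client that simply collects the messages delivered to it. */
    static final class Client {
        final List<String> inbox = new ArrayList<>();
    }

    // Plays the role of the pubsub_channels dictionary
    private final Map<String, LinkedList<Client>> pubsubChannels = new HashMap<>();

    /** Subscribing appends the client to the end of the channel's list. */
    void subscribe(Client client, String channel) {
        LinkedList<Client> subscribers = pubsubChannels.computeIfAbsent(channel, name -> new LinkedList<>());
        if (!subscribers.contains(client)) {
            subscribers.addLast(client);
        }
    }

    /** Unsubscribing removes the client node from the list. */
    void unsubscribe(Client client, String channel) {
        LinkedList<Client> subscribers = pubsubChannels.get(channel);
        if (subscribers == null) {
            return;
        }
        subscribers.remove(client);
        if (subscribers.isEmpty()) {
            pubsubChannels.remove(channel);
        }
    }

    /** Publishing walks the channel's list; returns how many clients received the message. */
    int publish(String channel, String message) {
        LinkedList<Client> subscribers = pubsubChannels.get(channel);
        if (subscribers == null) {
            return 0;
        }
        for (Client client : subscribers) {
            client.inbox.add(message);
        }
        return subscribers.size();
    }
}
```

A message published to a channel with no subscribers is simply dropped in this sketch, since nothing stores it for later delivery.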

Applications

- Message subscriptions: official account subscriptions, Weibo follows, and so on

- Multi-user online chat rooms …

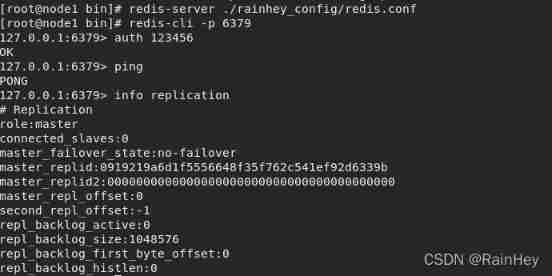

Redis colony

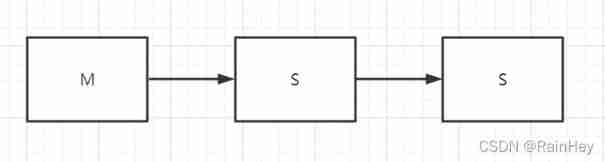

Master slave copy

Master slave copy , It means to put one Redis Server data , Copy to other Redis The server . The former is called the main node , The latter is called the slave node , Data replication is one-way , It can only be copied from the master node to the slave node

effect

- data redundancy : Master-slave replication realizes hot backup of data , It's a way of data redundancy other than persistence

- Fault recovery : When the primary node fails , The slave node can temporarily replace the master node to provide services , It's a way of service redundancy

- Load balancing : On the basis of master-slave replication , Cooperate with the separation of reading and writing , Write by the master node , Read from node , Share the load on the server ; Especially in the context of more reading and less writing , Load sharing through multiple slave nodes , Increase concurrency

- High availability cornerstone : Master slave replication is also the foundation for sentinels and clusters to implement

Environment configuration

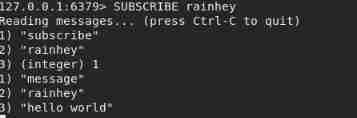

Only configure slave Libraries , Do not configure the master database , because Redis By default, you are the main database

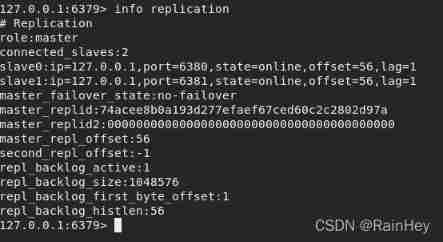

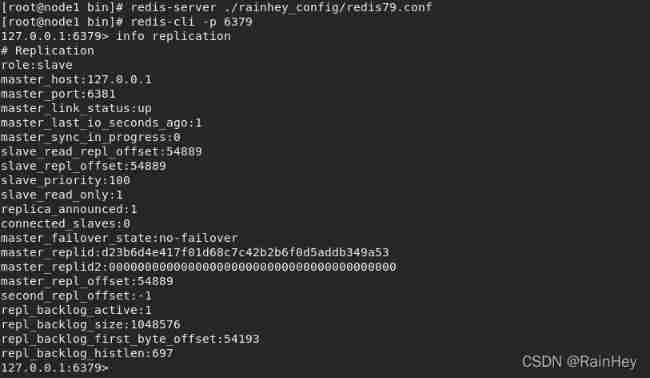

View the replication information of the current Redis instance

info replication

- Copy redis.conf and name the copies redis79.conf, redis80.conf, and redis81.conf

Modify the configuration files

redis79.conf:

logfile "6379.log"

dbfilename dump6379.rdb

redis80.conf:

port 6380

pidfile /var/run/redis_6380.pid

logfile "6380.log"

dbfilename dump6380.rdb

redis81.conf:

port 6381

pidfile /var/run/redis_6381.pid

logfile "6381.log"

dbfilename dump6381.rdb

- Start the 3 Redis services and build one master with two slaves; only the slaves need to be configured

Slave 6380:

slaveof 127.0.0.1 6379

Slave 6381:

slaveof 127.0.0.1 6379

Check replication on the master

A real master-slave setup is configured in the configuration file; configuring it with commands, as done here, is only temporary

Master-slave settings in the configuration file

replicaof <masterip> <masterport> // Configure the master's IP and port; equivalent to the slaveof command

masterauth <master-password> // Configure the master's password

Details

Slaves can only read, not write. The master can read and write but is mostly used for writes. All data on the master is automatically saved by the slaves

When the master goes down, the slaves' roles do not change by default; the cluster simply loses the ability to write. When the master recovers, the slaves reconnect to it and the original state is restored

When a slave goes down: if replication was configured from the command line rather than in the slave's configuration file, the slave comes back up as a master and cannot obtain the data of the master it was previously attached to. Once it is configured again as a slave of that master, it obtains all of the master's data

Whenever a slave connects to the master again, a full replication is performed automatically once; after that, new data on the master is sent to the slave by incremental replication

Chained model

In the same way, only the master node can write

Command to turn a slave into a master

SLAVEOF no one

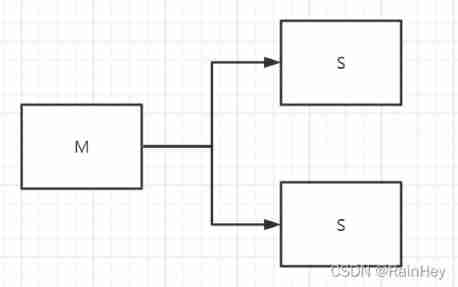

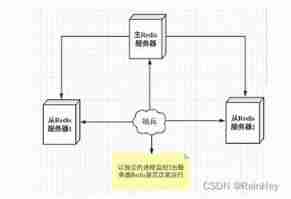

Sentinel mode

When the primary server goes down , You need to manually switch one from the server to the primary server , This requires human intervention , Laborious and arduous , It will also make the service unavailable for a period of time

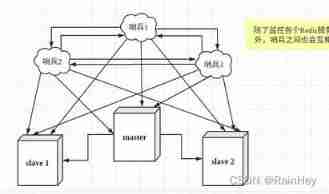

Sentinel mode : Automatically monitor whether the host fails , When the host fails , Automatically switch the slave to the host according to the number of votes , Sentinels are an independent process , Through to the Redis The server sends a command and waits for a response , So as to monitor multiple running Redis example

A sentinel is right Redis There may be problems when the server is monitored , We can use multiple sentinels to monitor , The Sentinels will also monitor , In this way, the multi sentry mode is formed

Suppose the primary server goes down , sentry 1 First detect the result , The system doesn't work right away failover The process , Just sentinels 1 Subjectively, the primary server is not available , This phenomenon is called Subjective offline , When the sentinel behind also detects that the main server is unavailable and the number reaches a certain value , There will be a vote between the Sentinels , The vote was initiated by a sentinel , Conduct failover Fail over operations , Publish through subscription mode , Let each sentinel switch his monitoring from the server to the host , This process is called Objective offline
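The difference between subjectively down and objectively down can be sketched in Java as a simple quorum check. The sentinel ids, the reply-age map, and the class name are assumptions for illustration; real sentinels exchange this information over the network and then elect a leader before failing over.

```java
import java.util.Map;

/** Illustrative sketch: classify the master from one sentinel's point of view. */
final class SentinelQuorumSketch {
    enum MasterState { UP, SUBJECTIVELY_DOWN, OBJECTIVELY_DOWN }

    /**
     * @param self               id of the sentinel doing the evaluation (hypothetical)
     * @param lastReplyAgeMillis per sentinel: milliseconds since the master last replied to it
     * @param downAfterMillis    like down-after-milliseconds in sentinel.conf
     * @param quorum             like the last argument of sentinel monitor
     */
    static MasterState evaluate(String self, Map<String, Long> lastReplyAgeMillis,
                                long downAfterMillis, int quorum) {
        boolean selfThinksDown = lastReplyAgeMillis.getOrDefault(self, 0L) > downAfterMillis;
        if (!selfThinksDown) {
            return MasterState.UP;
        }
        // Count every sentinel (including this one) that has not heard from the master in time
        long reportingDown = lastReplyAgeMillis.values().stream()
                .filter(age -> age > downAfterMillis)
                .count();
        return reportingDown >= quorum ? MasterState.OBJECTIVELY_DOWN : MasterState.SUBJECTIVELY_DOWN;
    }
}
```

With a quorum of 1, a single sentinel's subjective view is already enough to treat the master as objectively down.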

test

First set up a master-slave mode

- Write sentry configuration

vim sentinel.conf

sentinel monitor myredis 127.0.0.1 6379 1

// myredis The monitoring name taken by yourself

// monitor ip And port

// 1 When a sentinel subjectively thinks that the host is disconnected , It can be objectively considered that the host is faulty , Then start electing a new host

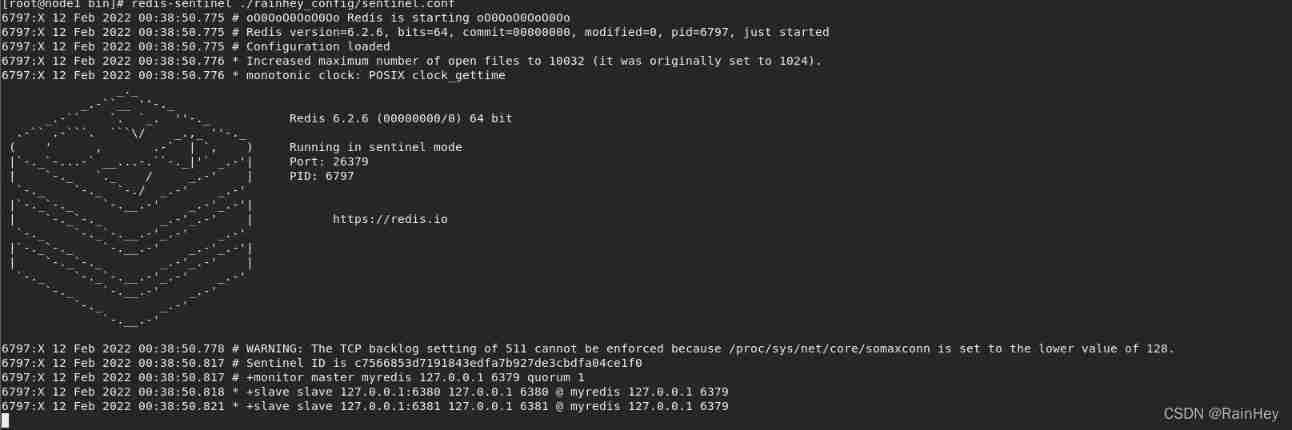

- Open the sentry

redis-sentinel ./rainhey_config/sentinel.conf

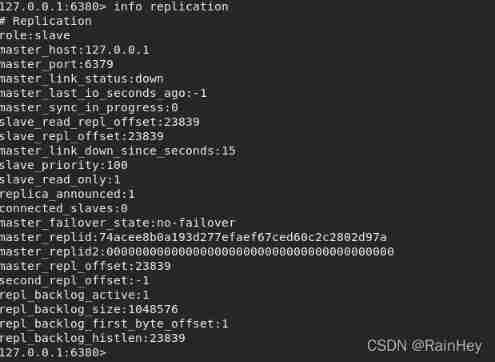

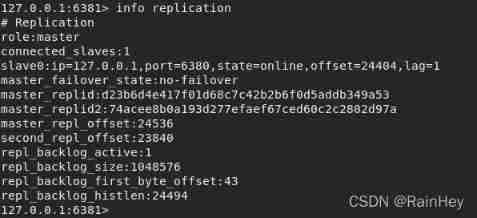

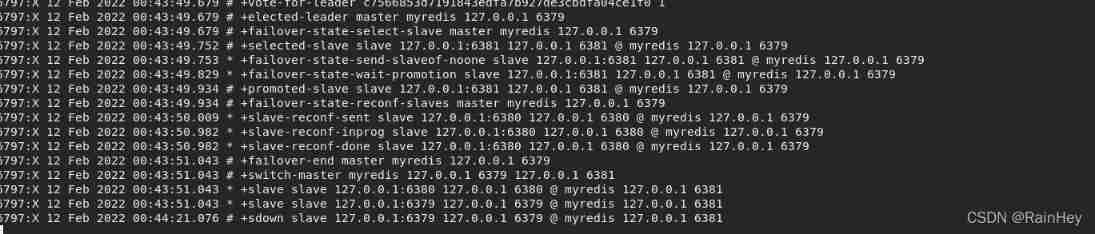

3. Turn off the host 6379

4. Observe 6380 and 6381, You can find 81 It has automatically become a host ,80 Become its slave

5. Sentinel log

After the main engine is disconnected , At this time, a server will be selected from the slave as the host ( Voting algorithm )

If you connect back to the previous host at this time, you can find , The previous master has become a slave

Advantages and disadvantages

Advantages:

- A sentinel cluster is built on master-slave replication, so it has all the advantages of master-slave replication

- Master and slave can be switched and failures can be transferred, so the system is more available

- Sentinel mode is an upgrade of master-slave mode: switching goes from manual to automatic, which is more robust

Disadvantages:

- Redis is hard to scale online; once the cluster capacity reaches its limit, online expansion is very troublesome

- Configuring sentinel mode is quite troublesome, because there are many configuration items

Full configuration of sentinel mode

# Example sentinel.conf

# Port on which the sentinel instance runs (default 26379)

port 26379

# Working directory of the sentinel

dir /tmp

# IP and port of the Redis master monitored by the sentinel

# master-name: a name you choose for the master, made up only of letters A-z, digits 0-9 and the three characters ".-_".

# quorum: when this many sentinels consider the master unreachable, the master is objectively considered down

# sentinel monitor <master-name> <ip> <redis-port> <quorum>

sentinel monitor mymaster 127.0.0.1 6379 1

# When requirepass (for example, requirepass foobared) is enabled on a Redis instance, every client connecting to that instance must provide the password

# Sets the password the sentinel uses to connect to the master and slaves. Note that the master and its slaves must be configured with the same password

# sentinel auth-pass <master-name> <password>

sentinel auth-pass mymaster MySUPER--secret-0123passw0rd

# Number of milliseconds after which, if the master has not answered the sentinel, the sentinel subjectively considers the master offline. Default: 30 seconds

# sentinel down-after-milliseconds <master-name> <milliseconds>

sentinel down-after-milliseconds mymaster 30000

# Specifies the maximum number of slaves that may sync with the new master at the same time during a failover.

# The smaller the number, the longer the failover takes to complete,

# but the larger the number, the more slaves are unavailable because of replication.

# Setting this value to 1 ensures that only one slave at a time is unable to process command requests.

# sentinel parallel-syncs <master-name> <numslaves>

sentinel parallel-syncs mymaster 1

# The failover timeout (failover-timeout) is used in the following ways:

# 1. The interval between two failovers of the same master by the same sentinel.

# 2. The time counted from the moment a slave is found to be syncing from the wrong master until it is corrected to sync from the right master.

# 3. The time needed to cancel a failover that is already in progress.

# 4. The maximum time allowed, during a failover, for all slaves to be reconfigured to point to the new master. Even after this timeout the slaves will still be reconfigured to point to the master, but no longer according to the parallel-syncs rule.

# Default: three minutes

# sentinel failover-timeout <master-name> <milliseconds>

sentinel failover-timeout mymaster 180000

# SCRIPTS EXECUTION

# Configures scripts to run when certain events occur, so that the administrator can be notified by a script, for example by email when the system is not running normally.

# The following rules apply to the result of a script:

# If the script returns 1, it is executed again later; the number of retries currently defaults to 10.

# If the script returns 2 or a higher value, it is not retried.

# If the script is terminated by a system signal during execution, the behavior is the same as for a return value of 1.

# The maximum execution time of a script is 60 s. If it runs longer, it is terminated with a SIGKILL signal and then executed again.

# Notification script: called when any warning-level event occurs on the sentinel (for example, a Redis instance being subjectively or objectively down).

# The script should then notify the system administrator by email, SMS, or similar that the system is not running normally. Two arguments are passed to the script when it is called:

# the first is the event type,

# the second is the event description.

# If a script path is configured in sentinel.conf, the script must exist at that path and be executable; otherwise the sentinel fails to start.

# Notification script

# sentinel notification-script <master-name> <script-path>

sentinel notification-script mymaster /var/redis/notify.sh

# Client reconfiguration script for the master

# Called when the master changes because of a failover, to notify the relevant clients that the master address has changed.

# The following arguments are passed to the script when it is called:

# <master-name> <role> <state> <from-ip> <from-port> <to-ip> <to-port>

# <state> is currently always "failover",

# <role> is either "leader" or "observer".

# The arguments from-ip, from-port, to-ip and to-port give the address of the old master and of the new master (the former slave).

# This script should be generic and safe to call multiple times.

# sentinel client-reconfig-script <master-name> <script-path>

sentinel client-reconfig-script mymaster /var/redis/reconfig.sh
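As an illustration of the notification-script rules in the configuration comments above, here is a minimal sketch of such a script in Python. The configuration in these notes points at a shell script, so the Python form, the function names, and the sample event values are assumptions; only the two arguments (event type, event description) and the meaning of return values 1 and 2 are taken from the comments.

```python
def build_message(event_type: str, description: str) -> str:
    # Sentinel passes two arguments: the event type and the event description.
    return f"[sentinel] {event_type}: {description}"


def main(argv) -> int:
    """Return the exit code that the script would report back to Sentinel."""
    if len(argv) != 3:
        # Wrong usage will not fix itself, so return 2 (the script is not retried).
        return 2
    message = build_message(argv[1], argv[2])
    try:
        # Placeholder delivery: a real script would send an email or SMS here.
        print(message)
    except OSError:
        # Delivery failed, possibly temporarily: return 1 so the script is retried.
        return 1
    return 0


# Example call with illustrative values for a subjectively-down event.
exit_code = main(["notify.py", "+sdown", "master mymaster 127.0.0.1 6379"])
```

A deployable version would end by passing `main(sys.argv)` to `sys.exit`, and the file would need to be executable, since the sentinel refuses to start otherwise.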

Cache penetration and avalanche

Cache penetration

Concept

A user queries some data and Redis, the in-memory database, does not have it (a cache miss), so the request is sent on to the persistence-layer database. When many such requests miss the cache and all go to the persistence-layer database, they put great pressure on it. This is cache penetration.

Solution

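Bloom filter

A Bloom filter is a probabilistic data structure that records which keys may exist. Requests for keys it reports as definitely absent are rejected before they reach the cache or the database.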
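A minimal sketch of the idea follows, assuming an in-memory filter placed in front of a dictionary cache and a dictionary standing in for the database. The class, the sizes, and the sample keys are all hypothetical; a production setup would more likely use an existing Bloom filter implementation.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter: no false negatives, occasional false positives."""

    def __init__(self, size_bits: int = 1024, num_hashes: int = 3):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits)   # one byte per bit, for readability

    def _positions(self, key: str):
        # Derive num_hashes positions by salting a SHA-256 digest with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key: str) -> bool:
        return all(self.bits[pos] for pos in self._positions(key))


# Register every key that really exists in the database (sample ids).
known_ids = BloomFilter()
for user_id in ("user:1", "user:2", "user:3"):
    known_ids.add(user_id)


def lookup(key: str, cache: dict, database: dict):
    if not known_ids.might_contain(key):
        return None                  # rejected before touching cache or database
    if key in cache:
        return cache[key]
    value = database.get(key)
    if value is not None:
        cache[key] = value
    return value
```

A false positive only means that an occasional request for a missing key still falls through to the database, so correctness is not affected.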

Caching empty objects

If a request finds nothing in either the cache or the database, store an empty object for that key in the cache so that subsequent requests for it are served from the cache.
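Caching empty objects can be sketched as follows, again with dictionaries standing in for Redis and the database. The class name, the `NULL_MARKER` object, and the 60-second lifetime for cached misses are assumptions; the short lifetime is a common precaution so that a key created later does not stay hidden behind a stale empty entry.

```python
import time

NULL_MARKER = object()       # special value meaning "known to be absent"
NULL_TTL_SECONDS = 60        # assumed short lifetime for cached misses


class NullCachingReader:
    def __init__(self, database: dict, clock=time.monotonic):
        self.database = database
        self.clock = clock
        self.cache = {}          # key -> (value, expires_at)
        self.db_queries = 0

    def get(self, key):
        entry = self.cache.get(key)
        if entry is not None and entry[1] > self.clock():
            value = entry[0]
            return None if value is NULL_MARKER else value
        # Cache miss (or expired entry): fall through to the database.
        self.db_queries += 1
        value = self.database.get(key)
        if value is None:
            # Cache the miss itself so repeated lookups stop reaching the database.
            self.cache[key] = (NULL_MARKER, self.clock() + NULL_TTL_SECONDS)
            return None
        self.cache[key] = (value, float("inf"))
        return value


reader = NullCachingReader({"user:1": "Alice"})
for _ in range(1000):
    reader.get("user:404")      # a key that exists nowhere
queries_for_missing_key = reader.db_queries
```

Here the thousand lookups for the missing key reach the database only once; the cost is extra cache space for keys that hold no data.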

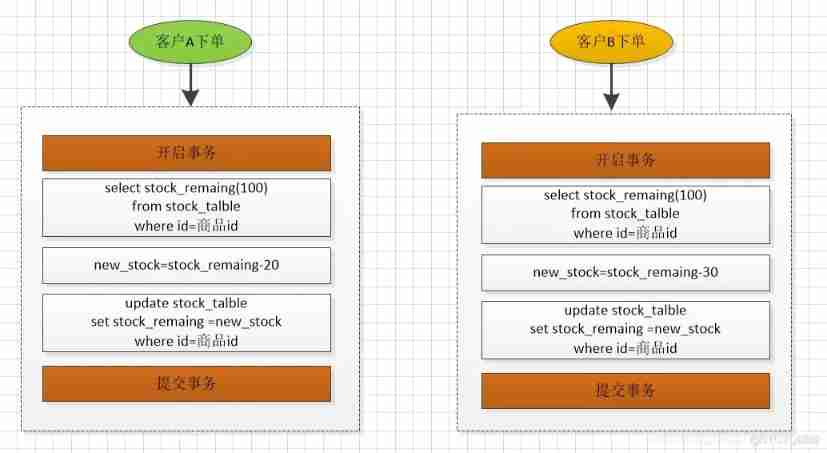

Cache breakdown (heavy traffic, and the cached data expires)

Cache breakdown concerns a hot key in the cache that receives heavily concurrent access. The moment this key expires, the sustained concurrent requests go straight to the persistence-layer database and put too much pressure on it.

Solution

- Set hot data to never expire

- Add a mutex lock (distributed lock)

Use a distributed lock so that, for each key, only one thread at a time queries the back-end service; threads that do not obtain the lock must wait. This shifts the pressure of high concurrency onto the distributed lock.
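The locking pattern can be sketched inside a single process, with `threading.Lock` standing in for the distributed lock. This is an assumption made to keep the example runnable; across several application servers the lock would have to live in a shared system such as Redis itself. All names here are hypothetical.

```python
import threading
from collections import defaultdict

cache = {}
db_queries = 0
key_locks = defaultdict(threading.Lock)   # stand-in for one distributed lock per key
registry_lock = threading.Lock()          # protects key_locks itself


def slow_database_query(key):
    global db_queries
    db_queries += 1                       # only ever called while holding the key lock
    return f"value-of-{key}"


def get_with_mutex(key):
    value = cache.get(key)
    if value is not None:
        return value
    with registry_lock:
        lock = key_locks[key]
    with lock:
        # Re-check: another thread may have rebuilt the entry while we waited.
        value = cache.get(key)
        if value is None:
            value = slow_database_query(key)
            cache[key] = value
        return value


# Fifty threads ask for the same expired hot key at once.
threads = [threading.Thread(target=get_with_mutex, args=("hot:key",)) for _ in range(50)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

The second check inside the lock is what keeps the waiting threads from repeating the database query once the first thread has refilled the cache.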

Cache avalanche

At some point in time, a large set of cached keys expires together, or Redis goes down.

Solution

- Redis high availability: build a Redis cluster

- Rate limiting and degradation: after the cache fails, limit the number of threads that read the database and write the cache by locking or queuing. For example, allow only one thread per key to query the data and write the cache while the other threads wait

- Data preheating: access the data that is likely to be requested in advance, so that data likely to see heavy traffic is already loaded into the cache. Before heavy concurrent access occurs, manually trigger loading of the cache keys and set different expiration times so that cache expirations are spread as evenly as possible
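Setting different expiration times during preheating can be sketched by adding a random offset to a base lifetime. The function names, the one-hour base, and the ten-minute spread below are assumptions chosen only for illustration.

```python
import random


def jittered_ttl(base_seconds: int, spread_seconds: int, rng: random.Random) -> int:
    # Add a random offset so keys loaded together do not expire together.
    return base_seconds + rng.randint(0, spread_seconds)


def preheat(keys, load_value, base_seconds=3600, spread_seconds=600, seed=None):
    """Build (key, value, ttl) entries to load into the cache ahead of traffic."""
    rng = random.Random(seed)
    return [(key, load_value(key), jittered_ttl(base_seconds, spread_seconds, rng))
            for key in keys]


hot_keys = [f"product:{i}" for i in range(100)]
entries = preheat(hot_keys, load_value=lambda key: f"data-for-{key}", seed=42)
ttls = [ttl for _, _, ttl in entries]
```

Each entry would then be written to Redis with its own lifetime, for example with `SET key value EX ttl`, so the keys expire gradually instead of all at the same moment.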

边栏推荐

- The most detailed and comprehensive update content and all functions of guitar pro 8.0

- How to estimate the population with samples? (mean, variance, standard deviation)

- What should the project manager do if there is something wrong with team collaboration?

- Postman管理测试用例

- 比尔·盖茨晒18岁个人简历,48年前期望年薪1.2万美元

- 也算是学习中的小总结

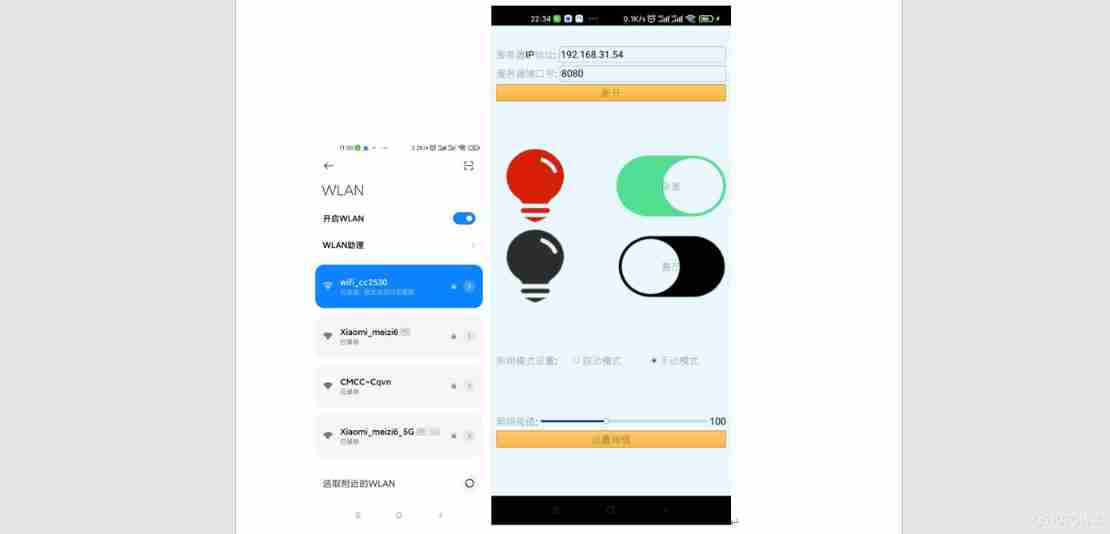

- 11. Intranet penetration and automatic refresh

- Introduction of several RS485 isolated communication schemes

- Complete list of common functions of turtle module

- flink sql 能同时读多个topic吗。with里怎么写

猜你喜欢

Yyds dry inventory automatic lighting system based on CC2530 (ZigBee)

满足多元需求:捷码打造3大一站式开发套餐,助力高效开发

11. Intranet penetration and automatic refresh

Jd.com 2: how to prevent oversold in the deduction process of commodity inventory?

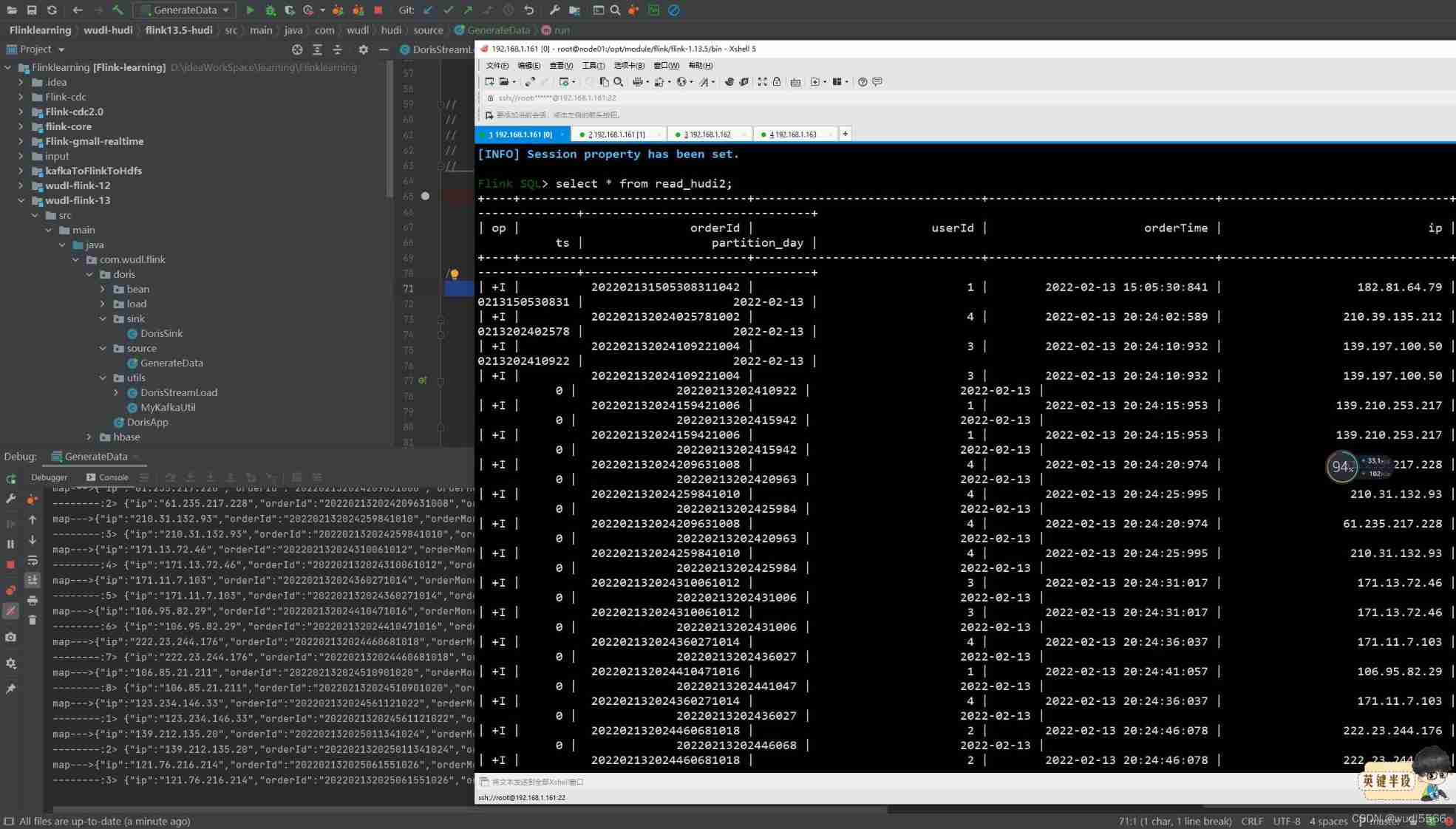

Flink kakfa data read and write to Hudi

JVM garbage collector concept

SQL injection vulnerability (MSSQL injection)

Unity screen coordinates ugui coordinates world coordinates conversion between three coordinate systems

Introduction to hashtable

Sorting out the latest Android interview points in 2022 to help you easily win the offer - attached is the summary of Android intermediate and advanced interview questions in 2022

随机推荐

Sqlserver query results are not displayed in tabular form. How to modify them

[network] channel attention network and spatial attention network

The implementation of the maize negotiable digital warehouse receipt standard will speed up the asset digitization process of the industry

[Chongqing Guangdong education] engineering fluid mechanics reference materials of southwestjiaotonguniversity

Case of Jiecode empowerment: professional training, technical support, and multiple measures to promote graduates to build smart campus completion system

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

Scala function advanced

2327. Number of people who know secrets (recursive)

饼干(考试版)

Lombok principle and the pit of ⽤ @data and @builder at the same time

Database - MySQL storage engine (deadlock)

Dynamic programming (tree DP)

How does vs change the project type?

Fuzzy -- basic application method of AFL

Selection sort

Unity screen coordinates ugui coordinates world coordinates conversion between three coordinate systems

What should the project manager do if there is something wrong with team collaboration?

Project manager, can you draw prototypes? Does the project manager need to do product design?

Recommendation | recommendation of 9 psychotherapy books

RTP gb28181 document testing tool