
python_scrapy_Fang Tianxia

2020-11-08 08:04:00 【osc_x4ot1joy】

Scrapy: explanation

The common XPath expressions for selecting nodes are as follows.

| Expression | Description |
|---|---|
| extract | Scrapy selector method (not XPath syntax): returns the extracted content as Unicode strings; called on a selector list, it returns a list |
| / | Selects from the root node |
| // | Selects matching nodes anywhere in the document, regardless of their position |
| . | The current node |
| @ | Selects an attribute |
| * | Matches any element node |
| @* | Matches any attribute node |
| node() | Matches a node of any type |
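To make the table concrete, here is a small sketch that can be run without Scrapy. It uses Python's standard-library xml.etree.ElementTree, which only supports a subset of XPath (for example, no text() step), so treat it as an illustration of the path syntax rather than of Scrapy's selector API. The markup in SAMPLE is made up for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup, loosely modelled on a pagination bar.
SAMPLE = """
<html>
  <body>
    <ul>
      <li><a class="active" href="/p1">1</a></li>
      <li><a class="last" href="/p9">9</a></li>
    </ul>
  </body>
</html>
"""

root = ET.fromstring(SAMPLE)

# "/" steps from a node to its child: <body> is a direct child of the root <html>.
body = root.find("./body")

# "//" matches descendants at any depth; the leading "." anchors the search at this node.
links = root.findall(".//a")

# "[@class='active']" filters elements by attribute value.
active = root.find(".//a[@class='active']")

# "*" matches any element: here, every direct child of <ul>.
children = root.findall(".//ul/*")

link_texts = [a.text for a in links]
active_href = active.get("href")
child_tags = [c.tag for c in children]
print(link_texts, active_href, child_tags)
```

In Scrapy the same paths are passed to response.xpath(), which accepts full XPath 1.0, including text() and @attribute steps.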

Crawling Fang Tianxia: preparation

Analysis

1. Target URL: https://sh.newhouse.fang.com/house/s/

2. Decide which data to crawl: 1) page URL: page; 2) property name: name; 3) price: price; 4) address: address; 5) phone number: tel.

3. Analyze the web page.

After opening the URL, we can see the data we need, and we can also see that the results are paginated.

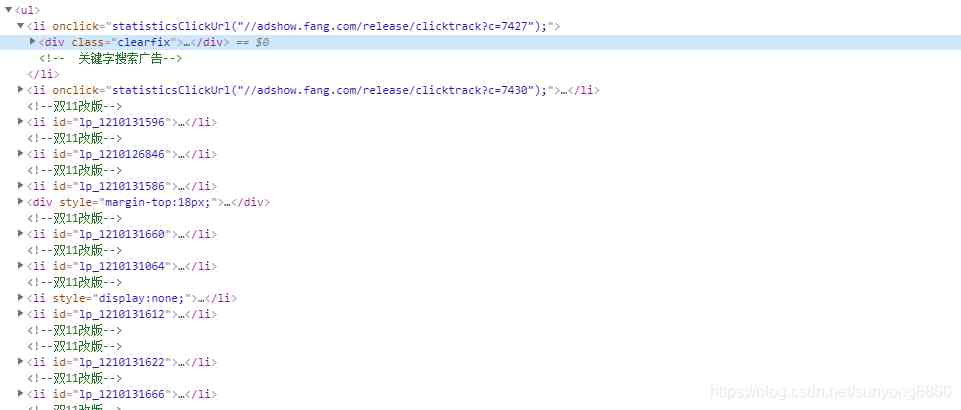

Inspecting the page elements after opening the URL shows that all the data we need sits inside a single ul tag.

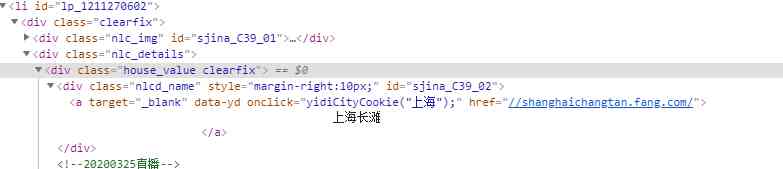

Expanding one of the li tags shows that the name we need is inside an a tag. The text is surrounded by stray whitespace such as spaces and line breaks, which needs special handling.
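The whitespace handling can be sketched in plain Python. The helper name clean_text and the sample strings are made up for illustration. Unlike chained replace() calls that delete every space, this version keeps a single space between words, which matters only if a name contains interior spaces.

```python
def clean_text(raw: str) -> str:
    """Collapse each run of whitespace (spaces, tabs, newlines) to one space and trim both ends."""
    return " ".join(raw.split())


# Hypothetical raw values, shaped like what a text() extraction might return.
raw_names = ["\n\t\t  Example Garden \r\n", "\n  Riverside   Court\t"]
names = [clean_text(n) for n in raw_names]
print(names)
```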

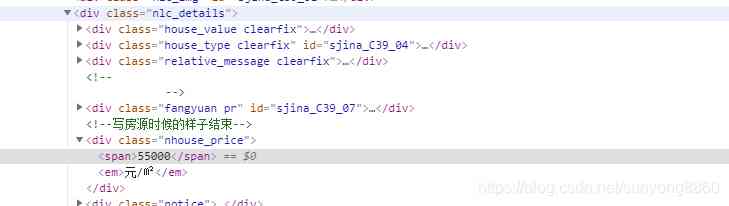

The price we need is inside a span tag (for example, 55000). Be careful: some properties are sold out and show no price at all, so watch out for this pitfall.
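One way to guard against the missing-price pitfall is to treat the extracted list defensively. This is only a sketch: the function names are hypothetical, and it assumes a price, when present, is a plain run of digits like 55000.

```python
from typing import List, Optional


def first_or_none(values: List[str]) -> Optional[str]:
    """Return the first non-empty value after stripping, or None if nothing usable was extracted."""
    for value in values:
        stripped = value.strip()
        if stripped:
            return stripped
    return None


def parse_price(values: List[str]) -> Optional[int]:
    """Convert an extracted price list to an int, or None when the listing shows no numeric price."""
    text = first_or_none(values)
    if text is None or not text.isdecimal():
        return None
    return int(text)


with_price = parse_price(["55000"])
without_price = parse_price([])
print(with_price, without_price)
```

Returning None instead of raising keeps one sold-out listing from aborting the whole page.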

The address and tel can be located in the same way.

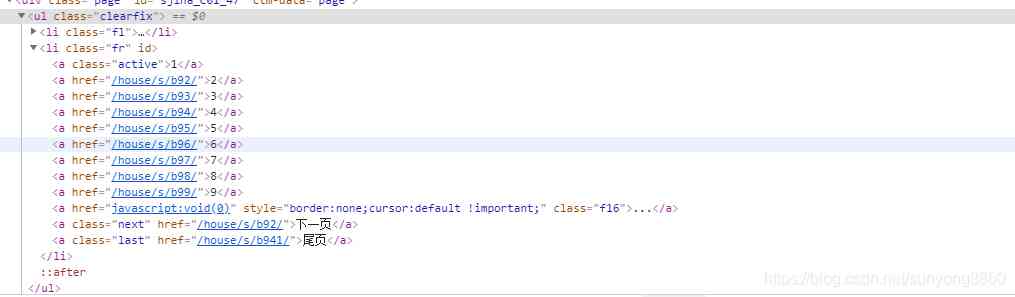

The pagination markup shows that the a tag of the current page has class="active". On the first page, the text of that a tag is 1, meaning page 1.
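Given the current page number, the next page's URL can be built as in the sketch below. The URL pattern is the one used by the spider code in this article. The function name and the is_last_page flag are hypothetical, and int() is used for the conversion because it only accepts numbers, whereas eval() would execute whatever text the page returned.

```python
from typing import Optional

# URL pattern taken from the spider code in this article.
PAGE_URL = "https://sh.newhouse.fang.com/house/s/b9{page}/?ctm=1.sh.xf_search.page.2"


def next_page_url(active_text: str, is_last_page: bool) -> Optional[str]:
    """Build the next page's URL from the text of the a tag with class="active"."""
    if is_last_page:
        return None
    current = int(active_text.strip())
    return PAGE_URL.format(page=current + 1)


second_page = next_page_url("1", is_last_page=False)
print(second_page)
```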

Crawling Fang Tianxia: implementation

First, create a new Scrapy project

1) Switch to the project folder and run scrapy startproject hotel in the terminal. hotel is the project name used in this demo; you can choose any name you like.

2) Define the fields in items.py as needed. The analysis calls for five fields: page, name, price, address, tel. The code is as follows:

    import scrapy


    class HotelItem(scrapy.Item):
        # Field names must match the keys the spider assigns
        page = scrapy.Field()
        name = scrapy.Field()
        price = scrapy.Field()
        address = scrapy.Field()
        tel = scrapy.Field()

3) Create the spider. Switch to the spiders folder and run scrapy genspider house sh.newhouse.fang.com in the terminal. house is the spider name, which you can customize; sh.newhouse.fang.com is the domain the crawl is restricted to.

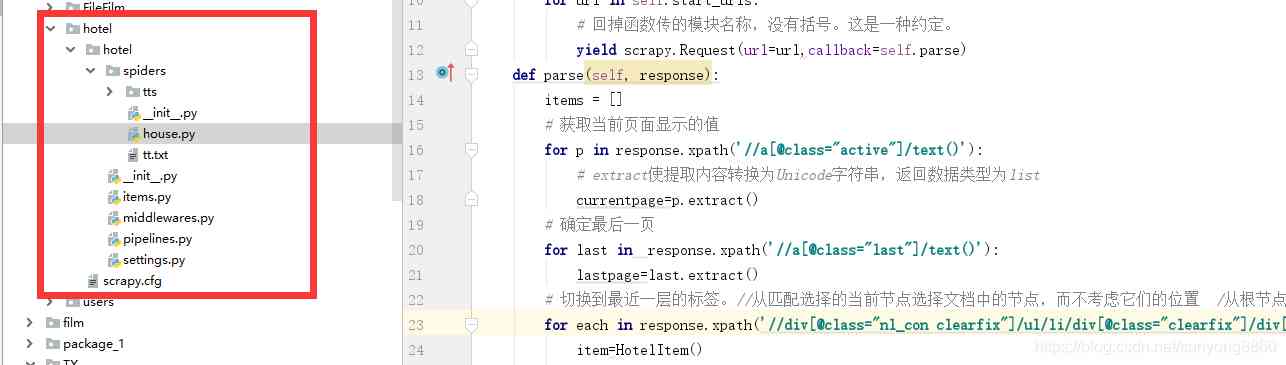

This creates the file house.py in the spiders folder.

The code and its explanation are as follows:

    import scrapy

    from ..items import HotelItem


    class HouseSpider(scrapy.Spider):
        name = 'house'
        # Restrict the crawl to this domain
        allowed_domains = ['sh.newhouse.fang.com']
        # First page to crawl
        start_urls = ['https://sh.newhouse.fang.com/house/s/']

        def start_requests(self):
            for url in self.start_urls:
                # Pass the callback method itself, without parentheses
                yield scrapy.Request(url=url, callback=self.parse)

        def parse(self, response):
            # Page number shown on the current page's pagination link
            currentpage = '1'
            for p in response.xpath('//a[@class="active"]/text()'):
                # On a single selector, extract() returns the text as a string;
                # on a selector list it returns a list of strings
                currentpage = p.extract()

            # Text of the link with class "last", used to detect the last page
            lastpage = ''
            for last in response.xpath('//a[@class="last"]/text()'):
                lastpage = last.extract()

            # int() instead of eval(): only a number is accepted
            next_url = ('https://sh.newhouse.fang.com/house/s/b9'
                        + str(int(currentpage) + 1)
                        + '/?ctm=1.sh.xf_search.page.2')

            # Move to the container of each listing. "//" selects matching nodes
            # anywhere in the document; "/" selects from the root node.
            for each in response.xpath('//div[@class="nl_con clearfix"]/ul/li/div[@class="clearfix"]/div[@class="nlc_details"]'):
                item = HotelItem()
                # Paths start with "." so they are evaluated relative to this
                # listing instead of the whole page
                # Name
                name = each.xpath('.//div[@class="house_value clearfix"]/div[@class="nlcd_name"]/a/text()').extract()
                # Price (the list is empty when the listing shows no price)
                price = each.xpath('.//div[@class="nhouse_price"]/span/text()').extract()
                # Address
                address = each.xpath('.//div[@class="relative_message clearfix"]/div[@class="address"]/a/@title').extract()
                # Telephone
                tel = each.xpath('.//div[@class="relative_message clearfix"]/div[@class="tel"]/p/text()').extract()

                # The keys must match the fields defined in items.py
                item['name'] = [''.join(n.split()) for n in name]
                item['price'] = price
                item['address'] = address
                item['tel'] = tel
                item['page'] = [next_url]
                print(item)
                yield item

            # On the last page, the link with class "last" reads "首页" (home page)
            if lastpage and lastpage.strip() != '首页':
                # Not the last page: keep crawling the next page until all data is collected
                yield scrapy.Request(url=next_url, callback=self.parse)

4) Run the spider from the spiders folder by entering scrapy crawl house in the terminal.

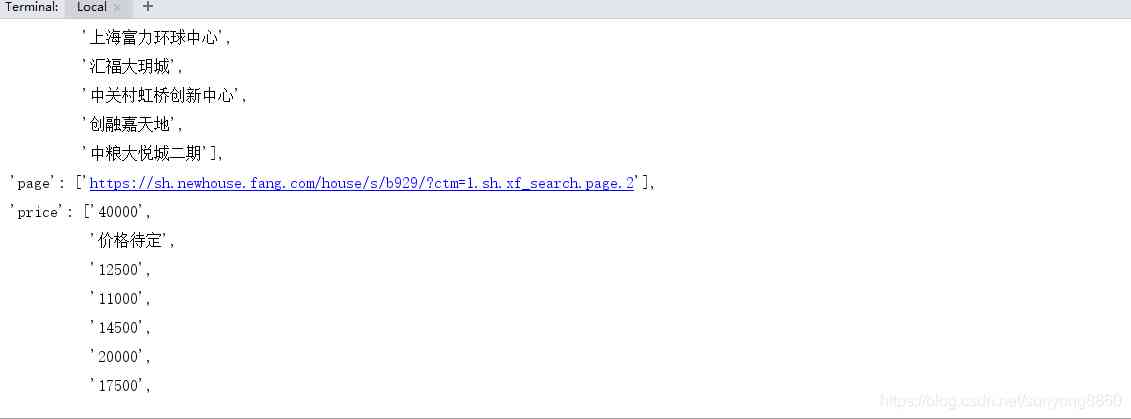

The results are shown in the following figure

The overall project structure is shown on the right. The tts folder is where I store data as txt files; this project does not need it.

If you find any errors, please contact me on WeChat: sunyong8860

python Crawling along the road

Copyright notice

This article was written by [osc_x4ot1joy]. Please include a link to the original when reposting. Thank you.

边栏推荐

- ulab 1.0.0发布

- 技术人员该如何接手一个复杂的系统?

- WPF personal summary on drawing

- Adobe Prelude / PL 2020 software installation package (with installation tutorial)

- 洞察——风格注意力网络(SANet)在任意风格迁移中的应用

- 2020天翼智能生态博览会中国电信宣布5G SA正式规模商用

- Macquarie Bank drives digital transformation with datastex enterprise (DSE)

- Tail delivery

- 鼠标变小手

- 微信昵称emoji表情,特殊表情导致列表不显示,导出EXCEL报错等问题解决!

猜你喜欢

Macquarie Bank drives digital transformation with datastex enterprise (DSE)

Distributed consensus mechanism

Judging whether paths intersect or not by leetcode

【原创】关于高版本poi autoSizeColumn方法异常的情况

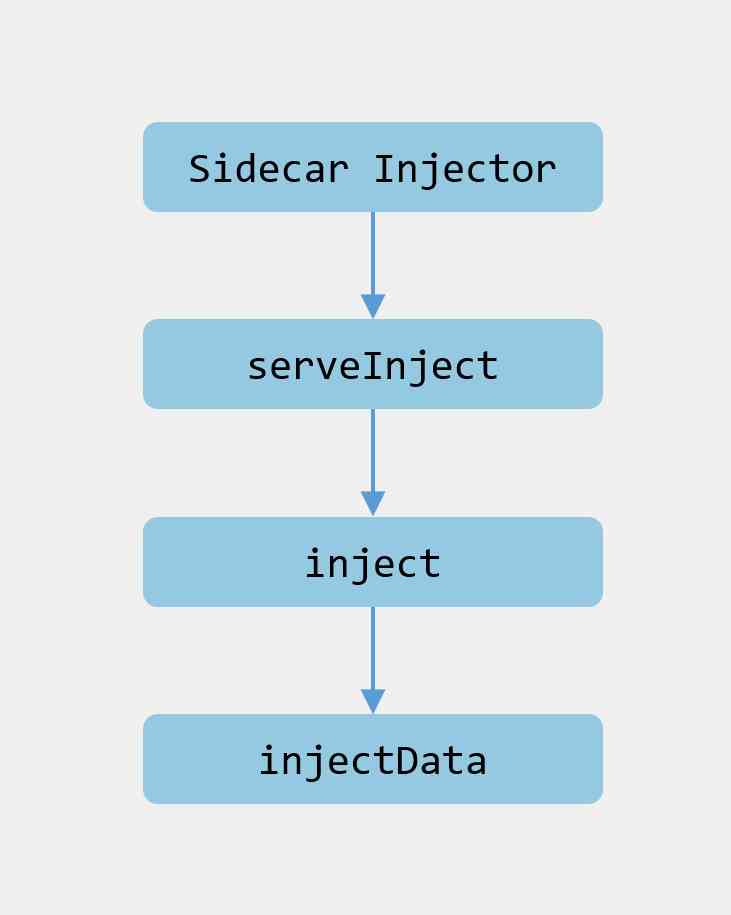

1. In depth istio: how is sidecar auto injection realized?

ts流中的pcr与pts计算与逆运算

Astra: the future of Apache Cassandra is cloud native

c# 表达式树(一)

2020-11-07:已知一个正整数数组,两个数相加等于N并且一定存在,如何找到两个数相乘最小的两个数?

你的主机中的软件中止了一个已建立的连接。解决方法

随机推荐

What details does C + + improve on the basis of C

Face recognition: attack types and anti spoofing techniques

Do you really understand the high concurrency?

On the stock trading of leetcode

C++在C的基础上改进了哪些细节

Hand tearing algorithm - handwritten singleton mode

[original] about the abnormal situation of high version poi autosizecolumn method

Astra: Apache Cassandra的未来是云原生

QT hybrid Python development technology: Python introduction, hybrid process and demo

Speed up your website with jsdelivr

More than 50 object detection datasets from different industries

洞察——风格注意力网络(SANet)在任意风格迁移中的应用

Learn Scala if Else statement

Cloud Alibabab笔记问世,全网详解仅此一份手慢无

【原创】关于高版本poi autoSizeColumn方法异常的情况

IOS upload app store error: this action cannot be completed - 22421 solution

ts流中的pcr与pts计算与逆运算

搜索引擎的日常挑战_4_外部异构资源 - 知乎

LadonGo开源全平台渗透扫描器框架

来自不同行业领域的50多个对象检测数据集