
Naive Bayes: Study Notes on the Basic Principles and a Code Implementation

2022-08-01 09:23:00 【Miracle Fan】

Naive Bayes

Overview

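A naive Bayes classifier first computes probabilities from the training set, which gives it a prior distribution and a conditional distribution. For both, it estimates the population from the sample (maximum likelihood estimation): the distributions observed in the training samples are used as approximations of the population distributions. Concretely, it computes

- the prior distribution: the probability of each label, i.e., the proportion of training samples in each class;

- the conditional distribution: the probability of each feature value, given the label.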

Once these two distributions are known, Bayes' theorem

$$P(A\mid B)=\frac{P(AB)}{P(B)}=\frac{P(B\mid A)\,P(A)}{P(B)}$$

can be used to predict a sample's label from its observed features.

Basic method

Prior distribution:

$$P(Y=c_k),\quad k=1,2,\cdots,K$$

Conditional distribution: this is where the "naive" in naive Bayes comes from. The naive assumption is that, given the class, the features are mutually (conditionally) independent, so the joint conditional probability factorizes into a product:

$$\begin{aligned} P(X=x\mid Y=c_k)&=P\left(X^{(1)}=x^{(1)},\cdots,X^{(n)}=x^{(n)}\mid Y=c_k\right)\\ &=\prod_{j=1}^{n}P\left(X^{(j)}=x^{(j)}\mid Y=c_k\right)\end{aligned}$$

The multiplication rule then gives the joint distribution $P(X,Y)$, and Bayes' theorem gives the posterior distribution. This is the probability that a sample belongs to class $c_k$ once its features are known, and it is what makes prediction possible:

$$\begin{aligned} P(Y=c_k\mid X=x)&=\frac{P(X=x\mid Y=c_k)\,P(Y=c_k)}{P(X=x)}\\ &=\frac{P(X=x\mid Y=c_k)\,P(Y=c_k)}{\sum_{k}P(X=x\mid Y=c_k)\,P(Y=c_k)}\\ &=\frac{P(Y=c_k)\prod_{j}P\left(X^{(j)}=x^{(j)}\mid Y=c_k\right)}{\sum_{k}P(Y=c_k)\prod_{j}P\left(X^{(j)}=x^{(j)}\mid Y=c_k\right)}\end{aligned}$$
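To make the normalization concrete, here is a minimal sketch that evaluates the last line of the formula when the prior and the per-feature conditional probabilities are already known. The function name `posterior`, the class names and all numbers are hypothetical and serve only as an illustration.

```python
import math


def posterior(priors, likelihoods):
    """Normalized posterior P(Y=c_k | X=x) under the naive independence assumption.

    priors: dict class -> P(Y=c_k)
    likelihoods: dict class -> list of P(X^(j)=x^(j) | Y=c_k), one entry per feature j
    """
    # Numerator for each class: P(Y=c_k) * prod_j P(X^(j)=x^(j) | Y=c_k)
    joint = {c: priors[c] * math.prod(likelihoods[c]) for c in priors}
    # Denominator P(X=x): the sum of the numerators over all classes
    evidence = sum(joint.values())
    return {c: v / evidence for c, v in joint.items()}


# Hypothetical numbers for two classes and two features
priors = {"spam": 0.4, "ham": 0.6}
likelihoods = {"spam": [0.8, 0.5], "ham": [0.1, 0.3]}
print(posterior(priors, likelihoods))
```

The numerators here are 0.16 and 0.018. Dividing each one by their sum turns them into probabilities that add up to 1.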

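Maximum likelihood estimation

In general we do not know the true distribution of the classes, nor the distribution of each feature within a given class. We therefore use maximum likelihood estimation to estimate these population probabilities from the sample.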

Maximum likelihood estimate of the prior distribution:

$$P(Y=c_k)=\frac{\sum_{i=1}^{N}I(y_i=c_k)}{N},\quad k=1,2,\cdots,K$$

Maximum likelihood estimate of the conditional probability:

$$P\left(X^{(j)}=a_{jl}\mid Y=c_k\right)=\frac{\sum_{i=1}^{N}I\left(x_i^{(j)}=a_{jl},\,y_i=c_k\right)}{\sum_{i=1}^{N}I(y_i=c_k)},\qquad j=1,2,\cdots,n,\ l=1,2,\cdots,S_j,\ y_i\in\{c_1,c_2,\cdots,c_K\}$$
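Both estimates are counts divided by counts. Below is a minimal sketch for categorical features, assuming each sample is a tuple of discrete values. The function `mle_estimates` and the toy data are hypothetical. A combination (feature, value, class) that never appears in the data is missing from the result dictionary, which corresponds to an estimate of 0.

```python
from collections import Counter


def mle_estimates(X, y):
    """Maximum likelihood estimates of the prior and the conditional probabilities."""
    N = len(y)
    class_counts = Counter(y)  # sum_i I(y_i = c_k)
    # Prior: P(Y=c_k) = count(y = c_k) / N
    prior = {c: n / N for c, n in class_counts.items()}
    # Joint counts: sum_i I(x_i^(j) = a_jl, y_i = c_k), keyed by (j, a_jl, c_k)
    joint_counts = Counter(
        (j, v, c) for xi, c in zip(X, y) for j, v in enumerate(xi)
    )
    # Conditional: P(X^(j)=a_jl | Y=c_k) = joint count / class count
    cond = {key: n / class_counts[key[2]] for key, n in joint_counts.items()}
    return prior, cond


# Hypothetical toy data: two features, labels -1 and 1
X = [(1, "S"), (1, "M"), (2, "S"), (2, "M")]
y = [-1, -1, 1, 1]
prior, cond = mle_estimates(X, y)
print(prior)
print(cond)
```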

Naive Bayes algorithm

- Input: training data $T=\{(x_1,y_1),(x_2,y_2),\cdots,(x_N,y_N)\}$, where $x_i=\left(x_i^{(1)},x_i^{(2)},\cdots,x_i^{(n)}\right)^T$ and $x_i^{(j)}$ is the $j$-th feature of the $i$-th sample. Each $x_i^{(j)}\in\{a_{j1},a_{j2},\dots,a_{jS_j}\}$, where $a_{jl}$ is the $l$-th possible value of the $j$-th feature, $j=1,2,\cdots,n$, $l=1,2,\cdots,S_j$, and $y_i\in\{c_1,c_2,\cdots,c_K\}$. Also given: an instance $x$.

- Output: the class of instance $x$.

- Compute the prior and conditional probabilities:

$$\begin{array}{l} P(Y=c_k)=\dfrac{\sum_{i=1}^{N}I(y_i=c_k)}{N},\quad k=1,2,\cdots,K\\ P\left(X^{(j)}=a_{jl}\mid Y=c_k\right)=\dfrac{\sum_{i=1}^{N}I\left(x_i^{(j)}=a_{jl},\,y_i=c_k\right)}{\sum_{i=1}^{N}I(y_i=c_k)}\\ j=1,2,\cdots,n;\quad l=1,2,\cdots,S_j;\quad k=1,2,\cdots,K\end{array}$$

- For a given instance $x=\left(x^{(1)},x^{(2)},\cdots,x^{(n)}\right)^T$, look at the posterior derived above. Its denominator is a sum over all classes of prior times conditional probability. That sum is the same constant for every class $c_k$, so it cannot change which class scores highest. It is therefore enough to compute the numerator for each class:

$$P(Y=c_k)\prod_{j=1}^{n}P\left(X^{(j)}=x^{(j)}\mid Y=c_k\right),\quad k=1,2,\cdots,K$$

- Determine the class of $x$: use its known features to compute this score for every class, and assign $x$ to the class with the largest value:

$$y=\arg\max_{c_k}P(Y=c_k)\prod_{j=1}^{n}P\left(X^{(j)}=x^{(j)}\mid Y=c_k\right)$$
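The three steps can be sketched end to end for discrete features. This is an illustrative sketch, not the author's code. It assumes categorical features and applies no smoothing. The names `train` and `predict` and the 15-sample toy dataset are hypothetical.

```python
from collections import Counter


def train(X, y):
    """Step 1: maximum likelihood estimates of the prior and the count tables."""
    N = len(y)
    class_counts = Counter(y)                          # sum_i I(y_i = c_k)
    prior = {c: n / N for c, n in class_counts.items()}
    joint_counts = Counter(                            # sum_i I(x_i^(j) = a_jl, y_i = c_k)
        (j, v, c) for xi, c in zip(X, y) for j, v in enumerate(xi)
    )
    return prior, joint_counts, class_counts


def predict(x, prior, joint_counts, class_counts):
    """Steps 2 and 3: score every class, then take the arg max."""
    scores = {}
    for c, p in prior.items():
        score = p
        for j, v in enumerate(x):
            # Counter returns 0 for an unseen (j, v, c), i.e. an MLE of 0
            score *= joint_counts[(j, v, c)] / class_counts[c]
        scores[c] = score
    return max(scores, key=scores.get), scores


# Hypothetical toy data: feature 1 in {1, 2, 3}, feature 2 in {"S", "M", "L"}
X = [(1, "S"), (1, "M"), (1, "M"), (1, "S"), (1, "S"),
     (2, "S"), (2, "M"), (2, "M"), (2, "L"), (2, "L"),
     (3, "L"), (3, "M"), (3, "M"), (3, "L"), (3, "L")]
y = [-1, -1, 1, 1, -1, -1, -1, 1, 1, 1, 1, 1, 1, 1, -1]

label, scores = predict((2, "S"), *train(X, y))
print(label, scores)
```

For $x=(2,S)$ the score of class 1 is $\frac{9}{15}\cdot\frac{3}{9}\cdot\frac{1}{9}=\frac{1}{45}$ and the score of class $-1$ is $\frac{6}{15}\cdot\frac{2}{6}\cdot\frac{3}{6}=\frac{1}{15}$, so the sketch predicts $-1$.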

Probability (mass and density) functions

Bernoulli model

For Boolean features (true and false, or 1 and 0), use the Bernoulli model.

If the feature value is 1, then $P(x_i\mid y_k)=P(x_i=1\mid y_k)$

If the feature value is 0, then $P(x_i\mid y_k)=1-P(x_i=1\mid y_k)$
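For a binary value $x_i\in\{0,1\}$, the two cases can be written together as $P(x_i\mid y_k)=p^{x_i}(1-p)^{1-x_i}$, where $p=P(x_i=1\mid y_k)$. Below is a minimal sketch of the likelihood of a whole binary feature vector for one class. The function name and the parameter values are hypothetical.

```python
def bernoulli_likelihood(x, p):
    """Product over features of P(x_i | y_k) under the Bernoulli model.

    x: list of 0/1 feature values
    p: list of P(x_i = 1 | y_k), one entry per feature
    """
    prob = 1.0
    for xi, pi in zip(x, p):
        # x_i = 1 -> P(x_i=1|y_k); x_i = 0 -> 1 - P(x_i=1|y_k)
        prob *= pi if xi == 1 else 1.0 - pi
    return prob


# Hypothetical parameters for one class y_k
print(bernoulli_likelihood([1, 0, 1], [0.9, 0.2, 0.5]))
```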

Bayesian estimation: smoothing

Maximum likelihood estimation can give some of the estimated probabilities a value of 0. The posterior is a product, so a single zero factor makes the whole product 0 regardless of the other features, and this biases the classification. One way to solve this is to use Bayesian estimation. Specifically, the Bayesian estimate of the conditional probability is

$$P_{\lambda}\left(X^{(j)}=a_{jl}\mid Y=c_k\right)=\frac{\sum_{i=1}^{N}I\left(x_i^{(j)}=a_{jl},\,y_i=c_k\right)+\lambda}{\sum_{i=1}^{N}I(y_i=c_k)+S_j\lambda}$$

Here $\lambda\geqslant 0$. This is equivalent to adding a positive number $\lambda>0$ to the frequency of each possible value of the random variable. When $\lambda=0$, it reduces to maximum likelihood estimation. A common choice is $\lambda=1$, which is called Laplace smoothing. Clearly, for any $l=1,2,\cdots,S_j$ and $k=1,2,\cdots,K$, we have

$$P_{\lambda}\left(X^{(j)}=a_{jl}\mid Y=c_k\right)>0,\qquad \sum_{l=1}^{S_j}P_{\lambda}\left(X^{(j)}=a_{jl}\mid Y=c_k\right)=1$$

Likewise, the Bayesian estimate of the prior probability is

$$P_{\lambda}(Y=c_k)=\frac{\sum_{i=1}^{N}I(y_i=c_k)+\lambda}{N+K\lambda}$$
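Here is a minimal sketch of both smoothed estimates. It assumes categorical features and that the full set of possible values of each feature (so $S_j$) is known in advance. The function `smoothed_estimates`, its `values_per_feature` argument and the toy data are hypothetical. Setting `lam=0` recovers the maximum likelihood estimates.

```python
from collections import Counter


def smoothed_estimates(X, y, values_per_feature, lam=1.0):
    """Bayesian (additive) estimates of P(Y=c_k) and P(X^(j)=a_jl | Y=c_k).

    values_per_feature[j] is the set of possible values of feature j (size S_j).
    lam=1 gives Laplace smoothing; lam=0 gives maximum likelihood estimation.
    """
    N = len(y)
    classes = sorted(set(y))
    K = len(classes)
    class_counts = Counter(y)
    prior = {c: (class_counts[c] + lam) / (N + K * lam) for c in classes}
    joint = Counter((j, v, c) for xi, c in zip(X, y) for j, v in enumerate(xi))
    cond = {}
    for j, values in enumerate(values_per_feature):
        S_j = len(values)
        for c in classes:
            for a in values:
                # Unseen (j, a, c) has count 0 but still receives lam
                cond[(j, a, c)] = (joint[(j, a, c)] + lam) / (class_counts[c] + S_j * lam)
    return prior, cond


# Hypothetical toy data: feature value 3 and value "L" never occur in training
X = [(1, "S"), (1, "M"), (2, "S"), (2, "M")]
y = [-1, -1, 1, 1]
values_per_feature = [{1, 2, 3}, {"S", "M", "L"}]
prior, cond = smoothed_estimates(X, y, values_per_feature)
print(prior, cond[(0, 3, -1)])
```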

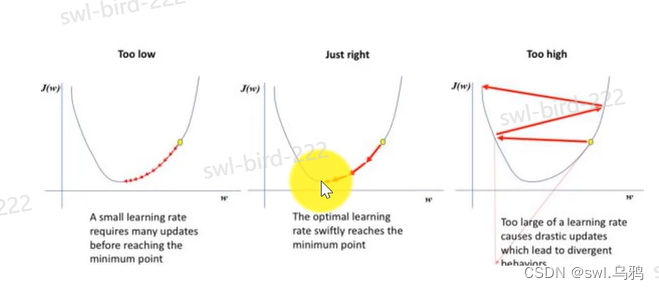

GaussianNB (Gaussian naive Bayes)

The likelihood of the features is assumed to be Gaussian.

Probability density function:

$$P(x_i\mid y_k)=\frac{1}{\sqrt{2\pi\sigma^2_{y_k}}}\exp\left(-\frac{(x_i-\mu_{y_k})^2}{2\sigma^2_{y_k}}\right)$$

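Mean (mathematical expectation): $\mu_{y_k}$, the mean of the feature over the samples of class $y_k$

Variance: $\sigma^2_{y_k}=\frac{\sum(X-\mu_{y_k})^2}{N}$, where the sum and $N$ run over the samples of class $y_k$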
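Here is a minimal pure-Python sketch of these formulas for one feature within one class. It uses the $1/N$ (population) variance from the formula. NumPy's `np.std` also divides by $N$ by default (`ddof=0`), so this matches the `summarize` method of the implementation below. The function names and sample values are hypothetical.

```python
import math


def gaussian_params(values):
    """Mean and variance (divided by N) of one feature within one class."""
    N = len(values)
    mu = sum(values) / N
    var = sum((v - mu) ** 2 for v in values) / N
    return mu, var


def gaussian_pdf(x, mu, var):
    """P(x_i | y_k) for a normal distribution with mean mu and variance var."""
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


# Hypothetical values of one feature for the samples of one class
mu, var = gaussian_params([4.9, 5.1, 5.0, 5.2, 4.8])
print(mu, var, gaussian_pdf(5.0, mu, var))
```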

Code implementation:

```python
import math

import numpy as np


class NaiveBayes:
    """Gaussian naive Bayes classifier."""

    def __init__(self):
        self.model = None   # label -> array of shape (n_features, 2): [mean, std]
        self.priors = None  # label -> prior probability P(Y = c_k)

    def summarize(self, train_data):
        # Per-feature mean and standard deviation (np.std divides by N)
        train_data = np.array(train_data)
        mean = np.mean(train_data, axis=0)
        std = np.std(train_data, axis=0)
        return np.stack((mean, std), axis=1)

    def fit(self, X, y):
        # Group the training samples by label
        data = {label: [] for label in set(y)}
        for f, label in zip(X, y):
            data[label].append(f)
        # Maximum likelihood estimate of the prior: the class frequency
        self.priors = {label: len(value) / len(y) for label, value in data.items()}
        self.model = {label: self.summarize(value) for label, value in data.items()}
        return 'gaussianNB train done!'

    # Gaussian probability density function (scalar version)
    def gaussian_probability(self, x, mean, stdev):
        exponent = math.exp(-(math.pow(x - mean, 2) / (2 * math.pow(stdev, 2))))
        return (1 / (math.sqrt(2 * math.pi) * stdev)) * exponent

    # Vectorized version: product of the densities of all features
    def gaussian_probability_np(self, x, summarize):
        x = np.array(x).reshape(-1, 1)  # column vector, one row per feature
        mean, std = np.hsplit(summarize, indices_or_sections=2)
        exponent = np.exp(-((x - mean) ** 2 / (2 * (std ** 2))))
        prob = (1 / (np.sqrt(2 * np.pi) * std)) * exponent
        return float(np.prod(prob))     # prod_j P(x^(j) | y)

    # Unnormalized posterior of each label: P(Y=c_k) * prod_j P(x^(j) | Y=c_k)
    def calculate_probabilities_np(self, input_data):
        probabilities = {}
        for label, value in self.model.items():
            # Start from the prior rather than from 1
            probabilities[label] = self.priors[label] * self.gaussian_probability_np(input_data, value)
        return probabilities

    # Same computation, one feature at a time
    def calculate_probabilities(self, input_data):
        probabilities = {}
        for label, value in self.model.items():
            probabilities[label] = self.priors[label]
            # Multiply in the density of each feature
            for i in range(len(value)):
                mean, stdev = value[i]
                probabilities[label] *= self.gaussian_probability(input_data[i], mean, stdev)
        return probabilities

    # Predict the class with the largest score
    def predict(self, X_test):
        # calculate_probabilities_np returns a dict {label: score, ...}
        probabilities = self.calculate_probabilities_np(X_test)
        return max(probabilities, key=probabilities.get)

    # Accuracy on a test set
    def score(self, X_test, y_test):
        right = 0
        for X, y in zip(X_test, y_test):
            if self.predict(X) == y:
                right += 1
        return right / float(len(X_test))
```

Below, the Bayes classifier is used to classify a dataset, and the resulting prediction and accuracy are printed.

Worked examples

1. Iris dataset

```python
import math

import numpy as np
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split


# data: iris features X and labels y
def create_data():
    iris = load_iris()
    df = pd.DataFrame(iris.data, columns=iris.feature_names)
    df['label'] = iris.target
    df.columns = ['sepal length', 'sepal width', 'petal length', 'petal width', 'label']
    data = np.array(df.iloc[:, :])
    return data[:, :-1], data[:, -1]


X, y = create_data()
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)

model = NaiveBayes()  # the class defined above
model.fit(X_train, y_train)
score = model.score(X_test, y_test)

print(model.predict([4.4, 3.2, 1.3, 0.2]))
print(score)
```
