
Real-time data synchronization based on Flink SQL CDC

2020-11-06 01:15:00 【InfoQ】

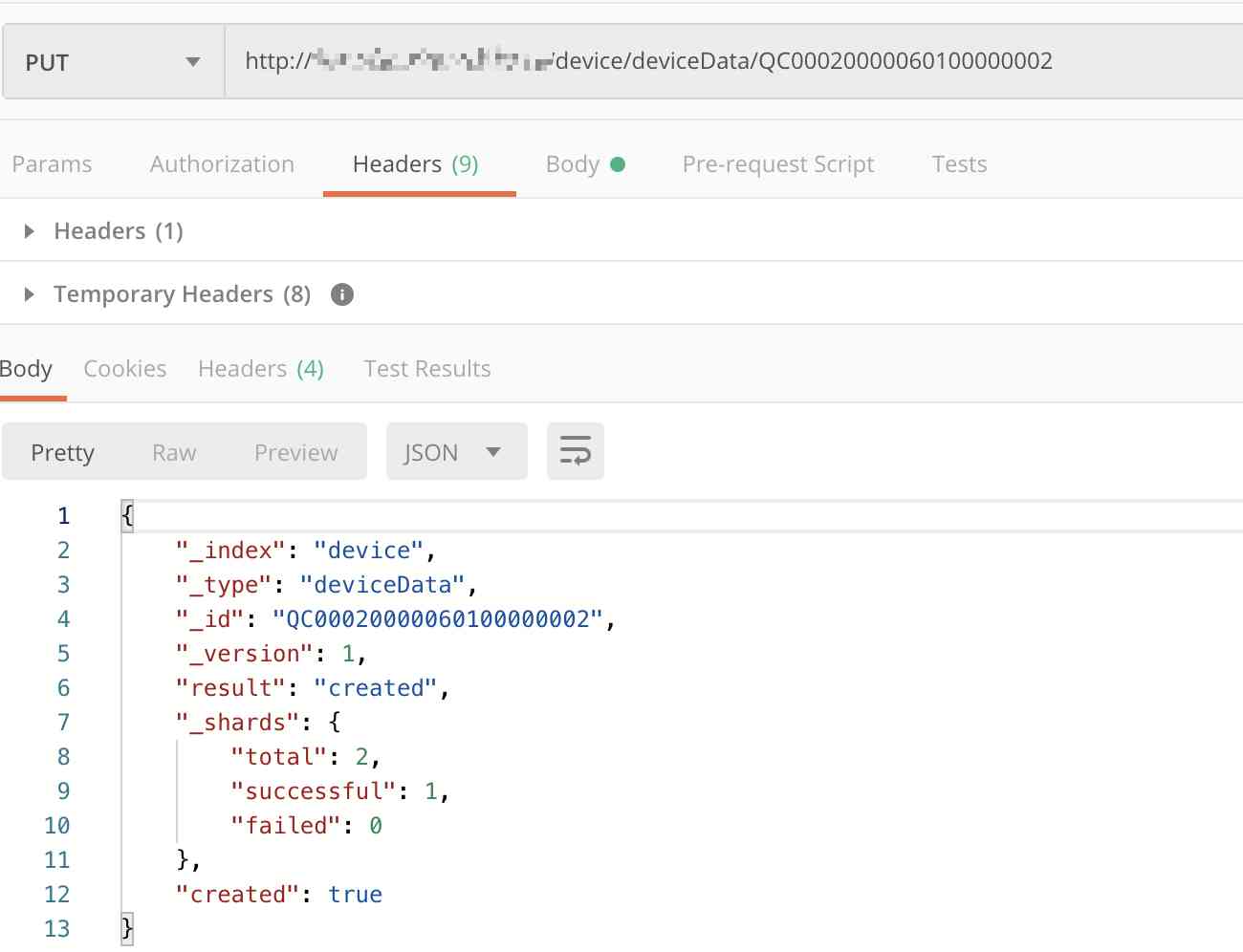

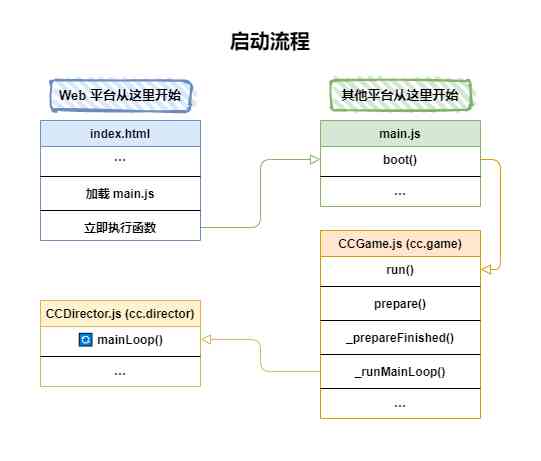

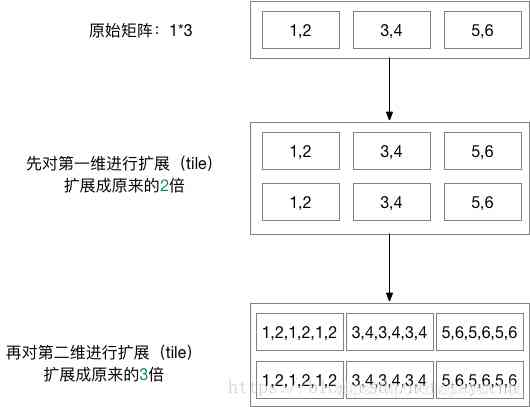

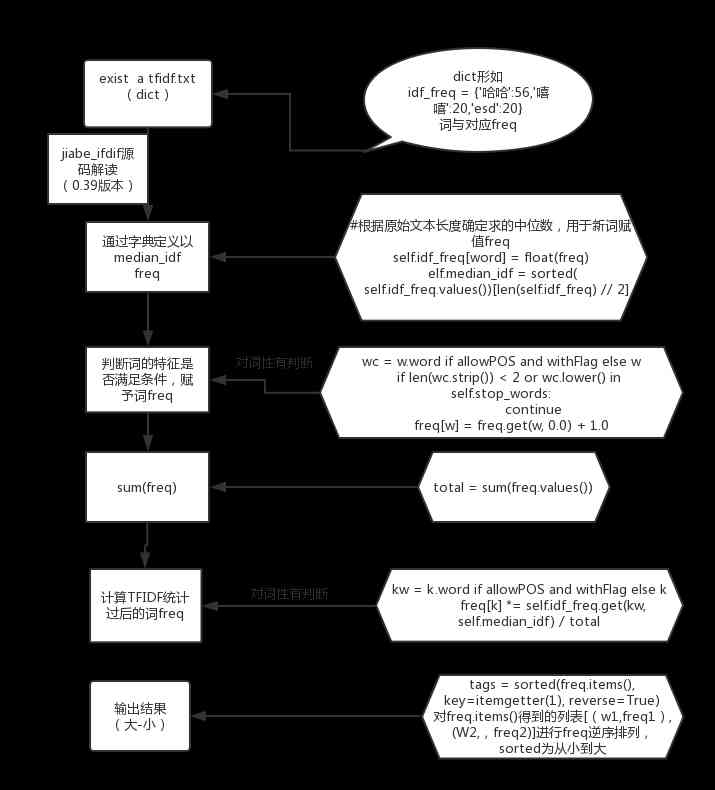

{"type":"doc","content":[{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" author : Wu Zhen ( Cloud evil )"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Arrangement : Chen Zhengyu (Flink Community volunteers )"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"Flink 1.11 Introduced Flink SQL CDC,CDC What changes can it bring to our data and business ? This paper is written by Apache Flink PMC, Alibaba technology expert Wu Zhen ( Cloud evil ) Share , The content will be from the traditional data synchronization scheme , be based on Flink CDC Synchronous solutions and more application scenarios and CDC Future development planning and other aspects of the introduction and demonstration ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"1、 Traditional data synchronization scheme "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"2、 be based on Flink SQL CDC Data synchronization scheme of (Demo)"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"3、Flink SQL CDC More application scenarios "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"4、Flink SQL CDC The future plan of "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Live review 
:"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"link","attrs":{"href":"https://www.bilibili.com/video/BV1zt4y1D7kt/","title":""},"content":[{"type":"text","text":"https://www.bilibili.com/video/BV1zt4y1D7kt/"}]}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":2},"content":[{"type":"text","text":" Traditional data synchronization scheme and Flink SQL CDC Solution "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Business systems often encounter the need to update data to multiple stores . for example : An order system has just started, just need to write to the database to complete business use . One day BI The team expects full-text indexing of the database , So we have to write more data to ES in , Some time after the transformation , There is a need to write to Redis In cache ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/37/37bd656e21eca39e3473101141e367c5.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Obviously, this model is unsustainable , This kind of dual write to each data storage system may lead to non maintenance and expansion , Data consistency and so on , Distributed transactions need to be introduced , The cost and complexity also increase . 
We can go through CDC(Change Data Capture) Tool to decouple , Synchronization to downstream storage systems that need to be synchronized . Improve the robustness of the system in this way , It is also convenient for subsequent maintenance ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/22/22f36f144b32026e8068f49466522b31.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":3},"content":[{"type":"text","text":"Flink SQL CDC Data synchronization and principle analysis "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"CDC The full name is Change Data Capture , It is a relatively broad concept , As long as you can capture the changed data , We can all call it CDC . 
The industry mainly has query based CDC And log based CDC , You can compare their functions and differences from the table below ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/dc/dc007990d5f22e16dc36d25a61e9f442.jpeg","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" After the above comparison , We can find that based on logs CDC There are several advantages :"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Able to capture all data changes , Capture the complete change record . 
Disaster recovery in different places , Data backup and other scenarios are widely used , If it's query based CDC It may lead to data loss in the middle of two queries "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Every time DML All operations are recorded without query CDC This initiates a full table scan for filtering , With higher efficiency and performance , With low latency , The advantage of not increasing database load "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· No intrusion service required , Business decoupling , There is no need to change the business model "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Capture delete events and capture the state of old records , In the query CDC in , Periodic queries cannot detect whether intermediate data is deleted "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/16/16bb1c899c42eb924ac16936f30063a6.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":3},"content":[{"type":"text","text":" Log based CDC Introduction of the plan "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" from ETL From the perspective of , Generally, the data collected are business library data , Use here MySQL As a database to be collected , adopt Debezium hold MySQL Binlog Collect and send to Kafka Message queue , And then dock some real-time computing 
engines or APP After consumption, the data is transferred to input OLAP System or other storage medium ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"Flink Hope to get through more data sources , Full computing power . Our production mainly comes from business log and database log ,Flink In the business log support has been very perfect , But in terms of database log support, there are Flink 1.11 The front still belongs to a blank , That's why you need to integrate CDC One of the reasons ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"Flink SQL Internal support for complete changelog Mechanism , therefore Flink docking CDC Data just need to put CDC Data to Flink The data we know , So in Flink 1.11 It's reconstructed TableSource Interface , In order to better support and integrate 
CDC."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/4c/4c3fe47c4c9c437eeab1ff817e84cdfd.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/3b/3b1c09ab7941fb18df97e7ebd2f3b0c7.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" After the reconstruction TableSource The output is RowData data structure , Represents a row of data . stay RowData There will be a metadata message on it , We call it RowKind .RowKind It includes inserting 、 Before updating 、 After the update 、 Delete , This and the database in the binlog The concept is very similar . adopt Debezium Collected JSON Format , Contains old data and new data rows as well as the original data information ,op Of u Said is update Update operation identifier ,ts_ms Indicates the timestamp of synchronization . 
therefore , docking Debezium JSON The data of , In fact, this is the original JSON Data to Flink cognitive RowData."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":3},"content":[{"type":"text","text":" choice Flink As ETL Tools "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" When choosing Flink As ETL Tool time , In the data synchronization scenario , The synchronization structure is shown in the figure below :"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/90/901ca16246899d3df2110305af8499a3.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" adopt Debezium Subscribe to the business library MySQL Of Binlog Transfer to Kafka ,Flink By creating a Kafka Table specifies format The format is debezium-json , And then through Flink After calculation or directly inserted into other external data storage system , For example Elasticsearch and 
PostgreSQL."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/e1/e11d15e04b580dd9b9e587fafcd77ba9.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" But there's a drawback to this architecture , We can see that there are too many components at the acquisition end, which leads to complicated maintenance , At this point, I wonder if I can use Flink SQL Direct docking MySQL Of binlog Data? , Is there any alternative ?"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" The answer is yes ! After improvement, the structure is shown in the figure below :"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/92/92c1bedcdf9b678212048c04aeb10b26.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" The community has developed flink-cdc-connectors Components , This is one that can be directly from MySQL、PostgreSQL The database directly reads the total data and incremental change data source Components . 
Now it's open source , Open source address :"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"blockquote","content":[{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"link","attrs":{"href":"https://github.com/ververica/flink-cdc-connectors","title":""},"content":[{"type":"text","text":"https://github.com/ververica/flink-cdc-connectors"}]}]}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"flink-cdc-connectors Can be substituted for Debezium+Kafka Data acquisition module of , So as to achieve Flink SQL collection + Calculation + transmission (ETL) Integrated , The advantages of this are as follows :"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Open the box , Easy to use "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Reduce maintenance components , Simplify real-time Links , Reduce deployment costs "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Reduce end to end delay "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Flink Self support Exactly Once Read and calculate "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Data doesn't land , Reduce storage costs "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Supports full and incremental streaming reading 
"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· binlog The acquisition site can be traced back to *"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":2},"content":[{"type":"text","text":" be based on Flink SQL CDC Data synchronization scheme practice of "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Let's bring you 3 about Flink SQL + CDC More cases are used in actual scenarios . At the end of the experiment , You need Docker、MySQL、Elasticsearch And so on , Please refer to each case reference document for details ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":3},"content":[{"type":"text","text":" Case study 1 : Flink SQL CDC + JDBC Connector"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" This case is done by subscribing to our order form ( Fact table ) data , adopt Debezium take MySQL Binlog Sent to the Kafka, Through the dimension table Join and ETL The operation outputs the result to downstream PG database . 
For details, please refer to Flink Official account :《Flink JDBC Connector:Flink Best practices for integrating with databases 》 Case for practical operation ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"link","attrs":{"href":"https://www.bilibili.com/video/BV1bp4y1q78d","title":""},"content":[{"type":"text","text":"https://www.bilibili.com/video/BV1bp4y1q78d"}]}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/99/99c39126acf4426a79acafd1f5f3c96d.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":3},"content":[{"type":"text","text":" Case study 2 : CDC Streaming ETL"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Simulate the order form and logistics table of e-commerce company , Statistical analysis of order data is needed , For different information needs to be associated, after the subsequent formation of the order wide table , To the downstream business side to use ES Do data analysis , This case demonstrates how to rely only on Flink Don't rely on other components , With the help of Flink Powerful computing power to put Binlog The data stream is associated once and synchronized to ES 
."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/2f/2f3ad35133725315ce1977a1edff666e.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" For example, the following paragraph Flink SQL The code can do real-time synchronization MySQL in orders The total quantity of a watch + The purpose of incremental data ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"codeblock","attrs":{"lang":""},"content":[{"type":"text","text":"CREATE TABLE orders (\n order_id INT,\n order_date TIMESTAMP(0),\n customer_name STRING,\n price DECIMAL(10, 5),\n product_id INT,\n order_status BOOLEAN\n) WITH (\n 'connector' = 'mysql-cdc',\n 'hostname' = 'localhost',\n 'port' = '3306',\n 'username' = 'root',\n 'password' = '123456',\n 'database-name' = 'mydb',\n 'table-name' = 'orders'\n);\n\nSELECT * FROM orders"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" In order to make readers better understand and understand , We also provide docker-compose Test environment for , For more detailed case tutorials, please refer to the video link and document link below ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"blockquote","content":[{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Video link 
:"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"link","attrs":{"href":"https://www.bilibili.com/video/BV1zt4y1D7kt","title":""},"content":[{"type":"text","text":"https://www.bilibili.com/video/BV1zt4y1D7kt"}]}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Documentation tutorial :"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"link","attrs":{"href":"https://github.com/ververica/flink-cdc-connectors/wiki/","title":""},"content":[{"type":"text","text":"https://github.com/ververica/flink-cdc-connectors/wiki/"}]},{"type":"text","text":" Chinese Course "}]}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":3},"content":[{"type":"text","text":" Case study 3 : Streaming Changes to Kafka"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" The following example is about GMV Day level statistics of the whole station . 
Include insert / to update / Delete , Only paid orders can be counted into GMV , Observe GMV Change in value ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/53/533d676c9e779153d444add1360ce717.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"blockquote","content":[{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Video link :"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"link","attrs":{"href":"https://www.bilibili.com/video/BV1zt4y1D7kt","title":""},"content":[{"type":"text","text":"https://www.bilibili.com/video/BV1zt4y1D7kt"}]}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Documentation tutorial :"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"link","attrs":{"href":"https://github.com/ververica/flink-cdc-connectors/wiki/","title":""},"content":[{"type":"text","text":"https://github.com/ververica/flink-cdc-connectors/wiki/"}]},{"type":"text","text":" Chinese Course "}]}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":2},"content":[{"type":"text","text":"Flink SQL CDC More application scenarios "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"Flink SQL CDC It can not only be flexibly applied to real-time data synchronization scenarios , It can also open up more scenarios for users to choose from 
."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":3},"content":[{"type":"text","text":"Flink Flexible positioning in data synchronization scenarios "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· If you already have Debezium/Canal + Kafka The acquisition layer of (E), have access to Flink As a computing layer (T) And the transport layer (L)"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· It can also be used. Flink replace Debezium/Canal , from Flink Change data directly to Kafka,Flink Unified ETL technological process "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· If you don't need to Kafka Data caching , Can be Flink Change data directly to the destination ,Flink Unified ETL technological process "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":3},"content":[{"type":"text","text":"Flink SQL CDC : Get through more scenes "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Real time data synchronization , The data backup , Data migration , Data warehouse construction "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" advantage : Rich upstream and downstream (E & L), Powerful computing (T), Easy-to-use API(SQL), Stream computing has low latency "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Real time materialized views on the 
database 、 Streaming data analysis "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Index building and real-time maintenance "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Business cache Refresh "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Audit trail "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Decoupling of microservices , Read / write separation "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· be based on CDC Dimension table Association of "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" Here's why CDC Dimension table association is faster than query based dimension table queries ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","marks":[{"type":"strong"}],"text":"■ Query based dimension table Association "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/72/72cfba125a85149e2b2356d93cb6f386.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" At 
present, the query method of dimension table is mainly through Join The way , After data comes in from the message queue, it is initiated to the database IO Request , The results are returned by the database, merged and then output to the downstream , But this process inevitably produces IO The consumption of communication with the network , The throughput cannot be further improved , Even with some caching mechanism , But because the cache update is not timely, the accuracy may not be so high ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","marks":[{"type":"strong"}],"text":"■ be based on CDC Dimension table Association of "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"image","attrs":{"src":"https://static001.geekbang.org/infoq/b3/b3190b0323b6761c648e18b67263a741.png","alt":null,"title":"","style":[{"key":"width","value":"100%"},{"key":"bordertype","value":"none"}],"href":"","fromPaste":false,"pastePass":false}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" We can go through CDC Import the data of dimension table into dimension table Join In the state of , In this State Because it's distributed State , It's preserved Database Inside the real-time database dimension table image , When message queue data comes in, there is no need to query the remote database again , Query the local disk directly State , Avoided IO operation , Low latency 、 High throughput , More accurate 
."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"Tips: At present, this function is in 1.12 Version planning , Please pay attention to the specific progress FLIP-132 ."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":2},"content":[{"type":"text","text":" The future planning "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· FLIP-132 :Temporal Table DDL( be based on CDC Dimension table Association of )"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Upsert Data output to Kafka"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· added CDC formats Support (debezium-avro, OGG, Maxwell)"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· Batch mode supports processing CDC data "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"· flink-cdc-connectors Support more databases "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":2},"content":[{"type":"text","text":" summary "}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":" This paper compares the traditional data synchronization scheme with Flink SQL CDC The plan shared Flink CDC The advantages of , At the same time, it introduces CDC It is divided into log 
type and a query type. The follow-up demo then shows how to subscribe to the MySQL binlog with Debezium, and how flink-cdc-connectors can replace that subscription component with an integrated solution. In addition, it explains in detail how Flink CDC applies to data synchronization, materialized views, multi-datacenter backup, and other scenarios, and it highlights the community's future plans: the advantages of the CDC-based dimension table join over the traditional dimension table join, and the ongoing work on CDC components."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"I hope this talk gives everyone a new understanding of Flink SQL CDC, and that Flink CDC will bring more convenient development and richer use cases to real production work in the future."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"heading","attrs":{"align":null,"level":2},"content":[{"type":"text","text":"Q & A"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","marks":[{"type":"strong"}],"text":"1. How can the result of a GROUP BY be written to Kafka?"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"Because the result of a GROUP BY is an updating result, it cannot currently be written to an append-only message queue. Writing updating results to Kafka will be natively supported in version 1.12.
In version 1.11, this can be achieved with the changelog-json format provided by the flink-cdc-connectors project; see the documentation for details."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"blockquote","content":[{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"Documentation link:"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"link","attrs":{"href":"https://github.com/ververica/flink-cdc-connectors/wiki/Changelog-JSON-Format","title":""},"content":[{"type":"text","text":"https://github.com/ververica/flink-cdc-connectors/wiki/Changelog-JSON-Format"}]}]}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","marks":[{"type":"strong"}],"text":"2. Does CDC need to guarantee in-order consumption?"}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null},"content":[{"type":"text","text":"Yes. When data is synchronized to Kafka, order must first be preserved within each Kafka partition, and the change data for the same key must be written to the same Kafka partition. Only then can Flink guarantee the order when it reads the data."}]},{"type":"paragraph","attrs":{"indent":0,"number":0,"align":null,"origin":null}}]}
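
As a rough illustration of the answer to question 2, the following Python sketch simulates key-based partitioning without a real Kafka cluster. It is only a sketch under stated assumptions: `partition_for`, `produce`, and `apply_changes` are hypothetical helper names, CRC32 stands in for Kafka's own key hashing, and the `c`/`u`/`d` operation codes loosely follow Debezium's convention.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition deterministically (stand-in for Kafka's key hashing)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def produce(changes, num_partitions):
    """Append each change to the partition chosen by its key, preserving arrival order."""
    partitions = defaultdict(list)
    for change in changes:
        partitions[partition_for(change["key"], num_partitions)].append(change)
    return partitions


def apply_changes(partitions):
    """Replay every partition in its own order and build the final table state."""
    state = {}
    # Partitions may be read in any order; only the order inside a partition matters.
    for _, log in sorted(partitions.items()):
        for change in log:
            if change["op"] == "d":
                state.pop(change["key"], None)
            else:  # "c" (create) or "u" (update)
                state[change["key"]] = change["value"]
    return state


# Hypothetical change events in the order the database produced them.
changes = [
    {"op": "c", "key": "order-1", "value": 10},
    {"op": "c", "key": "order-2", "value": 20},
    {"op": "u", "key": "order-1", "value": 15},
    {"op": "d", "key": "order-2", "value": None},
    {"op": "u", "key": "order-1", "value": 18},
]

partitions = produce(changes, num_partitions=3)
final_state = apply_changes(partitions)
print(final_state)  # {'order-1': 18}
```

Because all changes for `order-1` land in one partition in their original order, the replay ends with the latest value, and `order-2` is gone. If the changes for one key were spread across partitions, a consumer could apply an older update after a newer one.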

Copyright notice

This article was created by [InfoQ]. Please include a link to the original when reposting. Thank you.
