
[Point cloud compression] Variational image compression with a scale hyperprior

2022-06-12 02:49:00 【Jonathan_ Paul 10】

Variational Image Compression with A Scale Hyperprior

This paper presents a new compression method that uses a hyperprior. A hyperprior is a "prior on the prior".

Intro

The paper defines side information as additional bits of information sent from the encoder to the decoder, which signal modifications to the entropy model intended to reduce the mismatch. Side information can therefore be viewed as a prior on the parameters of the entropy model, which makes it a "prior on the prior" of the latent representation.

Ideas

Background

Transform-based models

Transform coding is now popular in deep learning. An input image vector $x$ is mapped by a parametric transform to:

$$y = g_a(x; \phi_g)$$

Here $y$ is the latent representation and $\phi_g$ denotes the parameters of the transform (the encoder); this step is called the parametric analysis transform. Note that $y$ must be quantized to discrete values before entropy coding so that it can be coded losslessly. Writing the quantized latent as $\hat{y}$, the reconstruction is obtained with a second transform:

$$\hat{x} = g_s(\hat{y}; \theta_g)$$

This step is called the parametric synthesis transform and can be regarded as the decoder; $\theta_g$ denotes the decoder's parameters.
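The analysis, quantization, and synthesis steps can be sketched in a few lines of NumPy. This is only an illustration under strong assumptions: a random orthonormal matrix stands in for the learned analysis transform, its transpose stands in for the synthesis transform, and the names `quantize`, `phi_g`, and `theta_g` as used here are hypothetical. The paper's transforms are learned nonlinear networks, not matrices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumption: an orthonormal matrix plays the role of the analysis parameters
# (phi_g); its transpose plays the role of the synthesis parameters (theta_g).
phi_g, _ = np.linalg.qr(rng.normal(size=(8, 8)))
theta_g = phi_g.T

def g_a(x, phi):
    """Parametric analysis transform: image vector -> latent y."""
    return phi @ x

def quantize(y):
    """Round to the nearest integer so the result can be entropy coded losslessly."""
    return np.round(y)

def g_s(y_hat, theta):
    """Parametric synthesis transform: quantized latent -> reconstruction."""
    return theta @ y_hat

x = rng.normal(scale=10.0, size=8)   # toy "image" vector
y = g_a(x, phi_g)
y_hat = quantize(y)
x_hat = g_s(y_hat, theta_g)

print("max quantization error:", np.max(np.abs(y - y_hat)))
print("reconstruction MSE:", np.mean((x - x_hat) ** 2))
```

With an orthonormal transform, the reconstruction error in $x$ has the same norm as the rounding error in $y$, so all distortion in this toy setup comes from quantization.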

VAE

Unlike a plain autoencoder (AE), a variational autoencoder (VAE) maps the input to a distribution (usually Gaussian) rather than to a single vector such as the $y$ of the previous section. A VAE uses an inference model to infer the latent representation from the source image, and a generative model to produce the reconstructed image from that latent representation.

For more details, see [1]. Note, however, that in this paper $z$ denotes the hyperprior information rather than the VAE latent; do not confuse the two.
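The difference between an AE encoder and a VAE inference model can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the linear weights `W_mu` and `W_logvar`, the function names, and the toy input are hypothetical and are not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
W_mu = rng.normal(size=(2, 4))       # hypothetical weights for the mean
W_logvar = rng.normal(size=(2, 4))   # hypothetical weights for the log-variance

def ae_encode(x):
    """Plain autoencoder: one fixed latent vector per input."""
    return W_mu @ x

def vae_encode(x):
    """VAE inference model: mean and standard deviation of a Gaussian over the latent."""
    mu = W_mu @ x
    sigma = np.exp(0.5 * (W_logvar @ x))
    return mu, sigma

def sample_latent(mu, sigma, rng):
    """Draw one latent sample as mu + sigma * eps, with eps ~ N(0, I)."""
    return mu + sigma * rng.standard_normal(mu.shape)

x = np.array([0.5, -1.0, 0.25, 2.0])
mu, sigma = vae_encode(x)
samples = np.stack([sample_latent(mu, sigma, rng) for _ in range(3)])

print("AE latent:      ", ae_encode(x))
print("VAE mean, std:  ", mu, sigma)
print("VAE samples:\n", samples)
```

The AE returns the same vector every time, while repeated calls to `sample_latent` return different draws from the distribution the VAE encoder describes.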

Model

As shown in Figure 2, the latent representation $y$ (second panel from the left in Figure 2) exhibits structural dependencies (spatial coupling) that a fully factorized variational model cannot capture. The model therefore introduces a hyperprior.

A hyperprior is a prior on the prior. Concretely, a second latent representation $z$ is built on top of $y$ to capture these spatial dependencies. Notably, $z$ is the side information ($z$ is then quantized, compressed, and transmitted as side information). Once $z$ is obtained, its quantized version $\hat{z}$ is used to estimate $\hat{\sigma}$. The decoder uses this $\hat{\sigma}$ to recover $\hat{y}$, from which it obtains $\hat{x}$.
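A toy sketch can show why per-element scales help. It assumes a zero-mean Gaussian entropy model whose probability mass is integrated over each integer bin, and it uses hand-written stand-ins for the hyper-analysis and hyper-synthesis steps: `h_a` (block-wise mean absolute value) and `h_s` (one scale per block, with a hypothetical floor of 0.5). These names, the block size, and the toy latent are assumptions for illustration; in the paper both steps are learned networks. The comparison also ignores the bits needed to transmit $\hat{z}$ itself.

```python
import numpy as np
from math import erf, sqrt

def gaussian_cdf(v, sigma):
    """CDF of a zero-mean Gaussian with standard deviation sigma."""
    return 0.5 * (1.0 + erf(v / (sigma * sqrt(2.0))))

def bits(y_hat, sigma):
    """Total bits to code integer y_hat, using the Gaussian probability mass
    of each bin [v - 0.5, v + 0.5] with a per-element scale."""
    total = 0.0
    for v, s in zip(y_hat, sigma):
        p = gaussian_cdf(v + 0.5, s) - gaussian_cdf(v - 0.5, s)
        total += -np.log2(max(p, 1e-12))
    return total

def h_a(y, block=4):
    """Hypothetical hyper-analysis: mean absolute value of each block of y."""
    return np.abs(y).reshape(-1, block).mean(axis=1)

def h_s(z_hat, block=4, floor=0.5):
    """Hypothetical hyper-synthesis: one scale per block, floored to stay positive."""
    return np.repeat(np.maximum(z_hat, floor), block)

# Toy latent: a "flat" block followed by a "textured" block.
y = np.array([0.2, -0.4, 0.1, 0.3, 12.7, -9.1, 15.4, -11.8])
y_hat = np.round(y)

z = h_a(y)               # hyper-latent summarizing local magnitude
z_hat = np.round(z)      # quantized and sent as side information
sigma_hat = h_s(z_hat)   # scales recovered at the decoder

bits_hyper = bits(y_hat, sigma_hat)
bits_global = bits(y_hat, np.full(y_hat.shape, y_hat.std()))

print("sigma_hat:", sigma_hat)
print("bits with per-block scales:", round(bits_hyper, 2))
print("bits with one global scale:", round(bits_global, 2))
```

The single global scale is too wide for the flat block and spends several bits on each zero, whereas the per-block scales let the flat block be coded with well under one bit per element.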

Reference

边栏推荐

- Interpreting 2021 of middleware: after being reshaped by cloud nativity, it is more difficult to select models

- Force deduction solution summary 875- coco who likes bananas

- Force deduction solution summary 713- subarray with product less than k

- 如何防止商场电气火灾的发生?

- Red's deleted number

- Demand and business model innovation - demand 10- observation and document review

- Transformation of geographical coordinates of wechat official account development

- Demand and business model innovation - demand 9- prototype

- [digital signal processing] correlation function (periodic signal | autocorrelation function of periodic signal)

- oracle之模式对象

猜你喜欢

How to make div 100% page (not screen) height- How to make a div 100% of page (not screen) height?

About 100 to realize the query table? Really? Let's experience the charm of amiya.

博创智能冲刺科创板:年营收11亿 应收账款账面价值3亿

【点云压缩】Sparse Tensor-based Point Cloud Attribute Compression

Comment prévenir les incendies électriques dans les centres commerciaux?

errno: -4091, syscall: ‘listen‘, address: ‘::‘, port: 8000

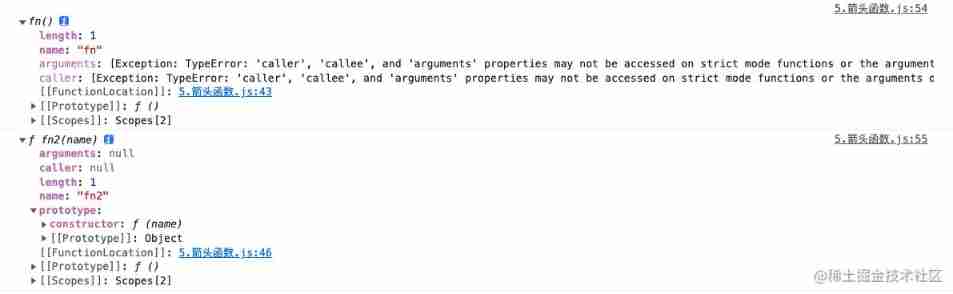

In 2022, don't you know the difference between arrow function and ordinary function?

微积分复习2

Unity3D中DrawCall、Batches、SetPassCall

Acl2022 | DCSR: a sentence aware contrastive learning method for open domain paragraph retrieval

随机推荐

Computer common sense

Unity3d ugui translucent or linear gradient pictures display abnormally (blurred) problem solving (color space mismatch)

Unique paths for leetcode topic resolution

Wave view audio information

Graduation design of fire hydrant monitoring system --- thesis (add the most comprehensive hardware circuit design - > driver design - > Alibaba cloud Internet of things construction - > Android App D

Application of ankery anti shake electric products in a chemical project in Hebei

Ue4\ue5 touch screen touch event: single finger and double finger

Application of residual pressure monitoring system in high-rise civil buildings

The program actively carries out telephone short message alarm, and customizes telephone, short message and nail alarm notifications

Audio and video technology under the trend of full true Internet | Q recommendation

Intel case

Unity3D中DrawCall、Batches、SetPassCall

如何防止商场电气火灾的发生?

errno: -4078, code: ‘ECONNREFUSED‘, syscall: ‘connect‘, address: ‘127.0.0.1‘, port: 3306;postman报错

Force deduction solution summary 933- number of recent requests

Getting started with RPC

What are the solutions across domains?

DbNull if statement - DbNull if statement

Using SSH public key to transfer files

RPC 入门