当前位置:网站首页>ICTCLAS word Lucene 4.9 binding

ICTCLAS word Lucene 4.9 binding

2022-07-05 19:58:00 【Full stack programmer webmaster】

Hello everyone , I meet you again , I'm the king of the whole stack , I've prepared for you today Idea Registration code .

It has always liked the search direction , Although I can't do it . But still maintain a fanatical share . Remember that summer 、 This laboratory 、 This group of people , Everything goes with the wind . On a new journey . I didn't have myself before . Facing the business environment of 73% Technology , I chose precipitation . Society is a big machine , We are just a small screw . We can't tolerate any hesitation .

The product of an era . Will eventually be abandoned by the times . The topic is right. , stay lucene Add a self-defined word breaker , Need to inherit Analyzer class . Realization createComponents Method . Define at the same time Tokenzier Class is used to record the word to be indexed and its position in the article , Here inherit SegmentingTokenizerBase class , It needs to be realized setNextSentence And incrementWord Two methods . among .setNextSentence Set the next sentence , In multiple domains (Filed) Word segmentation index ,setNextSentence Is to set the content of the next domain , Can pass new String(buffer, sentenceStart, sentenceEnd – sentenceStart) obtain . and incrementWord The method is to record each word and its position . One thing to note is to add clearAttributes(), Otherwise, it may appear first position increment must be > 0… error . With ICTCLAS Take word segmentation as an example , Post personal code below , I hope I can help you , deficiencies , Slap more bricks .

import java.io.IOException;

import java.io.Reader;

import java.io.StringReader;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.analysis.TokenStream;

import org.apache.lucene.analysis.Tokenizer;

import org.apache.lucene.analysis.core.LowerCaseFilter;

import org.apache.lucene.analysis.en.PorterStemFilter;

import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

import org.apache.lucene.util.Version;

/**

* Chinese Academy of Sciences word segmentation Inherit Analyzer class . To achieve its tokenStream Method

*

* @author ckm

*

*/

public class ICTCLASAnalyzer extends Analyzer {

/**

* This method mainly transforms documents into lucene Set up the search Introduce the required TokenStream object

*

* @param fieldName

* File name

* @param reader

* The input stream of the file

*/

@Override

protected TokenStreamComponents createComponents(String fieldName, Reader reader) {

try {

System.out.println(fieldName);

final Tokenizer tokenizer = new ICTCLASTokenzier(reader);

TokenStream stream = new PorterStemFilter(tokenizer);

stream = new LowerCaseFilter(Version.LUCENE_4_9, stream);

stream = new PorterStemFilter(stream);

return new TokenStreamComponents(tokenizer,stream);

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

return null;

}

public static void main(String[] args) throws Exception {

Analyzer analyzer = new ICTCLASAnalyzer();

String str = " Hacker technology ";

TokenStream ts = analyzer.tokenStream("field", new StringReader(str));

CharTermAttribute c = ts.addAttribute(CharTermAttribute.class);

ts.reset();

while (ts.incrementToken()) {

System.out.println(c.toString());

}

ts.end();

ts.close();

}

}import java.io.IOException;

import java.io.Reader;

import java.text.BreakIterator;

import java.util.Arrays;

import java.util.Iterator;

import java.util.List;

import java.util.Locale;

import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;

import org.apache.lucene.analysis.util.SegmentingTokenizerBase;

import org.apache.lucene.util.AttributeFactory;

/**

*

* Inherit lucene Of SegmentingTokenizerBase, Overload it setNextSentence And

* incrementWord Fang Law , Record the words to be indexed and their position in the article

*

* @author ckm

*

*/

public class ICTCLASTokenzier extends SegmentingTokenizerBase {

private static final BreakIterator sentenceProto = BreakIterator.getSentenceInstance(Locale.ROOT);

private final CharTermAttribute termAttr= addAttribute(CharTermAttribute.class);// Record the words that need to be indexed

private final OffsetAttribute offAttr = addAttribute(OffsetAttribute.class);// Record the position of the words to be indexed in the article

private ICTCLASDelegate ictclas;// The entrusted object of the word segmentation system

private Iterator<String> words;// Words formed after word segmentation

private int offSet= 0;// Record the end position of the last word element

/**

* Constructors

*

* @param segmented The result of word segmentation

* @throws IOException

*/

protected ICTCLASTokenzier(Reader reader) throws IOException {

this(DEFAULT_TOKEN_ATTRIBUTE_FACTORY, reader);

}

protected ICTCLASTokenzier(AttributeFactory factory, Reader reader) throws IOException {

super(factory, reader,sentenceProto);

ictclas = ICTCLASDelegate.getDelegate();

}

@Override

protected void setNextSentence(int sentenceStart, int sentenceEnd) {

// TODO Auto-generated method stub

String sentence = new String(buffer, sentenceStart, sentenceEnd - sentenceStart);

String result=ictclas.process(sentence);

String[] array = result.split("\\s");

if(array!=null){

List<String> list = Arrays.asList(array);

words=list.iterator();

}

offSet= 0;

}

@Override

protected boolean incrementWord() {

// TODO Auto-generated method stub

if (words == null || !words.hasNext()) {

return false;

} else {

String t = words.next();

while(t.equals("")||StopWordFilter.filter(t)){ // This is mainly to filter white space characters and stop words

//StopWordFilter Define a stop phrase filter class for yourself

if (t.length() == 0)

offSet++;

else

offSet+= t.length();

t =words.next();

}

if (!t.equals("") && !StopWordFilter.filter(t)) {

clearAttributes();

termAttr.copyBuffer(t.toCharArray(), 0, t.length());

offAttr.setOffset(correctOffset(offSet), correctOffset(offSet=offSet+ t.length()));

return true;

}

return false;

}

}

/**

* Reset

*/

public void reset() throws IOException {

super.reset();

offSet= 0;

}

public static void main(String[] args) throws IOException {

String content = " Bao jianfeng from the sharpen out , Plum blossom fragrance from the bitter cold !"; String seg = ICTCLASDelegate.getDelegate().process(content); //ICTCLASTokenzier test = new ICTCLASTokenzier(seg); //while (test.incrementToken()); } }import java.io.File;

import java.nio.ByteBuffer;

import java.nio.CharBuffer;

import java.nio.charset.Charset;

import ICTCLAS.I3S.AC.ICTCLAS50;

/**

* Chinese Academy of Sciences word segmentation system agent class

*

* @author ckm

*

*/

public class ICTCLASDelegate {

private static final String userDict = "userDict.txt";// User dictionary

private final static Charset charset = Charset.forName("gb2312");// Default encoding format

private static String ictclasPath =System.getProperty("user.dir");

private static String dirConfigurate = "ICTCLASConf";// The name of the folder where the configuration file is located

private static String configurate = ictclasPath + File.separator+ dirConfigurate;// The absolute path of the folder where the configuration file is located

private static int wordLabel = 2;// Part of speech tagging type ( Peking University secondary annotation set )

private static ICTCLAS50 ictclas;// Chinese Academy of Sciences word segmentation system jni Interface object

private static ICTCLASDelegate instance = null;

private ICTCLASDelegate(){ }

/**

* initialization ICTCLAS50 object

*

* @return ICTCLAS50 Whether the object initialization is successful

*/

public boolean init() {

ictclas = new ICTCLAS50();

boolean bool = ictclas.ICTCLAS_Init(configurate

.getBytes(charset));

if (bool == false) {

System.out.println("Init Fail!");

return false;

}

// Set part of speech tagging set (0 Calculate the secondary dimension set .1 Calculate the first level dimension set ,2 Peking University secondary annotation set ,3 Peking University first level annotation set )

ictclas.ICTCLAS_SetPOSmap(wordLabel);

importUserDictFile(configurate + File.separator + userDict);// Import user dictionary

ictclas.ICTCLAS_SaveTheUsrDic();// Save user dictionary

return true;

}

/**

* Convert the encoding format to the type recognized by the word segmentation system

*

* @param charset

* Coding format

* @return The number corresponding to the encoding format

**/

public static int getECode(Charset charset) {

String name = charset.name();

if (name.equalsIgnoreCase("ascii"))

return 1;

if (name.equalsIgnoreCase("gb2312"))

return 2;

if (name.equalsIgnoreCase("gbk"))

return 2;

if (name.equalsIgnoreCase("utf8"))

return 3;

if (name.equalsIgnoreCase("utf-8"))

return 3;

if (name.equalsIgnoreCase("big5"))

return 4;

return 0;

}

/**

* The function of this method is to import user dictionary

*

* @param path

* Absolute path of user dictionary

* @return Returns the number of words in the imported dictionary

*/

public int importUserDictFile(String path) {

System.out.println(" Import user dictionary ");

return ictclas.ICTCLAS_ImportUserDictFile(

path.getBytes(charset), getECode(charset));

}

/**

* The function of this method is to segment the string

*

* @param source

* The source data of the word to be segmented

* @return The result of word segmentation

*/

public String process(String source) {

return process(source.getBytes(charset));

}

public String process(char[] chars){

CharBuffer cb = CharBuffer.allocate (chars.length);

cb.put (chars);

cb.flip ();

ByteBuffer bb = charset.encode (cb);

return process(bb.array());

}

public String process(byte[] bytes){

if(bytes==null||bytes.length<1)

return null;

byte nativeBytes[] = ictclas.ICTCLAS_ParagraphProcess(bytes, 2, 0);

String nativeStr = new String(nativeBytes, 0,

nativeBytes.length-1, charset);

return nativeStr;

}

/**

* Get the word segmentation system proxy object

*

* @return Word segmentation system proxy object

*/

public static ICTCLASDelegate getDelegate() {

if (instance == null) {

synchronized (ICTCLASDelegate.class) {

instance = new ICTCLASDelegate();

instance.init();

}

}

return instance;

}

/**

* Exit the word segmentation system

*

* @return Whether the return operation is successful

*/

public boolean exit() {

return ictclas.ICTCLAS_Exit();

}

public static void main(String[] args) {

String str=" Married monks and unmarried monks ";

ICTCLASDelegate id = ICTCLASDelegate.getDelegate();

String result = id.process(str.toCharArray());

System.out.println(result.replaceAll(" ", "-"));

}

}import java.util.Iterator;

import java.util.Set;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

/**

* Disable word filter

*

* @author ckm

*

*/

public class StopWordFilter {

private static Set<String> chineseStopWords = null;// Chinese stop words

private static Set<String> englishStopWords = null;// English stop words

static {

init();

}

/**

* Initialize the Chinese and English stop word set

*/

public static void init() {

LoadStopWords lsw = new LoadStopWords();

chineseStopWords = lsw.getChineseStopWords();

englishStopWords = lsw.getEnglishStopWords();

}

/**

* infer keyword And infer whether it is a stop word Be careful : Temporarily only consider Chinese , english . A mixture of Chinese and English , Medium mixed , English numbers mix these five types . Chinese and English blend ,

* Medium mixed , English number mixing has not been specifically discontinued Thesaurus or grammatical rules distinguish them

*

* @param word

* keyword

* @return true It means stop words

*/

public static boolean filter(String word) {

Pattern chinese = Pattern.compile("^[\u4e00-\u9fa5]+$");// Chinese matching

Matcher m1 = chinese.matcher(word);

if (m1.find())

return chineseFilter(word);

Pattern english = Pattern.compile("^[A-Za-z]+$");// English matching

Matcher m2 = english.matcher(word);

if (m2.find())

return englishFilter(word);

Pattern chineseDigit = Pattern.compile("^[\u4e00-\u9fa50-9]+$");// Median match

Matcher m3 = chineseDigit.matcher(word);

if (m3.find())

return chineseDigitFilter(word);

Pattern englishDigit = Pattern.compile("^[A-Za-z0-9]+$");// English matching

Matcher m4 = englishDigit.matcher(word);

if (m4.find())

return englishDigitFilter(word);

Pattern englishChinese = Pattern.compile("^[A-Za-z\u4e00-\u9fa5]+$");// Chinese English match , This must be after Chinese matching and English matching

Matcher m5 = englishChinese.matcher(word);

if (m5.find())

return englishChineseFilter(word);

return true;

}

/**

* infer keyword Is it a Chinese stop word

*

* @param word

* keyword

* @return true It means stop words

*/

public static boolean chineseFilter(String word) {

// System.out.println(" Inference of Chinese stop words ");

if (chineseStopWords == null || chineseStopWords.size() == 0)

return false;

Iterator<String> iterator = chineseStopWords.iterator();

while (iterator.hasNext()) {

if (iterator.next().equals(word))

return true;

}

return false;

}

/**

* infer keyword Is it an English stop word

*

* @param word

* keyword

* @return true It means stop words

*/

public static boolean englishFilter(String word) {

// System.out.println(" Inference of English stop words ");

if (word.length() <= 2)

return true;

if (englishStopWords == null || englishStopWords.size() == 0)

return false;

Iterator<String> iterator = englishStopWords.iterator();

while (iterator.hasNext()) {

if (iterator.next().equals(word))

return true;

}

return false;

}

/**

* infer keyword Is it an English stop word

*

* @param word

* keyword

* @return true It means stop words

*/

public static boolean englishDigitFilter(String word) {

return false;

}

/**

* infer keyword Is it a median stop word

*

* @param word

* keyword

* @return true It means stop words

*/

public static boolean chineseDigitFilter(String word) {

return false;

}

/**

* infer keyword Is it a British Chinese stop word

*

* @param word

* keyword

* @return true It means stop words

*/

public static boolean englishChineseFilter(String word) {

return false;

}

public static void main(String[] args) {

/*

* Iterator<String> iterator=

* StopWordFilter.chineseStopWords.iterator(); int n=0;

* while(iterator.hasNext()){ System.out.println(iterator.next()); n++;

* } System.out.println(" Total words :"+n);

*/

boolean bool = StopWordFilter.filter(" sword ");

System.out.println(bool);

}

}import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStream;

import java.io.InputStreamReader;

import java.util.HashSet;

import java.util.Iterator;

import java.util.Set;

/**

* Load stop word file

*

* @author ckm

*

*/

public class LoadStopWords {

private Set<String> chineseStopWords = null;// Chinese stop words

private Set<String> englishStopWords = null;// English stop words

/**

* Get Chinese stop word set

*

* @return Chinese stop words Set<String> type

*/

public Set<String> getChineseStopWords() {

return chineseStopWords;

}

/**

* Set Chinese stop word set

*

* @param chineseStopWords

* Chinese stop words Set<String> type

*/

public void setChineseStopWords(Set<String> chineseStopWords) {

this.chineseStopWords = chineseStopWords;

}

/**

* Get English stop word set

*

* @return English stop words Set<String> type

*/

public Set<String> getEnglishStopWords() {

return englishStopWords;

}

/**

* Set English stop word set

*

* @param englishStopWords

* English stop words Set<String> type

*/

public void setEnglishStopWords(Set<String> englishStopWords) {

this.englishStopWords = englishStopWords;

}

/**

* Load inactive thesaurus

*/

public LoadStopWords() {

chineseStopWords = loadStopWords(this.getClass().getResourceAsStream(

"ChineseStopWords.txt"));

englishStopWords = loadStopWords(this.getClass().getResourceAsStream(

"EnglishStopWords.txt"));

}

/**

* Load stop words from the stop words file , The stop phrase file is common GBK Encoded text file , Every line Is a stop word . Gaze use “//”, Stop words contain Chinese punctuation marks ,

* Chinese space , And words that are used too often and have little meaning to the index .

*

* @param input

* Stop word file stream

* @return Composed of stop words HashSet

*/

public static Set<String> loadStopWords(InputStream input) {

String line;

Set<String> stopWords = new HashSet<String>();

try {

BufferedReader br = new BufferedReader(new InputStreamReader(input,

"GBK"));

while ((line = br.readLine()) != null) {

if (line.indexOf("//") != -1) {

line = line.substring(0, line.indexOf("//"));

}

line = line.trim();

if (line.length() != 0)

stopWords.add(line.toLowerCase());

}

br.close();

} catch (IOException e) {

System.err.println(" You cannot open the inactive thesaurus !.");

}

return stopWords;

}

public static void main(String[] args) {

LoadStopWords lsw = new LoadStopWords();

Iterator<String> iterator = lsw.getEnglishStopWords().iterator();

int n = 0;

while (iterator.hasNext()) {

System.out.println(iterator.next());

n++;

}

System.out.println(" Total words :" + n);

}

}We need it here ChineseStopWords.txt And EnglishStopWords.txt Both China and Britain store stop words , ad locum , We don't know how to upload , Yes ICTCLAS Basic files .

Download the complete project :http://download.csdn.net/detail/km1218/7754907

Publisher : Full stack programmer stack length , Reprint please indicate the source :https://javaforall.cn/117733.html Link to the original text :https://javaforall.cn

边栏推荐

- MySql的root密码忘记该怎么找回

- Jvmrandom cannot set seeds | problem tracing | source code tracing

- 【obs】QString的UTF-8中文转换到blog打印 UTF-8 char*

- Two pits exported using easyexcel template (map empty data columns are disordered and nested objects are not supported)

- 【C语言】字符串函数及模拟实现strlen&&strcpy&&strcat&&strcmp

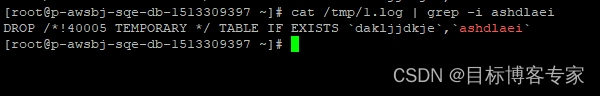

- Debezium series: record the messages parsed by debezium and the solutions after the MariaDB database deletes multiple temporary tables

- DP:树DP

- Common operators and operator priority

- S7-200smart uses V90 Modbus communication control library to control the specific methods and steps of V90 servo

- Securerandom things | true and false random numbers

猜你喜欢

S7-200smart uses V90 Modbus communication control library to control the specific methods and steps of V90 servo

力扣 729. 我的日程安排表 I

微信小程序正则表达式提取链接

Go language | 02 for loop and the use of common functions

【C语言】字符串函数及模拟实现strlen&&strcpy&&strcat&&strcmp

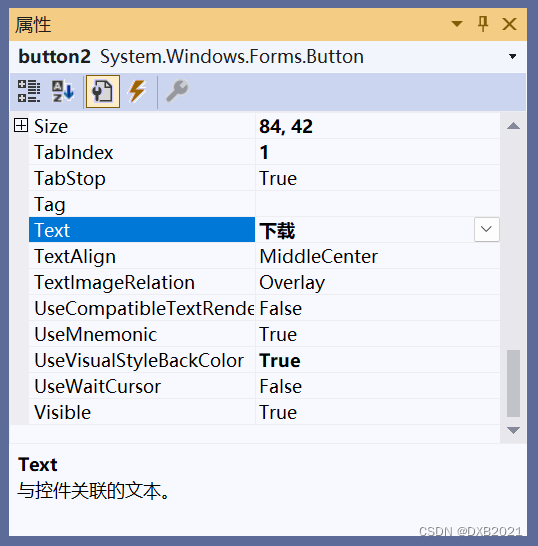

C application interface development foundation - form control (5) - grouping control

leetcode刷题:二叉树12(二叉树的所有路径)

Debezium series: record the messages parsed by debezium and the solutions after the MariaDB database deletes multiple temporary tables

Zhongang Mining: analysis of the current market supply situation of the global fluorite industry in 2022

Build your own website (16)

随机推荐

Go language learning tutorial (XV)

Concept and syntax of function

建议收藏,我的腾讯Android面试经历分享

《乔布斯传》英文原著重点词汇笔记(十二)【 chapter ten & eleven】

【obs】libobs-winrt :CreateDispatcherQueueController

leetcode刷题:二叉树12(二叉树的所有路径)

third-party dynamic library (libcudnn.so) that Paddle depends on is not configured correctl

Android interview classic, 2022 Android interview written examination summary

How about testing outsourcing companies?

[hard core dry goods] which company is better in data analysis? Choose pandas or SQL

通配符选择器

acm入门day1

2023年深圳市绿色低碳产业扶持计划申报指南

1:引文;

Do you know several assertion methods commonly used by JMeter?

浅浅的谈一下ThreadLocalInsecureRandom

third-party dynamic library (libcudnn.so) that Paddle depends on is not configured correctl

Fundamentals of deep learning convolutional neural network (CNN)

[FAQ] summary of common causes and solutions of Huawei account service error 907135701

CADD课程学习(7)-- 模拟靶点和小分子相互作用 (半柔性对接 AutoDock)