
Spark Troubleshooting Notes

2022-07-27 15:37:00 【wankunde】


ShutdownHook Causes Spark Driver OOM

Discovering and locating the problem

An online Spark program ran without problems when processing one day of data per batch, but ran out of memory when processing many days in a single run. A first look at the heap dump showed a large amount of heap memory allocated by Netty that had not been freed, and further inspection showed that the SparkSession objects were not being garbage collected.

So that the runs for different days do not interfere with each other, the program repeatedly creates a new SparkSession and then stops it. Checking the code that stops the SparkSession confirmed that the ThreadLocal variable had been removed.

// Project code that cleans up the SparkSession

def stop() {

logDebug("Clear SparkSession and SparkContext")

if (sqlContext != null) {

sqlContext = null

}

if (spark != null) {

spark.stop()

spark = null

}

SparkSession.clearActiveSession

}

// SparkSession Code

/** The active SparkSession for the current thread. */

private val activeThreadSession = new InheritableThreadLocal[SparkSession]

/**
 * Clears the active SparkSession for current thread. Subsequent calls to getOrCreate will
 * return the first created context instead of a thread-local override.
 *
 * @since 2.0.0
 */

def clearActiveSession(): Unit = {

activeThreadSession.remove()

}

Going back to the heap dump and analyzing the Paths from GC Root, I found two strange things. First, the reference is held by a thread created in an inner class of ShutdownHookManager, which is neither a user-started thread nor a Spark-internal thread. Second, the state of this thread is NEW.

inheritableThreadLocals of org.apache.hive.common.util.ShutdownHookManager$1 "Thread-28" tid=68 [NEW] 94184 120

Hive's ShutdownHookManager is a utility class. In its static initializer it registers a shutdown hook thread with the JVM (java.lang.ApplicationShutdownHooks). Users can add their own cleanup tasks to this hook, and when the JVM exits they are executed in order of their registered priority.

// org.apache.hive.common.util.ShutdownHookManager

static {

MGR.addShutdownHookInternal(DELETE_ON_EXIT_HOOK, -1);

Runtime.getRuntime().addShutdownHook(

new Thread() {

@Override

public void run() {

MGR.shutdownInProgress.set(true);

for (Runnable hook : getShutdownHooksInOrder()) {

try {

hook.run();

} catch (Throwable ex) {

LOG.warn("ShutdownHook '" + hook.getClass().getSimpleName() +

"' failed, " + ex.toString(), ex);

}

}

}

}

);

}

The cause of the problem is now clear:

- The user thread instantiates a SparkSession. The SparkSession object is saved in the current thread's activeThreadSession.

- The program instantiates a new ClassLoader and uses it to load the org.apache.hive.common.util.ShutdownHookManager class. The ShutdownHookManager class runs its static initializer, instantiates the cleanup thread, and registers it with the JVM. This thread inherits the parent thread's activeThreadSession value.

- The user releases its reference to the SparkSession object, but the ShutdownHookManager hook thread still holds a reference to it, which eventually causes the memory overflow.
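The inheritance step in this chain can be reproduced with plain JDK classes. The sketch below is only an illustration, not Spark or Hive code: `InheritedThreadLocalDemo`, `activeSession`, and the string payload are hypothetical stand-ins for `SparkSession.activeThreadSession` and the session object. It shows that a thread copies its parent's inheritable thread-local values when it is constructed, and keeps them even if the parent later calls `remove()`.

```scala
import java.util.concurrent.atomic.AtomicReference

object InheritedThreadLocalDemo {
  // Hypothetical stand-in for SparkSession.activeThreadSession.
  val activeSession = new InheritableThreadLocal[String]

  /** Returns the child's state before it is started and the value the child observed. */
  def valueSeenByChildAfterParentClears(): (Thread.State, String) = {
    activeSession.set("session-1")

    // Constructing the thread copies the parent's inheritable thread-locals.
    val seen = new AtomicReference[String]()
    val hook = new Thread(new Runnable {
      override def run(): Unit = seen.set(activeSession.get())
    })

    // The parent clears its own entry; the child's copy is not affected.
    activeSession.remove()
    val stateBeforeStart = hook.getState

    // Start the thread only to observe what it inherited.
    hook.start()
    hook.join()
    (stateBeforeStart, seen.get())
  }
}
```

Calling `InheritedThreadLocalDemo.valueSeenByChildAfterParentClears()` should return the state `NEW` together with the value `session-1`. A registered shutdown hook stays in the `NEW` state until the JVM exits, so whatever it inherited stays reachable for that whole time.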

Solution

The SparkSession class uses an InheritableThreadLocal to reference the SparkSession, which makes it convenient to obtain the SparkSession and operate on it from different parts of the program and from different threads. It is a little embarrassing that this is also what leads to the memory leak.

I first tried modifying the Spark code so that whenever a ClassLoader loads the org.apache.hive.common.util.ShutdownHookManager class, the SparkSession object is not passed to the child thread. This did not work, because Hive's own code also creates many new ClassLoader objects and reloads org.apache.hive.common.util.ShutdownHookManager, which is outside Spark's control.

So I worked around it on the shutdown hook side instead: use reflection to clear the inherited ThreadLocal objects of every registered hook thread. After this change the system returned to normal.

/** Cleans up and shuts down the Spark SQL environments. */

def stop() {

logDebug("Clear SparkSession and SparkContext")

catalogEventListener.stop()

if (sqlContext != null) {

sqlContext = null

}

if (spark != null) {

spark.stop()

spark = null

}

SparkSession.clearActiveSession

val clazz = Class.forName("java.lang.ApplicationShutdownHooks")

val field = clazz.getDeclaredField("hooks")

field.setAccessible(true)

val inheritableThreadLocalsField = classOf[Thread].getDeclaredField("inheritableThreadLocals")

inheritableThreadLocalsField.setAccessible(true)

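// `hooks` is a static field, so no receiver is needed; `asScala` requires `import scala.collection.JavaConverters._`

val hooks = field.get(null).asInstanceOf[java.util.IdentityHashMap[Thread, Thread]].asScala

// Clear the inherited thread-locals of every registered hook thread so they no longer reference the SparkSession

hooks.keys.foreach(inheritableThreadLocalsField.set(_, null))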

}

FileSourceScanExec: Problem with the Parquet File Split Strategy

Problem description:

A Parquet file of a dozen or so MB is split into several tasks that run concurrently, but only one of them actually reads data. The remaining tasks read nothing.

Cause: although Spark splits the Parquet file, the Parquet reader uses each split's start and length to decide which RowGroups to read. A split reads a RowGroup only if the RowGroup's midpoint falls inside the split. Otherwise the split reads no data.
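The midpoint rule can be sketched in a few lines. This is a simplified model rather than the Parquet reader's actual code: `RowGroupSplitDemo`, `RowGroup`, and `rowGroupsForSplit` are hypothetical names, and a row group is reduced to its start offset and size in bytes.

```scala
object RowGroupSplitDemo {
  /** Simplified row group: byte offset in the file and size in bytes (hypothetical model). */
  final case class RowGroup(start: Long, size: Long) {
    def midpoint: Long = start + size / 2
  }

  /** A split reads a row group only when the midpoint lies in [start, start + length). */
  def rowGroupsForSplit(groups: Seq[RowGroup], start: Long, length: Long): Seq[RowGroup] =
    groups.filter(g => g.midpoint >= start && g.midpoint < start + length)
}
```

As an illustration, assume a 13440409-byte file that holds a single row group starting at offset 4 with a size of 13000000 bytes (the row group layout is an assumption, not taken from the real file). With 4194304-byte splits, the midpoint 6500004 falls only in the split starting at 4194304, so that split reads the whole row group and the other three read nothing.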

Debug log

21:16:10.180 main INFO org.apache.spark.sql.execution.FileSourceScanExec: Planning scan with bin packing, max size: 4194304 bytes, open cost is considered as scanning 4194304 bytes.

21:16:10.180 main INFO org.apache.spark.sql.execution.FileSourceScanExec: defaultMaxSplitBytes : 134217728

21:16:10.180 main INFO org.apache.spark.sql.execution.FileSourceScanExec: openCostInBytes : 4194304

21:16:10.180 main INFO org.apache.spark.sql.execution.FileSourceScanExec: default.parallelism : None

21:16:10.180 main INFO org.apache.spark.sql.execution.FileSourceScanExec: defaultParallelism : 53

21:16:10.180 main INFO org.apache.spark.sql.execution.FileSourceScanExec: totalBytes : 98047688

21:16:10.180 main INFO org.apache.spark.sql.execution.FileSourceScanExec: bytesPerCore : 1849956

21:16:10.180 main INFO org.apache.spark.sql.execution.FileSourceScanExec: maxSplitBytes : 4194304

21:16:10.180 main INFO org.apache.spark.sql.execution.FileSourceScanExec: splitFiles : 4194304

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(0,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200606/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 0-4194304, partition values: [20200606]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(1,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200606/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 4194304-8388608, partition values: [20200606]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(2,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200606/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 8388608-12582912, partition values: [20200606]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(3,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200607/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 0-4194304, partition values: [20200607]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(4,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200607/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 4194304-8388608, partition values: [20200607]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(5,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200608/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 0-4194304, partition values: [20200608]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(6,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200608/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 4194304-8388608, partition values: [20200608]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(7,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200608/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 8388608-12582912, partition values: [20200608]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(8,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200608/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 12582912-16777216, partition values: [20200608]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(9,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200609/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 0-4194304, partition values: [20200609]))

21:16:10.189 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(10,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200609/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 4194304-8388608, partition values: [20200609]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(11,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200609/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 8388608-12582912, partition values: [20200609]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(12,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200609/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 12582912-16777216, partition values: [20200609]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(13,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200610/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 0-4194304, partition values: [20200610]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(14,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200610/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 4194304-8388608, partition values: [20200610]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(15,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200610/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 8388608-12582912, partition values: [20200610]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(16,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200610/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 12582912-16777216, partition values: [20200610]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(17,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200608/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 16777216-19269825, partition values: [20200608]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(18,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200609/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 16777216-19067647, partition values: [20200609]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(19,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200606/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 12582912-13440409, partition values: [20200606]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(20,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200610/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 16777216-16864703, partition values: [20200610]))

21:16:10.190 main INFO org.apache.spark.sql.execution.FileSourceScanExec: generate new FilePartition : FilePartition(21,WrappedArray(path: hdfs://nameservice/data/hive/pdd/pdd_bot_marketing/dws_group_reminder_detail/dt=20200607/part-00000-5c7f7d21-a16a-4aad-893c-eb9bb4bdcdad.c000, range: 8388608-8433584, partition values: [20200607]))
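The 4194304-byte split size in the log above is consistent with the rule `min(defaultMaxSplitBytes, max(openCostInBytes, totalBytes / parallelism))`, which, to the best of my knowledge, is how this Spark version derives `maxSplitBytes`. The sketch below only re-does that arithmetic with the logged values. `SplitSizeDemo` is a hypothetical helper, not a Spark API.

```scala
object SplitSizeDemo {
  /** Recomputes maxSplitBytes from the values printed in the debug log. */
  def maxSplitBytes(
      defaultMaxSplitBytes: Long,
      openCostInBytes: Long,
      totalBytes: Long,
      parallelism: Int): Long = {
    // Integer division, matching the logged bytesPerCore.
    val bytesPerCore = totalBytes / parallelism
    math.min(defaultMaxSplitBytes, math.max(openCostInBytes, bytesPerCore))
  }
}
```

With the logged values, `SplitSizeDemo.maxSplitBytes(134217728L, 4194304L, 98047688L, 53)` gives a `bytesPerCore` of 1849956 and a result of 4194304. Under this formula, high parallelism relative to the total input size pushes the split size down to `openCostInBytes`, which can be far smaller than a single RowGroup.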

Relevant troubleshooting logs

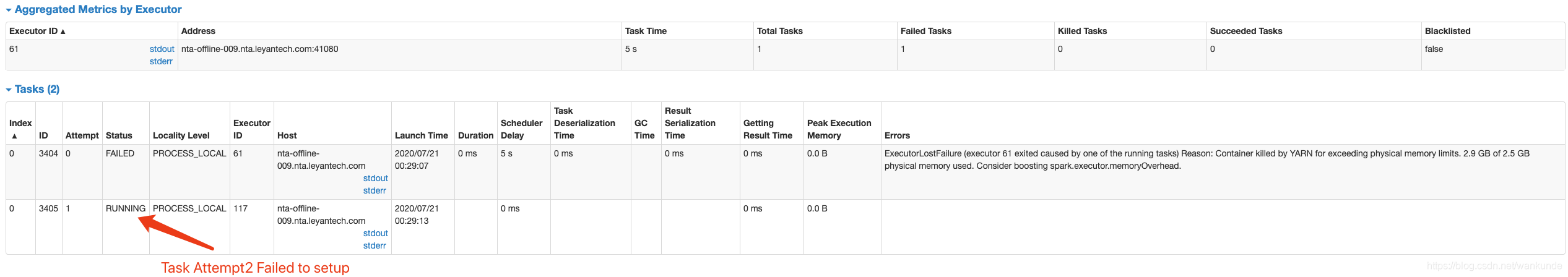

Dynamic Partition Insert Job Fails

Error analysis

This is a SQL job that performs a dynamic partition insert into a table. After the first task attempt failed, the second attempt found at startup that the first attempt's output file still existed, so the second attempt failed to start and the job exited immediately.

The root cause is a problem in how task output files are committed for dynamic partition insert jobs. This has been reported to the community as SPARK-32395.
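The collision itself can be imitated on a local filesystem. The sketch below is only an analogy and does not use HDFS or Spark's commit protocol: `RetryCollisionDemo` and the file name are hypothetical. It models two attempts that write to the same path without overwriting, where the first attempt leaves its file behind.

```scala
import java.nio.file.{FileAlreadyExistsException, Files, Path}

object RetryCollisionDemo {
  /** One simulated task attempt: create the output file, failing if it already exists. */
  def attemptWrite(output: Path): Either[String, Path] =
    try Right(Files.createFile(output))
    catch {
      case e: FileAlreadyExistsException => Left(s"already exists: ${e.getFile}")
    }

  /** Returns whether the first and the second attempt succeeded. */
  def run(): (Boolean, Boolean) = {
    val staging = Files.createTempDirectory("spark-staging-demo")
    // Both attempts target the same path because the name carries no attempt ID.
    val out = staging.resolve("part-00000.c000.snappy.parquet")
    val first = attemptWrite(out)  // attempt 1 creates the file and is killed before cleanup
    val second = attemptWrite(out) // attempt 2 fails because the leftover file exists
    (first.isRight, second.isRight)
  }
}
```

`RetryCollisionDemo.run()` returns `(true, false)`. A path that includes the attempt number, or cleanup of the failed attempt's file, would avoid the collision in this toy model.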

Task attempt 1 log

00:29:10.344 Executor task launch worker for task 3404 INFO org.apache.hadoop.io.compress.CodecPool: Got brand-new compressor [.snappy]

00:29:12.192 Executor task launch worker for task 3404 INFO org.apache.parquet.hadoop.InternalParquetRecordWriter: Flushing mem columnStore to file. allocated memory: 29701024

00:29:12.474 SIGTERM handler ERROR org.apache.spark.executor.CoarseGrainedExecutorBackend: RECEIVED SIGNAL TERM

00:29:12.589 Executor task launch worker for task 3404 ERROR org.apache.spark.util.Utils: Aborting task

java.io.IOException: can not write PageHeader(type:DICTIONARY_PAGE, uncompressed_page_size:7498, compressed_page_size:5643, dictionary_page_header:DictionaryPageHeader(num_values:625, encoding:PLAIN_DICTIONARY))

at org.apache.parquet.format.Util.write(Util.java:224)

at org.apache.parquet.format.Util.writePageHeader(Util.java:61)

at org.apache.parquet.format.converter.ParquetMetadataConverter.writeDictionaryPageHeader(ParquetMetadataConverter.java:1125)

at org.apache.parquet.hadoop.ParquetFileWriter.writeDictionaryPage(ParquetFileWriter.java:336)

at org.apache.parquet.hadoop.ColumnChunkPageWriteStore$ColumnChunkPageWriter.writeToFileWriter(ColumnChunkPageWriteStore.java:198)

Task attempt 2 log

00:29:16.512 Executor task launch worker for task 3405 ERROR org.apache.spark.util.Utils: Aborting task

org.apache.hadoop.fs.FileAlreadyExistsException: /data/hive/pdd/pdd_bot_marketing/app_order_reminder_dispatch_stats/.spark-staging-f6716d57-31eb-44f0-9f55-dfc0939e1fde/dt=20200716/part-00000-f6716d57-31eb-44f0-9f55-dfc0939e1fde.c000.snappy.parquet for client 192.168.127.9 already exists

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInternal(FSNamesystem.java:2938)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2827)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2712)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:604)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.create(AuthorizationProviderProxyClientProtocol.java:115)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:412)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2226)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2222)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1920)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2220)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:73)

at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:2134)

at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1781)

at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1705)

at org.apache.hadoop.hdfs.DistributedFileSystem$7.doCall(DistributedFileSystem.java:437)

at org.apache.hadoop.hdfs.DistributedFileSystem$7.doCall(DistributedFileSystem.java:433)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:433)

at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:374)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:926)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:907)

at org.apache.parquet.hadoop.util.HadoopOutputFile.create(HadoopOutputFile.java:74)

at org.apache.parquet.hadoop.ParquetFileWriter.<init>(ParquetFileWriter.java:248)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:390)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:349)

at org.apache.spark.sql.execution.datasources.parquet.ParquetOutputWriter.<init>(ParquetOutputWriter.scala:37)

at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anon$1.newInstance(ParquetFileFormat.scala:150)

at org.apache.spark.sql.execution.datasources.DynamicPartitionDataWriter.newOutputWriter(FileFormatDataWriter.scala:241)

at org.apache.spark.sql.execution.datasources.DynamicPartitionDataWriter.write(FileFormatDataWriter.scala:262)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$executeTask$1(FileFormatWriter.scala:273)

at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1411)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.executeTask(FileFormatWriter.scala:281)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.$anonfun$write$15(FileFormatWriter.scala:205)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:127)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:444)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1377)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:447)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

.....

; not retrying

00:29:16.583 main ERROR FileFormatWriter: Aborting job 6de09c5c-b425-4d01-b5c0-1aa0a6e3f58e.

org.apache.spark.SparkException: Job aborted due to stage failure: Task 0.1 in stage 162.0 (TID 3405) can not write to output file: org.apache.hadoop.fs.FileAlreadyExistsException: /data/hive/pdd/pdd_bot_marketing/app_order_reminder_dispatch_stats/.spark-staging-f6716d57-31eb-44f0-9f55-dfc0939e1fde/dt=20200716/part-00000-f6716d57-31eb-44f0-9f55-dfc0939e1fde.c000.snappy.parquet for client 192.168.127.9 already exists

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInternal(FSNamesystem.java:2938)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2827)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2712)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:604)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.create(AuthorizationProviderProxyClientProtocol.java:115)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:412)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2226)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2222)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1920)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2220)

at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:2023)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2(DAGScheduler.scala:1972)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2$adapted(DAGScheduler.scala:1971)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1971)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1(DAGScheduler.scala:950)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1$adapted(DAGScheduler.scala:950)

at scala.Option.foreach(Option.scala:407)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:950)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2203)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2152)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2203)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2152)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2141)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:752)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2093)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.write(FileFormatWriter.scala:195)

at org.apache.spark.sql.execution.datasources.InsertIntoHadoopFsRelationCommand.run(InsertIntoHadoopFsRelationCommand.scala:178)

at org.apache.spark.sql.execution.command.DataWritingCommandExec.sideEffectResult$lzycompute(commands.scala:108)

at org.apache.spark.sql.execution.command.DataWritingCommandExec.sideEffectResult(commands.scala:106)

at org.apache.spark.sql.execution.command.DataWritingCommandExec.executeCollect(commands.scala:120)

at org.apache.spark.sql.Dataset.$anonfun$logicalPlan$1(Dataset.scala:229)

at org.apache.spark.sql.Dataset.$anonfun$withAction$1(Dataset.scala:3616)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$5(SQLExecution.scala:100)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:160)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$1(SQLExecution.scala:87)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:763)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:64)

at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3614)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:229)

at org.apache.spark.sql.Dataset$.$anonfun$ofRows$2(Dataset.scala:100)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:763)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:97)

at org.apache.spark.sql.SparkSession.$anonfun$sql$1(SparkSession.scala:606)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:763)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:601)

at org.apache.spark.sql.SparkSqlRunner.run(SparkSqlRunner.scala:34)

at com.leyan.insight.Monitor$.executeSql$1(Monitor.scala:196)

at com.leyan.insight.Monitor$.$anonfun$main$20(Monitor.scala:204)

at com.leyan.insight.Monitor$.$anonfun$main$20$adapted(Monitor.scala:183)

at scala.collection.IndexedSeqOptimized.foreach(IndexedSeqOptimized.scala:36)

at scala.collection.IndexedSeqOptimized.foreach$(IndexedSeqOptimized.scala:33)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:198)

at com.leyan.insight.Monitor$.$anonfun$main$13(Monitor.scala:183)

at com.leyan.insight.Monitor$.$anonfun$main$13$adapted(Monitor.scala:122)

at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)
