当前位置:网站首页>How powerful are spectral graph neural networks

How powerful are spectral graph neural networks

2022-06-21 06:27:00 【w55100】

Preface

title It's a copy of another famous article before "how powerful …"

The paper constructs linear GNN To 1-WL Equivalence relation under certain conditions .

For reasons of personal interest, I don't care much about the model part .

How Powerful are Spectral Graph Neural Networks

https://arxiv.org/abs/2205.11172v1

ICML2022

One 、 Basic theory

For now spectral My research is actually , Basically, they all remove activation Of .

Because with activation Words , Many properties are difficult to deduce a convergent result in chain form .

Of course, you might give an example of a big guy with a math background subspace My article is with activation Certified together .

But other articles , from optimization The departure was good ,polynomial Let's just say , Basically, they tend to get rid of it on the mainstream stage .( What I understand is for mathematical elegance)

and , Here's the thing to watch out for , Even that article , It can only be applied to activation === ReLU , There is no way to extend it to other activation Of .

Let's say so ,“ If activation Can be merge To theoretical Take some with you , Otherwise, discard ” It is already a very natural thing .

This article ICML2022 Of paper The starting point of is roughly here .

Since everyone's theory is based on the analysis of how to get rid of it , Why do you have to bring it with you in practice .( In the past, we could put this gap, Explained as the difference between theory and practice , Fool the past with the ideal hypothesis of theoretical research )

Can we simply prove that there is no activation It's good enough , I don't want to practice at all ?

This is the starting point of my understanding of the full text .

The study did not activation The upper limit of expression ability .

Yes, of course , The words used in the author's original text are linear GNN.

It means to get rid of non-linear function Of GNN.

use g ( L ^ ) g(\hat{L}) g(L^) Express arbitrarily spectral filter.

linear GNN = g ( L ^ ) X W \text{linear GNN} =g(\hat{L})XW linear GNN=g(L^)XW

as everyone knows , arbitrarily linear GNN from filter and transformation Two parts .

Condition

- one-dimensional prediction

- no multiple eigenvalues

- no missing frequency component

Satisfy the above 3 Conditions ,linear GNN Can approach any 1 Dimensional prediction.

For example, for a 2 Classification problem , The output is out.shape = (N,1)

The author said that for multi-dimensional prediction, Violence can be used ,

Every dimension One linear GNN.( Need different filter and transformation)

It's because of a linear GNN You can only express one completely at most dimension.

4.1 The original text is a little messy .Proposition 4.2 Say if X X X The line is not rank , Then there is always multi-dimension prediction yes linear GNN Inaccessible .

I'm done Appendix The proof in the book shows what he wants to say …

Use your own words to express .

Two 、Section 4.1 multi-dimensional Partial disassembly

First , The rows of the characteristic matrix are less than the rank , It's a high probability event . because S h a p e ( X ) = ( N , d ) Shape(X)=(N,d) Shape(X)=(N,d) , Generally speaking N > > d N>>d N>>d.

here , If we want to use it linear GNN = gXW This framework is used to approximate a k-dimensional prediction, The output (N,k).

k=1 when , Expressive ability is sufficient .

k>1 when , Inadequate expression .

That's why I'm here k>1 when , Put forward to use k individual linear GNN To learn separately k individual dimension.

We might as well look at the situation in the airspace first .

consider k=1

2.0 prove k=1 Time is sufficient

The original text proves that there is something wrong .

The first part , R d − ∪ i = 1 n V i ≠ { 0 } \mathbb{R}^{d}-\cup_{i=1}^{n} V_{i} \ne \{0\} Rd−∪i=1nVi={ 0} Can be controlled by V i V_i Vi is a proper subspace derived ?

Q:N individual proper subspace Of union still proper subspace?

A: It's not right , There is a basic theorem :A vector space cannot be written as a finite union of proper subspaces.

This proof is wrong . Let's treat it as a clerical error ..

Think again k>1.

2.1 Nature of airspace

Order rank RowRank ( X ) = r \text{RowRank}(X) = r RowRank(X)=r.

from X X X In looking for r r r Rows form a set of maximal linearly independent vectors , this r r r Row labels of rows form a new set I = { s 1 , s 2 , . . . , s r } \mathbb{I} = \{s_1,s_2,...,s_r\} I={ s1,s2,...,sr}.

Then the matrix formed by this group of maximal linear independent vectors can be written as X I = { X s i } ∈ R r × d X_{\mathbb{I}} = \{ X_{s_{i}}\} \in \mathbb{R}^{r\times d} XI={ Xsi}∈Rr×d.

We can express the original characteristic matrix with this set of maximal linear independent vectors , It's written in X = P X I , M ∈ R N × r X = PX_{\mathbb{I}}, M \in \mathbb{R}^{N\times r} X=PXI,M∈RN×r.

Suppose there is a W ∈ R d × k W \in \mathbb{R}^{d\times k} W∈Rd×k bring X I W X_{\mathbb{I}}W XIW Reduce to row rank 1 Matrix .

namely RowRank ( X I W ) = 1 \text{RowRank}(X_{\mathbb{I}}W)=1 RowRank(XIW)=1, namely X I W X_{\mathbb{I}}W XIW Each column of is the same .

So clearly linear GNN Output

Z = g X W = g ( P X I ) W = g P ( X I W ) Z= gXW = g(PX_{\mathbb{I}})W =gP(X_{\mathbb{I}}W) Z=gXW=g(PXI)W=gP(XIW) Satisfy

RowRank ( Z ) ≤ RowRank ( X I W ) = 1 \text{RowRank}(Z)\le \text{RowRank}(X_{\mathbb{I}}W)=1 RowRank(Z)≤RowRank(XIW)=1 ,

also Z ≠ 0 Z \ne \mathbb{0} Z=0, namely RowRank ( Z ) > 0 \text{RowRank}(Z) \gt 0 RowRank(Z)>0.

therefore RowRank ( Z ) = 1 \text{RowRank}(Z)=1 RowRank(Z)=1.

therefore Z Each column of is the same .

The nature of the airspace itself is not strong , Because in the airspace g No particularity .

But it has more powerful performance in frequency domain .

2.2 Frequency domain properties

Remember the frequency domain characteristics X ^ = U T X ∈ R N × d \hat{X} = U^TX \in \mathbb{R}^{N\times d} X^=UTX∈RN×d, W ∈ R d × k W \in \mathbb{R}^{d\times k} W∈Rd×k

similarly

Order rank RowRank ( X ^ ) = r \text{RowRank}(\hat{X}) = r RowRank(X^)=r.

from X ^ \hat{X} X^ In looking for r r r Rows form a set of maximal linearly independent vectors , this r r r Row labels of rows form a new set I = { s 1 , s 2 , . . . , s r } \mathbb{I} = \{s_1,s_2,...,s_r\} I={ s1,s2,...,sr}.

Then the matrix formed by this group of maximal linear independent vectors can be written as X ^ I = { X ^ s i } ∈ R r × d \hat{X}_{\mathbb{I}} = \{ \hat{X}_{s_{i}}\} \in \mathbb{R}^{r\times d} X^I={ X^si}∈Rr×d.

We can express the original characteristic matrix with this set of maximal linear independent vectors , It's written in X ^ = M X ^ I , M ∈ R N × r \hat{X} = M\hat{X}_{\mathbb{I}}, M \in \mathbb{R}^{N\times r} X^=MX^I,M∈RN×r.

therefore

Z = g ( L ^ ) X W = U g ( Λ ) X ^ W = U g ( Λ ) M X ^ I W Z= g(\hat{L})XW = Ug(\Lambda)\hat{X}W =Ug(\Lambda)M\hat{X}_{\mathbb{I}}W Z=g(L^)XW=Ug(Λ)X^W=Ug(Λ)MX^IW

hold g Become the first , Convenient operation .

remember Z ^ = U T Z = g ( Λ ) M X ^ I W \hat{Z} = U^TZ = g(\Lambda)M\hat{X}_{\mathbb{I}}W Z^=UTZ=g(Λ)MX^IW

Yes

Z ^ I = ( U T Z ) I = ( g ( Λ ) M X ^ I W ) I = ( g ( Λ ) M ) I X ^ I W \hat{Z}_{\mathbb{I}} = (U^TZ)_{\mathbb{I}} = (g(\Lambda)M\hat{X}_{\mathbb{I}}W)_{\mathbb{I}} = (g(\Lambda)M)_{\mathbb{I}} \hat{X}_{\mathbb{I}}W Z^I=(UTZ)I=(g(Λ)MX^IW)I=(g(Λ)M)IX^IW

Frequency domain g Of a special nature , It's a diagonal matrix . therefore ,

g ( Λ ) M = [ g i i M i ] i ∈ [ N ] g(\Lambda)M = [g_{ii}M_i]_{i\in[N]} g(Λ)M=[giiMi]i∈[N] , among g i i g_{ii} gii Express i That's ok i Diagonal elements of columns , M i M_i Mi It means the first one i That's ok .

( g ( Λ ) M ) I = ( [ g i i M i ] i ∈ [ N ] ) I = g ( Λ ) I I M I (g(\Lambda)M)_{\mathbb{I}}= ([g_{ii}M_i]_{i\in[N]})_{\mathbb{I}} = g(\Lambda)_{\mathbb{I}\mathbb{I}}M_{\mathbb{I}} (g(Λ)M)I=([giiMi]i∈[N])I=g(Λ)IIMI

Considering

X ^ I = ( M X ^ I ) I = M I X ^ I \hat{X}_{\mathbb{I}}= (M\hat{X}_{\mathbb{I}} )_{\mathbb{I}} =M_{\mathbb{I}}\hat{X}_{\mathbb{I}} X^I=(MX^I)I=MIX^I

This means that we can make M I = E r M_{\mathbb{I}} =E_{r} MI=Er Become a r × r r\times r r×r Unit matrix .

So in the frequency domain

( g ( Λ ) M ) I = g ( Λ ) I I M I = g ( Λ ) I I (g(\Lambda)M)_{\mathbb{I}} = g(\Lambda)_{\mathbb{I}\mathbb{I}}M_{\mathbb{I}}=g(\Lambda)_{\mathbb{I}\mathbb{I}} (g(Λ)M)I=g(Λ)IIMI=g(Λ)II

With the above properties , You can further write

Z ^ I = g ( Λ ) I I X ^ I W \hat{Z}_{\mathbb{I}} = g(\Lambda)_{\mathbb{I}\mathbb{I}}\hat{X}_{\mathbb{I}}W Z^I=g(Λ)IIX^IW

and

Z ^ = g ( Λ ) M X ^ I W \hat{Z} = g(\Lambda)M\hat{X}_{\mathbb{I}}W Z^=g(Λ)MX^IW

According to the above formula, it is found that

if W W W bring RowRank ( X ^ I W ) = 1 \text{RowRank}(\hat{X}_{\mathbb{I}}W)=1 RowRank(X^IW)=1,

We will get a conclusion similar to the airspace part

RowRank ( Z ^ I ) = 1 \text{RowRank}(\hat{Z}_{\mathbb{I}})=1 RowRank(Z^I)=1

RowRank ( Z ^ ) = 1 \text{RowRank}(\hat{Z})=1 RowRank(Z^)=1

namely , When g ( Λ ) I I g(\Lambda)_{\mathbb{I}\mathbb{I}} g(Λ)II Reversible ,

If Z ^ I \hat{Z}_{\mathbb{I}} Z^I The columns are the same , Z ^ \hat{Z} Z^ The columns of are also synchronized .

namely , When g ( Λ ) I I g(\Lambda)_{\mathbb{I}\mathbb{I}} g(Λ)II Reversible ,

There is no model that makes Z ^ I \hat{Z}_{\mathbb{I}} Z^I The columns are the same , and Z ^ \hat{Z} Z^ The columns of are different Z Z Z.

here , about k>1 Of prediction, The model cannot be learned completely .

I call this property Columns, etc nature .

2.3 The boundary conditions

It is noted that an important premise for the above conclusion is that when g ( Λ ) I I g(\Lambda)_{\mathbb{I}\mathbb{I}} g(Λ)II reversible .

Can this precondition hold ? The answer is yes .

Because our goal is to prove , There is a kind of Z Z Z.

So find a counterexample .

Just make Z ^ \hat{Z} Z^ Satisfy : Z ^ I \hat{Z}_{\mathbb{I}} Z^I None of the elements in are 0.

according to Z ^ I = g ( Λ ) I I X ^ I W \hat{Z}_{\mathbb{I}} = g(\Lambda)_{\mathbb{I}\mathbb{I}}\hat{X}_{\mathbb{I}}W Z^I=g(Λ)IIX^IW Can launch g ( Λ ) I I g(\Lambda)_{\mathbb{I}\mathbb{I}} g(Λ)II All diagonal elements of are not 0, So the reversible condition holds .

2.4 Consider this conclusion for classical Spectral GNN The establishment of

Q: If you join activation, Can we learn a lesson that makes Z ^ I \hat{Z}_{\mathbb{I}} Z^I The columns are the same ( And it doesn't include 0), and Z ^ \hat{Z} Z^ The columns of are different Z Z Z?

obviously , In the traditional way , use ReLU As activation, There are a lot of Z It's impossible to learn .

For example, I hope

Z ^ = ( 1 1 2 2 5 6 7 8 ) \hat{Z} = \begin{pmatrix} 1 & 1 \\ 2 & 2 \\ 5&6 \\ 7&8 \end{pmatrix} Z^=⎝⎜⎜⎛12571268⎠⎟⎟⎞

The first two lines serve as I = { 1 , 2 } \mathbb{I} = \{1,2\} I={ 1,2}.

use ReLU Never learn .

in tanh And that sort of thing doesn't work .

And simply stack Layers I can't do it .

Because the output of the last layer will face the same problem .

So you could say , This conclusion is important for understanding tradition spectral GNN The upper limit of expression ability is very enlightening .

grace , It's so elegant !

Yes 2 There's a solution

1. abandon spectral, change to the use of sth. spatial GNN.

I have already proved , Above this Columns, etc Nature is spectral domain On g by diagonal The special nature of .

Once abandoned spectral, obtain non-diagonal filter Will automatically eliminate this property .

2. Front use spectral,output layer Change to non-spectral.

This may solve part of the problem .

2.5 Practical significance

Q: I got it! spectral Can't learn to wait , What's the use of this ? Is there a case where you need to output local columns, etc ?

For the output layer .

I can only think of , some multi-label May appear in the multi category task of .

For example, a movie belongs to both romance and thriller ( Blind detective ?)

But it's hard to say about the hidden layer in the middle .

For some highly relevant dimension pair, Columns and so on may be very strong properties .

2.6 Connection with some past work

The author mentioned .

We can use individual polynomial coefficients for each output channel to solve this problem

I can't remember which article I read , That's what I did .

hold g Take it apart , In different channel Go to school again coefficients…

I only remember that I smiled …

It seems that there is more than one article .

One day, I will remember to fill the pit .

3、 ... and 、Section4.2&4.3

- Multiple Eigenvalue

- Missing frequency components

It's easy to understand , If feature There is no frequency component in the , No matter how you pass filter Go to scale Can't solve .

This corresponds to the third condition.

The good news is , The author in Appendix E. Chinese vs 10 individual benchmark dataset Made statistics , This proves to be very rare .

( This problem should have been discussed long ago ? I have the impression that ... Because it is seldom a natural conclusion to have multiple roots on a large graph . The absence of frequency components does not exist for data sets with strong enough characteristics .)

contrary , The problems that really need to be explored are those discussed in other previous work .

We know that the big picture has no double root , But we care about the density between different roots ( distance ).

d p = λ p + 1 − λ p d_{p} = \lambda_{p+1}-\lambda_{p} dp=λp+1−λp.

I can't remember which article came to this conclusion , This d Whether it is greater than or less than a certain threshold will improve the performance ...

Four 、 section 4.4

Proposition 4.3…

I don't know why he has to prove it again .. I remember it was like GIN It has been certified once .

mp Under the framework of GNN The ability to express will not exceed 1-WL .

Does it smell good directly .

Corollary 4.4 Under the two conditions of no double characteristic root and no missing frequency component ,1-WL It can also distinguish all non isomorphic nodes .

Theorem 4.5 Graphs without multiple eigenvalues ,order of automorphism Not more than 3.

It means at most 3 individual g^3 = E , E is identity matrix.

Then there is infinite automorphism . g 4 = g 3 ∗ g = g , g 5 = g 2 , g 6 = g 3 = E g^4 = g^3*g=g, g^5=g^2, g^6 = g^3 = E g4=g3∗g=g,g5=g2,g6=g3=E

Automorphism group {E,g,g^2}, order by 3.

Theorem 4.6 Further , If there are no double eigenvalues and no missing frequency components at the same time , except identity There will be no other automorphism.

Automorphism group {E}, order=1.

It means , Now run 1-WL and linearGNN There is no difference between .

Will output completely different labels to different nodes .

Appendix C It is discussed in random feature Influence

To be changed …

5、 ... and 、 Section 4.5 nonlinear

Simply speaking

nonlinear Sure mix frequency components.

I remember the previous conclusion was ,non linear Can be introduced high frequency components.

(Q: How to prove ?)

Baseline

ChebyNet

SGC

APPNP

GNN-LF/HF

GPRGNN

ARMA

BernNet

Look at it like this , The progress in this field can be said to be very slow .

Only the big guys can move something in from other fields .

Ordinary people can't pour water if they want to .

On average ,1 Years will come out 1~1.5 individual model.

Reference resources

//https://www.mathcounterexamples.net/a-vector-space-written-as-finite-union-of-proper-subspaces/

边栏推荐

- IP - 射频数据转换器 -04- API使用指南 - ADC状态指示函数

- Roll in Dachang series LRU cache elimination algorithm

- Niuke-top101-bm25

- MSF内网渗透

- 三维引擎软件Vizard入门

- Aurora8B10B IP使用 -02- IP功能设计技巧

- leetcode 675. Cutting down trees for golf competitions - (day29)

- Survey shows that data analysis is crucial to product success

- 当今的数学是否过于繁琐?

- Introduction to 3D engine software wizard

猜你喜欢

![[is the network you are familiar with really safe?] Wanziwen](/img/b4/6092ab3fd728e5d453ec38b089d027.png)

[is the network you are familiar with really safe?] Wanziwen

nametuple的源码为什么要使用.replace(‘,‘, ‘ ‘).split()而不是.split(‘,‘)

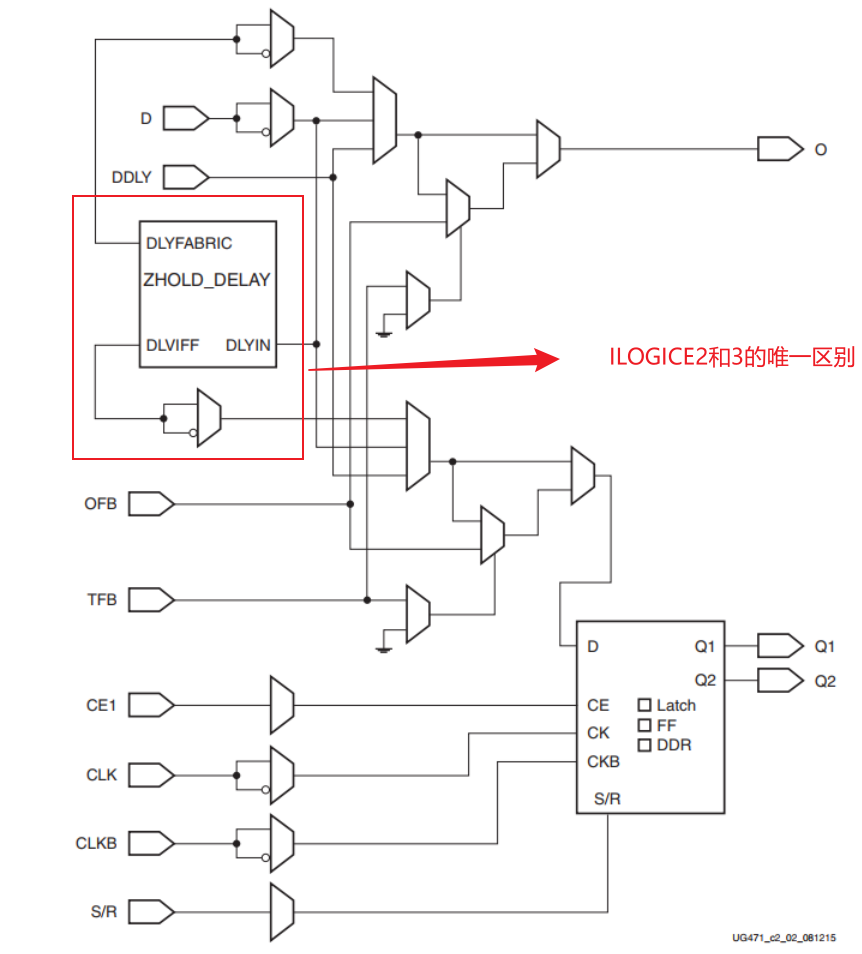

FPGA - 7系列 FPGA SelectIO -03- 逻辑资源之ILOGIC

构建和保护小型网络考试

Pycharm的快捷键Button 4 Click是什么?

Aurora8b10b IP usage-02-ip function design skills

Deeply understand the gradient disappearance of RNN and why LSTM can solve the gradient disappearance

Aurora8B10B IP使用 -02- IP功能设计技巧

【数据挖掘】期末复习 第三章

【数据挖掘】期末复习 第二章

随机推荐

Pycharm的快捷键Button 4 Click是什么?

Backtracking method of graph coloring problem (the most easy to understand)

DDD Practice Manual (4. aggregate aggregate)

第8期:云原生—— 大学生职场小白该如何学

Roll in Dachang series LRU cache elimination algorithm

Asp. Net web API 2 Lesson 18 - working with entity relations in OData

第6期:大学生应该选择哪种主流编程语言

Construction and protection of small-scale network examination

FPGA - 7 Series FPGA selectio -05- logic of logic resources

How to limit intranet speed

[data mining] final review Chapter 2

Aurora8B10B IP使用 -01- 简介与端口描述

Fastdfs cluster

【数据挖掘】期末复习 第二章

构建和保护小型网络考试

FPGA - 7系列 FPGA SelectIO -05- 逻辑资源之OLOGIC

回答问题:你认为AGI应该采用什么思路?

MySQL数据库基础:子查询

WordPress pseudo original tool - update website one click pseudo original publishing software

【毕业季】纸短情长,浅谈大二以前的学习经历