MapReduce & YARN theory

2022-06-26 09:38:00 【[email protected]】

Preface

- To install ZooKeeper, see "Linux - ZooKeeper cluster setup"

- For basic ZooKeeper usage, see "ZooKeeper commands and API"

- For Hadoop theory, see "Hadoop theory"

- For HDFS theory, see "HDFS theory"

- To install HDFS, see "Linux - HDFS deployment"

- For basic HDFS usage, see "HDFS commands and API"

MapReduce

Hadoop MapReduce is Hadoop's data processing layer. It processes large volumes of structured and unstructured data stored in HDFS.

MapReduce processes data in parallel by dividing a job into a set of independent tasks. Parallel processing improves speed, and because the tasks are independent, a failed task can be rerun on its own, which improves reliability.

Hadoop MapReduce processes data in two stages, Map and Reduce:

- Map stage: the first stage of processing. This is where we put the complex logic, business rules, and expensive code

- Reduce stage: the second stage of processing. This is where we put lightweight processing such as aggregation or summation
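
The two stages can be illustrated with a minimal single-process sketch in plain Python. It is not Hadoop code: the log format (`path status bytes`) and the function names `map_record`, `reduce_group`, and `run_job` are assumptions made up for this example. The parsing and validation sit in the map step, and the reduce step only sums.

```python
from collections import defaultdict


def map_record(line):
    """Map stage: parsing and business rules live here.

    Emits a list with one (status, size) pair, or an empty list for bad input.
    """
    parts = line.split()
    if len(parts) != 3 or not parts[2].isdigit():
        return []  # skip malformed records
    _path, status, size = parts
    return [(status, int(size))]


def reduce_group(status, sizes):
    """Reduce stage: lightweight aggregation, here a plain sum."""
    return status, sum(sizes)


def run_job(lines):
    # Every line is mapped independently, which is what lets a real
    # cluster run the map tasks in parallel.
    groups = defaultdict(list)
    for line in lines:
        for key, value in map_record(line):
            groups[key].append(value)
    return dict(reduce_group(key, values) for key, values in groups.items())


if __name__ == "__main__":
    print(run_job(["/a 200 10", "/b 404 5", "bad line", "/c 200 7"]))
```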

MapReduce architecture

MRv1 roles

Client

- Works in units of jobs

- Plans how the job's computation is distributed

- Uploads the job's resources to HDFS

- Finally submits the job to the JobTracker

JobTracker

- The core master; there is only one in the cluster

- Schedules all jobs

- Monitors the resource load of the whole cluster

TaskTracker

- The worker; manages the resources of its own node

- Exchanges heartbeats with the JobTracker, reports its resources, and fetches tasks

Disadvantages

- JobTracker: overloaded, and a single point of failure

- Resource management and compute scheduling are tightly coupled, so other computing frameworks have to reimplement resource management

- Resources cannot be managed globally across different frameworks

MapReduce execution process

- The client submits a MapReduce jar to the JobClient (submission command: hadoop jar …)

- The JobClient communicates with the JobTracker over RPC, which returns an HDFS address for storing the jar and a JobId

- The client copies the resources needed to run the job (the JAR file, configuration files, and the computed input splits) to a directory in HDFS named after the job ID (path = HDFS address + JobId)

- The job is submitted (a description of the job, not the jar itself: the JobId, the jar's storage location, configuration information, and so on)

- The JobTracker initializes the job

- The files to be processed are read from HDFS and the input splits are computed; each split corresponds to one MapperTask

- A TaskTracker obtains a task (its description) through the heartbeat mechanism

- It downloads the jar, configuration files, and other resources it needs

- The TaskTracker starts a Java child process

- The child process runs the actual task (MapperTask or ReducerTask) and writes the result to HDFS
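
Two of the steps above, computing input splits and handing out tasks over heartbeats, can be sketched as a toy model. Everything here is an assumption for illustration: `compute_splits`, `ToyJobTracker`, the task ID format, and the idea of "free slots" as a plain integer. Real Hadoop applies additional rules when sizing splits (for example, it avoids creating a very small final split), so treat the arithmetic as an approximation.

```python
import math
from collections import deque


def compute_splits(file_size, split_size):
    """Return (offset, length) pairs; one map task is created per split."""
    count = math.ceil(file_size / split_size)
    return [
        (i * split_size, min(split_size, file_size - i * split_size))
        for i in range(count)
    ]


class ToyJobTracker:
    """Hypothetical stand-in for the JobTracker's task queue."""

    def __init__(self, job_id, splits):
        # One pending map task description per split.
        self.pending = deque(f"{job_id}_m_{i}" for i in range(len(splits)))
        self.assignments = {}

    def heartbeat(self, tracker_name, free_slots):
        """A TaskTracker reports free slots and receives up to that many tasks."""
        assigned = []
        while self.pending and len(assigned) < free_slots:
            assigned.append(self.pending.popleft())
        self.assignments.setdefault(tracker_name, []).extend(assigned)
        return assigned


if __name__ == "__main__":
    splits = compute_splits(file_size=300, split_size=128)
    tracker = ToyJobTracker("job_1", splits)
    print(splits)
    print(tracker.heartbeat("tasktracker-a", free_slots=2))
    print(tracker.heartbeat("tasktracker-b", free_slots=2))
```

The TaskTracker pulls work by reporting in, rather than the JobTracker pushing tasks out, which is why the assignment logic lives inside `heartbeat`.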

MapReduce workflow

InputFiles - Input files

The input files hold the data for a MapReduce job and reside in HDFS. Their format is arbitrary: line-based log files and binary formats can both be used.

InputFormat - Input format

InputFormat defines how these input files are split and read. It selects the files or other objects to use as input and creates the InputSplits.

InputSplits - Splits

An InputSplit represents the data processed by a single Mapper. One map task is created per split, so the number of map tasks equals the number of splits. The framework divides each split into records, which the Mapper processes.

RecordReader - Record reading

The RecordReader communicates with the InputSplit and converts the data into key-value pairs suitable for the Mapper to read. By default it uses TextInputFormat to convert data into key-value pairs, and it keeps doing so until the file has been read. It assigns the byte offset of each line in the file as the key (the line's content is the value). These key-value pairs are then sent to the Mapper for further processing.

Mapper - Mapping

The Mapper processes the input records produced by the RecordReader and generates intermediate key-value pairs. The intermediate output can be completely different from the input pairs. The Mapper's output is the full collection of these key-value pairs.
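
As a rough illustration of the record-reading and mapping steps, the sketch below mimics a line-oriented record reader that keys each line by its byte offset and feeds it to a word-count mapper. It is plain Python rather than the Hadoop API; `read_records` and `word_mapper` are hypothetical names chosen for this example.

```python
def read_records(data: bytes):
    """Yield (byte_offset, line_text) pairs, one per line of the input."""
    offset = 0
    for raw_line in data.splitlines(keepends=True):
        # The key is where the line starts; the value is the line itself.
        yield offset, raw_line.rstrip(b"\r\n").decode("utf-8")
        offset += len(raw_line)


def word_mapper(offset, line):
    """Mapper: ignores the offset key and emits (word, 1) intermediate pairs."""
    for word in line.split():
        yield word, 1


if __name__ == "__main__":
    records = list(read_records(b"hello world\nhello\n"))
    print(records)
    print([pair for off, line in records for pair in word_mapper(off, line)])
```

Note how the intermediate pairs (`word`, `1`) have a different shape from the input pairs (`offset`, `line`).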

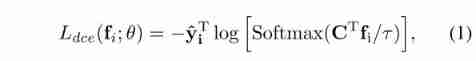

The Hadoop framework does not store the Mapper's output in HDFS: the data is temporary, and writing it to HDFS would create unnecessary replicas. The Mapper then passes its output to the Combiner for further processing.

Combiner - Merge

The Combiner is a mini-reducer that performs local aggregation on the Mapper's output, which minimizes the data transferred between Mapper and Reducer. Once the combine function has finished, the framework passes the output to the Partitioner for further processing.

Partitioner - Partition

The Partitioner comes into play when the job uses more than one Reducer. It takes the Combiner's output and partitions it.

The output is partitioned by key: a hash function applied to the key (or a subset of the key) determines the partition.
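
A hash-based partitioner of this kind can be sketched in a few lines. This is an illustration, not Hadoop's implementation: `partition_for` is a hypothetical name, and CRC32 is used as a stand-in hash because Python's built-in `hash()` for strings changes between runs.

```python
import zlib


def partition_for(key: str, num_reducers: int) -> int:
    """Map a key to a reducer index in the range [0, num_reducers)."""
    # Mask to a non-negative value, then take the remainder.
    hashed = zlib.crc32(key.encode("utf-8")) & 0x7FFFFFFF
    return hashed % num_reducers


if __name__ == "__main__":
    for key in ["apple", "banana", "apple", "cherry"]:
        print(key, "->", partition_for(key, num_reducers=3))
```

Because the partition depends only on the key, every record with the same key is routed to the same reducer, no matter which mapper produced it.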

Each Combiner's output is partitioned by key, so records with the same key land in the same partition, and each partition is then sent to one Reducer. Partitioning lets the Mapper output be distributed evenly across the Reducers.

Shuffling and Sorting - Shuffle and sort

After partitioning, the output is transferred to the Reducer nodes. Shuffling is the physical movement of data over the network. Once all Mappers have finished and their output has been shuffled to the Reducer nodes, the framework merges and sorts this intermediate output and provides it as the input to the Reducer.

Reducer - Reduce

The Reducer takes the set of intermediate key-value pairs produced by the Mappers as input and runs a reduce function on each of them to generate output. The Reducer's output is the final output, which the framework stores in HDFS.

RecordWriter - Record writing

The RecordWriter writes these output key-value pairs from the Reducer stage to the output files.

OutputFormat - Output format

OutputFormat defines how the RecordWriter writes these output key-value pairs to the output files. Hadoop provides OutputFormat instances for writing files to HDFS, so the OutputFormat instance writes the Reducer's final output to HDFS.
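
To see how the stages in this section fit together, here is a single-process sketch of the combine, partition, shuffle-and-sort, and reduce steps for a word count. It runs entirely in memory and is only a model of the data flow: the function names, the use of CRC32 for partitioning, and the representation of a split as a list of lines are all assumptions for this example.

```python
import zlib
from collections import Counter, defaultdict


def mapper(line):
    """Emit (word, 1) for every word in a line."""
    return [(word, 1) for word in line.split()]


def combiner(pairs):
    """Local aggregation of a single mapper's output."""
    local = Counter()
    for key, value in pairs:
        local[key] += value
    return list(local.items())


def partition(key, num_reducers):
    """Pick a reducer for a key using a stable stand-in hash."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def run(splits, num_reducers=2):
    # Map and combine each split, then route every pair to its partition.
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for split in splits:
        pairs = [pair for line in split for pair in mapper(line)]
        for key, value in combiner(pairs):
            partitions[partition(key, num_reducers)][key].append(value)
    # Shuffle and sort: each reducer sees its keys in sorted order,
    # with all values for a key grouped together, and sums them.
    return [
        [(key, sum(part[key])) for key in sorted(part)]
        for part in partitions
    ]


if __name__ == "__main__":
    for index, output in enumerate(run([["a b a"], ["b c"]], num_reducers=2)):
        print(f"reducer {index}: {output}")
```

In this sketch the combiner turns the first split's two `("a", 1)` pairs into one `("a", 2)` pair before anything is routed, which is the data-transfer saving the Combiner step is there for.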

YARN

YARN is the resource management system newly introduced in Hadoop 2.0, evolved from MRv1.

YARN consists of the ResourceManager, the NodeManagers, and one ApplicationMaster per application.

It separates the resource management and task scheduling that the JobTracker combined in MRv1; they are implemented by the ResourceManager and ApplicationMaster processes respectively.

YARN architecture

MRv2 roles

Client: submits MapReduce jobs

Resource Manager: the master daemon of YARN, responsible for resource allocation and management across all applications. Whenever it receives a processing request, it forwards it to the corresponding NodeManager and allocates the resources needed to complete the request. It has two main components

- Scheduler: performs scheduling based on the submitted applications and the available resources. It is a pure scheduler, meaning it does no other work such as monitoring or tracking, and it does not guarantee that a failed task is restarted. The YARN scheduler supports plug-ins such as the Capacity Scheduler and the Fair Scheduler for partitioning cluster resources

- Application Manager: accepts application submissions and negotiates the first container from the ResourceManager. If the task fails, it also restarts the ApplicationMaster container

Node Manager: looks after a single node in the Hadoop cluster and manages the applications and workflows on that node. Its main job is to stay in sync with the ResourceManager: it registers with the ResourceManager and sends heartbeats carrying the node's health status. It also monitors resource usage, performs log management, kills containers when the ResourceManager instructs it to, and creates container processes and starts them at the request of the ApplicationMaster

Application Master: an application is a single job submitted to the framework. The ApplicationMaster negotiates resources with the ResourceManager and tracks the status and progress of its application. It requests containers from the NodeManager by sending a Container Launch Context (CLC), which contains everything the application needs to run. Once the application has started, it periodically sends health reports to the ResourceManager

Container: a collection of physical resources on a single node, such as RAM, CPU cores, and disk. A container is launched via its Container Launch Context (CLC), a record that contains environment variables, security tokens, dependencies, and other information
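
The idea of a container as a slice of one node's resources can be modelled with a small sketch. This is not the YARN API: `Resource`, `ToyNode`, and `try_allocate` are hypothetical names, and only memory and virtual cores are tracked.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """A bundle of resources, as requested for one container."""
    memory_mb: int
    vcores: int


@dataclass
class ToyNode:
    """Hypothetical model of the resources a single node can hand out."""
    capacity: Resource
    used_memory_mb: int = 0
    used_vcores: int = 0

    def try_allocate(self, request: Resource) -> bool:
        """Reserve the requested resources if they still fit on this node."""
        fits = (
            self.used_memory_mb + request.memory_mb <= self.capacity.memory_mb
            and self.used_vcores + request.vcores <= self.capacity.vcores
        )
        if fits:
            self.used_memory_mb += request.memory_mb
            self.used_vcores += request.vcores
        return fits


if __name__ == "__main__":
    node = ToyNode(capacity=Resource(memory_mb=4096, vcores=4))
    print(node.try_allocate(Resource(2048, 2)))  # fits
    print(node.try_allocate(Resource(2048, 2)))  # fits, node is now full
    print(node.try_allocate(Resource(1024, 1)))  # no longer fits
```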

YARN execution process

- The client submits an application

- The ResourceManager allocates a container to start the ApplicationMaster

- The ApplicationMaster registers itself with the ResourceManager

- The ApplicationMaster negotiates containers from the ResourceManager

- The ApplicationMaster notifies the NodeManager to launch the containers

- The application code executes in the containers

- The client contacts the ResourceManager or the ApplicationMaster to monitor the application's status

- When processing is complete, the ApplicationMaster unregisters from the ResourceManager
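
The order of the steps above can be traced with a toy simulation. It is a sketch under heavy simplification, not the YARN client API: `ToyResourceManager` and `ToyApplicationMaster` are hypothetical classes, the per-application master is modelled as a plain object, NodeManagers are left out, and "running code in a container" is just calling a Python function.

```python
class ToyResourceManager:
    """Hypothetical resource manager that records what happens, in order."""

    def __init__(self):
        self.events = []
        self.registered = set()

    def submit(self, app_id):
        # Client submits; a first container is allocated for the app's master.
        self.events.append(f"{app_id}: submitted by client")
        self.events.append(f"{app_id}: container allocated for ApplicationMaster")
        return ToyApplicationMaster(app_id, self)

    def allocate(self, app_id, count):
        self.events.append(f"{app_id}: {count} container(s) granted")
        return [f"{app_id}_container_{i}" for i in range(1, count + 1)]


class ToyApplicationMaster:
    """Hypothetical per-application master driving one job."""

    def __init__(self, app_id, rm):
        self.app_id = app_id
        self.rm = rm

    def run(self, tasks):
        rm = self.rm
        rm.registered.add(self.app_id)
        rm.events.append(f"{self.app_id}: ApplicationMaster registered")
        containers = rm.allocate(self.app_id, len(tasks))
        # Stand-in for asking NodeManagers to launch containers and run code.
        results = [task() for _container, task in zip(containers, tasks)]
        rm.events.append(f"{self.app_id}: tasks finished in containers")
        rm.registered.discard(self.app_id)
        rm.events.append(f"{self.app_id}: ApplicationMaster unregistered")
        return results


if __name__ == "__main__":
    rm = ToyResourceManager()
    master = rm.submit("app_1")
    print(master.run([lambda: 1 + 1, lambda: "ok"]))
    for event in rm.events:
        print(event)
```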

References

This article draws on:

https://techvidvan.com/tutorials/mapreduce-job-execution-flow/

https://www.geeksforgeeks.org/hadoop-yarn-architecture/

This column is about learning big data. If you spot any problems, please point them out. Let's learn together!

Copyright notice

This article was written by [[email protected]]. Please include the original link when reposting. Thanks

https://yzsam.com/2022/177/202206260905385612.html
