当前位置:网站首页>Deep learning model compression and acceleration technology (VII): mixed mode

Deep learning model compression and acceleration technology (VII): mixed mode

2022-07-07 20:18:00 【Breeze_】

Catalog

Compression and acceleration of deep learning model refers to The model is simplified by using the redundancy of neural network parameters and the redundancy of network structure , Without affecting the completion of the task , Get fewer parameters 、 A more streamlined model . The compressed model has smaller computational resource requirements and memory requirements , Compared with the original model, it can meet a wider range of application needs . In the context of the increasing popularity of deep learning technology , The strong application demand for deep learning model makes people occupy less memory 、 Low computing resource requirements 、 At the same time, it still ensures a high accuracy “ Little model ” Pay special attention to . The model compression and acceleration of deep learning by using the redundancy of neural network has aroused widespread interest in academia and industry , All kinds of jobs emerge in endlessly .

In this paper, the reference 2021 Published in the Journal of software 《 A survey of compression and acceleration of deep learning models 》 Summarized and learned .

Related links :

Deep learning model compression and acceleration technology ( One ): Parameter pruning

Deep learning model compression and acceleration technology ( Two ): Parameter quantification

Deep learning model compression and acceleration technology ( 3、 ... and ): Low rank decomposition

Deep learning model compression and acceleration technology ( Four ): Parameters of the Shared

Deep learning model compression and acceleration technology ( 5、 ... and ): Compact network

Deep learning model compression and acceleration technology ( 7、 ... and ): Mixed mode

summary

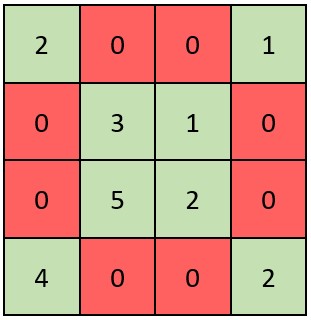

| Model compression and acceleration technology | describe |

|---|---|

| Parameter pruning (A) | Design evaluation criteria for the importance of parameters , Based on this criterion, the importance of network parameters is judged , Delete redundant parameters |

| Parameter quantification (A) | Change the network parameters from 32 Bit full precision floating-point number quantized to lower bits |

| Low rank decomposition (A) | The dimension reduction of high-dimensional parameter vector is decomposed into sparse low-dimensional vector |

| Parameters of the Shared (A) | Using structured matrix or clustering method to map the internal parameters of the network |

| Compact network (B) | From convolution kernel 、 Special layer and network structure 3 Design a new lightweight network at three levels |

| Distillation of knowledge (B) | Refine the information from the larger teacher model to the smaller student model |

| Mixed mode (A+B) | The combination of the first several methods |

A: Compression parameters B: Compression structure

Mixed mode

Definition

A combination of commonly used model compression and acceleration techniques , It's a hybrid way .

characteristic

The hybrid method can integrate the advantages of various compression and acceleration methods , Further strengthen the compression and acceleration effect , It will be an important research direction in the field of deep learning model compression and acceleration in the future .

1. Combine parameter pruning and parameter quantification

- Ullrich wait forsomeone [165] be based on Soft weight sharing The regularization term of , Parameter quantification and parameter pruning are realized in the process of model retraining .

- Tung wait forsomeone [166] An integrated compression and acceleration framework of parameter pruning and parameter quantization is proposed Compression learning by in parallel pruning-quantization(CLIP-Q).

- Han wait forsomeone [167] Put forward Deep compression, Prune parameters 、 Combination of parameter quantization and Huffman coding , Achieved a good compression effect ; And on the basis of it, soft / Hardware co compression design , Put forward Efficient inference engine(Eie) frame [168].

- Dubey wait forsomeone [169] Also use this 3 A combination of two methods for network compression .

2. Combine parameter pruning and parameter sharing

- Louizos wait forsomeone [170] Adopt Bayesian principle , Introduce sparsity through prior distribution to prune the network , Use a posteriori uncertainty to determine the optimal fixed-point accuracy to encode weights .

- Ji wait forsomeone [171] Enter by reordering / Output dimension for pruning , The irregular distribution weights with small values are clustered into structured groups , Achieve better hardware utilization and higher sparsity .

- Zhang wait forsomeone [172] Not only do regularizers encourage sparsity , It also learns which parameter groups should share a common value to explicitly identify highly correlated neurons .

3. Combined parameter quantification and knowledge distillation

- Polino wait forsomeone [173] It is proposed to add knowledge distillation loss Quantitative training method , There are floating-point model and quantitative model , Use quantitative model to calculate forward loss, And calculate the gradient , To update the floating point model . Before each forward calculation , Update the quantization model with the updated floating-point model .

- Mishra wait forsomeone [174] It is proposed to use high-precision teacher model to guide the training of low-precision student model , Yes 3 Ideas : The teacher model and the quantified student model are jointly trained ; The pre trained teacher model guides the quantitative student model to train from scratch ; Both the teacher model and the student model are pre trained , But the student model has been quantified , Then fine tune under the guidance of the teacher model .

reference

[165] Ullrich K, Meeds E, Welling M. Soft weight-sharing for neural network compression. arXiv Preprint arXiv: 1702.04008, 2017.

[166] Tung F, Mori G. Clip-q: Deep network compression learning by in-parallel pruning-quantization. In: Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition. 2018. 78737882.

[167] Han S, Mao H, Dally WJ. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv Preprint arXiv: 1510.00149, 2015.

[168] Han S, Liu X, Mao H, et al. EIE: Efficient inference engine on compressed deep neural network. ACM SIGARCH Computer Architecture News, 2016,44(3):243254.

[169] Dubey A, Chatterjee M, Ahuja N. Coreset-based neural network compression. In: Proc. of the European Conf. on Computer Vision (ECCV). 2018. 454470.

[170] Louizos C, Ullrich K, Welling M. Bayesian compression for deep learning. In: Advances in Neural Information Processing Systems.\2017. 32883298.

[171] Ji Y, Liang L, Deng L, et al. TETRIS: Tile-matching the tremendous irregular sparsity. In: Advances in Neural Information Processing Systems. 2018. 41154125.

[172] Zhang D, Wang H, Figueiredo M, et al. Learning to share: Simultaneous parameter tying and sparsification in deep learning. In: Proc. of the 6th Int’l Conf. on Learning Representations. 2018.

[173] Polino A, Pascanu R, Alistarh D. Model compression via distillation and quantization. arXiv Preprint arXiv: 1802.05668, 2018.

[174] Mishra A, Marr D. Apprentice: Using knowledge distillation techniques to improve low-precision network accuracy. arXiv Preprint arXiv: 1711.05852, 2017.

边栏推荐

- Leetcode force buckle (Sword finger offer 36-39) 36 Binary search tree and bidirectional linked list 37 Serialize binary tree 38 Arrangement of strings 39 Numbers that appear more than half of the tim

- JNI 初级接触

- POJ 1742 coins (monotone queue solution) [suggestions collection]

- [solution] package 'XXXX' is not in goroot

- 力扣 88.合并两个有序数组

- Cloud 组件发展升级

- vulnhub之school 1

- pom. XML configuration file label: differences between dependencies and dependencymanagement

- Try the tuiroom of Tencent cloud (there is an appointment in the evening, which will be continued...)

- equals 方法

猜你喜欢

CIS芯片测试到底怎么测?

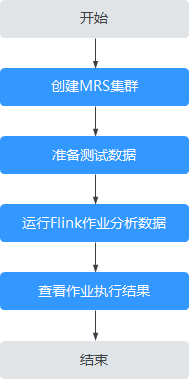

Mrs offline data analysis: process OBS data through Flink job

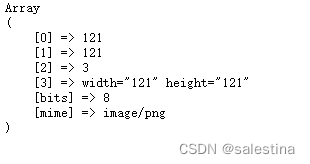

php 获取图片信息的方法

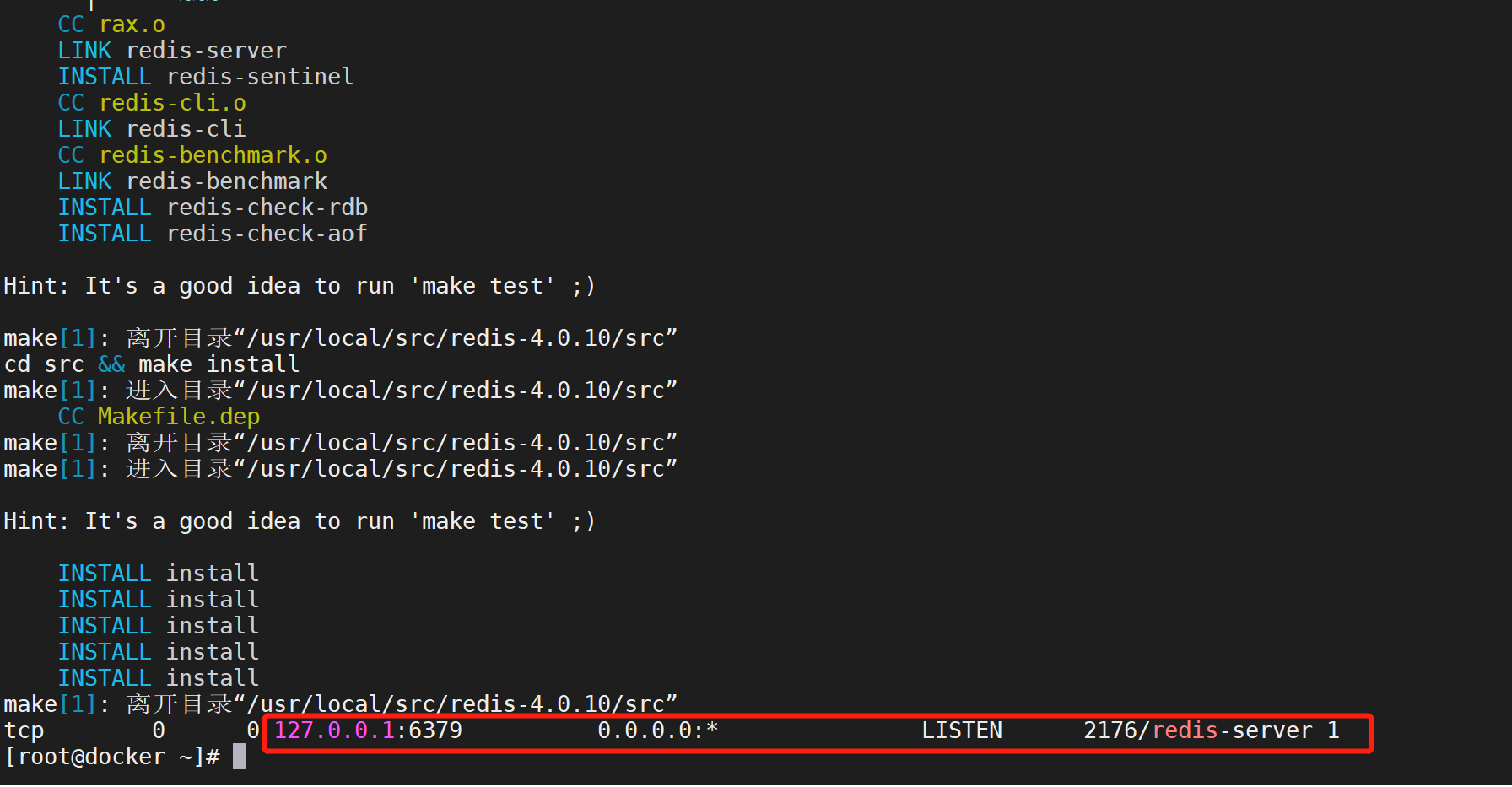

One click deployment of any version of redis

![About cv2 dnn. Readnetfromonnx (path) reports error during processing node with 3 inputs and 1 outputs [exclusive release]](/img/59/33381b8d45401607736f05907ee381.png)

About cv2 dnn. Readnetfromonnx (path) reports error during processing node with 3 inputs and 1 outputs [exclusive release]

CSDN syntax description

vulnhub之school 1

![[philosophy and practice] the way of program design](/img/c8/93f2ac7c5beb95f64b7883ad63c74c.jpg)

[philosophy and practice] the way of program design

With st7008, the Bluetooth test is completely grasped

力扣 599. 两个列表的最小索引总和

随机推荐

力扣 1790. 仅执行一次字符串交换能否使两个字符串相等

php 获取图片信息的方法

torch. nn. functional. Pad (input, pad, mode= 'constant', value=none) record

【Auto.js】自动化脚本

Force buckle 599 Minimum index sum of two lists

CJSON内存泄漏的注意事项

恢复持久卷上的备份数据

Traversal of Oracle stored procedures

When easygbs cascades, how to solve the streaming failure and screen jam caused by the restart of the superior platform?

网络原理(1)——基础原理概述

JVM class loading mechanism

JVM 类加载机制

Implement secondary index with Gaussian redis

Data island is the first danger encountered by enterprises in their digital transformation

Graduation season | regretful and lucky graduation season

POJ 1742 coins (monotone queue solution) [suggestions collection]

Network principle (1) - overview of basic principles

数据孤岛是企业数字化转型遇到的第一道险关

力扣 989. 数组形式的整数加法

PHP method of obtaining image information