当前位置:网站首页>The real king of caching, Google guava is just a brother

The real king of caching, Google guava is just a brother

2022-07-05 13:26:00 【Hollis Chuang】

source :cnblogs.com/rickiyang/

p/11074158.html

1. Caffine Cache Algorithmic advantages -W-TinyLFU

2. Use

2.1 Cache fill policy

2.2 Recovery strategy

3. Remove event monitoring

4. Write to external storage

5. Statistics

3. SpringBoot The default Cache-Caffine Cache

1. Introduce dependencies :

2. Add annotations to enable caching support

3. The configuration file is used to inject the relevant parameters

4. Use annotations to cache Additions and deletions

I just said Guava Cache, His advantage is that it encapsulates get,put operation ; Provides thread safe caching operations ; Provide expiration policy ; Provide recycling strategy ; Cache monitoring . When the cached data exceeds the maximum , Use LRU Algorithm replacement . In this article, we're going to talk about a new local caching framework :Caffeine Cache. It also stands on the shoulders of giants -Guava Cache, With his ideas to optimize the algorithm developed from .

This post mainly introduces Caffine Cache How to use , as well as Caffine Cache stay SpringBoot The use of .

1. Caffine Cache Algorithmic advantages -W-TinyLFU

When it comes to optimization ,Caffine Cache What has been optimized ? We just mentioned LRU, There are also some common cache elimination algorithms FIFO,LFU:

FIFO: fifo , In this elimination algorithm , The first to enter the cache will be eliminated first , It leads to a very low hit rate .

LRU: Least recently used algorithm , Every time we access the data, we put it at the end of our team , If you need to eliminate data , You just need to knock out the team leader . There's still a problem , If there's a data in 1 I visited... In 20 minutes 1000 Time , And then 1 Minutes did not access this data , But there are other data access , This led to the elimination of our hot data .

LFU: Use at least recently , Use extra space to record how often each data is used , Then choose the lowest frequency to eliminate . This avoids LRU Can't deal with time periods .

Each of the three strategies has its own advantages and disadvantages , The cost of implementation is also higher than the other , At the same time, the hit rate is better than one .Guava Cache Although there are so many functions , But it's essentially right LRU Encapsulation , If there is a better algorithm , And it can provide so many functions , By comparison, it's dwarfed .

LFU The limitations of : stay LFU As long as the probability distribution of data access patterns remains constant over time , Its hit rate can become very high . For example, a new play has come out , We use LFU Cache it for him , This new play has been visited hundreds of millions of times in the past few days , This frequency is also in our LFU Hundreds of millions of times . But the new plays always pass , For example, a month later, the first few episodes of this new play are actually out of date , But his visits are really too high , Other TV shows can't eliminate this new play at all , So there are limitations in this model .

LRU The advantages and limitations of :LRU Can be very good to deal with sudden traffic situation , Because he doesn't need data . but LRU Predicting the future with historical data is limited , It thinks that the last data coming is the most likely to be accessed again , To give it the highest priority .

Under the limitation of the existing algorithms , It will cause more or less damage to the hit rate of cache data , And life strategy is an important indicator of cache .HighScalability There's an article on the website , From before Google It was invented by an engineer W-TinyLFU—— A modern cache .Caffine Cache It's based on this algorithm .Caffeine For use Window TinyLfu Recovery strategy , Provides a Near the best hit rate .

When the data access pattern does not change over time ,LFU Can bring the best cache hit ratio . However LFU There are two disadvantages :

First , It needs to maintain frequency information for each entry , Every visit needs to be updated , It's a huge expense ;

secondly , If data access patterns change over time ,LFU You can't change the frequency of , As a result, previously frequently accessed records may occupy the cache , However, the records with more visits in the later period cannot be hit .

therefore , Most cache designs are based on LRU Or a variety of it . by comparison ,LRU There is no need to maintain expensive cache record meta information , At the same time, it can also reflect the data access patterns that change over time . However , Under a lot of loads ,LRU It still needs more space to keep up with LFU Consistent cache hit ratio . therefore , One “ modern ” The cache of , Should be able to combine the strengths of both .

TinyLFU Maintain the frequency information of recent visit records , As a filter , When the new record comes , Only satisfaction TinyLFU Only the required records can be inserted into the cache . As mentioned earlier , As a modern cache , It needs to address two challenges :

One is how to avoid the high cost of maintaining frequency information ;

The other is how to react to access patterns that change over time .

Let's start with the former ,TinyLFU With the help of data streams Sketching technology ,Count-Min Sketch Obviously, it's an effective way to solve this problem , It can store frequency information in much smaller space , And the guarantee is very low False Positive Rate. But considering the second question , It's going to be a lot more complicated , Because we know , whatever Sketching It's difficult for data structures to reflect time changes , stay Bloom Filter aspect , We can have Timing Bloom Filter, But for the CMSketch Come on , How to do Timing CMSketch It's not that easy .TinyLFU A time attenuation design mechanism based on sliding window is adopted , With the aid of a simple reset operation : Add one record at a time to Sketch When , Will add... To a counter 1, When the counter reaches a size W When , Take all the records of Sketch All the values are divided by 2, The reset Operation can play the role of attenuation .

W-TinyLFU It is mainly used to solve some sparse burst access elements . In some scenarios where the number is small but the burst traffic is large ,TinyLFU You will not be able to save such elements , Because they can't accumulate enough frequency in a given time . therefore W-TinyLFU It's a combination LFU and LRU, The former is used for most scenarios , and LRU Used to handle burst traffic .

In the scheme of processing frequency recording , You may think of using hashMap De storage , every last key Corresponding to a frequency value . If the amount of data is very large , Is that it hashMap It's going to be very big . It can be associated with Bloom Filter, For each key, use n individual byte Each store a flag to determine key Is in collection . The principle is to use k individual hash Function to key Hash into an integer .

stay W-TinyLFU Use in Count-Min Sketch Record the frequency of our visits , And this is a variant of the bloon filter . As shown in the figure below :

If you need to record a value , Then we need to go through a variety of Hash The algorithm processes it hash, And then in the corresponding hash In the record of the algorithm +1, Why need more than one hash The algorithm ? Since this is a compression algorithm, there must be conflicts , For example, we set up a byte Array of , By calculating the hash The location of . Such as zhang SAN and li si , It's possible that the two of them hash The values are the same , For example, all of them are 1 that byte[1] This position will increase the corresponding frequency , Zhang San visits 1 Ten thousand times , Li Si visits 1 Second, that byte[1] This position is 1 Ten thousand 1, If you take Li Si's interview rating, you will find that it is 1 Ten thousand 1, But Li Si only visited 1 Time , To solve this problem , So we used a lot of hash The algorithm can be understood as long[][] A concept of two-dimensional arrays , For example, in the first algorithm, Zhang San and Li Si conflict , But in the second one , The big probability in the third one doesn't conflict , For example, an algorithm might have 1% The probability of conflict , The probability that the four algorithms will collide is 1% The fourth power of . Through this mode, we take all the algorithms when we get the access rate of Li Si , The lowest frequency of Li Si's visits . So his name is Count-Min Sketch.

2. Use

Caffeine Cache Of github Address : Am I .

The latest version is :

<dependency>

<groupId>com.github.ben-manes.caffeine</groupId>

<artifactId>caffeine</artifactId>

<version>2.6.2</version>

</dependency>2.1 Cache fill policy

Caffeine Cache Three cache filling strategies are provided : Manual 、 Synchronous loading and asynchronous loading .

1. Manual loading

In every time get key When specifying a synchronous function , If key If it doesn't exist, call this function to generate a value .

/**

* Manual loading

* @param key

* @return

*/

public Object manulOperator(String key) {

Cache<String, Object> cache = Caffeine.newBuilder()

.expireAfterWrite(1, TimeUnit.SECONDS)

.expireAfterAccess(1, TimeUnit.SECONDS)

.maximumSize(10)

.build();

// If one key non-existent , Then it will enter the specified function generation value

Object value = cache.get(key, t -> setValue(key).apply(key));

cache.put("hello",value);

// Determine whether there is any, if not, return null

Object ifPresent = cache.getIfPresent(key);

// Remove a key

cache.invalidate(key);

return value;

}

public Function<String, Object> setValue(String key){

return t -> key + "value";

}2. Synchronous loading

structure Cache When ,build Method passes in a CacheLoader Implementation class . Realization load Method , adopt key load value.

/**

* Synchronous loading

* @param key

* @return

*/

public Object syncOperator(String key){

LoadingCache<String, Object> cache = Caffeine.newBuilder()

.maximumSize(100)

.expireAfterWrite(1, TimeUnit.MINUTES)

.build(k -> setValue(key).apply(key));

return cache.get(key);

}

public Function<String, Object> setValue(String key){

return t -> key + "value";

}3. Load asynchronously

AsyncLoadingCache It is inherited from LoadingCache Class , Asynchronous loading uses Executor To call a method and return a CompletableFuture. Asynchronous load caching uses a reactive programming model .

If you want to call it synchronously , Shall provide CacheLoader. When expressed asynchronously , One should be provided AsyncCacheLoader, And return a CompletableFuture.

/**

* Load asynchronously

*

* @param key

* @return

*/

public Object asyncOperator(String key){

AsyncLoadingCache<String, Object> cache = Caffeine.newBuilder()

.maximumSize(100)

.expireAfterWrite(1, TimeUnit.MINUTES)

.buildAsync(k -> setAsyncValue(key).get());

return cache.get(key);

}

public CompletableFuture<Object> setAsyncValue(String key){

return CompletableFuture.supplyAsync(() -> {

return key + "value";

});

}2.2 Recovery strategy

Caffeine Provides 3 Two recycling strategies : Size based recycling , Time based recycling , Based on reference recycling .

1. Size based expiration method

There are two ways to recycle based on size : One is based on cache size , One is based on weight .

// Evict based on cached count

LoadingCache<String, Object> cache = Caffeine.newBuilder()

.maximumSize(10000)

.build(key -> function(key));

// Evict based on cache weight ( Weights are only used to determine cache size , Will not be used to determine whether the cache is evicted )

LoadingCache<String, Object> cache1 = Caffeine.newBuilder()

.maximumWeight(10000)

.weigher(key -> function1(key))

.build(key -> function(key));maximumWeight And maximumSize You can't use it at the same time .

2. Time based expiration methods

// Exit based on fixed expiration strategy

LoadingCache<String, Object> cache = Caffeine.newBuilder()

.expireAfterAccess(5, TimeUnit.MINUTES)

.build(key -> function(key));

LoadingCache<String, Object> cache1 = Caffeine.newBuilder()

.expireAfterWrite(10, TimeUnit.MINUTES)

.build(key -> function(key));

// Exit based on different expiration strategies

LoadingCache<String, Object> cache2 = Caffeine.newBuilder()

.expireAfter(new Expiry<String, Object>() {

@Override

public long expireAfterCreate(String key, Object value, long currentTime) {

return TimeUnit.SECONDS.toNanos(seconds);

}

@Override

public long expireAfterUpdate(@Nonnull String s, @Nonnull Object o, long l, long l1) {

return 0;

}

@Override

public long expireAfterRead(@Nonnull String s, @Nonnull Object o, long l, long l1) {

return 0;

}

}).build(key -> function(key));Caffeine Three timing evictions are provided :

expireAfterAccess(long, TimeUnit): Start timing after the last access or write , Expire after a specified time . If there has been a request to visit the key, Then the cache will never expire .expireAfterWrite(long, TimeUnit): Start timing after the last write to the cache , Expire after a specified time .expireAfter(Expiry): Custom policy , The expiration date is from Expiry Achieve independent computing . The cache delete strategy uses lazy delete and scheduled delete . The time complexity of these two deletion strategies is O(1).

3. Reference based expiration methods

Java There are four types of references in

| Reference type | Garbage collection time | purpose | Time to live |

|---|---|---|---|

| Strong citation Strong Reference | Never | The general state of an object | JVM To stop when running |

| Soft citation Soft Reference | When there is not enough memory | Object caching | Terminate when out of memory |

| Weak reference Weak Reference | During garbage collection | Object caching | gc Termination after operation |

| Virtual reference Phantom Reference | Never | Virtual references can be used to track the activity of objects being recycled by the garbage collector , When an object associated with a virtual reference is collected by the garbage collector, a system notification is received | JVM To stop when running |

// When key and value When there is no reference, the cache is evicted

LoadingCache<String, Object> cache = Caffeine.newBuilder()

.weakKeys()

.weakValues()

.build(key -> function(key));

// Eviction when the garbage collector needs to free memory

LoadingCache<String, Object> cache1 = Caffeine.newBuilder()

.softValues()

.build(key -> function(key));Be careful :AsyncLoadingCache Weak references and soft references are not supported .

Caffeine.weakKeys(): Use weak reference storage key. If there is no other place for this key There is a strong reference to , Then the cache will be recycled by the garbage collector . Because the garbage collector only depends on identity (identity) equal , So this causes the entire cache to use identity (==) To compare key, Instead of using equals().

Caffeine.weakValues() : Use weak reference storage value. If there is no other place for this value There is a strong reference to , Then the cache will be recycled by the garbage collector . Because the garbage collector only depends on identity (identity) equal , So this causes the entire cache to use identity (==) To compare key, Instead of using equals().

Caffeine.softValues() : Use soft reference storage value. When the memory is full , Soft references to objects that will use the least recent use (least-recently-used ) The way of garbage collection . Because the use of soft references is to wait until the memory is full before recycling , So we usually recommend that you configure the cache to use the maximum amount of memory .softValues() Will use identity equal (identity) (==) instead of equals() To compare the value of .

Caffeine.weakValues() and Caffeine.softValues() It can't be used together .

3. Remove event monitoring

Cache<String, Object> cache = Caffeine.newBuilder()

.removalListener((String key, Object value, RemovalCause cause) ->

System.out.printf("Key %s was removed (%s)%n", key, cause))

.build();4. Write to external storage

CacheWriter Method can write all the data in the cache to a third party .

LoadingCache<String, Object> cache2 = Caffeine.newBuilder()

.writer(new CacheWriter<String, Object>() {

@Override public void write(String key, Object value) {

// Write to external storage

}

@Override public void delete(String key, Object value, RemovalCause cause) {

// Remove external storage

}

})

.build(key -> function(key));If you have multilevel caching , This method is still very practical .

Be careful :CacheWriter It can't be associated with weak bonds or AsyncLoadingCache Use it together .

5. Statistics

And Guava Cache The statistics are the same .

Cache<String, Object> cache = Caffeine.newBuilder()

.maximumSize(10_000)

.recordStats()

.build();By using Caffeine.recordStats(), It can be transformed into a set of Statistics . adopt Cache.stats() Return to one CacheStats.CacheStats Provide the following statistical methods :

hitRate(): Returns the cache hit ratio

evictionCount(): Number of cache recycles

averageLoadPenalty(): Average time to load new values 3. SpringBoot The default Cache-Caffine Cache

SpringBoot 1.x Default local in version cache yes Guava Cache. stay 2.x(Spring Boot 2.0(spring 5) ) Already used in version Caffine Cache To replace the Guava Cache. After all, there is a better cache elimination strategy .

Let's talk about SpringBoot2.x How to use cache.

1. Introduce dependencies :

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-cache</artifactId>

</dependency>

<dependency>

<groupId>com.github.ben-manes.caffeine</groupId>

<artifactId>caffeine</artifactId>

<version>2.6.2</version>

</dependency>2. Add annotations to enable caching support

add to @EnableCaching annotation :

@SpringBootApplication

@EnableCaching

public class SingleDatabaseApplication {

public static void main(String[] args) {

SpringApplication.run(SingleDatabaseApplication.class, args);

}

}3. The configuration file is used to inject the relevant parameters

properties file

spring.cache.cache-names=cache1

spring.cache.caffeine.spec=initialCapacity=50,maximumSize=500,expireAfterWrite=10sor Yaml file

spring:

cache:

type: caffeine

cache-names:

- userCache

caffeine:

spec: maximumSize=1024,refreshAfterWrite=60sIf you use refreshAfterWrite To configure , Must specify a CacheLoader. If you don't use this configuration, you don't need this bean, As mentioned above , The CacheLoader All caches managed by the cache manager... Will be associated , So it has to be defined as CacheLoader<Object, Object>, Autoconfig will ignore all generic types .

import com.github.benmanes.caffeine.cache.CacheLoader;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

/**

* @author: rickiyang

* @date: 2019/6/15

* @description:

*/

@Configuration

public class CacheConfig {

/**

* It's equivalent to building LoadingCache When the object build() Method to specify the load policy method after expiration

* This must be specified Bean,refreshAfterWrite=60s Attribute is valid

* @return

*/

@Bean

public CacheLoader<String, Object> cacheLoader() {

CacheLoader<String, Object> cacheLoader = new CacheLoader<String, Object>() {

@Override

public Object load(String key) throws Exception {

return null;

}

// Overriding this method will oldValue Value back to , And then refresh the cache

@Override

public Object reload(String key, Object oldValue) throws Exception {

return oldValue;

}

};

return cacheLoader;

}

}Caffeine Common configuration instructions :

initialCapacity=[integer]: Initial cache size

maximumSize=[long]: The maximum number of buffers

maximumWeight=[long]: The maximum weight of the cache

expireAfterAccess=[duration]: Expires for a fixed time after the last write or access

expireAfterWrite=[duration]: The last write expires after a fixed time

refreshAfterWrite=[duration]: There is a fixed time interval after the cache is created or last updated , Refresh cache

weakKeys: open key The weak references

weakValues: open value The weak references

softValues: open value The soft references

recordStats: Develop statistical functions

Be careful :

expireAfterWrite and expireAfterAccess Simultaneous existence , With expireAfterWrite Subject to .

maximumSize and maximumWeight You can't use it at the same time

weakValues and softValues You can't use it at the same time It should be noted that , Use configuration file to configure cache items , In general, it can meet the needs of use , But flexibility is not very high , If we have a lot of cache entries, writing them will result in a long configuration file . So in general, you can also choose to use bean To initialize Cache example .

The following demonstration uses bean The way to inject :

package com.rickiyang.learn.cache;

import com.github.benmanes.caffeine.cache.CacheLoader;

import com.github.benmanes.caffeine.cache.Caffeine;

import org.apache.commons.compress.utils.Lists;

import org.springframework.cache.CacheManager;

import org.springframework.cache.caffeine.CaffeineCache;

import org.springframework.cache.support.SimpleCacheManager;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.TimeUnit;

/**

* @author: rickiyang

* @date: 2019/6/15

* @description:

*/

@Configuration

public class CacheConfig {

/**

* Create based on Caffeine Of Cache Manager

* Initialize some key Deposit in

* @return

*/

@Bean

@Primary

public CacheManager caffeineCacheManager() {

SimpleCacheManager cacheManager = new SimpleCacheManager();

ArrayList<CaffeineCache> caches = Lists.newArrayList();

List<CacheBean> list = setCacheBean();

for(CacheBean cacheBean : list){

caches.add(new CaffeineCache(cacheBean.getKey(),

Caffeine.newBuilder().recordStats()

.expireAfterWrite(cacheBean.getTtl(), TimeUnit.SECONDS)

.maximumSize(cacheBean.getMaximumSize())

.build()));

}

cacheManager.setCaches(caches);

return cacheManager;

}

/**

* Initialize some cached key

* @return

*/

private List<CacheBean> setCacheBean(){

List<CacheBean> list = Lists.newArrayList();

CacheBean userCache = new CacheBean();

userCache.setKey("userCache");

userCache.setTtl(60);

userCache.setMaximumSize(10000);

CacheBean deptCache = new CacheBean();

deptCache.setKey("userCache");

deptCache.setTtl(60);

deptCache.setMaximumSize(10000);

list.add(userCache);

list.add(deptCache);

return list;

}

class CacheBean {

private String key;

private long ttl;

private long maximumSize;

public String getKey() {

return key;

}

public void setKey(String key) {

this.key = key;

}

public long getTtl() {

return ttl;

}

public void setTtl(long ttl) {

this.ttl = ttl;

}

public long getMaximumSize() {

return maximumSize;

}

public void setMaximumSize(long maximumSize) {

this.maximumSize = maximumSize;

}

}

} Created a SimpleCacheManager As Cache Management object of , Then two... Are initialized Cache object , Store separately user,dept Cache of type . Of course build Cache The parameter settings I wrote are relatively simple , When you use it, you can configure the parameters according to your needs .

4. Use annotations to cache Additions and deletions

We can use spring Provided @Cacheable、@CachePut、@CacheEvict And other annotations for easy use caffeine cache .

If more than one is used cahce, such as redis、caffeine etc. , You must specify a CacheManage by @primary, stay @Cacheable Annotation does not specify cacheManager Then use the tag primary the .

cache There are mainly the following comments on aspects 5 individual :

@Cacheable Trigger cache entry ( This is generally put on the method of creation and acquisition ,

@CacheableThe annotation will first query whether there is a cache , Can use cache , If not, the method is executed and cached )@CacheEvict Trigger cached eviction( The method used to delete )

@CachePut Update cache without affecting method execution ( The method used to modify , Methods under this annotation are always executed )

@Caching Combining multiple caches on a single method ( This annotation allows a method to set multiple annotations at the same time )

@CacheConfig Set up some cache related common configurations at the class level ( Use with other caches )

The way @Cacheable and @CachePut The difference between :

@Cacheable: Whether its annotation method is executed depends on Cacheable Conditions in , Many times a method may not be implemented .

@CachePut: This annotation does not affect the execution of the method , That is to say, no matter what the conditions of its configuration , Methods are executed , More often than not, it's used to modify .

Briefly Cacheable Use of various methods in the class :

public @interface Cacheable {

/**

* To be used cache Name

*/

@AliasFor("cacheNames")

String[] value() default {};

/**

* Same as value(), Decide to use that / Some cache

*/

@AliasFor("value")

String[] cacheNames() default {};

/**

* Use SpEL Expression to set the value of the cache key, If you do not set the default method, all parameters will be used as key Part of

*/

String key() default "";

/**

* Used to generate key, And key() Can't share

*/

String keyGenerator() default "";

/**

* Set what to use cacheManager, Must be set first cacheManager Of bean, This is using the bean Name

*/

String cacheManager() default "";

/**

* Use cacheResolver To set the cache used , Use the same cacheManager, But with the cacheManager You can't use it at the same time

*/

String cacheResolver() default "";

/**

* Use SpEL Expression sets the condition of the departure cache , Effective before method execution

*/

String condition() default "";

/**

* Use SpEL Set the conditions for starting cache , Here's how the method takes effect after execution , So there can be a method in the condition after execution value

*/

String unless() default "";

/**

* For synchronization , When the cache fails ( There is no expiration date and so on ) When , If multiple threads access the annotated method at the same time

* Only one thread is allowed to execute a method through

*/

boolean sync() default false;

}Annotation based usage :

package com.rickiyang.learn.cache;

import com.rickiyang.learn.entity.User;

import org.springframework.cache.annotation.CacheEvict;

import org.springframework.cache.annotation.CachePut;

import org.springframework.cache.annotation.Cacheable;

import org.springframework.stereotype.Service;

/**

* @author: rickiyang

* @date: 2019/6/15

* @description: Local cache

*/

@Service

public class UserCacheService {

/**

* lookup

* Check the cache first , If you can't find , Will look up the database and store it in the cache

* @param id

*/

@Cacheable(value = "userCache", key = "#id", sync = true)

public void getUser(long id){

// Lookup database

}

/**

* to update / preservation

* @param user

*/

@CachePut(value = "userCache", key = "#user.id")

public void saveUser(User user){

//todo Save database

}

/**

* Delete

* @param user

*/

@CacheEvict(value = "userCache",key = "#user.id")

public void delUser(User user){

//todo Save database

}

}If you don't want to use annotations to manipulate the cache , It can also be used directly SimpleCacheManager Get cached key And then operate .

Notice the above key Used spEL expression .Spring Cache There are some SpEL Context data , The following table is taken directly from Spring Official documents :

| name | Location | describe | Example |

|---|---|---|---|

| methodName | root object | The name of the currently called method | #root.methodname |

| method | root object | Currently called method | #root.method.name |

| target | root object | Currently called target object instance | #root.target |

| targetClass | root object | The class of the target object being called | #root.targetClass |

| args | root object | Parameter list of the currently called method | #root.args[0] |

| caches | root object | The cache list used by the current method call | #root.caches[0].name |

| Argument Name | Execution context | Parameters of the currently called method , Such as findArtisan(Artisan artisan), Can pass #artsian.id Get parameters | #artsian.id |

| result | Execution context | Return value after method execution ( Only if the judgment after method execution is valid , Such as unless cacheEvict Of beforeInvocation=false) | #result |

Be careful :

1. When we want to use root The properties of an object are key We can also “#root” Omit , because Spring The default is root Object properties . Such as

@Cacheable(key = "targetClass + methodName +#p0")2. When using method parameters, we can directly use “# Parameter name ” perhaps “#p Parameters index”. Such as :

@Cacheable(value="userCache", key="#id")

@Cacheable(value="userCache", key="#p0")SpEL Provides a variety of operators

| type | Operator |

|---|---|

| Relationship | <,>,<=,>=,==,!=,lt,gt,le,ge,eq,ne |

| The arithmetic | +,- ,* ,/,%,^ |

| Logic | &&,||,!,and,or,not,between,instanceof |

| Conditions | ?: (ternary),?: (elvis) |

| Regular expressions | matches |

| Other types | ?.,?[…],![…],^[…],$[…] |

End

Previous recommendation

Be called upon to boycott ,7-Zip Pseudo open source still leaves a back door ?

The maximum concurrency of a server tcp How many connections ?65535?

How to choose cache read / write strategies in different business scenarios ?

There is Tao without skill , It can be done with skill ; No way with skill , Stop at surgery

Welcome to pay attention Java Road official account

Good article , I was watching ️

边栏推荐

- Cf:a. the third three number problem

- 龙芯派2代烧写PMON和重装系统

- How to protect user privacy without password authentication?

- APICloud Studio3 API管理与调试使用教程

- 个人组件 - 消息提示

- Word document injection (tracking word documents) incomplete

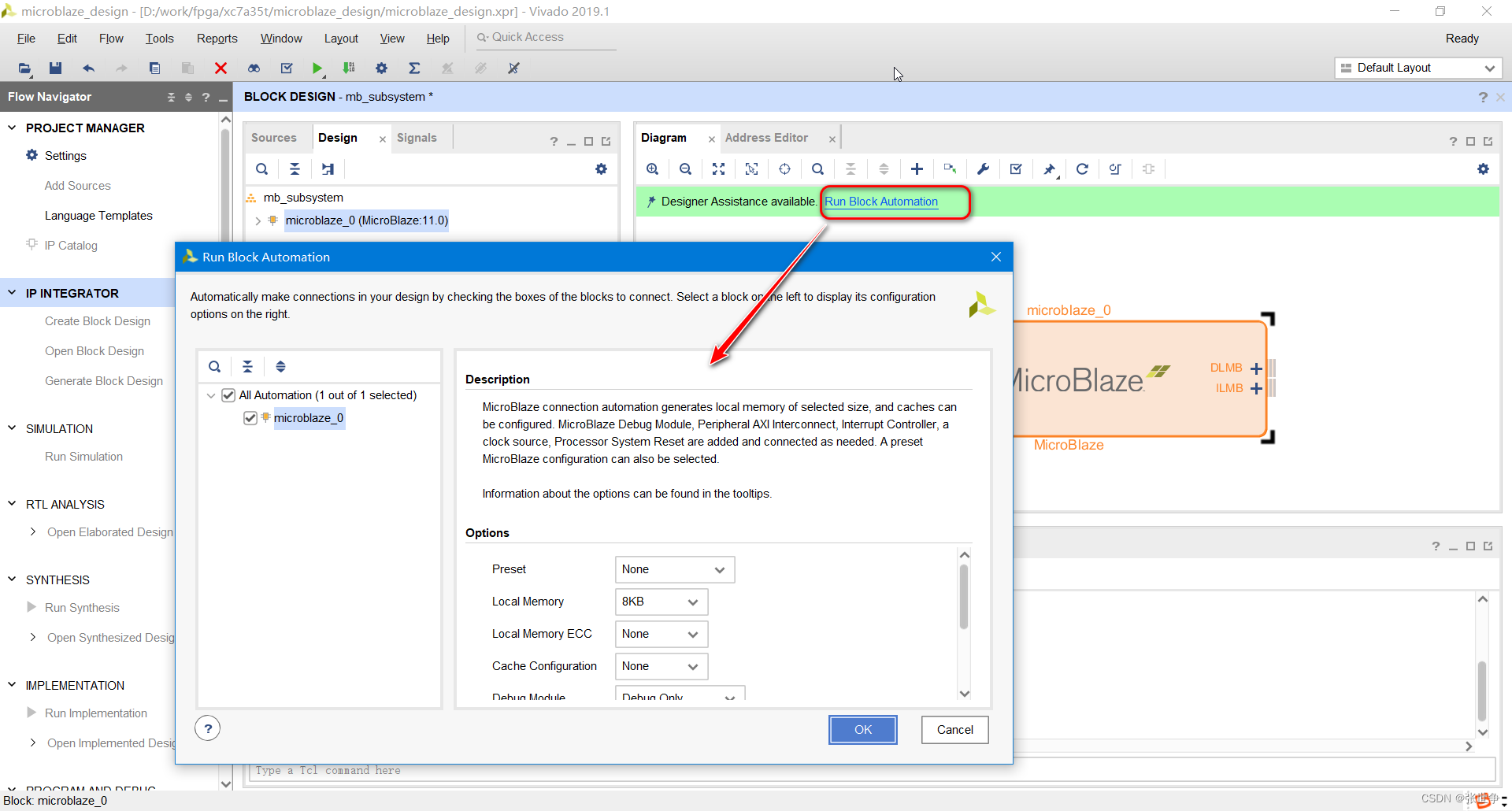

- FPGA learning notes: vivado 2019.1 add IP MicroBlaze

- js判断数组中是否存在某个元素(四种方法)

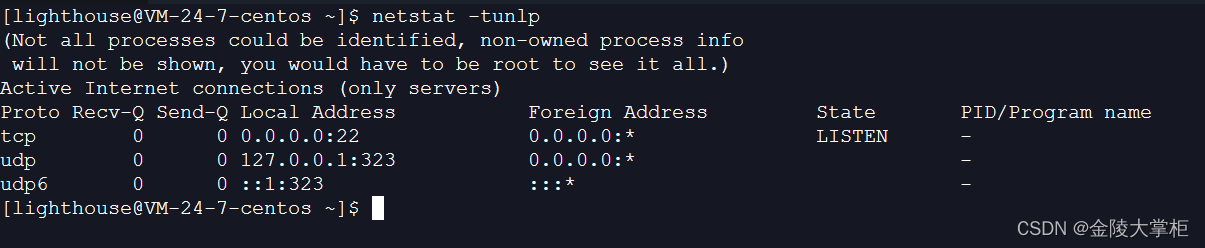

- 初次使用腾讯云,解决只能使用webshell连接,不能使用ssh连接。

- Write API documents first or code first?

猜你喜欢

CAN和CAN FD

stm32逆向入门

What is a network port

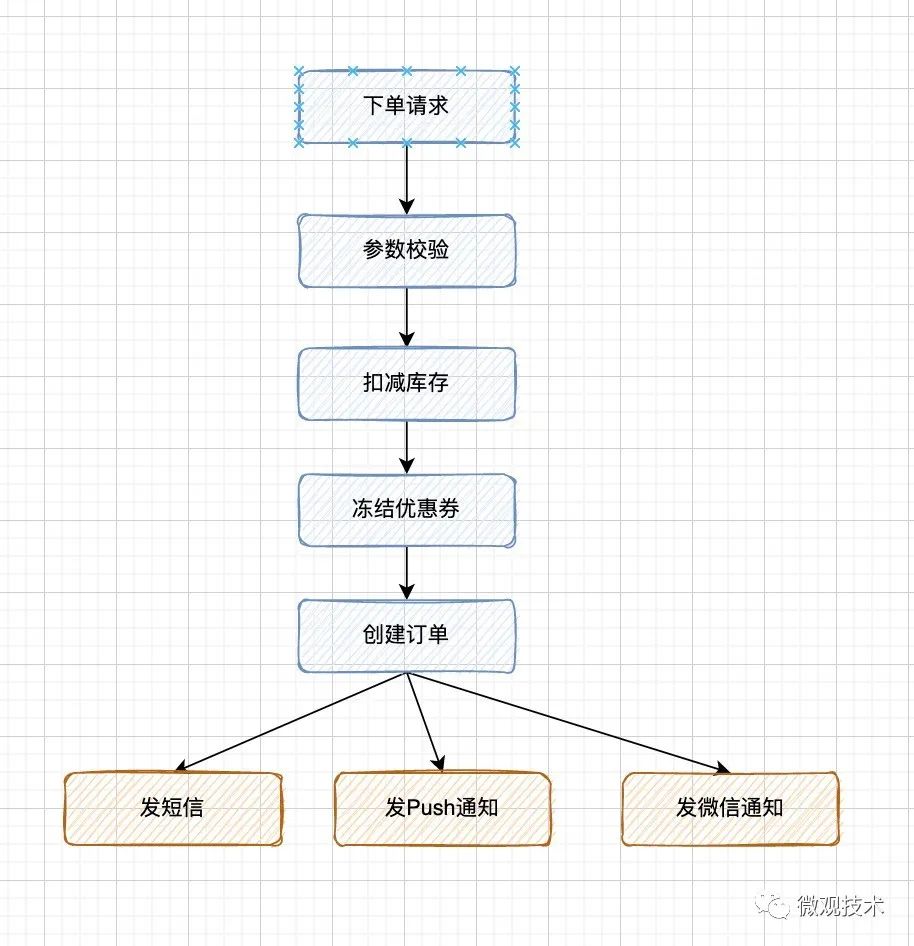

Talk about seven ways to realize asynchronous programming

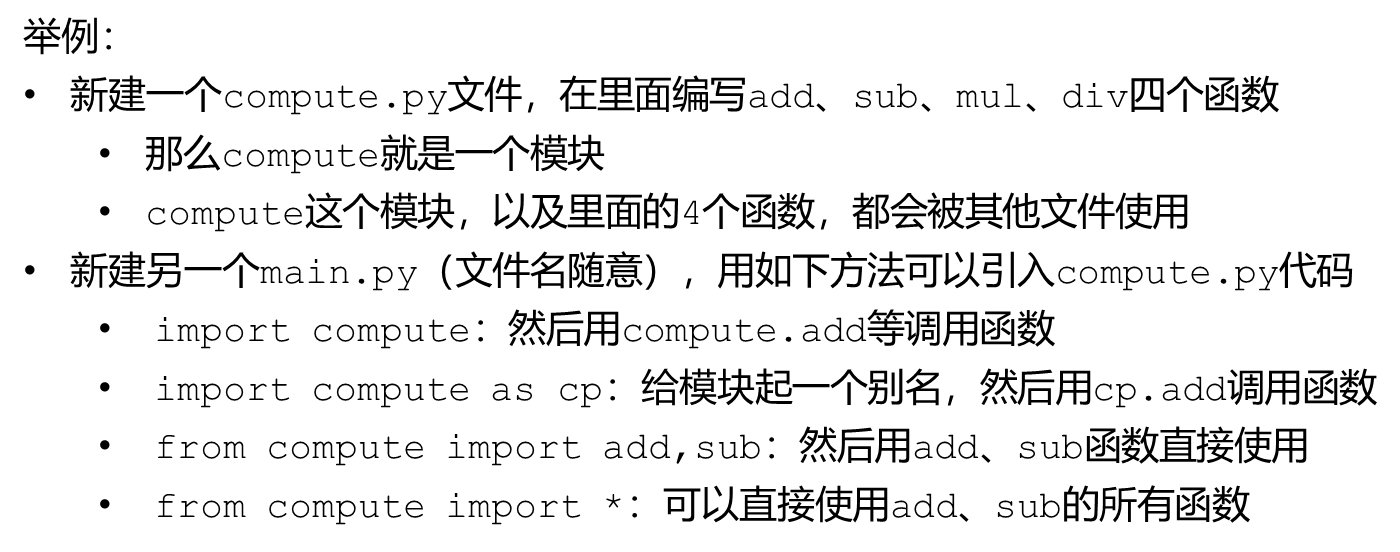

Put functions in modules

Mmseg - Mutli view time series data inspection and visualization

MMSeg——Mutli-view时序数据检查与可视化

FPGA learning notes: vivado 2019.1 add IP MicroBlaze

![[深度学习论文笔记]UCTransNet:从transformer的通道角度重新思考U-Net中的跳跃连接](/img/b6/f9da8a36167db10c9a92dabb166c81.png)

[深度学习论文笔记]UCTransNet:从transformer的通道角度重新思考U-Net中的跳跃连接

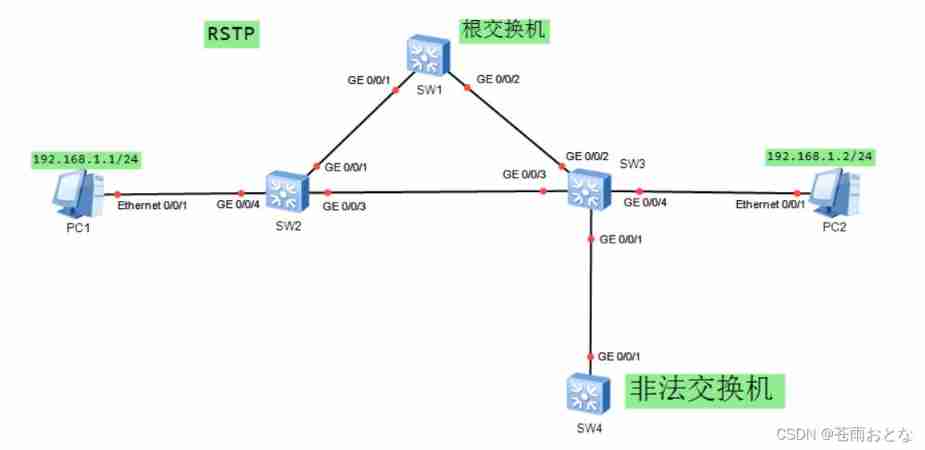

Principle and configuration of RSTP protocol

随机推荐

Cloudcompare - point cloud slice

Talking about fake demand from takeout order

mysql econnreset_Nodejs 套接字报错处理 Error: read ECONNRESET

FPGA 学习笔记:Vivado 2019.1 添加 IP MicroBlaze

Default parameters of function & multiple methods of function parameters

记录一下在深度学习-一些bug处理

[deep learning paper notes] hnf-netv2 for segmentation of brain tumors using multimodal MR imaging

今年上半年,通信行业发生了哪些事?

49. Grouping of alphabetic ectopic words: give you a string array, please combine the alphabetic ectopic words together. You can return a list of results in any order. An alphabetic ectopic word is a

Cf:a. the third three number problem

RHCSA10

时钟周期

Hundred days to complete the open source task of the domestic database opengauss -- openguass minimalist version 3.0.0 installation tutorial

Alibaba cloud SLB load balancing product basic concept and purchase process

[深度学习论文笔记]使用多模态MR成像分割脑肿瘤的HNF-Netv2

南理工在线交流群

山东大学暑期实训一20220620

蜀天梦图×微言科技丨达梦图数据库朋友圈+1

go map

Jenkins installation