
Masked Autoencoders Are Scalable Vision Learners (MAE)

2022-07-05 22:46:00 【Lian Li o】


Introduction

- In the ViT paper, the authors left self-supervised learning as an open problem, and MAE (Masked AutoEncoders) is the work that fills this gap. Using an autoencoder, it achieves results on ImageNet comparable to supervised learning, laying a solid foundation for later large-scale model training in CV.

In "Autoencoders", "Auto" means "self": the learning is self-supervised, and the labels the model learns from come from the image itself.

Approach

Motivation

- MAE is similar to the masked language modeling (MLM) used in BERT: it brings masked autoencoding into CV for self-supervised learning. Before describing MAE's architecture, let us look at how masked autoencoding differs between NLP and CV:

- (1) Architectural gap: CNNs have long been the dominant models in CV, and integrating mask tokens into convolutions is not straightforward. Fortunately, Vision Transformers (ViT) have removed this obstacle.

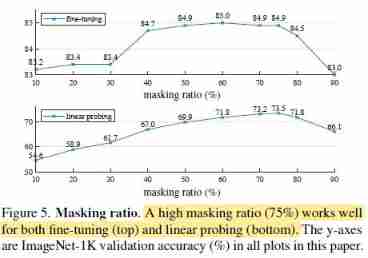

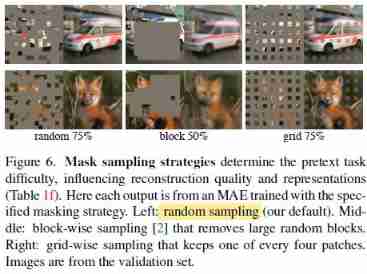

- (2) Information density: language and vision have different information densities. Language is a human-generated signal that is highly semantic and information-dense. When a model is trained to predict a few missing words in a sentence, the task requires sophisticated language understanding. Images, in contrast, are natural signals with heavy spatial redundancy: a missing pixel can often be recovered by simple interpolation from its neighbors, without any high-level understanding of the image. A very simple strategy addresses this: randomly mask a very large fraction of the patches. This largely removes the redundancy and forces the model to develop a holistic understanding of the image. (In the following figure, from left to right: masked image, MAE reconstruction, and ground truth. The masking ratio is 80%.)

- (3) The autoencoder's decoder: the decoder maps the latent representation produced by the encoder back to the input space. In language tasks the decoder reconstructs words, which carry rich semantic information, so BERT's decoder is just an MLP. In vision tasks the decoder reconstructs pixels, which have a low semantic level. The decoder design therefore matters: it must be expressive enough to turn the encoder's latent representation back into low-level information.
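The sketch below is a toy illustration of the information-density point in (2). It assumes a smooth synthetic image (a linear intensity ramp) and uses plain 4-neighbour averaging as the "interpolation". Neither the image nor the helper `interpolate` comes from the MAE paper. A single masked pixel is recovered exactly from its neighbours. A pixel inside a masked 16×16 patch, by contrast, has no visible neighbours left to interpolate from.

```python
def make_ramp_image(h, w):
    # Hypothetical smooth "image": intensity increases linearly with row + column.
    return [[float(r + c) for c in range(w)] for r in range(h)]


def make_mask(h, w, masked_cells):
    # mask[r][c] is True when pixel (r, c) is hidden from the model.
    mask = [[False] * w for _ in range(h)]
    for r, c in masked_cells:
        mask[r][c] = True
    return mask


def interpolate(img, mask, r, c):
    """Average of the visible 4-neighbours of (r, c); None if all are masked."""
    values = []
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr, cc = r + dr, c + dc
        if 0 <= rr < len(img) and 0 <= cc < len(img[0]) and not mask[rr][cc]:
            values.append(img[rr][cc])
    return sum(values) / len(values) if values else None


img = make_ramp_image(32, 32)

# Case 1: hide one pixel; its neighbours recover it exactly.
pixel_mask = make_mask(32, 32, [(10, 10)])
print(img[10][10], interpolate(img, pixel_mask, 10, 10))  # 20.0 20.0

# Case 2: hide a whole 16x16 patch; an interior pixel has no visible neighbours.
patch_mask = make_mask(32, 32, [(r, c) for r in range(16) for c in range(16)])
print(interpolate(img, patch_mask, 8, 8))  # None
```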

MAE

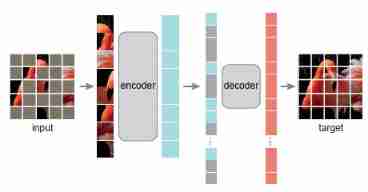

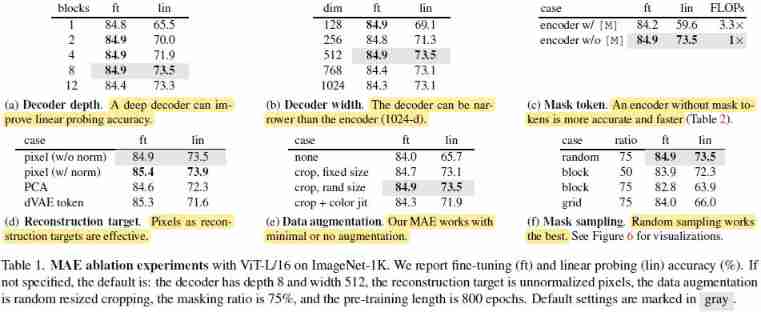

- MAE is a simple autoencoder. Like MLM in BERT, it learns in a self-supervised way by randomly masking some patches of the image and reconstructing them. Based on the analysis above, the design is as follows:

- (1) MAE randomly masks a large fraction of the input image patches (e.g., 75%) and reconstructs the masked patches in pixel space. The mask token is a learnable vector.

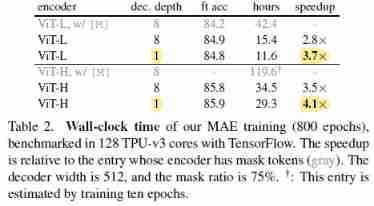

- (2) An asymmetric encoder-decoder design: the encoder operates only on the visible (unmasked) patches. A more lightweight decoder then reconstructs all patches from the encoded visible patches plus mask tokens, and positional embeddings are added to all of them. This asymmetric design saves a large amount of computation and further improves MAE's scalability. ("Asymmetric" means the encoder sees only part of the patches while the decoder sees all of them. The decoder, which processes every patch, is the lighter of the two.)

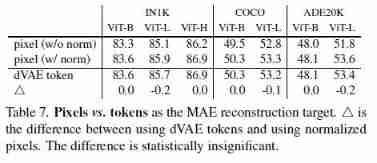

- (3) Loss function: the last layer of the decoder is a linear layer whose outputs are the pixel values of each patch. The loss is the mean squared error (MSE), computed only on the masked patches (This choice is purely result-driven: computing the loss on all pixels leads to a slight decrease in accuracy (e.g., ∼0.5%).). A variant is also studied in which the decoder predicts the normalized pixel values of each masked patch, and this improves representation quality. This variant requires the mean and variance of each masked patch to be known in advance, so it cannot be used directly to produce image reconstructions.
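A minimal sketch of the loss in (3) follows. It is written in plain Python for readability and is not the authors' implementation. Patches are flat lists of pixel values, and `mask[i]` is 1 for a masked patch. The optional `norm_pix` flag normalizes each target patch by its own mean and (population) variance. The `eps` value and all function names are assumptions.

```python
def normalize_patch(patch, eps=1e-6):
    # Per-patch normalization: subtract the patch mean, divide by its std.
    mean = sum(patch) / len(patch)
    var = sum((x - mean) ** 2 for x in patch) / len(patch)
    return [(x - mean) / (var + eps) ** 0.5 for x in patch]


def masked_mse(pred, target, mask, norm_pix=False):
    """Mean over masked patches of the per-patch mean squared error.

    pred, target: lists of patches, each a flat list of pixel values.
    mask: list with 1 for masked patches (counted) and 0 for visible ones (ignored).
    """
    total, count = 0.0, 0
    for p, t, m in zip(pred, target, mask):
        if not m:
            continue  # visible patches do not contribute to the loss
        if norm_pix:
            t = normalize_patch(t)
        total += sum((a - b) ** 2 for a, b in zip(p, t)) / len(t)
        count += 1
    return total / count if count else 0.0


pred = [[0.0, 0.0], [1.0, 1.0]]
target = [[5.0, 5.0], [1.0, 3.0]]
mask = [0, 1]  # only the second patch was masked
print(masked_mse(pred, target, mask))  # 2.0
```

The large error on the first (visible) patch is ignored entirely. Only the masked patch contributes: ((1-1)² + (1-3)²) / 2 = 2.0.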

After pre-training, the decoder is discarded and the encoder is applied to uncorrupted images (full sets of patches) for recognition tasks.
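A little arithmetic makes the compute saving of the asymmetric design concrete. The sketch below assumes a 224×224 input split into 16×16 patches (as in ViT-L/16) and the 75% masking ratio. It counts tokens and the entries of one self-attention score matrix, which grows with the square of the token count. It ignores the class token, the decoder, and all other layers.

```python
def token_counts(image_size=224, patch_size=16, mask_ratio=0.75):
    # Number of patch tokens in the full image and the number left visible after masking.
    num_patches = (image_size // patch_size) ** 2
    num_visible = int(num_patches * (1 - mask_ratio))
    return num_patches, num_visible


num_patches, num_visible = token_counts()
full_attn = num_patches ** 2     # attention score entries on the full set of patches
masked_attn = num_visible ** 2   # entries when the encoder only sees visible patches
print(num_patches, num_visible)  # 196 49
print(full_attn, masked_attn, full_attn / masked_attn)  # 38416 2401 16.0
```

Token-wise layers (projections and MLPs) scale linearly with the token count, so they see a 4× reduction instead of 16×. After pre-training, the encoder processes all 196 patches again.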

Simple implementation (no sparse operations are needed)

- (1) Generate a token for every input patch with a linear projection plus a positional embedding.

- (2) Randomly shuffle the token sequence and, according to the masking ratio, remove the last large portion of tokens. The remaining tokens form the visible subset that is fed to the encoder. After encoding, append mask tokens to the sequence of encoded patches, unshuffle the full sequence back to its original order, add positional embeddings, and feed it to the decoder. (This simple implementation introduces negligible overhead as the shuffling and unshuffling operations are fast.)
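The two steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the official code. Tokens are arbitrary Python objects, the hypothetical `encoder` is only a stand-in that upper-cases letters, and positional embeddings are left out. `ids_restore[i]` records where original token `i` ended up after shuffling, and that is all that is needed to put mask tokens back in place.

```python
import random


def random_masking(tokens, mask_ratio, rng):
    """Shuffle, keep the first part, and remember how to undo the shuffle."""
    n = len(tokens)
    len_keep = int(n * (1 - mask_ratio))
    ids_shuffle = list(range(n))
    rng.shuffle(ids_shuffle)
    # ids_restore[i] = position of original token i in the shuffled order.
    ids_restore = [0] * n
    for pos, idx in enumerate(ids_shuffle):
        ids_restore[idx] = pos
    visible = [tokens[i] for i in ids_shuffle[:len_keep]]
    # mask[i] = 1 if original token i was dropped, 0 if it is visible.
    mask = [0 if ids_restore[i] < len_keep else 1 for i in range(n)]
    return visible, mask, ids_restore


def unshuffle_with_mask_tokens(encoded, mask_token, ids_restore):
    """Append mask tokens after the encoded visible tokens, then undo the shuffle."""
    n = len(ids_restore)
    shuffled = list(encoded) + [mask_token] * (n - len(encoded))
    return [shuffled[ids_restore[i]] for i in range(n)]


def encoder(tokens):
    # Hypothetical stand-in for the ViT encoder: just marks tokens as "encoded".
    return [t.upper() for t in tokens]


rng = random.Random(0)
tokens = list("abcdefgh")  # 8 patch tokens
visible, mask, ids_restore = random_masking(tokens, mask_ratio=0.75, rng=rng)
decoder_input = unshuffle_with_mask_tokens(encoder(visible), "[M]", ids_restore)
# 2 tokens stay visible; the decoder input has "[M]" at the other 6 original positions.
print(visible, decoder_input)
```

In batched tensor code, the same permutation is commonly obtained by `argsort`-ing per-sample random noise. `argsort` of that permutation then gives `ids_restore`, so no sparse operations are needed.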

Experiments

ImageNet Experiments

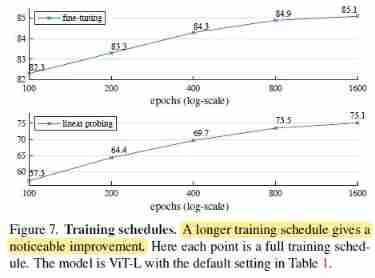

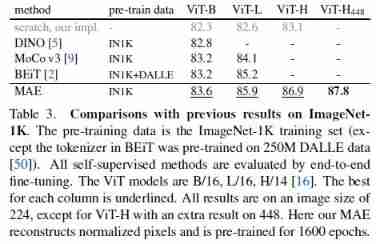

- Self-supervised pre-training is done on the ImageNet-1K training set. It is followed by supervised training on the same dataset to evaluate the learned representations. Two supervised protocols are used: (1) end-to-end fine-tuning, where all model parameters may be updated; (2) linear probing, where only the parameters of the final linear layer are updated.
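The sketch below makes the difference between the two protocols concrete by counting trainable parameters for ViT-L/16. It assumes a standard ViT-L configuration: width 1024, 24 blocks, MLP ratio 4, 224×224 input, a class token, and learnable position embeddings. It also assumes a 1000-way linear head. The helper names are hypothetical. The exact count in a particular codebase may differ slightly, for example with global pooling or an extra normalization layer before the head.

```python
def vit_encoder_params(dim=1024, depth=24, mlp_ratio=4, patch=16, img=224, in_chans=3):
    hidden = dim * mlp_ratio
    per_block = (
        2 * dim                      # LayerNorm before attention
        + dim * 3 * dim + 3 * dim    # qkv projection (weights + biases)
        + dim * dim + dim            # attention output projection
        + 2 * dim                    # LayerNorm before MLP
        + dim * hidden + hidden      # MLP fc1
        + hidden * dim + dim         # MLP fc2
    )
    num_patches = (img // patch) ** 2
    patch_embed = in_chans * patch * patch * dim + dim  # linear patch projection
    cls_and_pos = dim + (num_patches + 1) * dim         # class token + position embeddings
    final_norm = 2 * dim
    return depth * per_block + patch_embed + cls_and_pos + final_norm


def linear_head_params(dim=1024, num_classes=1000):
    return dim * num_classes + num_classes


def trainable_params(protocol):
    encoder, head = vit_encoder_params(), linear_head_params()
    if protocol == "fine-tuning":
        return encoder + head   # every parameter is updated
    if protocol == "linear-probing":
        return head             # encoder frozen, only the last linear layer is trained
    raise ValueError(f"unknown protocol: {protocol}")


print(trainable_params("fine-tuning"))     # 304326632
print(trainable_params("linear-probing"))  # 1025000
```

Under these assumptions, linear probing updates roughly 0.3% of the parameters that fine-tuning updates. It therefore measures how linearly separable the frozen features are.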

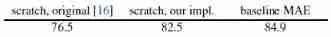

- Baseline: ViT-Large (ViT-L/16). ViT-L is very large and overfits easily on ImageNet. For that reason it was trained from scratch with strong regularization (scratch, our impl.) for 200 epochs. Even so, its result is still worse than MAE fine-tuned for only 50 epochs.

Main Properties

Comparisons with Previous Results

Comparisons with self-supervised methods

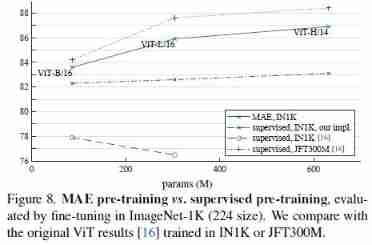

Comparisons with supervised pre-training

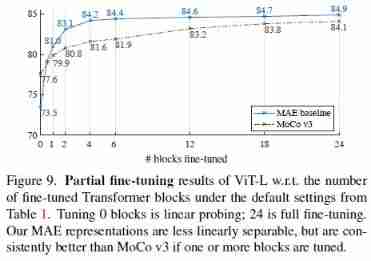

Partial Fine-tuning

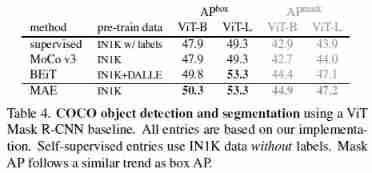

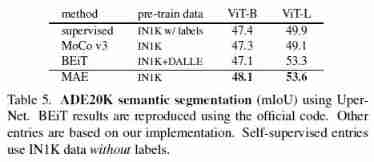

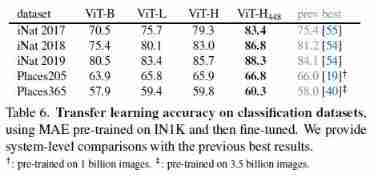

Transfer Learning Experiments


边栏推荐

- All expansion and collapse of a-tree

- What changes has Web3 brought to the Internet?

- [Chongqing Guangdong education] National Open University autumn 2018 0088-21t Insurance Introduction reference questions

- Postman core function analysis - parameterization and test report

- Postman核心功能解析-参数化和测试报告

- Unity Max and min constraint adjustment

- 谷歌地图案例

- Thinkphp5.1 cross domain problem solving

- d3dx9_ What if 29.dll is missing? System missing d3dx9_ Solution of 29.dll file

- [groovy] mop meta object protocol and meta programming (execute groovy methods through metamethod invoke)

猜你喜欢

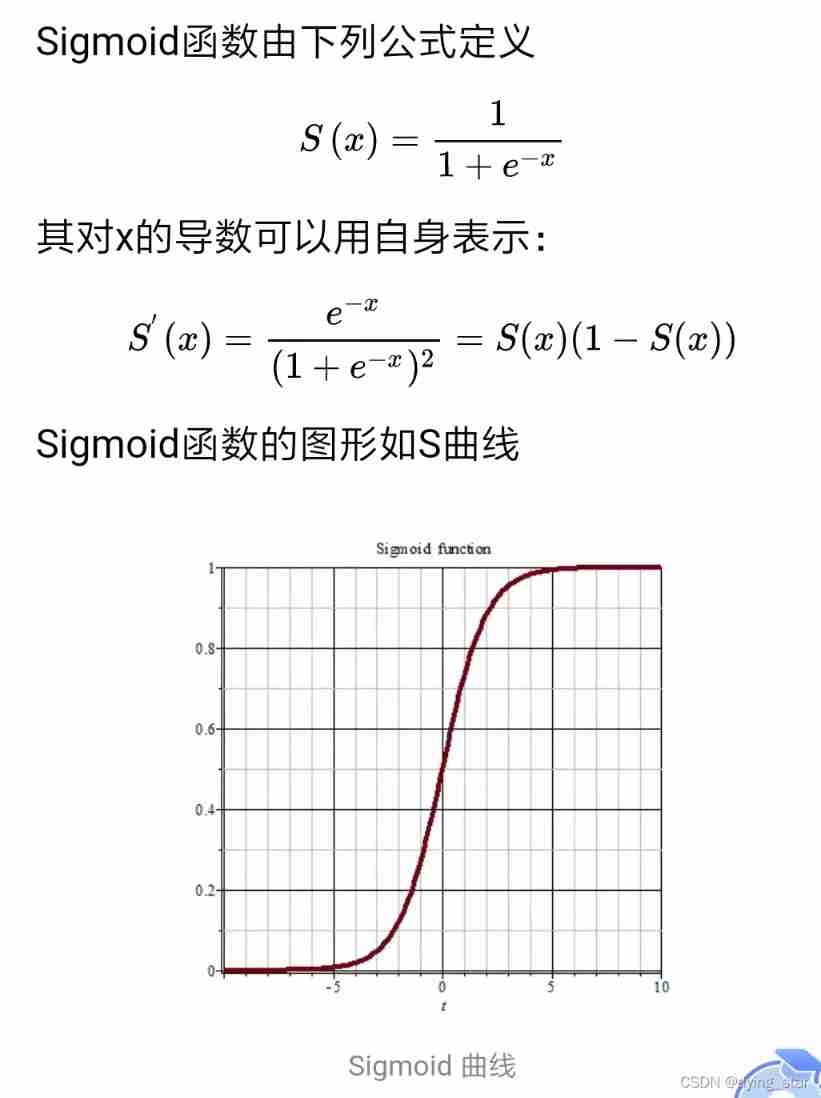

Activate function and its gradient

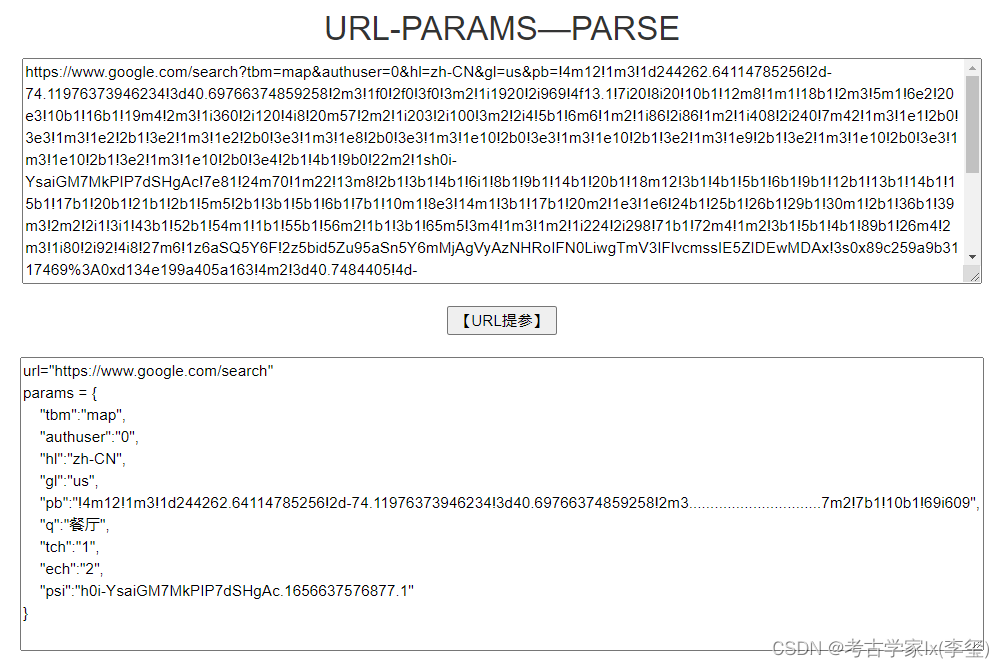

Google Maps case

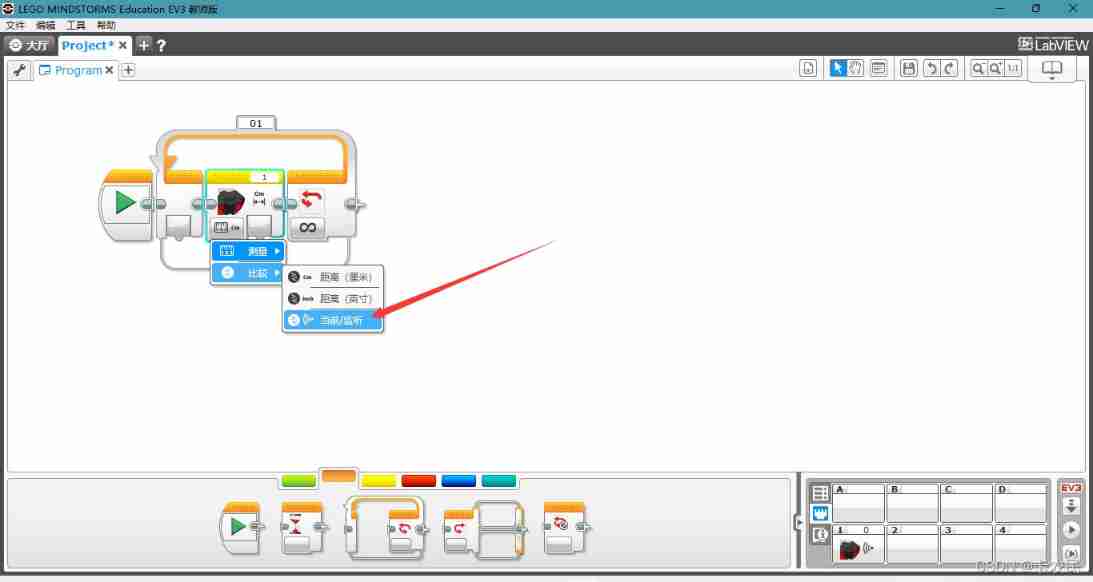

Ultrasonic sensor flash | LEGO eV3 Teaching

![[error record] file search strategy in groovy project (src/main/groovy/script.groovy needs to be used in the main function | groovy script directly uses the relative path of code)](/img/b6/b2036444255b7cd42b34eaed74c5ed.jpg)

[error record] file search strategy in groovy project (src/main/groovy/script.groovy needs to be used in the main function | groovy script directly uses the relative path of code)

如何快速体验OneOS

d3dx9_ What if 29.dll is missing? System missing d3dx9_ Solution of 29.dll file

关于MySQL的30条优化技巧,超实用

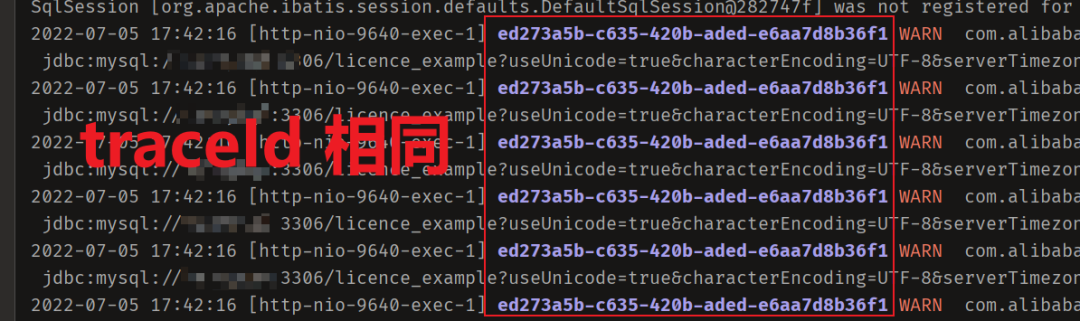

Starting from 1.5, build a micro Service Framework -- log tracking traceid

90后测试员:“入职阿里,这一次,我决定不在跳槽了”

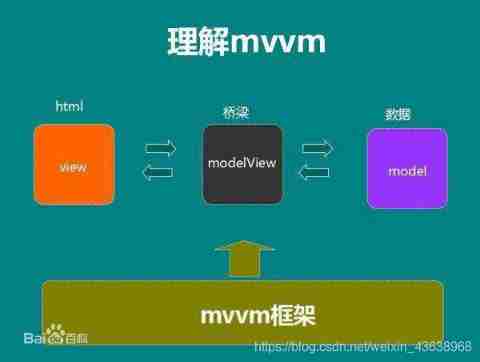

The difference between MVVM and MVC

随机推荐

FBO and RBO disappeared in webgpu

Postman core function analysis - parameterization and test report

Tensor attribute statistics

Metaverse Ape上线倒计时,推荐活动火爆进行

VOT Toolkit环境配置与使用

[error record] groovy function parameter dynamic type error (guess: groovy.lang.missingmethodexception: no signature of method)

Event trigger requirements of the function called by the event trigger

Global and Chinese markets of tantalum heat exchangers 2022-2028: Research Report on technology, participants, trends, market size and share

Usage Summary of scriptable object in unity

119. Pascal‘s Triangle II. Sol

Thinkphp5.1 cross domain problem solving

Binary tree (III) -- heap sort optimization, top k problem

Metaverse Ape猿界应邀出席2022·粤港澳大湾区元宇宙和web3.0主题峰会,分享猿界在Web3时代从技术到应用的文明进化历程

Go language learning tutorial (XV)

點到直線的距離直線的交點及夾角

分布式解决方案之TCC

Global and Chinese market of networked refrigerators 2022-2028: Research Report on technology, participants, trends, market size and share

Double pointer of linked list (fast and slow pointer, sequential pointer, head and tail pointer)

All expansion and collapse of a-tree

Lesson 1: serpentine matrix