
How to find the optimal learning rate

2022-07-01 18:35:00 【Zi Yan Ruoshui】

Original article: How to find the optimal learning rate - Zhihu

Anyone who has spent time on "alchemy" (tuning deep learning models) knows that hyperparameters such as batch size and learning rate can feel mysterious. There are few hard rules for setting them, and the values reported in papers are generally chosen from experience. Yet hyperparameters often matter a great deal. Take the learning rate: set it too high and the loss explodes; set it too low and training takes a very long time. So is there a principled way to choose the initial learning rate?

In this article, I will describe a very simple but effective way to determine a reasonable initial learning rate.

The appropriate learning rate changes over the course of training. At the beginning, the parameters are still essentially random, so a relatively large learning rate is appropriate and makes the loss fall faster. After training for a while, the parameter updates should become smaller, so the learning rate is usually decayed. There are many decay schemes, for example multiplying the learning rate by 0.1 every fixed number of steps, or exponential decay.
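The two decay schemes mentioned above can be sketched in a few lines of Python. This is only an illustration: the function names, the `drop_every` and `decay_rate` parameters, and the particular exponential form are assumptions made here, not something the article specifies.

```python
import math

def step_decay(initial_lr, step, drop_every, factor=0.1):
    """Multiply the learning rate by `factor` once every `drop_every` steps."""
    return initial_lr * factor ** (step // drop_every)

def exponential_decay(initial_lr, step, decay_rate):
    """Shrink the learning rate smoothly: initial_lr * exp(-decay_rate * step)."""
    return initial_lr * math.exp(-decay_rate * step)

# Print both schedules at a few steps (hypothetical settings).
for step in (0, 29, 30, 60):
    print(step, step_decay(0.1, step, drop_every=30), exponential_decay(0.1, step, 0.05))
```

Step decay keeps the rate constant between drops, while exponential decay lowers it a little at every step; which one suits a given network is an empirical question.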

Our concern here is how to determine the initial learning rate. There are many ways to do this. A brute-force approach is to start from 0.0001, then try 0.001, and so on, training the network once at each order of magnitude and watching the loss to pick a reasonable learning rate. But this takes too much time. Is there a simpler and more effective way?
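To make the brute-force sweep concrete, here is a minimal sketch on a toy problem rather than a real network. It assumes a one-parameter quadratic loss `(w - target)**2` as a stand-in for training; `final_loss`, `candidates`, and the step count are hypothetical names and settings chosen for illustration.

```python
def final_loss(lr, steps=50, w=0.0, target=3.0):
    """Run plain gradient descent on (w - target)**2 and return the final loss."""
    for _ in range(steps):
        grad = 2.0 * (w - target)  # derivative of (w - target)**2
        w -= lr * grad
    return (w - target) ** 2

# One full "training run" per order of magnitude, as in the brute-force approach.
candidates = [1e-4, 1e-3, 1e-2, 1e-1, 1.0, 10.0]
results = {lr: final_loss(lr) for lr in candidates}
best_lr = min(results, key=results.get)

for lr, loss in results.items():
    print(f"lr={lr:g}  final loss={loss:.3e}")
print("best:", best_lr)
```

On this toy loss the smallest rates barely move the parameter, the largest one blows up, and a rate in between wins. The cost is one complete run per candidate, which is exactly what makes the approach slow on a real network.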

A simple way

In Section 3.3 of his 2015 paper "Cyclical Learning Rates for Training Neural Networks", Leslie N. Smith describes a great way to find the initial learning rate. I also recommend reading the paper itself, which contains some very instructive ideas about setting the learning rate.

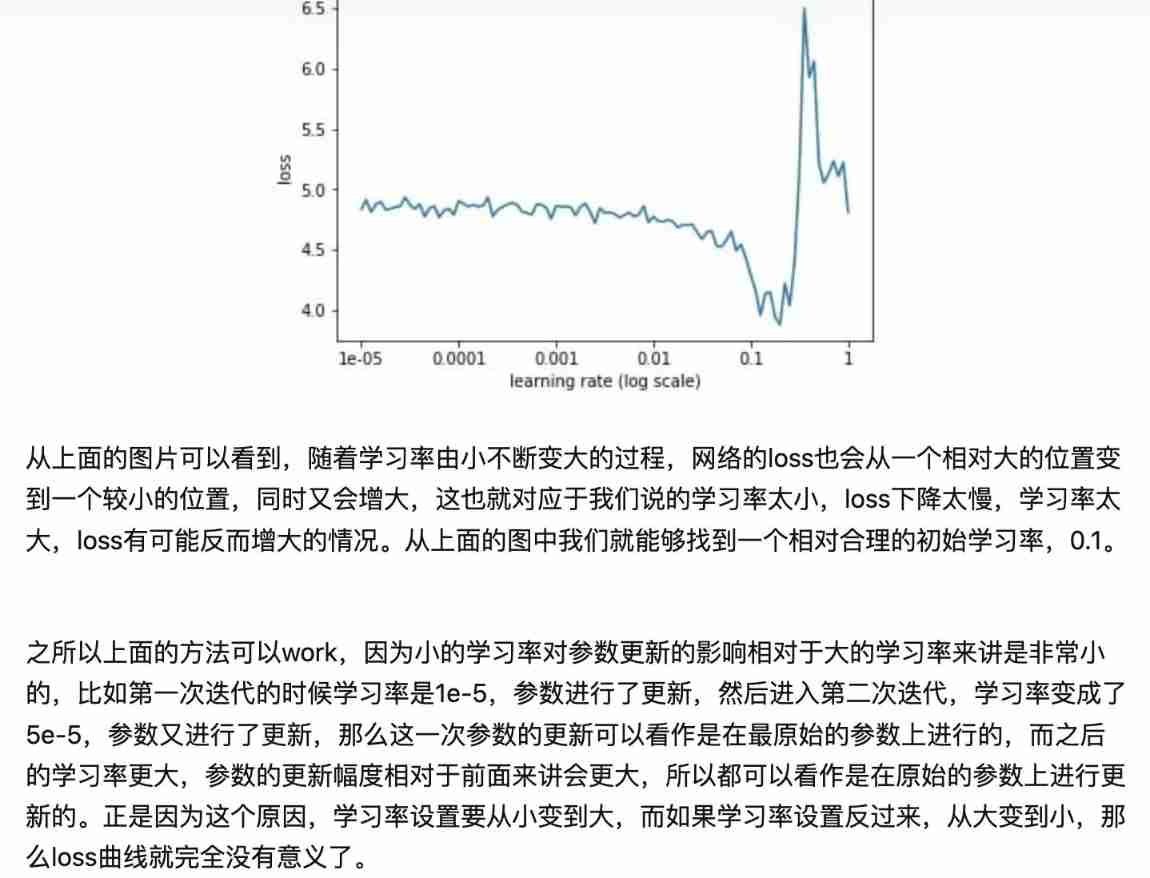

The paper uses this method to estimate the minimum and maximum learning rates that the network tolerates, but we can also use it to find a good initial learning rate. The method is very simple. First, set a very small initial learning rate, such as 1e-5. Then update the network after each batch while increasing the learning rate, and record the loss computed on each batch. Finally, plot how the learning rate changes over the iterations and how the loss changes with the learning rate; from these curves we can find the best learning rate.
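The procedure can be sketched in pure Python on a toy linear-regression problem. This is a sketch under stated assumptions, not the paper's implementation: the toy model, the exponential growth of the learning rate, the `end_lr` value, and the stopping rule (halt once the loss exceeds four times the best loss seen) are all choices made here for illustration.

```python
import math
import random

def lr_range_test(start_lr=1e-5, end_lr=10.0, num_batches=100,
                  batch_size=64, diverge_factor=4.0, seed=0):
    """Raise the learning rate after every batch and record (lr, loss) pairs."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # toy model: prediction = w * x + b
    # Constant multiplier so the rate grows from start_lr to end_lr.
    growth = (end_lr / start_lr) ** (1.0 / (num_batches - 1))
    lr = start_lr
    lrs, losses = [], []
    for _ in range(num_batches):
        # Sample one mini-batch from the toy target y = 3x + 1 plus noise.
        xs = [rng.uniform(-1.0, 1.0) for _ in range(batch_size)]
        ys = [3.0 * x + 1.0 + rng.gauss(0.0, 0.1) for x in xs]
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errs) / batch_size  # mean squared error
        lrs.append(lr)
        losses.append(loss)
        # Assumed stopping rule: halt once the loss has clearly blown up.
        if not math.isfinite(loss) or loss > diverge_factor * min(losses):
            break
        # One SGD update with the current rate, then increase the rate.
        grad_w = 2.0 * sum(e * x for e, x in zip(errs, xs)) / batch_size
        grad_b = 2.0 * sum(errs) / batch_size
        w -= lr * grad_w
        b -= lr * grad_b
        lr *= growth
    return lrs, losses

lrs, losses = lr_range_test()
for lr, loss in list(zip(lrs, losses))[::10]:
    print(f"lr={lr:.2e}  loss={loss:.4f}")
```

Plotting `losses` against `lrs` on a logarithmic x-axis gives the loss curve described above. A common reading, which the text does not spell out, is to pick a rate in the region where the loss is still falling steeply, somewhat below the point where it starts to rise.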

Two curves result: the learning rate rising as the number of iterations increases, and the loss at each learning rate.

边栏推荐

- Blue Bridge Cup real topic: the shortest circuit

- 开发那些事儿:EasyCVR平台添加播放地址鉴权

- The method of real-time tracking the current price of London Silver

- Subnet division and summary

- Mysql database design

- Develop those things: add playback address authentication to easycvr platform

- Opencv map reading test -- error resolution

- Data warehouse (3) star model and dimension modeling of data warehouse modeling

- 因子分析怎么计算权重?

- [CF1476F]Lanterns

猜你喜欢

Leetcode 1380. Lucky numbers in the matrix (save the minimum number of each row and the maximum number of each column)

What are the legal risks of NFT brought by stars such as curry and O'Neill?

Mysql database design

主成分之综合竞争力案例分析

Apache iceberg source code analysis: schema evolution

Mujoco's biped robot Darwin model

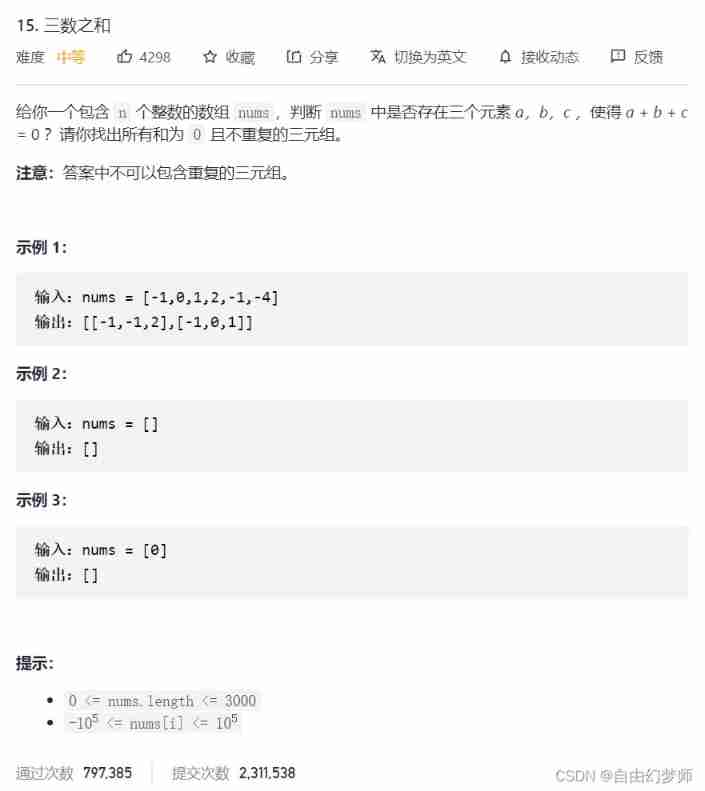

Sum of three numbers

Check log4j problems using stain analysis

Static timing analysis (STA) in ic/fpga design

Computer network interview assault

随机推荐

Step size of ode45 and reltol abstol

因子分析怎么计算权重?

Common design parameters of solid rocket motor

540. Single element in ordered array

PCL learning materials

Cloud computing - make learning easier

Is Huishang futures a regular futures platform? Is it safe to open an account in Huishang futures?

Redis master-slave realizes 10 second check and recovery

Cloud picture says | distributed transaction management DTM: the little helper behind "buy buy buy"

Draw drawing process of UI drawing process

Single element of an ordered array

SCP -i private key usage

Penetration practice vulnhub range Keyring

Three dimensional anti-terrorism Simulation Drill deduction training system software

主成分之综合竞争力案例分析

期货账户的资金安全吗?怎么开户?

Financial judgment questions

t10_ Adapting to Market Participantsand Conditions

MySQL + JSON = King fried

Calculation of intersection of two line segments