
[yolov3 loss function]

2022-07-05 11:42:00 【Network starry sky (LUOC)】


YOLOv3 source code analysis 1: the overall structure of the code

Code being analyzed:

github: tensorflow-yolov3

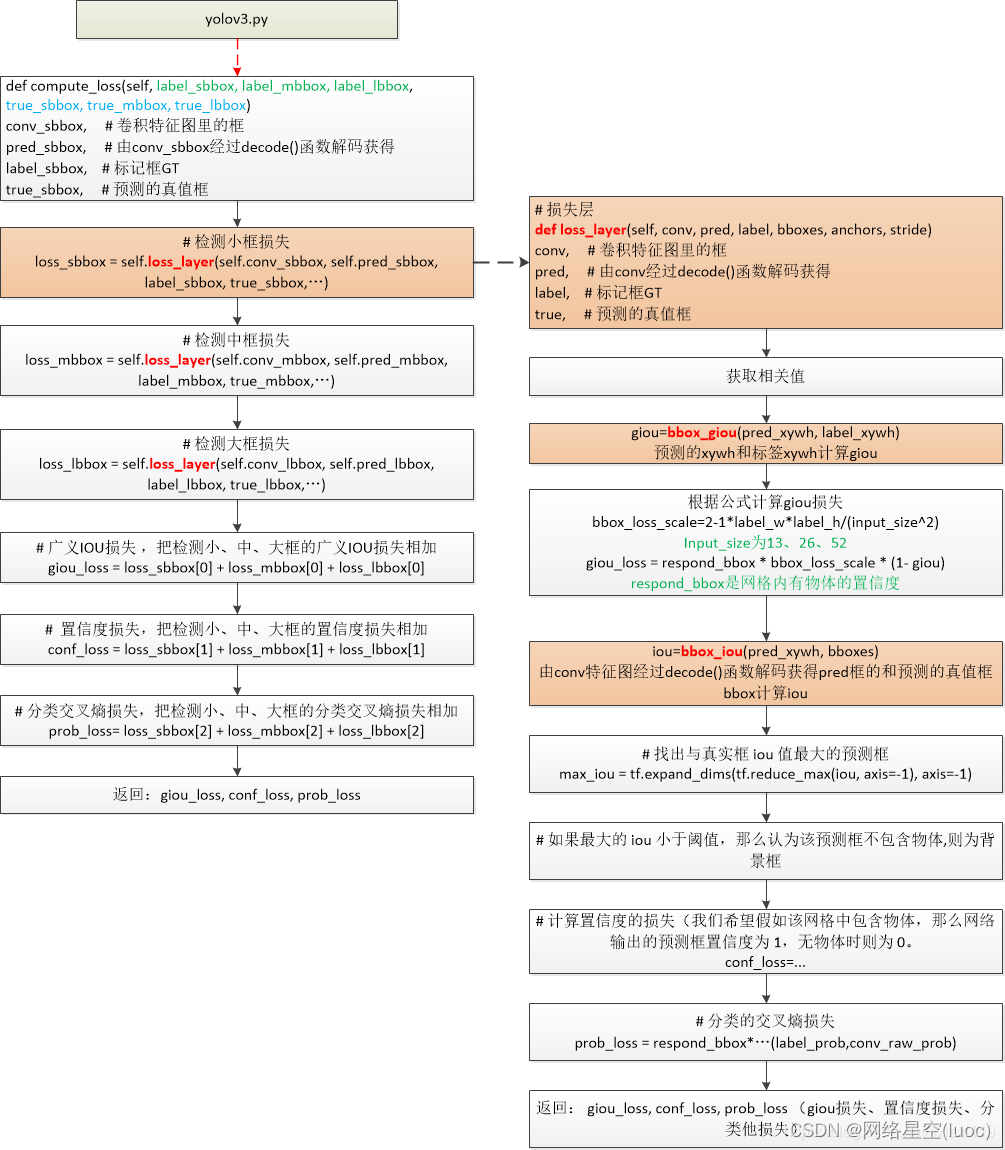

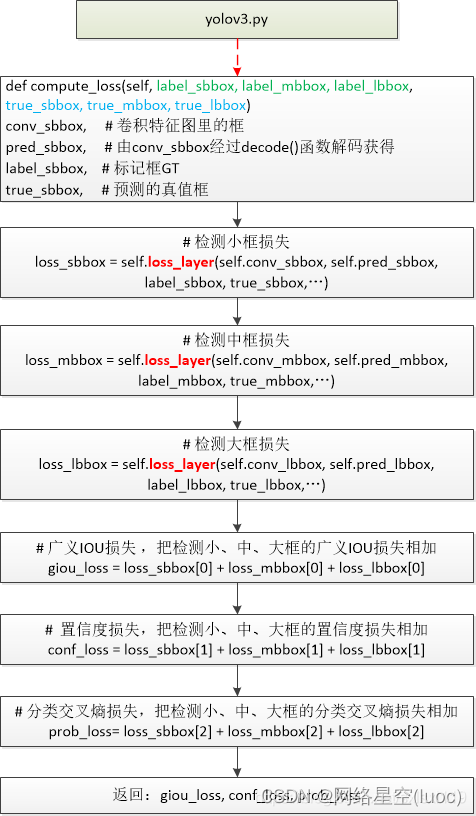

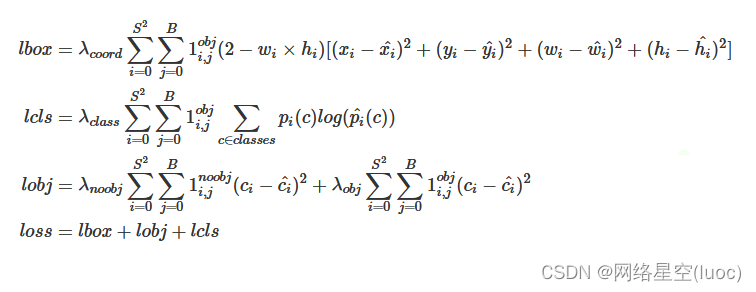

This article walks through compute_loss(), the part of the code that computes each component of the loss:

The YOLOv3 loss function is somewhat complicated. I spent some time reading many articles about it and am finally starting to make sense of it; the next article covers the theory behind the loss function. In this walkthrough the explanation lives mainly in the structure diagrams and the source code comments, so the individual parts have no separate prose description.

Line 55 of train.py calls compute_loss() to compute the training loss; the function itself is defined in yolov3.py.

compute_loss() function

This function does not perform the core calculation itself. It mainly passes in the relevant parameters and then calls loss_layer() to do the actual work.

Diagram:

Source code:

    # Compute the loss
    '''
    conv_sbbox,   # raw values from the convolutional feature map
    pred_sbbox,   # conv_sbbox after decoding by decode()
    label_sbbox,  # [52,52,3,85] ground-truth (GT) box coordinates, confidence, class probabilities
    true_sbbox,   # GT box coordinates only (3,150,4)

    Values passed in as the true_*bbox arguments during actual training:
    self.true_sbboxes: train_data[4] == sbboxes == bboxes_xywh[..,0]  # GT box coordinates, small scale
    self.true_mbboxes: train_data[5] == mbboxes == bboxes_xywh[..,1]  # GT box coordinates, medium scale
    self.true_lbboxes: train_data[6] == lbboxes == bboxes_xywh[..,2]  # GT box coordinates, large scale
    '''
    def compute_loss(self, label_sbbox, label_mbbox, label_lbbox, true_sbbox, true_mbbox, true_lbbox):
        # Loss for small-object detection, 52*52 feature map
        with tf.name_scope('smaller_box_loss'):
            loss_sbbox = self.loss_layer(self.conv_sbbox, self.pred_sbbox, label_sbbox, true_sbbox,
                                         anchors = self.anchors[0], stride = self.strides[0])
        # Loss for medium-object detection, 26*26 feature map
        with tf.name_scope('medium_box_loss'):
            loss_mbbox = self.loss_layer(self.conv_mbbox, self.pred_mbbox, label_mbbox, true_mbbox,
                                         anchors = self.anchors[1], stride = self.strides[1])
        # Loss for large-object detection, 13*13 feature map
        with tf.name_scope('bigger_box_loss'):
            loss_lbbox = self.loss_layer(self.conv_lbbox, self.pred_lbbox, label_lbbox, true_lbbox,
                                         anchors = self.anchors[2], stride = self.strides[2])
        # GIoU (generalized IoU) loss: sum over the small, medium, and large scales
        with tf.name_scope('giou_loss'):
            giou_loss = loss_sbbox[0] + loss_mbbox[0] + loss_lbbox[0]
        # Confidence loss: sum over the small, medium, and large scales
        with tf.name_scope('conf_loss'):
            conf_loss = loss_sbbox[1] + loss_mbbox[1] + loss_lbbox[1]
        # Classification cross-entropy loss: sum over the small, medium, and large scales
        with tf.name_scope('prob_loss'):
            prob_loss = loss_sbbox[2] + loss_mbbox[2] + loss_lbbox[2]
        return giou_loss, conf_loss, prob_loss
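To make the aggregation step concrete, here is a minimal plain-Python sketch. It assumes each scale's loss_layer() call returns a (giou_loss, conf_loss, prob_loss) tuple, as in the source; the helper name sum_scales and the numbers are invented for illustration.

```python
# Hypothetical per-scale results in the (giou_loss, conf_loss, prob_loss) order
# that loss_layer() returns. The values are invented for illustration.
loss_sbbox = (0.5, 1.25, 0.25)    # 52x52 scale
loss_mbbox = (0.25, 0.75, 0.5)    # 26x26 scale
loss_lbbox = (0.125, 0.5, 0.25)   # 13x13 scale


def sum_scales(*per_scale):
    """Add each loss component element-wise across the scales."""
    return tuple(sum(parts) for parts in zip(*per_scale))


giou_loss, conf_loss, prob_loss = sum_scales(loss_sbbox, loss_mbbox, loss_lbbox)
print(giou_loss, conf_loss, prob_loss)
```

The three components stay separate on return, which lets the training script log them individually before adding them into a total loss.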

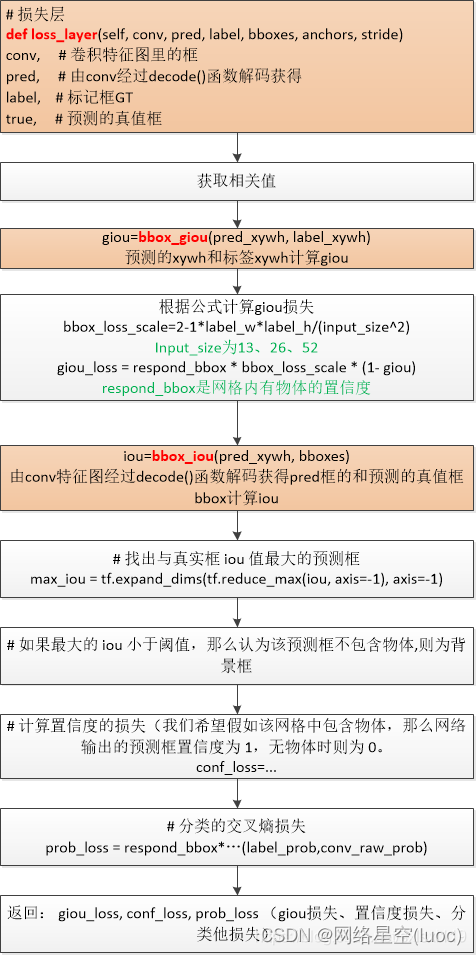

loss_layer() function

Diagram:

Source code:

    # Loss layer
    '''
    conv,     # raw values from the convolutional feature map
    pred,     # conv after decoding by decode()
    label,    # GT box coordinates, confidence, class probabilities [52,52,3,85]
    bboxes,   # GT box coordinates only (3,150,4)
    anchors   # widths and heights of the reference anchors
    stride    # downsampling ratio of the feature map relative to the original image
    '''
    def loss_layer(self, conv, pred, label, bboxes, anchors, stride):
        conv_shape  = tf.shape(conv)          # shape of the raw convolutional output
        batch_size  = conv_shape[0]           # number of images in the batch
        output_size = conv_shape[1]           # feature map side length: 13, 26, or 52
        input_size  = stride * output_size    # 13*32, 26*16, 52*8
        # conv is reshaped here to 5 dimensions
        conv = tf.reshape(conv, (batch_size, output_size, output_size,
                                 self.anchor_per_scale, 5 + self.num_class))
        # These are the logits before they go through the sigmoid
        conv_raw_conf = conv[:, :, :, :, 4:5]   # raw confidence, taken straight from the feature map
        conv_raw_prob = conv[:, :, :, :, 5:]    # raw class scores, taken straight from the feature map
        pred_xywh = pred[:, :, :, :, 0:4]       # predicted box xywh
        pred_conf = pred[:, :, :, :, 4:5]       # predicted confidence
        # True coordinates (x, y, w, h) of the GT boxes
        label_xywh = label[:, :, :, :, 0:4]
        # Objectness mask: 1 where the grid cell holds an object, 0 otherwise
        respond_bbox = label[:, :, :, :, 4:5]
        # True class probabilities
        label_prob = label[:, :, :, :, 5:]

        # GIoU loss
        # label_xywh and pred_xywh are used to compute GIoU
        giou = tf.expand_dims(self.bbox_giou(pred_xywh, label_xywh), axis=-1)   # add a trailing dimension (axis=-1)
        input_size = tf.cast(input_size, tf.float32)                            # type conversion
        # Weight between 1 and 2: smaller GT boxes get a larger weight
        bbox_loss_scale = 2.0 - 1.0 * label_xywh[:, :, :, :, 2:3] * label_xywh[:, :, :, :, 3:4] / (input_size ** 2)
        giou_loss = respond_bbox * bbox_loss_scale * (1- giou)

        # Confidence loss
        # bboxes (true_bboxes) and pred_xywh are used to compute IoU
        iou = self.bbox_iou(pred_xywh[:, :, :, :, np.newaxis, :], bboxes[:, np.newaxis, np.newaxis, np.newaxis, :, :])
        # For each predicted box, take its largest IoU with any GT box
        max_iou = tf.expand_dims(tf.reduce_max(iou, axis=-1), axis=-1)
        # If that largest IoU is below the threshold, the predicted box is treated as background (no object)
        respond_bgd = (1.0 - respond_bbox) * tf.cast( max_iou < self.iou_loss_thresh, tf.float32 )
        # Focal weight alpha * |target - pred|^gamma: down-weights predictions that are already close to the target
        conf_focal = self.focal(respond_bbox, pred_conf)
        # Confidence loss: we want the predicted confidence to be 1 when the grid cell
        # contains an object and 0 when it does not
        conf_loss = conf_focal * (
                respond_bbox * tf.nn.sigmoid_cross_entropy_with_logits(labels=respond_bbox, logits=conv_raw_conf)
                +
                respond_bgd * tf.nn.sigmoid_cross_entropy_with_logits(labels=respond_bbox, logits=conv_raw_conf)
        )

        # Cross-entropy loss for classification
        prob_loss = respond_bbox * tf.nn.sigmoid_cross_entropy_with_logits(labels=label_prob, logits=conv_raw_prob)

        # Sum over grid, anchor, and channel axes, then average over the batch
        giou_loss = tf.reduce_mean(tf.reduce_sum(giou_loss, axis=[1,2,3,4]))
        conf_loss = tf.reduce_mean(tf.reduce_sum(conf_loss, axis=[1,2,3,4]))
        prob_loss = tf.reduce_mean(tf.reduce_sum(prob_loss, axis=[1,2,3,4]))
        return giou_loss, conf_loss, prob_loss
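Two details in loss_layer() are easier to see with numbers: bbox_loss_scale, which weights the box loss by 2 - w*h / input_size^2, and respond_bgd, the background mask. Below is a small NumPy sketch rather than the repository's code. The input size of 416 and the threshold of 0.5 are assumptions (the real values come from the project's configuration), and the four predictions are invented.

```python
import numpy as np

input_size = 416.0        # assumed network input size
iou_loss_thresh = 0.5     # assumed value of self.iou_loss_thresh


def bbox_loss_scale(w, h, input_size):
    """Weight between 1 and 2: the smaller the GT box, the larger the weight."""
    return 2.0 - 1.0 * w * h / (input_size ** 2)


small = bbox_loss_scale(20.8, 20.8, input_size)     # box covering 0.25% of the image
full = bbox_loss_scale(416.0, 416.0, input_size)    # box covering the whole image

# Four hypothetical predictions: the object flag from the label, and the best
# IoU each prediction reaches against any ground-truth box.
respond_bbox = np.array([1.0, 0.0, 0.0, 0.0])
max_iou = np.array([0.9, 0.7, 0.3, 0.1])

# Background = no object assigned AND best IoU below the threshold.
respond_bgd = (1.0 - respond_bbox) * (max_iou < iou_loss_thresh).astype(np.float32)
print(small, full, respond_bgd)
```

Note the second prediction: it has no object assigned but overlaps a ground-truth box with IoU 0.7, so it is neither a positive nor background. Both masks are 0 for it, and it contributes nothing to the confidence loss.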

loss_layer() calls three small helper functions:

Focal loss is what made one-stage detectors competitive, because it addresses the class imbalance problem. It handles the imbalance between positive and negative samples and, at the same time, the difference between easy and hard samples.

    def focal(self, target, actual, alpha=1, gamma=2):
        # Modulating weight: large when the prediction is far from the target
        focal_loss = alpha * tf.pow(tf.abs(target - actual), gamma)
        return focal_loss
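Note that focal() returns only the modulating weight, which loss_layer() then multiplies with the sigmoid cross-entropy. For a 0/1 target, |target - actual| is one minus the probability assigned to the correct class, so raising it to the power gamma shrinks the weight of predictions that are already nearly right. A NumPy sketch of the same arithmetic, with invented confidence values:

```python
import numpy as np


def focal(target, actual, alpha=1.0, gamma=2.0):
    """Modulating weight alpha * |target - actual| ** gamma."""
    return alpha * np.power(np.abs(target - actual), gamma)


target = np.array([1.0, 1.0, 0.0, 0.0])    # 1 = object present, 0 = background
actual = np.array([0.9, 0.5, 0.5, 0.1])    # hypothetical predicted confidences
weights = focal(target, actual)
print(weights)
```

With gamma=2 the confident, correct predictions (0.9 for an object, 0.1 for background) get a weight of about 0.01, while the uncertain ones at 0.5 get 0.25, so hard examples dominate the confidence loss.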

Computing GIoU

    # Compute GIoU
    def bbox_giou(self, boxes1, boxes2):
        # Convert (center_x, center_y, w, h) to (x_min, y_min, x_max, y_max)
        boxes1 = tf.concat([boxes1[..., :2] - boxes1[..., 2:] * 0.5,
                            boxes1[..., :2] + boxes1[..., 2:] * 0.5], axis=-1)
        boxes2 = tf.concat([boxes2[..., :2] - boxes2[..., 2:] * 0.5,
                            boxes2[..., :2] + boxes2[..., 2:] * 0.5], axis=-1)
        # Make sure the first corner is the minimum and the second the maximum
        boxes1 = tf.concat([tf.minimum(boxes1[..., :2], boxes1[..., 2:]),
                            tf.maximum(boxes1[..., :2], boxes1[..., 2:])], axis=-1)
        boxes2 = tf.concat([tf.minimum(boxes2[..., :2], boxes2[..., 2:]),
                            tf.maximum(boxes2[..., :2], boxes2[..., 2:])], axis=-1)
        boxes1_area = (boxes1[..., 2] - boxes1[..., 0]) * (boxes1[..., 3] - boxes1[..., 1])
        boxes2_area = (boxes2[..., 2] - boxes2[..., 0]) * (boxes2[..., 3] - boxes2[..., 1])
        left_up = tf.maximum(boxes1[..., :2], boxes2[..., :2])       # top-left corner of the intersection
        right_down = tf.minimum(boxes1[..., 2:], boxes2[..., 2:])    # bottom-right corner of the intersection
        inter_section = tf.maximum(right_down - left_up, 0.0)
        inter_area = inter_section[..., 0] * inter_section[..., 1]
        union_area = boxes1_area + boxes2_area - inter_area
        # IoU of the two bounding boxes
        iou = inter_area / union_area
        # Top-left and bottom-right corners of the smallest enclosing box C
        enclose_left_up = tf.minimum(boxes1[..., :2], boxes2[..., :2])
        enclose_right_down = tf.maximum(boxes1[..., 2:], boxes2[..., 2:])
        enclose = tf.maximum(enclose_right_down - enclose_left_up, 0.0)
        # Area of the smallest enclosing box C
        enclose_area = enclose[..., 0] * enclose[..., 1]
        # GIoU according to the GIoU formula
        giou = iou - 1.0 * (enclose_area - union_area) / enclose_area
        return giou
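The formula in the last step is GIoU = IoU - (|C| - |A ∪ B|) / |C|, where C is the smallest box enclosing both A and B. The following NumPy sketch repeats the same steps for a single pair of boxes so the numbers can be checked by hand. The function name giou_xywh and the three example boxes are made up for illustration, and the corner-ordering step is skipped because the example widths and heights are positive.

```python
import numpy as np


def giou_xywh(box1, box2):
    """GIoU of two boxes given as (center_x, center_y, width, height)."""
    # Convert to (x_min, y_min, x_max, y_max).
    b1 = np.concatenate([box1[:2] - box1[2:] * 0.5, box1[:2] + box1[2:] * 0.5])
    b2 = np.concatenate([box2[:2] - box2[2:] * 0.5, box2[:2] + box2[2:] * 0.5])
    area1 = (b1[2] - b1[0]) * (b1[3] - b1[1])
    area2 = (b2[2] - b2[0]) * (b2[3] - b2[1])
    # Intersection, clipped at zero when the boxes do not overlap.
    inter = np.maximum(np.minimum(b1[2:], b2[2:]) - np.maximum(b1[:2], b2[:2]), 0.0)
    inter_area = inter[0] * inter[1]
    union_area = area1 + area2 - inter_area
    iou = inter_area / union_area
    # Smallest enclosing box C.
    enclose = np.maximum(b1[2:], b2[2:]) - np.minimum(b1[:2], b2[:2])
    enclose_area = enclose[0] * enclose[1]
    return iou - (enclose_area - union_area) / enclose_area


a = np.array([1.0, 1.0, 2.0, 2.0])    # corners (0, 0) to (2, 2)
b = np.array([2.0, 2.0, 2.0, 2.0])    # corners (1, 1) to (3, 3), overlaps a
c = np.array([5.0, 1.0, 2.0, 2.0])    # corners (4, 0) to (6, 2), disjoint from a

print(giou_xywh(a, a), giou_xywh(a, b), giou_xywh(a, c))
```

For a and b the intersection is 1, the union 7, and the enclosing box 9, giving 1/7 - 2/9 = -5/63. For the disjoint pair IoU is 0 but GIoU is -1/3, which is why the GIoU loss still provides a training signal when the predicted box misses the target entirely.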

IoU (intersection over union) of two boxes

    # IoU of two boxes
    def bbox_iou(self, boxes1, boxes2):
        # Areas from width * height, computed before converting to corners
        boxes1_area = boxes1[..., 2] * boxes1[..., 3]
        boxes2_area = boxes2[..., 2] * boxes2[..., 3]
        # Convert (center_x, center_y, w, h) to (x_min, y_min, x_max, y_max)
        boxes1 = tf.concat([boxes1[..., :2] - boxes1[..., 2:] * 0.5,
                            boxes1[..., :2] + boxes1[..., 2:] * 0.5], axis=-1)
        boxes2 = tf.concat([boxes2[..., :2] - boxes2[..., 2:] * 0.5,
                            boxes2[..., :2] + boxes2[..., 2:] * 0.5], axis=-1)
        left_up = tf.maximum(boxes1[..., :2], boxes2[..., :2])       # top-left corner of the intersection
        right_down = tf.minimum(boxes1[..., 2:], boxes2[..., 2:])    # bottom-right corner of the intersection
        inter_section = tf.maximum(right_down - left_up, 0.0)
        inter_area = inter_section[..., 0] * inter_section[..., 1]
        union_area = boxes1_area + boxes2_area - inter_area
        iou = 1.0 * inter_area / union_area
        return iou
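In loss_layer() this function is called with two extra axes inserted, so that every predicted box is compared against every ground-truth box through broadcasting. The NumPy sketch below mirrors the function to show the resulting shapes. The batch size of 2, the 13x13 grid, and random box values are assumptions for illustration; the 150 boxes per image follows the shape noted in the source comments.

```python
import numpy as np


def bbox_iou(boxes1, boxes2):
    """IoU for (cx, cy, w, h) boxes; broadcasts over the leading dimensions."""
    boxes1_area = boxes1[..., 2] * boxes1[..., 3]
    boxes2_area = boxes2[..., 2] * boxes2[..., 3]
    boxes1 = np.concatenate([boxes1[..., :2] - boxes1[..., 2:] * 0.5,
                             boxes1[..., :2] + boxes1[..., 2:] * 0.5], axis=-1)
    boxes2 = np.concatenate([boxes2[..., :2] - boxes2[..., 2:] * 0.5,
                             boxes2[..., :2] + boxes2[..., 2:] * 0.5], axis=-1)
    left_up = np.maximum(boxes1[..., :2], boxes2[..., :2])
    right_down = np.minimum(boxes1[..., 2:], boxes2[..., 2:])
    inter_section = np.maximum(right_down - left_up, 0.0)
    inter_area = inter_section[..., 0] * inter_section[..., 1]
    union_area = boxes1_area + boxes2_area - inter_area
    return inter_area / union_area


batch, grid, anchors, max_boxes = 2, 13, 3, 150    # assumed sizes
rng = np.random.default_rng(0)
pred_xywh = rng.uniform(1.0, 100.0, size=(batch, grid, grid, anchors, 4))
bboxes = rng.uniform(1.0, 100.0, size=(batch, max_boxes, 4))

# (B, S, S, 3, 1, 4) against (B, 1, 1, 1, 150, 4) broadcasts to (B, S, S, 3, 150)
iou = bbox_iou(pred_xywh[:, :, :, :, np.newaxis, :],
               bboxes[:, np.newaxis, np.newaxis, np.newaxis, :, :])
# Best overlap of each predicted box with any ground-truth box.
max_iou = iou.max(axis=-1, keepdims=True)
print(iou.shape, max_iou.shape)
```

The last axis of iou indexes the ground-truth boxes, which is why the reduction in loss_layer() runs over axis=-1 and then restores a trailing dimension so that max_iou lines up with respond_bbox.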

边栏推荐

- 以交互方式安装ESXi 6.0

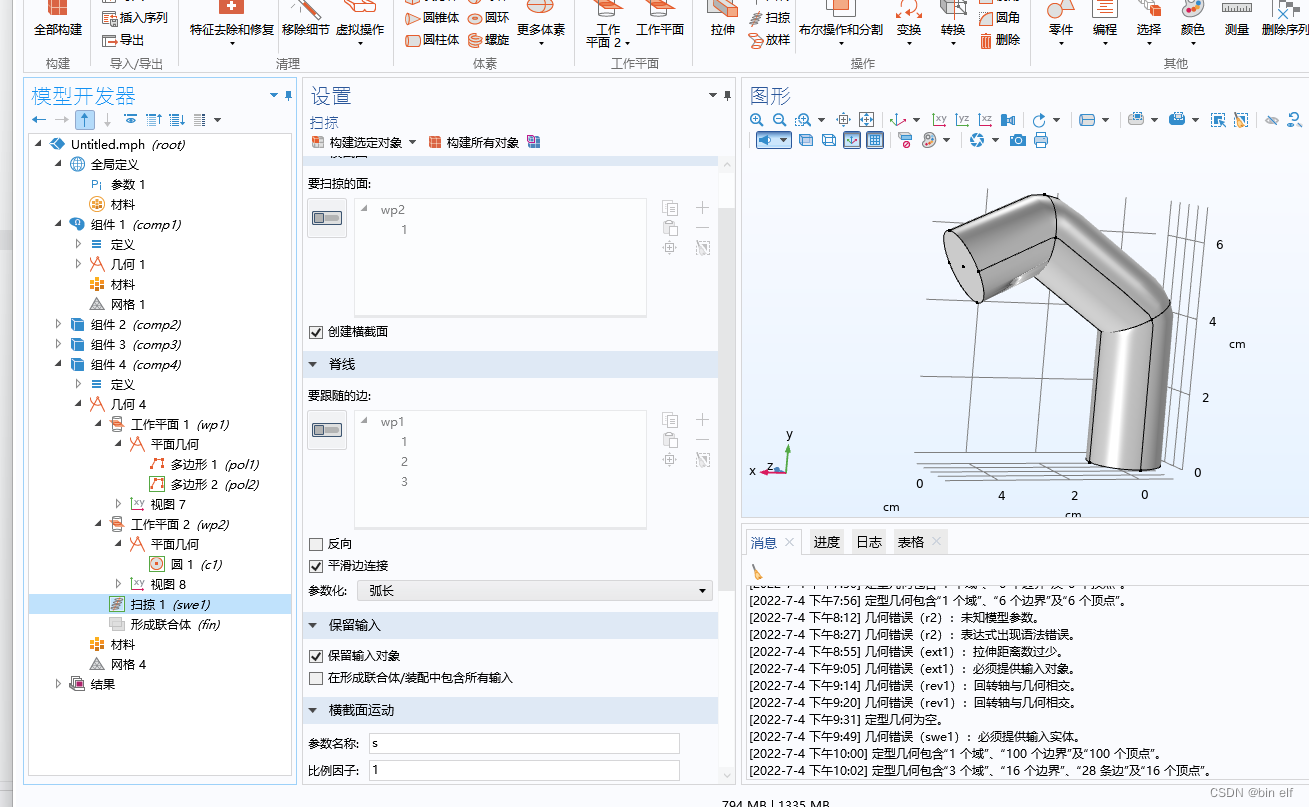

- COMSOL -- 3D casual painting -- sweeping

- What does cross-border e-commerce mean? What do you mainly do? What are the business models?

- 龙蜥社区第九次运营委员会会议顺利召开

- 15 methods in "understand series after reading" teach you to play with strings

- 13. (map data) conversion between Baidu coordinate (bd09), national survey of China coordinate (Mars coordinate, gcj02), and WGS84 coordinate system

- How to understand super browser? What scenarios can it be used in? What brands are there?

- 7 大主题、9 位技术大咖!龙蜥大讲堂7月硬核直播预告抢先看,明天见

- I used Kaitian platform to build an urban epidemic prevention policy inquiry system [Kaitian apaas battle]

- redis主从模式

猜你喜欢

![[crawler] bugs encountered by wasm](/img/29/6782bda4c149b7b2b334238936e211.png)

[crawler] bugs encountered by wasm

XML parsing

【 YOLOv3中Loss部分计算】

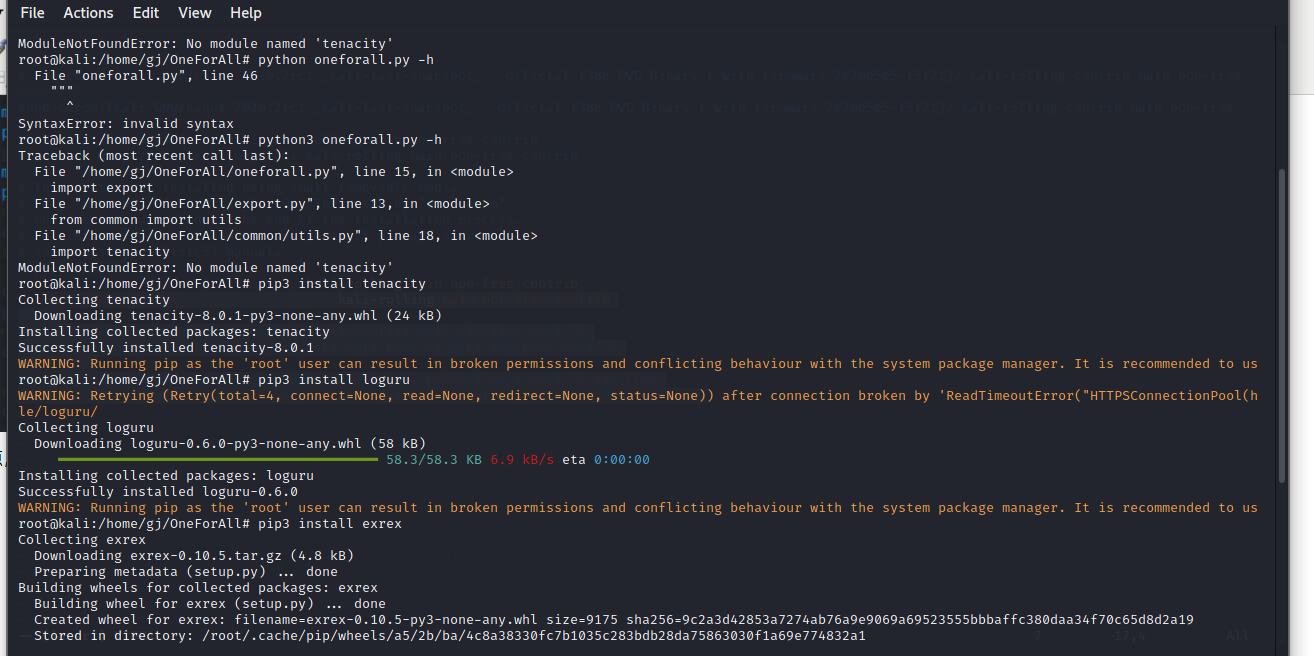

Oneforall installation and use

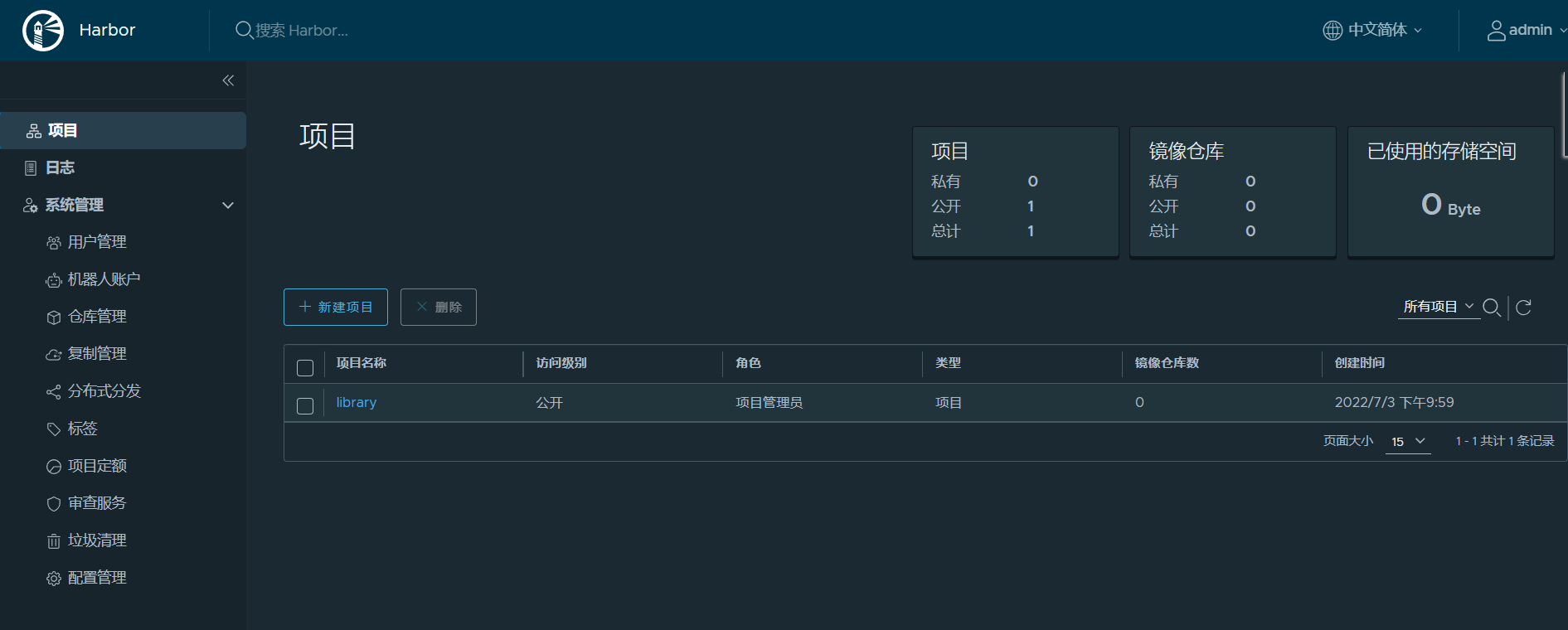

Harbor image warehouse construction

高校毕业求职难?“百日千万”网络招聘活动解决你的难题

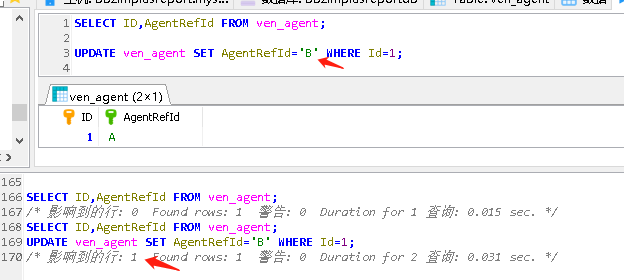

MySQL 巨坑:update 更新慎用影响行数做判断!!!

12. (map data) cesium city building map

COMSOL -- 3D casual painting -- sweeping

![[office] eight usages of if function in Excel](/img/ce/ea481ab947b25937a28ab5540ce323.png)

[office] eight usages of if function in Excel

随机推荐

MySQL 巨坑:update 更新慎用影响行数做判断!!!

COMSOL -- 3D casual painting -- sweeping

POJ 3176 cow bowling (DP | memory search)

汉诺塔问题思路的证明

SET XACT_ ABORT ON

pytorch-权重衰退(weight decay)和丢弃法(dropout)

【主流Nivida显卡深度学习/强化学习/AI算力汇总】

Sklearn model sorting

2048游戏逻辑

技术管理进阶——什么是管理者之体力、脑力、心力

解决readObjectStart: expect { or n, but found N, error found in #1 byte of ...||..., bigger context ..

Question and answer 45: application of performance probe monitoring principle node JS probe

简单解决redis cluster中从节点读取不了数据(error) MOVED

Harbor image warehouse construction

Harbor镜像仓库搭建

redis主从模式

COMSOL--建立几何模型---二维图形的建立

spark调优(一):从hql转向代码

redis主从中的Master自动选举之Sentinel哨兵机制

FFmpeg调用avformat_open_input时返回错误 -22(Invalid argument)