# Preface

This year's epidemic has changed how people of all kinds shop, and e-commerce keeps gaining market share. As a new way of doing business, live-stream e-commerce is reshaping how traffic reaches products: more and more consumers land on a product page from a live stream that sells goods. To get good results from a live stream, hosts often spend a lot of time preparing product highlights, promotions, and demonstration segments, because each of these directly affects the final sales. In the past, merchants sold goods from a live-streaming room with a fixed set, which quickly tires the audience, and viewers who do not see a product they care about often leave out of boredom. Unless the host is an outstanding entertainer, it is hard to keep every viewer interested through every product segment, and the number of viewers may drop as more products are introduced.

Now, with the image segmentation capability of HUAWEI ML Kit (Huawei's machine learning service), the background can be replaced digitally and in real time with static or dynamic scenes that suit each product category, so the live stream stays lively and fun as it switches between styles. The technique uses semantic segmentation to separate the host's figure from the background. For example, when introducing household goods the host can instantly switch to a home-style room, and when introducing outdoor sports equipment the host can switch to an outdoor scene in real time. This new experience also gives the audience a more immersive feel.
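Conceptually, replacing the background is per-pixel blending: where the segmentation says "person", keep the camera pixel, and elsewhere take the new background. The sketch below shows that step in plain Java. It assumes the segmentation result has already been converted into a per-pixel foreground confidence in [0, 255] with the same size as the camera frame, and that pixels are packed ARGB `int`s (the layout `android.graphics.Bitmap#getPixels` returns). `BackgroundCompositor` is a hypothetical helper, not an ML Kit class, and how you obtain the mask from the analyzer depends on the segmentation settings you choose.

```java
/**
 * Hypothetical helper (not an ML Kit API): blends a camera frame over a
 * replacement background, using a per-pixel foreground mask as the weight.
 * Assumes all three arrays describe the same width x height image and that
 * mask values are in [0, 255] (0 = background, 255 = person).
 */
final class BackgroundCompositor {

    private BackgroundCompositor() {}

    static int[] composite(int[] frame, int[] background, int[] mask) {
        if (frame.length != background.length || frame.length != mask.length) {
            throw new IllegalArgumentException("frame, background and mask must be the same size");
        }
        int[] out = new int[frame.length];
        for (int i = 0; i < frame.length; i++) {
            int a = Math.max(0, Math.min(255, mask[i])); // foreground weight
            int fg = frame[i];
            int bg = background[i];
            int r = blend((fg >> 16) & 0xFF, (bg >> 16) & 0xFF, a);
            int g = blend((fg >> 8) & 0xFF, (bg >> 8) & 0xFF, a);
            int b = blend(fg & 0xFF, bg & 0xFF, a);
            out[i] = 0xFF000000 | (r << 16) | (g << 8) | b; // opaque result
        }
        return out;
    }

    /** Rounded linear interpolation of one 8-bit channel: a = 255 keeps fg, a = 0 keeps bg. */
    private static int blend(int fg, int bg, int a) {
        return (fg * a + bg * (255 - a) + 127) / 255;
    }
}
```

Using a soft mask rather than a hard 0/1 cut gives smoother edges around hair and shoulders. For a dynamic (video) background, the same call works if you pass the current decoded video frame as `background` for each camera frame.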

# Features

The demo combines two HUAWEI ML Kit technologies, image segmentation and hand keypoint detection, to build a background switch controlled by gestures. To avoid accidental triggers, the demo switches the background only on a large, deliberate wave. After custom backgrounds are loaded, it supports switching forward (wave to the right) and back (wave to the left), the same way you swipe on a phone, and it also supports dynamic video backgrounds. If you want to use custom gestures to switch backgrounds or to trigger other gesture effects, you can integrate ML Kit hand keypoint detection and build your own gestures on top of it.
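The demo's gesture logic is not reproduced in this article, so the following is only a minimal sketch of how "switch only on a big wave" and forward/back switching could work. Assumptions: the caller picks one hand keypoint per frame (for example the wrist) from the hand keypoint results and passes its x coordinate, normalized to [0, 1] of the frame width, together with a timestamp. The classes `SwipeDetector` and `BackgroundCarousel` and all thresholds are hypothetical, not part of ML Kit.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/**
 * Hypothetical helper (not an ML Kit API): reports a swipe only when one hand
 * keypoint travels far enough, fast enough. Input: the keypoint's x coordinate
 * normalised to [0, 1] of the frame width, once per frame.
 */
final class SwipeDetector {

    enum Swipe { NONE, LEFT, RIGHT }

    private static final class Sample {
        final long timeMs;
        final float x;
        Sample(long timeMs, float x) { this.timeMs = timeMs; this.x = x; }
    }

    private final float minTravel;  // fraction of the frame width the hand must cross
    private final long windowMs;    // ...within this many milliseconds
    private final long cooldownMs;  // ignore input this long after a swipe fires
    private final Deque<Sample> history = new ArrayDeque<>();
    private long lastSwipeMs;
    private boolean hasSwiped;

    SwipeDetector(float minTravel, long windowMs, long cooldownMs) {
        this.minTravel = minTravel;
        this.windowMs = windowMs;
        this.cooldownMs = cooldownMs;
    }

    /** Call when no hand is detected so stale samples cannot join a later swipe. */
    void reset() {
        history.clear();
    }

    Swipe onFrame(long timeMs, float x) {
        if (hasSwiped && timeMs - lastSwipeMs < cooldownMs) {
            return Swipe.NONE; // still cooling down after the last swipe
        }
        history.addLast(new Sample(timeMs, x));
        // Forget samples older than the time window (the newest one always stays).
        while (timeMs - history.peekFirst().timeMs > windowMs) {
            history.removeFirst();
        }
        float travel = x - history.peekFirst().x;
        if (Math.abs(travel) < minTravel) {
            return Swipe.NONE; // small or slow movement is ignored
        }
        history.clear();
        hasSwiped = true;
        lastSwipeMs = timeMs;
        return travel > 0 ? Swipe.RIGHT : Swipe.LEFT;
    }
}

/** Hypothetical helper: cycles through backgrounds with wrap-around (RIGHT = next, LEFT = previous). */
final class BackgroundCarousel<T> {
    private final List<T> backgrounds;
    private int index;

    BackgroundCarousel(List<T> backgrounds) {
        if (backgrounds.isEmpty()) {
            throw new IllegalArgumentException("need at least one background");
        }
        this.backgrounds = new ArrayList<>(backgrounds);
    }

    T current() {
        return backgrounds.get(index);
    }

    T apply(SwipeDetector.Swipe swipe) {
        int n = backgrounds.size();
        if (swipe == SwipeDetector.Swipe.RIGHT) {
            index = (index + 1) % n;
        } else if (swipe == SwipeDetector.Swipe.LEFT) {
            index = (index + n - 1) % n;
        }
        return current();
    }
}
```

A large `minTravel` combined with a short `windowMs` is what filters out small or slow hand movements, and the cooldown keeps one wave from flipping through several backgrounds. With the front camera the preview is usually mirrored, so depending on the coordinate space of the keypoints you may need to swap LEFT and RIGHT.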

Quite an imaginative interactive experience, isn't it? Let's look at how it is implemented.

# Development steps

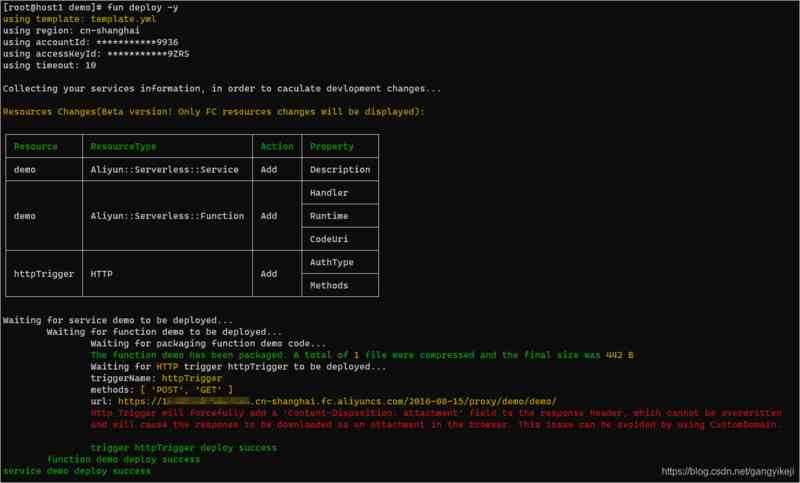

- Add the HUAWEI AGC plugin (agcp) and the Huawei Maven repository to the project-level build.gradle file.

```groovy
buildscript {
    repositories {
        google()
        jcenter()
        maven { url 'https://developer.huawei.com/repo/' }
    }
    dependencies {
        ...
        classpath 'com.huawei.agconnect:agcp:1.4.1.300'
    }
}

allprojects {
    repositories {
        google()
        jcenter()
        maven { url 'https://developer.huawei.com/repo/' }
    }
}
```

- Integrate the SDKs in full-SDK mode (app-level build.gradle file).

```groovy
dependencies {
    // Base image segmentation SDK
    implementation 'com.huawei.hms:ml-computer-vision-segmentation:2.0.4.300'
    // Multiclass segmentation model package
    implementation 'com.huawei.hms:ml-computer-vision-image-segmentation-multiclass-model:2.0.4.300'
    // Portrait (body) segmentation model package
    implementation 'com.huawei.hms:ml-computer-vision-image-segmentation-body-model:2.0.4.300'
    // Base hand keypoint detection SDK
    implementation 'com.huawei.hms:ml-computer-vision-handkeypoint:2.0.4.300'
    // Hand keypoint detection model package
    implementation 'com.huawei.hms:ml-computer-vision-handkeypoint-model:2.0.4.300'
}
```

- Apply the AGC plugin at the top of the app-level build.gradle file.

Below `apply plugin: 'com.android.application'`, add `apply plugin: 'com.huawei.agconnect'`.

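- Enable automatic updates of the machine learning models.

Add the following to the AndroidManifest.xml file:

```xml
<manifest ...>
    <application ...>
        ...
        <meta-data
            android:name="com.huawei.hms.ml.DEPENDENCY"
            android:value="imgseg,handkeypoint" />
        ...
    </application>
</manifest>
```

- Create the image segmentation analyzer and the hand keypoint analyzer, and combine them into one composite analyzer.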

```java
// Image segmentation analyzer
MLImageSegmentationAnalyzer imageSegmentationAnalyzer = MLAnalyzerFactory.getInstance().getImageSegmentationAnalyzer();
// Hand keypoint (gesture) analyzer
MLHandKeypointAnalyzer handKeypointAnalyzer = MLHandKeypointAnalyzerFactory.getInstance().getHandKeypointAnalyzer();
// Composite analyzer that runs both analyzers on each camera frame
MLCompositeAnalyzer analyzer = new MLCompositeAnalyzer.Creator()
        .add(imageSegmentationAnalyzer)
        .add(handKeypointAnalyzer)
        .create();
```

- Create the result-processing classes.

```java
// ImageSegmentAnalyzerTransactor.java
import android.util.SparseArray;

import com.huawei.hms.mlsdk.common.MLAnalyzer;
import com.huawei.hms.mlsdk.imgseg.MLImageSegmentation;

public class ImageSegmentAnalyzerTransactor implements MLAnalyzer.MLTransactor<MLImageSegmentation> {
    @Override
    public void transactResult(MLAnalyzer.Result<MLImageSegmentation> results) {
        SparseArray<MLImageSegmentation> items = results.getAnalyseList();
        // Process the segmentation results as needed. Only handle the detection
        // results here; do not call other detection-related ML Kit interfaces.
    }

    @Override
    public void destroy() {
        // Called when detection ends; release resources here.
    }
}

// HandKeypointTransactor.java
import android.util.SparseArray;

import com.huawei.hms.mlsdk.common.MLAnalyzer;
import com.huawei.hms.mlsdk.handkeypoint.MLHandKeypoints;

import java.util.List;

public class HandKeypointTransactor implements MLAnalyzer.MLTransactor<List<MLHandKeypoints>> {
    @Override
    public void transactResult(MLAnalyzer.Result<List<MLHandKeypoints>> results) {
        SparseArray<List<MLHandKeypoints>> analyseList = results.getAnalyseList();
        // Process the hand keypoint results as needed. Only handle the detection
        // results here; do not call other detection-related ML Kit interfaces.
    }

    @Override
    public void destroy() {
        // Called when detection ends; release resources here.
    }
}
```

- Set the result processors to bind each analyzer to its processor.

```java
imageSegmentationAnalyzer.setTransactor(new ImageSegmentAnalyzerTransactor());
handKeypointAnalyzer.setTransactor(new HandKeypointTransactor());
```

- Create the LensEngine.

```java
Context context = this.getApplicationContext();
LensEngine lensEngine = new LensEngine.Creator(context, analyzer)
        // Camera selection: LensEngine.BACK_LENS is the rear camera, LensEngine.FRONT_LENS the front camera.
        .setLensType(LensEngine.FRONT_LENS)
        .applyDisplayDimension(1280, 720)
        .applyFps(20.0f)
        .enableAutomaticFocus(true)
        .create();
```

- Start the camera and read the video stream for detection.

```java
// Implement the rest of the SurfaceView logic (layout, lifecycle) yourself.
SurfaceView mSurfaceView = new SurfaceView(this);
try {
    lensEngine.run(mSurfaceView.getHolder());
} catch (IOException e) {
    // Exception handling logic.
}
```

- When detection is finished, stop the analyzer and release the detection resources.

```java
if (analyzer != null) {
    try {
        analyzer.stop();
    } catch (IOException e) {
        // Exception handling.
    }
}
if (lensEngine != null) {
    lensEngine.release();
}
```

# Summary

In summary, a few simple steps (adding the packages, creating the analyzers, and analyzing and handling the results) are enough to build this neat piece of tech. Image segmentation can do much more. For example, it enables the "masked" bullet comments (danmaku) seen on video sites: combined with some front-end rendering, it keeps scrolling comments from covering people. It can also turn existing photos into polished portraits in various sizes. One advantage of semantic segmentation is that it gives precise control over what is segmented: besides portraits, it can segment food, pets, buildings, landscapes, and even flowers and plants, so you no longer need professional photo-editing software on a computer.
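The masked bullet comments mentioned above come down to a per-pixel operation on the comment layer rather than on the background. A minimal sketch, assuming the comments are rendered into a frame-sized layer of packed ARGB `int`s and that the person mask is a per-pixel confidence in [0, 255]; `DanmakuMasker` is a hypothetical name, not an ML Kit class. The layer's alpha is scaled down where a person is detected, so the person shows through when the layer is drawn over the video.

```java
/**
 * Hypothetical helper (not an ML Kit API): fades a bullet-comment layer out
 * wherever a person was segmented. Assumes overlay pixels are packed ARGB
 * ints and personMask holds a person confidence in [0, 255], both the size
 * of the video frame.
 */
final class DanmakuMasker {

    private DanmakuMasker() {}

    static int[] hidePerson(int[] overlay, int[] personMask) {
        if (overlay.length != personMask.length) {
            throw new IllegalArgumentException("overlay and mask must be the same size");
        }
        int[] out = new int[overlay.length];
        for (int i = 0; i < overlay.length; i++) {
            int person = Math.max(0, Math.min(255, personMask[i]));
            int alpha = overlay[i] >>> 24;               // original comment opacity
            int newAlpha = alpha * (255 - person) / 255; // transparent over the person
            out[i] = (newAlpha << 24) | (overlay[i] & 0x00FFFFFF);
        }
        return out;
    }
}
```

Only the alpha channel changes, so the comment colors stay intact and the result can be drawn over the video with ordinary alpha compositing.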

GitHub Demo

For more detailed development guidance, see the official HUAWEI Developers website: https://developer.huawei.com/consumer/cn/hms/huawei-mlkit

---

Original article: https://developer.huawei.com/consumer/cn/forum/topic/0204395267288570031?fid=18

Original author: timer