
Deep Learning Basics: Overfitting, Underfitting, and Regularization

2022-08-02 05:29:00 【hello689】

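Quoting Li Hang, "Statistical Learning Methods" (《统计学习方法》): when the hypothesis space contains models of different complexity (for example, different numbers of parameters), we face the problem of model selection. We want to select, or learn, an appropriate model. If a "true" model exists in the hypothesis space, the selected model should be close to it. Specifically, the selected model should have the same number of parameters as the true model, and its parameter vector should be close to the true model's parameter vector.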

1. Overfitting

Symptom: the model predicts the known (training) data very well but predicts unseen data poorly (good results on the training set, poor results on the validation and test sets).

Underlying cause: if we keep pushing for better predictive ability on the training data, the complexity of the selected model tends to exceed that of the true model. (Li Hang, "Statistical Learning Methods")

From the model-complexity perspective: the model is too complex, so it also learns the noise in the data, which degrades its generalization performance.

From the dataset perspective: the dataset is too small relative to the model's complexity, so the model over-exploits features that are specific to this particular dataset.
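To make the symptom concrete, here is a minimal sketch (not from the original post) that fits polynomials of increasing degree to a small noisy sample. The sine target, the noise level, and the degrees 1, 3 and 9 are all hypothetical choices. With only 10 training points, the degree-9 polynomial can pass through every point, so its training error is essentially zero, while its error on fresh points is typically worse than that of the degree-3 fit. The degree-1 line is too simple and does badly on both sets, which previews the underfitting case below.

```python
import numpy as np

# Hypothetical toy setup: noisy samples of sin(2*pi*x) on [0, 1].
rng = np.random.default_rng(0)
x_train = np.linspace(0.0, 1.0, 10)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.2, x_train.size)
x_test = np.linspace(0.02, 0.98, 50)
y_test = np.sin(2 * np.pi * x_test) + rng.normal(0.0, 0.2, x_test.size)

def mse(coeffs, x, y):
    """Mean squared error of the polynomial with coefficients `coeffs` on (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

results = {}  # degree -> (train MSE, test MSE)
for degree in (1, 3, 9):
    coeffs = np.polyfit(x_train, y_train, degree)  # least-squares polynomial fit
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    print(f"degree={degree}: train MSE={results[degree][0]:.4f}, "
          f"test MSE={results[degree][1]:.4f}")
```

Because the three models are nested, training error can only go down as the degree rises; whether test error goes up is exactly the generalization question this section is about.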

Common ways to address overfitting:

- Enlarge the dataset: data augmentation, collecting more data, or synthesizing new data with a generative adversarial network (GAN).

- Regularization methods: BN and dropout.

  - Add BN (batch normalization) layers; BN can improve the model's generalization to some extent.

  - Dropout: randomly deactivate a subset of hidden neurons during training, so that not every neuron is used and updated in every iteration.

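- Reduce model complexity: use fewer network layers, or switch to a model with fewer parameters.

- Train for fewer epochs (also called early stopping: stop iterating before the model has fully converged on the training set, to prevent overfitting). Approach: record the best result after every epoch; if accuracy on the held-out validation set has not improved for 10 epochs, training can be stopped.

- Ensemble learning: combine multiple models to reduce the overfitting risk of any single model.

- Cross-validation: this is somewhat more involved; I have hardly used it and have not studied it closely.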
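The dropout item above can be sketched in NumPy. This is an illustrative sketch, not the author's code: `dropout` is a hypothetical helper, and it implements the "inverted" variant, which scales the surviving activations by 1/(1 - p) during training so nothing has to change at inference time (the convention PyTorch's `torch.nn.Dropout` also follows).

```python
import numpy as np

def dropout(activations: np.ndarray, drop_prob: float, training: bool,
            rng: np.random.Generator) -> np.ndarray:
    """Inverted dropout (hypothetical helper, not a library API).

    During training, each unit is zeroed independently with probability
    `drop_prob`, and surviving units are scaled by 1 / (1 - drop_prob) so the
    expected activation is unchanged. At inference the input is returned as-is.
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return activations
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob  # True = neuron kept
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
hidden = np.ones((1000, 100))  # hypothetical hidden-layer output
train_out = dropout(hidden, 0.5, training=True, rng=rng)
eval_out = dropout(hidden, 0.5, training=False, rng=rng)
print("fraction dropped:", float(np.mean(train_out == 0.0)))
print("mean activation (train):", float(train_out.mean()))
```

Because a fresh mask is drawn on every call, a different random subset of neurons is silenced in each training step, which is what keeps any single neuron from being updated every time.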

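The early-stopping rule from the list above (track the best score each epoch, stop after 10 epochs without improvement) can be sketched as a small helper. This is an assumption-laden illustration, not the author's implementation: `early_stopping_epoch`, the `patience` parameter name, and passing in a precomputed list of per-epoch validation scores are all hypothetical, chosen so the logic can be shown without a training loop.

```python
from typing import Sequence, Tuple

def early_stopping_epoch(val_scores: Sequence[float],
                         patience: int = 10) -> Tuple[int, int]:
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops once `patience` consecutive epochs pass without beating the
    best validation score seen so far; otherwise it runs to the last epoch.
    """
    best_score = float("-inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, score in enumerate(val_scores):
        if score > best_score:
            best_score = score
            best_epoch = epoch
            epochs_without_improvement = 0
            # In a real training loop, checkpoint the model weights here.
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_scores) - 1, best_epoch

# Hypothetical per-epoch validation accuracy: peaks at epoch 3, then plateaus.
scores = [0.60, 0.70, 0.75, 0.78] + [0.77] * 12
stop_epoch, best_epoch = early_stopping_epoch(scores, patience=10)
print(f"stop after epoch {stop_epoch}, restore weights from epoch {best_epoch}")
```

Returning the best epoch as well as the stopping epoch reflects the usual practice of restoring the checkpoint with the best validation score rather than keeping the last weights.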
2. Underfitting

Symptom: the model performs poorly on both the training set and the test set.

Causes:

- The model is too simple, so its learning capacity is weak.

- Poor feature extraction: when the training data has too few features, or the existing features are only weakly correlated with the sample labels, the model is prone to underfitting.

Solutions:

- Increase model complexity, for example by replacing a linear model with a nonlinear one, or by adding layers or neurons to a neural network.

- Add new features: consider feature engineering such as feature combinations.

- If a regularization term has been added to the loss function, consider reducing the regularization coefficient $\lambda$.
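As a sketch of the "add new features" remedy (hypothetical data, not from the original post): a linear model $y \approx w_0 + w_1 x$ cannot fit a quadratic target however it is trained, so its training error stays high; adding the constructed feature $x^2$ lets the same least-squares procedure fit the target exactly. `fit_and_mse` is a hypothetical helper name.

```python
import numpy as np

# Hypothetical data: the target depends on x quadratically (noise-free for clarity).
x = np.linspace(-3.0, 3.0, 61)
y = 2.0 * x**2 - x + 1.0

def fit_and_mse(features: np.ndarray, target: np.ndarray) -> float:
    """Least-squares fit with a bias column; return the training MSE."""
    design = np.column_stack([np.ones(len(target)), features])
    weights, *_ = np.linalg.lstsq(design, target, rcond=None)
    residual = design @ weights - target
    return float(np.mean(residual ** 2))

mse_linear = fit_and_mse(x[:, None], y)                       # features: [x] -> underfits
mse_quadratic = fit_and_mse(np.column_stack([x, x**2]), y)    # features: [x, x^2]
print(f"linear features:    train MSE = {mse_linear:.4f}")
print(f"with x^2 feature:   train MSE = {mse_quadratic:.2e}")
```

The high error of the first model comes from the feature set, not from the optimizer, which is why more training alone does not cure underfitting.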

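3. Regularization

A preliminary note: regularization is not easy to grasp intuitively. Supervised learning has two basic strategies: empirical risk minimization and structural risk minimization. When the sample size is large enough, the model with the minimum empirical risk can be regarded as the optimal model. When the sample size is small, empirical risk minimization does not learn well and tends to overfit. Structural risk minimization (equivalent to regularization) was proposed to prevent this overfitting.

Regularization is an implementation of the structural risk minimization strategy: it adds a regularization term (penalty term) to the empirical risk. The regularization term is generally a monotonically increasing function of model complexity: the more complex the model, the larger the regularization value.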

The regularized objective generally takes the following form:

$$\min_{f \in \mathcal{F}} \frac{1}{N} \sum_{i=1}^{N} L\left(y_{i}, f\left(x_{i}\right)\right) + \lambda J(f)$$

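The first term is the empirical risk and the second is the regularization term; $\lambda \ge 0$ is the coefficient that balances the two.

A model with a smaller empirical risk (first term) may be more complex (it has more nonzero parameters), in which case the second term, the model complexity, will be larger. The role of regularization is to select a model whose empirical risk and complexity are both small.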
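A worked instance of the formula: take squared loss for $L$, a linear model $f(x) = w^\top x$, and, as an assumption (one common choice, not the only one), $J(f) = \lVert w \rVert_2^2$. Setting the gradient of $\frac{1}{N}\lVert Xw - y \rVert^2 + \lambda \lVert w \rVert_2^2$ to zero gives $(X^\top X + N\lambda I)\,w = X^\top y$. The sketch below (synthetic data, hypothetical function names) solves this for several values of $\lambda$; as $\lambda$ grows, $\lVert w \rVert$ shrinks and the empirical risk rises, which is the trade-off described above.

```python
import numpy as np

def empirical_risk(w, X, y):
    """First term: (1/N) * sum_i (y_i - w^T x_i)^2."""
    return float(np.mean((X @ w - y) ** 2))

def structural_risk(w, X, y, lam):
    """Empirical risk plus lam * J(f), with the assumed choice J(f) = ||w||_2^2."""
    return empirical_risk(w, X, y) + lam * float(w @ w)

def ridge_fit(X, y, lam):
    """Closed-form minimiser of structural_risk: solve (X^T X + N*lam*I) w = X^T y."""
    n_samples, n_features = X.shape
    A = X.T @ X + n_samples * lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

# Synthetic data (hypothetical): 20 samples, 8 features, only 2 of them relevant.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 8))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
y = X @ true_w + rng.normal(0.0, 0.5, size=20)

lambdas = [0.0, 0.1, 1.0, 10.0]
norms, risks = [], []
for lam in lambdas:
    w = ridge_fit(X, y, lam)
    norms.append(float(np.linalg.norm(w)))
    risks.append(empirical_risk(w, X, y))
    print(f"lambda={lam:>5}: ||w||={norms[-1]:.3f}, empirical risk={risks[-1]:.4f}")
```

With $\lambda = 0$ this reduces to plain empirical risk minimization; increasing $\lambda$ trades a slightly worse fit on the training data for a smaller, simpler weight vector.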

Reference: Li Hang, "Statistical Learning Methods" (《统计学习方法》), p. 18.

边栏推荐

- 吴恩达机器学习系列课程笔记——第十八章:应用实例:图片文字识别(Application Example: Photo OCR)

- 七分钟深入理解——卷积神经网络(CNN)

- 吴恩达机器学习系列课程笔记——第九章:神经网络的学习(Neural Networks: Learning)

- 数学建模学习(76):多目标线性规划模型(理想法、线性加权法、最大最小法),模型敏感性分析

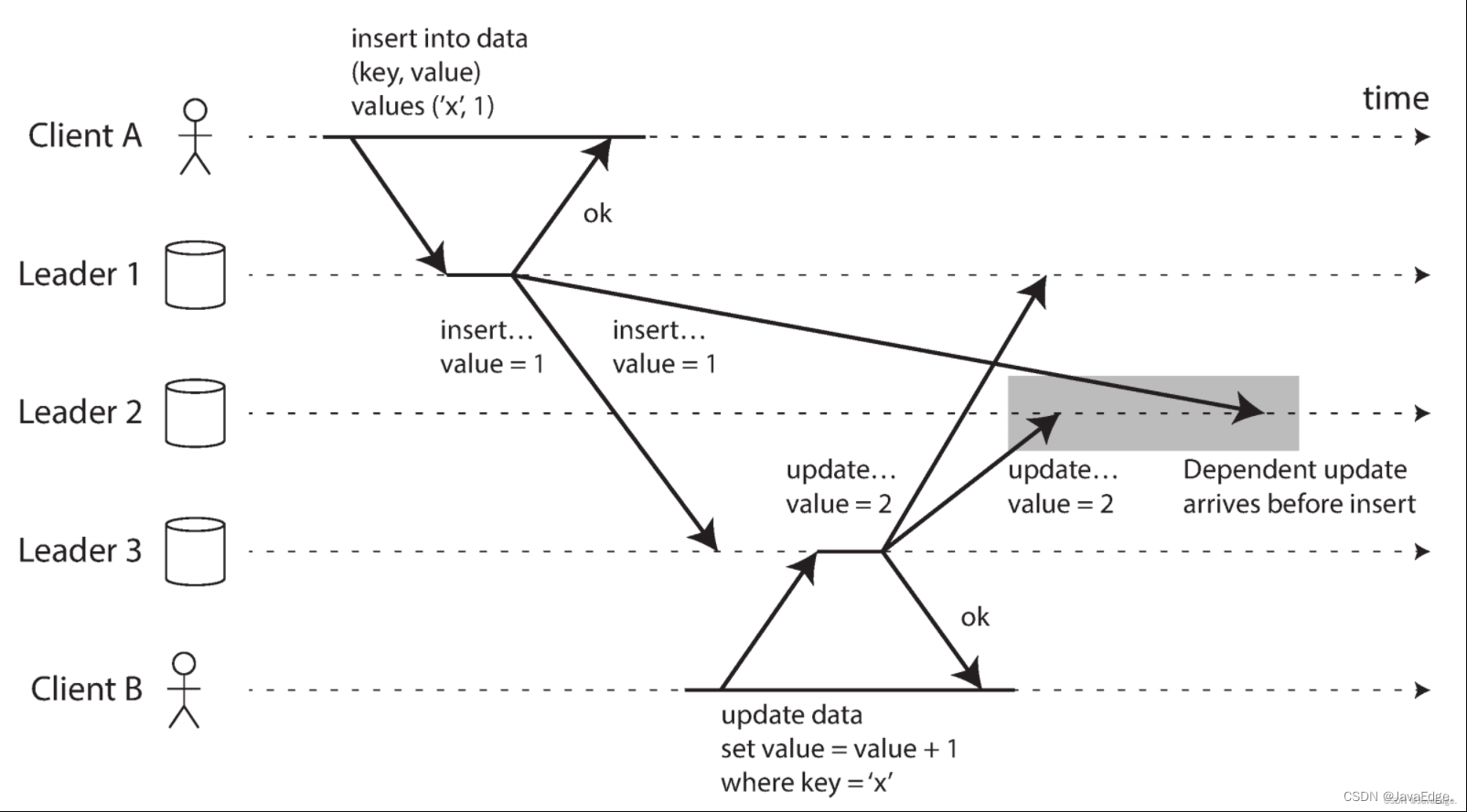

- 数据复制系统设计(2)-同步复制与异步复制

- RuoYi-App启动教程

- 多主复制的适用场景(2)-需离线操作的客户端和协作编辑

- 复制延迟案例(4)-一致前缀读

- WIN10什么都没开内存占用率过高, WIN7单网卡设置双IP

- 使用Ansible编写playbook自动化安装php7.3.14

猜你喜欢

随机推荐

SCI期刊最权威的信息查询步骤!

吴恩达机器学习系列课程笔记——第十八章:应用实例:图片文字识别(Application Example: Photo OCR)

使用Ansible编写playbook自动化安装php7.3.14

SCI writing strategy - with common English writing sentence patterns

Jetson Nano 2GB Developer Kit 安装说明

其他语法和模块的导出导入

深蓝学院-视觉SLAM十四讲-第六章作业

MySQL8.0与MySQL5.7区别

三维目标检测之OpenPCDet环境配置及demo测试

Research Notes (8) Deep Learning and Its Application in WiFi Human Perception (Part 2)

深度学习基础之批量归一化(BN)

Computer Basics

树莓派上QT连接海康相机

ES6中变量的使用及结构赋值

吴恩达机器学习系列课程笔记——第十四章:降维(Dimensionality Reduction)

不会多线程还想进 BAT?精选 19 道多线程面试题,有答案边看边学

吴恩达机器学习系列课程笔记——第六章:逻辑回归(Logistic Regression)

最后写入胜利(丢弃并发写入)

论人生自动化

asyncawait和promise的区别