Preface

Checking logs on a new project is a hassle: you have to search across multiple machines, and you cannot tell whether two entries belong to the same request. You can add a traceId through MDC when printing the log, but how is that traceId passed across systems?

Official account: 『Liu Zhihang』, where I record skills from work and study, development notes, and source-code notes, and occasionally share some life experience. Feedback is welcome!

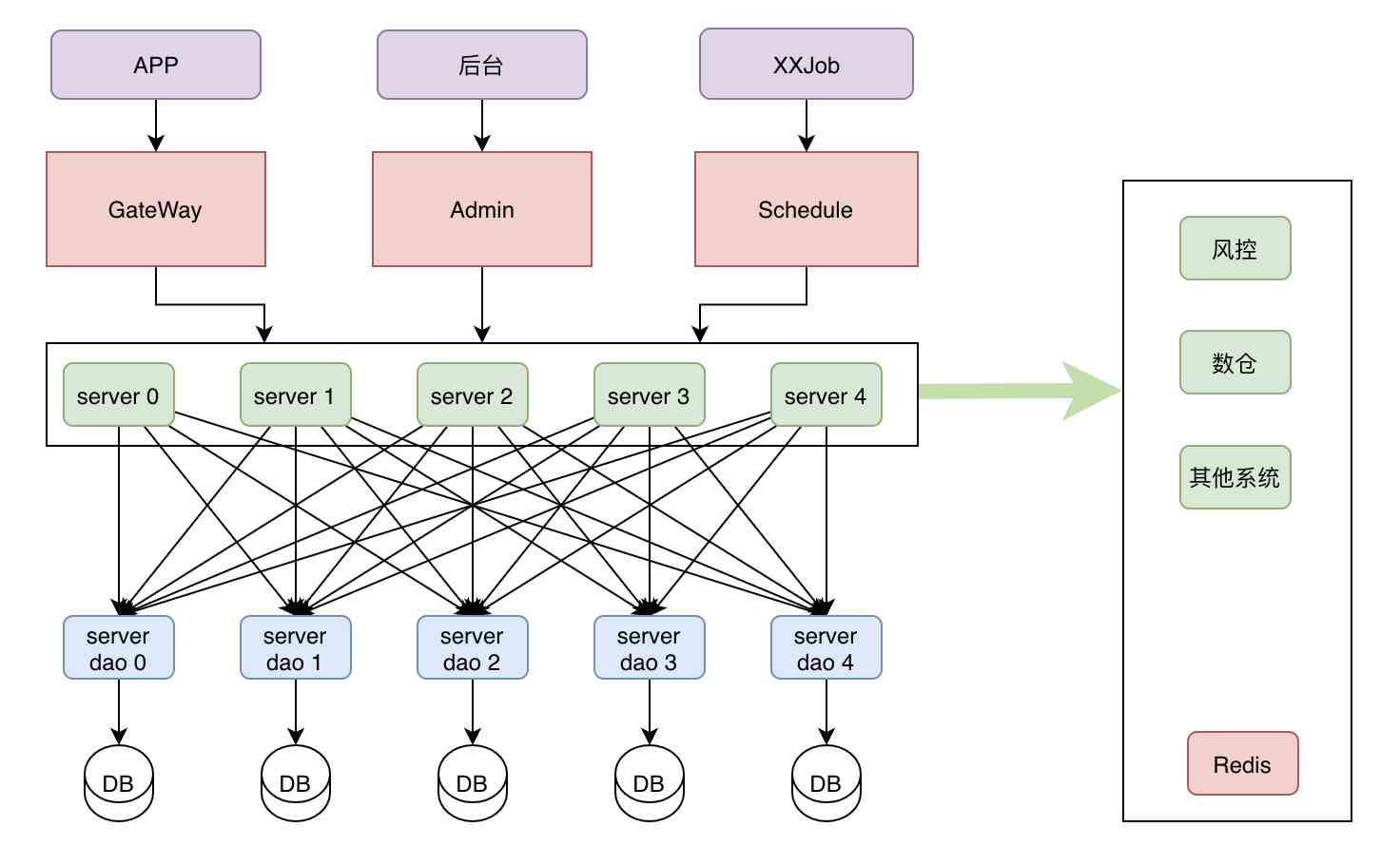

Background

This is another note from developing a new project. Because the architecture is distributed, it involves interaction between systems.

This raises a very common problem:

- A single system is deployed as a cluster, so its logs are spread across multiple servers;

- Several systems log to several machines, so following a single request through the logs is even harder.

Solution

- Use SkyWalking's traceId for link tracing;

- Use Elastic APM's trace.id for link tracing;

- Generate your own traceId and put it into MDC.

MDC

MDC (Mapped Diagnostic Context) is a map that stores context data for a specific thread. If you log with log4j, each thread has its own MDC, and that MDC is global within the thread: any code running on the thread can easily read the values stored in it.
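To make the per-thread behaviour concrete, here is a minimal JDK-only sketch. `MiniMdc` and `getOnNewThread` are hypothetical names written for illustration; this is not the Log4j or SLF4J implementation, and it simply assumes the context is a map held in a `ThreadLocal`, which is the general idea behind MDC.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for MDC (not the Log4j/SLF4J class): one map per thread. */
public class MiniMdc {
    private static final ThreadLocal<Map<String, String>> CONTEXT =
            ThreadLocal.withInitial(HashMap::new);

    public static void put(String key, String value) {
        CONTEXT.get().put(key, value);
    }

    public static String get(String key) {
        return CONTEXT.get().get(key);
    }

    /** Drops the calling thread's whole context, e.g. before a pooled thread is reused. */
    public static void clear() {
        CONTEXT.remove();
    }

    /** Reads a key from a brand-new thread to show that it does not see the caller's values. */
    public static String getOnNewThread(String key) {
        String[] seen = new String[1];
        Thread worker = new Thread(() -> seen[0] = get(key));
        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        put("traceId", "abc-123");
        System.out.println("same thread:  " + get("traceId"));
        System.out.println("other thread: " + getOnNewThread("traceId"));
        clear();
        System.out.println("after clear:  " + get("traceId"));
    }
}
```

Running `main` prints `abc-123` for the same thread and `null` for both the other thread and the read after `clear()`. A value set while handling one request is therefore invisible to code running on a different thread, and it stays on a pooled thread until it is removed.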

How to use MDC

- In log4j2-spring.xml, add %X{traceId} to the log pattern.

      <Property name="LOG_PATTERN">[%d{yyyy-MM-dd HH:mm:ss.SSS}]-[%t]-[%X{traceId}]-[%-5level]-[%c{36}:%L]-[%m]%n</Property>
      <Property name="LOG_PATTERN_ERROR">[%d{yyyy-MM-dd HH:mm:ss.SSS}]-[%t]-[%X{traceId}]-[%-5level]-[%l:%M]-[%m]%n</Property>

      <!-- Omit -->

      <!-- Configuration of the console output -->
      <Console name="Console" target="SYSTEM_OUT" follow="true">
          <!-- Format of the output log -->
          <PatternLayout charset="UTF-8" pattern="${LOG_PATTERN}"/>
      </Console>

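- Add an interceptor

Intercept every request, read the traceId from the request header, and put it into MDC. If the header is missing, generate one with UUID. Remove the value once the request completes, because container threads are pooled and reused.

      import java.util.UUID;

      // javax.servlet is assumed here; newer Spring versions use jakarta.servlet instead
      import javax.servlet.http.HttpServletRequest;
      import javax.servlet.http.HttpServletResponse;

      import lombok.extern.slf4j.Slf4j;
      import org.slf4j.MDC;
      import org.springframework.stereotype.Component;
      import org.springframework.web.servlet.HandlerInterceptor;

      @Slf4j
      @Component
      public class LogInterceptor implements HandlerInterceptor {

          private static final String TRACE_ID = "traceId";

          @Override
          public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) {
              // Reuse the caller's traceId if the header is present; otherwise start a new trace.
              String traceId = request.getHeader(TRACE_ID);
              if (traceId == null || traceId.isEmpty()) {
                  traceId = UUID.randomUUID().toString();
              }
              MDC.put(TRACE_ID, traceId);
              return true;
          }

          @Override
          public void afterCompletion(HttpServletRequest request, HttpServletResponse response, Object handler, Exception ex) {
              // Remove the value so it cannot leak into the next request handled by this thread.
              MDC.remove(TRACE_ID);
          }
      }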

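- Register the interceptor

      import javax.annotation.Resource;

      import org.springframework.context.annotation.Configuration;
      import org.springframework.web.servlet.config.annotation.InterceptorRegistry;
      import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

      @Configuration
      public class WebConfig implements WebMvcConfigurer {

          @Resource
          private LogInterceptor logInterceptor;

          @Override
          public void addInterceptors(InterceptorRegistry registry) {
              // Apply the trace interceptor to every request path.
              registry.addInterceptor(logInterceptor)
                      .addPathPatterns("/**");
          }
      }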

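How to pass the traceId across services

- FeignClient

Because services call each other through FeignClient here, adding a request interceptor is enough.

      import feign.RequestInterceptor;
      import feign.RequestTemplate;
      import org.slf4j.MDC;
      import org.springframework.context.annotation.Configuration;

      @Configuration
      public class FeignInterceptor implements RequestInterceptor {

          private static final String TRACE_ID = "traceId";

          @Override
          public void apply(RequestTemplate requestTemplate) {
              // Copy the current thread's traceId into the outgoing request header.
              String traceId = MDC.get(TRACE_ID);
              if (traceId != null) {
                  requestTemplate.header(TRACE_ID, traceId);
              }
          }
      }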
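The hand-off between the inbound interceptor and the Feign interceptor can be sketched without Spring or Feign. The following JDK-only simulation is an illustration under assumptions: `TracePropagationSketch`, `outboundHeaders`, and `resolveInbound` are hypothetical names, and a plain `Map` stands in for the HTTP headers. It shows the caller copying its trace ID into a header and the callee either reusing it or generating a new one when the header is missing.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

/** Hypothetical simulation of trace ID propagation; a Map stands in for HTTP headers. */
public class TracePropagationSketch {
    static final String TRACE_ID = "traceId";

    /** Caller side (the Feign interceptor's role): copy the current trace ID into the headers. */
    public static Map<String, String> outboundHeaders(String currentTraceId) {
        Map<String, String> headers = new HashMap<>();
        if (currentTraceId != null && !currentTraceId.isEmpty()) {
            headers.put(TRACE_ID, currentTraceId);
        }
        return headers;
    }

    /** Callee side (the LogInterceptor's role): reuse the incoming ID or create a new one. */
    public static String resolveInbound(Map<String, String> headers) {
        String traceId = headers.get(TRACE_ID);
        return (traceId == null || traceId.isEmpty()) ? UUID.randomUUID().toString() : traceId;
    }

    public static void main(String[] args) {
        // Service A already has a trace ID and calls service B.
        String callerTraceId = UUID.randomUUID().toString();
        String calleeTraceId = resolveInbound(outboundHeaders(callerTraceId));
        System.out.println("propagated unchanged: " + callerTraceId.equals(calleeTraceId));

        // A request that arrives without the header starts a new trace.
        String fresh = resolveInbound(new HashMap<>());
        System.out.println("new trace started: " + !fresh.equals(callerTraceId));
    }
}
```

Keeping the header name identical on both sides is what ties the two halves together; if the caller and callee disagree on the name, every hop silently starts a new trace.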

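- Dubbo

With Dubbo, you can pass the traceId by extending Filter.

- Write the filter

      import java.util.UUID;

      import org.apache.dubbo.common.extension.Activate;
      import org.apache.dubbo.rpc.Filter;
      import org.apache.dubbo.rpc.Invocation;
      import org.apache.dubbo.rpc.Invoker;
      import org.apache.dubbo.rpc.Result;
      import org.apache.dubbo.rpc.RpcContext;
      import org.apache.dubbo.rpc.RpcException;
      import org.slf4j.MDC;

      @Activate(group = {"provider", "consumer"})
      public class TraceIdFilter implements Filter {

          @Override
          public Result invoke(Invoker<?> invoker, Invocation invocation) throws RpcException {
              RpcContext rpcContext = RpcContext.getContext();
              if (rpcContext.isConsumerSide()) {
                  // Consumer: send the current traceId (or a new one) as an RPC attachment.
                  String traceId = MDC.get("traceId");
                  if (traceId == null) {
                      traceId = UUID.randomUUID().toString();
                  }
                  rpcContext.setAttachment("traceId", traceId);
              }
              if (rpcContext.isProviderSide()) {
                  // Provider: copy the attachment into MDC; skip it if the caller sent none.
                  String traceId = rpcContext.getAttachment("traceId");
                  if (traceId != null) {
                      MDC.put("traceId", traceId);
                  }
              }
              return invoker.invoke(invocation);
          }
      }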

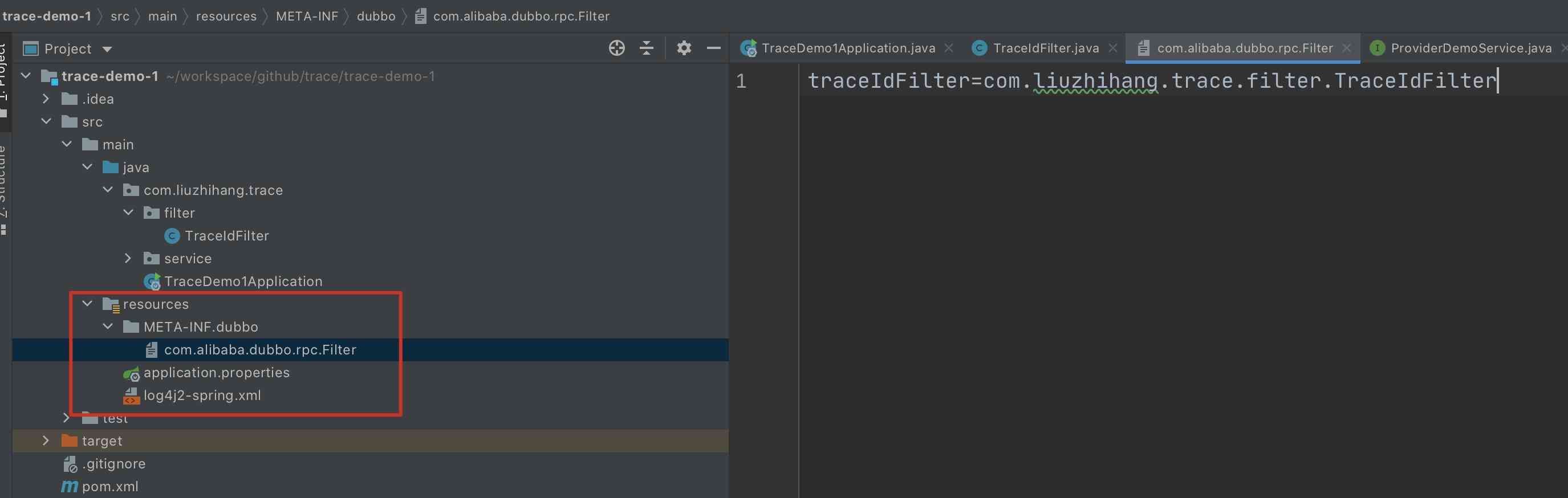

- Register the filter

      src
       |-main
          |-java
              |-com
                  |-xxx
                      |-XxxFilter.java (implements the Filter interface)
          |-resources
              |-META-INF
                  |-dubbo
                      |-org.apache.dubbo.rpc.Filter (plain-text file containing: xxx=com.xxx.XxxFilter)

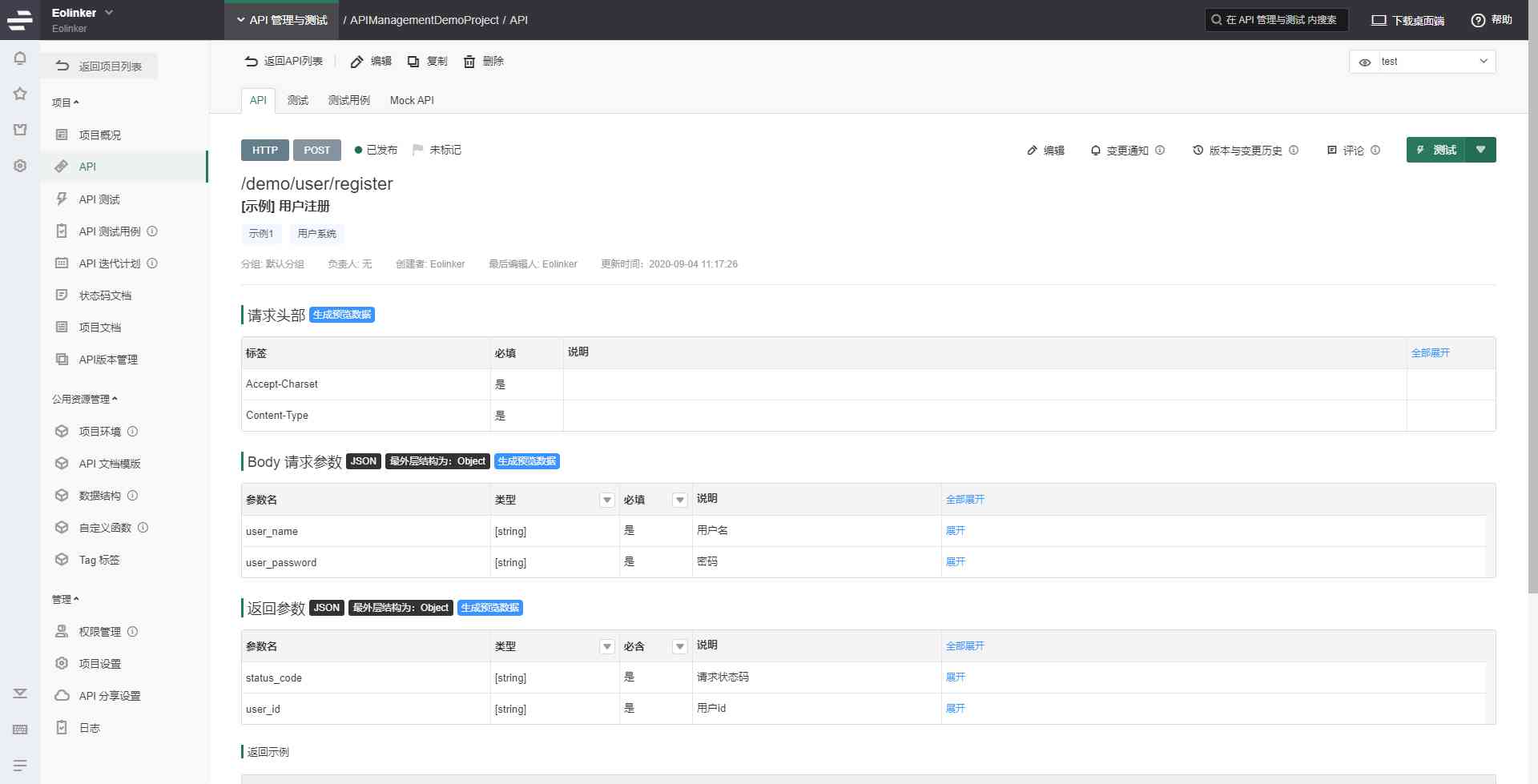

The screenshot is as follows:

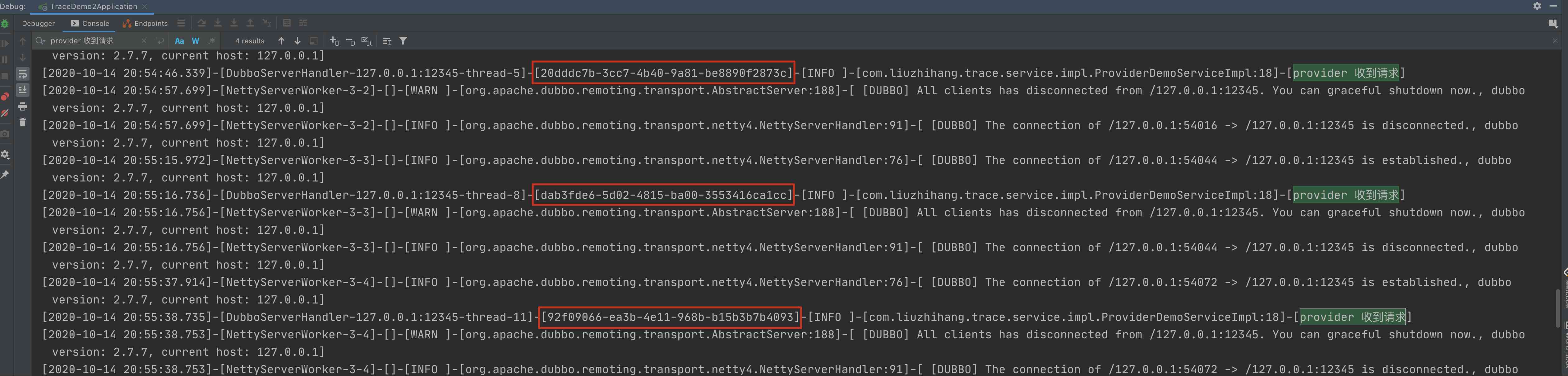

The test results are as follows:

The source code for the Dubbo filter is linked at the end of this article.

You can also follow the official account and send "traceid" to get it.

Other approaches

Of course, if you use SkyWalking or Elastic APM, the trace ID can also be injected as follows:

- SkyWalking

      <dependency>
          <groupId>org.apache.skywalking</groupId>
          <artifactId>apm-toolkit-log4j-2.x</artifactId>
          <version>{project.release.version}</version>
      </dependency>

Then add [%traceId] to the pattern in log4j2.xml.

- Elastic APM

  - Set enable_log_correlation to true at startup

  - Add %X{trace.id} to the pattern in log4j2.xml

Extension

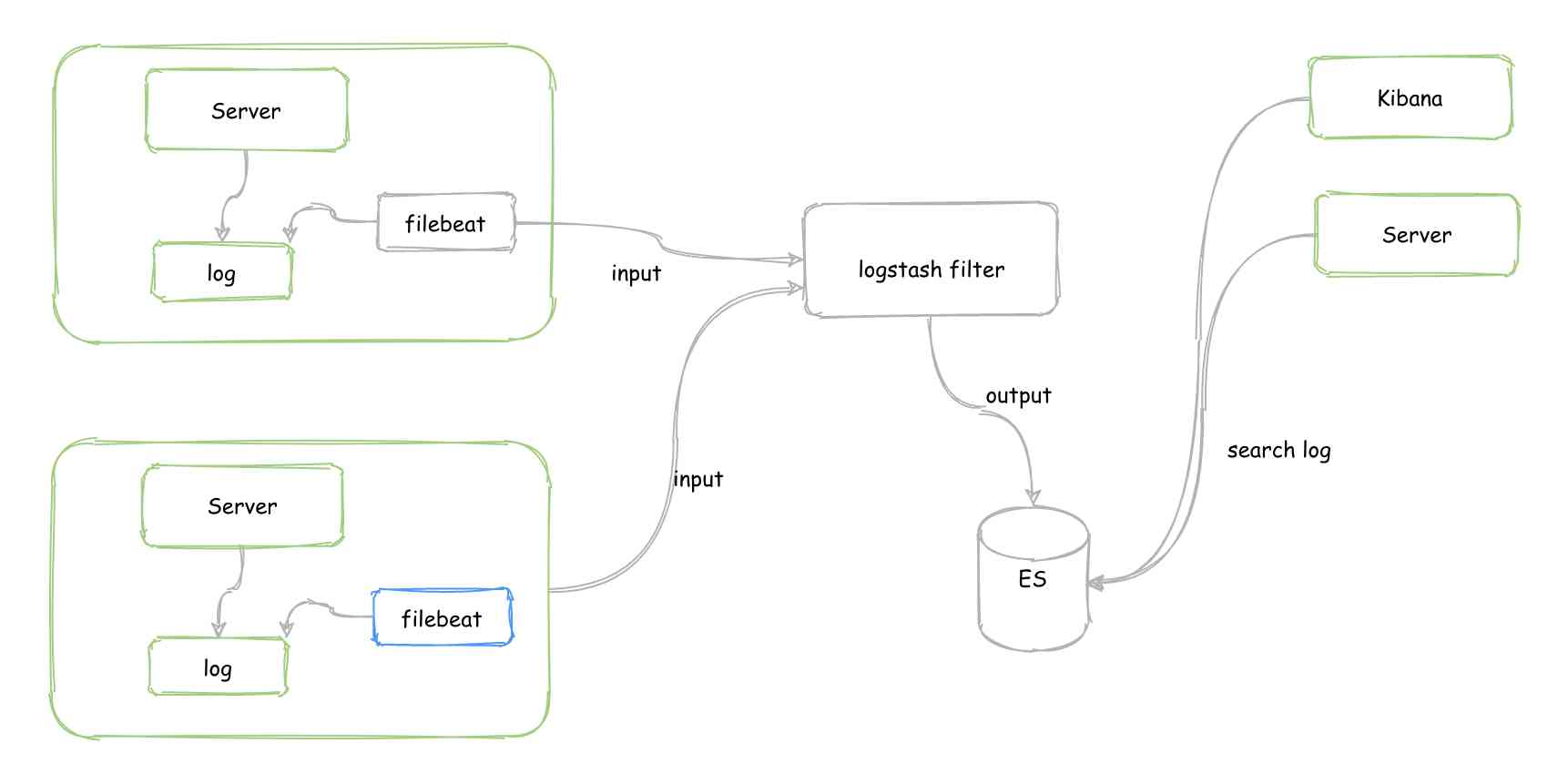

Unified log collection

With a traceId you can follow a request along the whole call chain and query its logs, but those logs still sit on multiple servers. To make queries more efficient, consider collecting the logs in one place.

A common approach is a log system based on ELK:

- Filebeat collects the logs and ships them to Logstash

- Logstash parses and filters the logs, then outputs them to Elasticsearch

- Kibana, or a visualization tool you develop yourself, queries the logs from Elasticsearch

Conclusion

This article mainly records problems I ran into during recent development, and I hope it helps. Corrections are welcome if you spot any shortcomings. If you have other suggestions or opinions, please leave a comment so we can discuss and improve together.

Related information

- Log4j 2 API: https://logging.apache.org/log4j/2.x/manual/thread-context.html

- SkyWalking: https://github.com/apache/skywalking/tree/master/docs/en/setup/service-agent/java-agent

- Elastic APM: https://www.elastic.co/guide/en/apm/agent/java/current/log-correlation.html

- Dubbo filter: http://dubbo.apache.org/zh-cn/docs/dev/impls/filter.html

- Dubbo filter demo for this article: https://github.com/liuzhihang/trace