PostgreSQL upgrade

Using an upgrade from PostgreSQL 9.1 to PostgreSQL 11 as the example (skipping six major versions: 9.2, 9.3, 9.4, 9.5, 9.6 and 10), this article shares practical experience from upgrading dozens of production PostgreSQL clusters over the past year.

The same process also applies to major-version upgrades from releases later than 9.1.

Preparation

Announce the upgrade

Announce the upgrade and the relevant precautions by email or IM, so that the people involved can plan their work in advance and provide online support during the upgrade. For applications that must stop service, notify end users of the downtime window in advance.

Check the existing logs for errors

Have you ever run into a situation like this?

After a database upgrade, developers find errors in the application: for example, no permission to access a table, or some applications cannot connect to the database at all, and everyone blames the upgrade. Is fixing the problem the end of it? Of course not: you also need to find the cause and which step went wrong. Eventually you find that nothing was wrong with the upgrade itself. You then check the database logs from before the upgrade, if they have not been deleted, and learn that the problem already existed before the upgrade.

Or, after the upgrade, you check the database log and at first glance see errors about access to some tables. You start troubleshooting blindly and finally fix the problem, but by then the announced maintenance window has already passed. Only when checking the older logs later do you learn that the problem existed before and had nothing to do with the upgrade, and a lot of valuable upgrade time was wasted.

So some errors are not caused by the upgrade but existed beforehand. The purpose of this step is to find such errors as early as possible and rule out problems unrelated to the upgrade in advance.

Check the PostgreSQL logs with the following command:

grep -i -E 'error:|fatal:|warning:' postgresql-*|less

If there are errors, view them with some context:

grep -A 2 -i -E 'error:|fatal:|warning:' postgresql-*|less

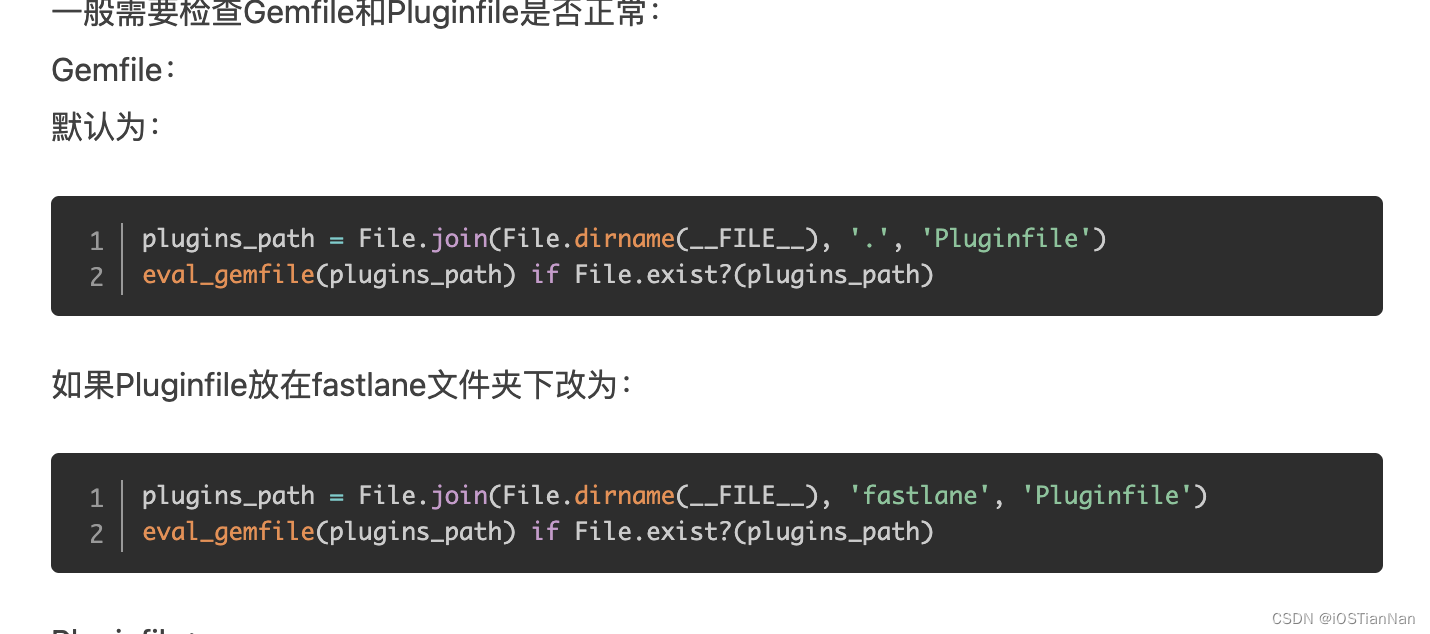
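To tell recurring, pre-existing errors apart from one-off ones, it can help to count identical messages across all old log files. The sketch below assumes English server messages (severity written in upper case as ERROR:, FATAL:, WARNING: or PANIC:) and plain-text logs; log_error_summary is a hypothetical helper, not part of PostgreSQL.

```shell
# Count identical ERROR/FATAL/WARNING/PANIC messages across PostgreSQL logs.
# Assumes English messages (severity in upper case followed by a colon).
# The sed step drops the timestamp/PID prefix so identical messages group
# together; the most frequent messages are printed first.
log_error_summary() {
  grep -hE '(ERROR|FATAL|WARNING|PANIC):' "$@" |
    sed -E 's/.*(ERROR|FATAL|WARNING|PANIC):[[:space:]]*/\1: /' |
    sort | uniq -c | sort -rn
}

# Usage (in the log directory):
#   log_error_summary postgresql-* | less
```

Messages with a high count are usually long-standing problems worth writing down before the upgrade; the grep -A 2 command above shows their context.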

Merge ACL

If the cluster is not under configuration management (such as Ansible), or there is no mechanism that keeps pg_hba.conf identical, or consistent according to defined rules, across all cluster instances, you need to check and compare the files manually. Otherwise, after a later primary/standby switchover, applications may be unable to access the database because of inconsistent ACLs.

It is recommended to manage pg_hba.conf and other configuration files with configuration management. Comparing them manually means going through many lines for every instance in the cluster. A home-grown compare-and-merge script is not intuitive, and bugs in it are hard to spot until an application has already been affected. Some instances also allow access from entire large subnets (such as 10.0.0.0/8); merging such rules means opening that access on every instance in the cluster, and once the ACL is loosened, database security is reduced.
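As a review aid when there is no configuration management, the following sketch normalizes two pg_hba.conf files (comments, blank lines and repeated whitespace removed) and prints the rules that exist in only one of them. It assumes no '#' appears inside quoted fields; hba_rules and hba_diff are hypothetical helper names. Because pg_hba.conf is evaluated top to bottom and the first matching rule wins, the output is meant for manual review, not automatic merging.

```shell
# Print pg_hba.conf rules in normalized form: comments, blank lines and
# repeated whitespace removed, so pure formatting differences are ignored.
hba_rules() {
  sed -e 's/#.*//' -e 's/[[:space:]][[:space:]]*/ /g' -e 's/^ //' -e 's/ $//' "$1" |
    grep -v '^$'
}

# Show rules present in only one of two files:
#   "< rule" = only in the first file, "> rule" = only in the second.
hba_diff() {
  local a b
  a=$(mktemp) && b=$(mktemp) || return 1
  hba_rules "$1" | LC_ALL=C sort -u > "$a"
  hba_rules "$2" | LC_ALL=C sort -u > "$b"
  LC_ALL=C comm -23 "$a" "$b" | sed 's/^/< /'
  LC_ALL=C comm -13 "$a" "$b" | sed 's/^/> /'
  rm -f "$a" "$b"
}

# Usage (after copying each instance's file locally):
#   hba_diff db1/pg_hba.conf db2/pg_hba.conf
```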

Initialize the new-version cluster

Cluster initialization

Here we use configuration management automation as an example.

Ansible:

ansible-playbook playbooks/cluster.yml -i inv.ini -e 'server_group=cluster1' -D

Salt:

salt -E 'db[1-2].az1|db3.az2' state.sls cluster

postgres database

If information is stored in the postgres database, such as metadata, procedures or views, you can import it when initializing the cluster or separately later.

Ideally, integrate this into the configuration management above.

Archive

Note that the data migration generates a large amount of WAL, so set a no-op archive_command on the new cluster to avoid unnecessary I/O and backups and to keep the archiver process from blocking. Restore the original archive_command after the data migration.

For example, set it to:

archive_command = 'cd .'

or

archive_command = '/bin/true'

Port

If the database is upgraded in place on the same machines, the new cluster must use a temporary port until the upgrade is complete.

For example:

port = 6432
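Both temporary settings above, the no-op archive_command and the temporary port, have to be reverted at switchover time. One way to keep that reversible is a small filter that comments out the active line, appends the temporary value, and tags both lines so they can be undone later. This is a sketch under stated assumptions: it relies on PostgreSQL using the last entry when a parameter appears more than once in postgresql.conf, it does not handle values containing backslashes, and the marker text and function names are hypothetical.

```shell
# Hypothetical marker used to tag every line the helpers touch.
PG_UPGRADE_MARK='# pg-upgrade-temp'

# Read postgresql.conf on stdin. Comment out any active "name = ..." line and
# append "name = value" at the end, tagging both with the marker. Lines written
# as "name value" (no "=") are not commented out, but the appended entry still
# wins because PostgreSQL uses the last entry for a parameter.
upgrade_override() {
  awk -v name="$1" -v line="$1 = $2 $PG_UPGRADE_MARK" -v mark="$PG_UPGRADE_MARK" '
    {
      probe = $0
      sub(/^[ \t]*/, "", probe)
      if (index(probe, name) == 1 && substr(probe, length(name) + 1) ~ /^[ \t]*=/) {
        print "#" $0 " " mark
        next
      }
      print
    }
    END { print line }'
}

# Undo upgrade_override on stdin: drop the appended lines and uncomment the
# original lines.
upgrade_restore() {
  awk -v mark="$PG_UPGRADE_MARK" '
    index($0, mark) == 0 { print; next }
    substr($0, 1, 1) == "#" {
      s = substr($0, 2)
      print substr(s, 1, length(s) - length(mark) - 1)
    }'
}

# Usage:
#   upgrade_override port 6432 < postgresql.conf > postgresql.conf.new
#   upgrade_override archive_command "'/bin/true'" < postgresql.conf.new > postgresql.conf.tmp
#   upgrade_restore < postgresql.conf > postgresql.conf.orig   # at switchover
```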

PostgreSQL extensions

Some extensions in early PostgreSQL versions were not created with CREATE EXTENSION. They are not visible via \dx, and the SQL produced by pg_dump contains no CREATE EXTENSION statement for them, so CREATE EXTENSION must be run separately.

The software packages for the extensions on the new version have already been installed by the Ansible playbook or Salt states above.

This section assumes that CREATE DATABASE and CREATE EXTENSION have not been run yet. If they are already part of your configuration management automation, skip this step.

On CentOS, for example, list the extension packages installed for the old version with:

rpm -qa|grep pg

List the extensions installed via CREATE EXTENSION in each database with the psql meta-command:

\dx

Comparing the two results reveals the extensions that were not created with CREATE EXTENSION.

Suppose postgis in the old version was not created with CREATE EXTENSION. Create it manually on the new version:
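The comparison can be scripted once the expected extension names are written down. Package names from rpm (for example postgis packages with a version suffix) do not map to extension names automatically, so the sketch below assumes a hand-maintained file of expected extension names; missing_extensions is a hypothetical helper, and pg_extension is the catalog behind \dx.

```shell
# Print extension names listed in the first file but missing from the second.
#   $1: expected extension names, one per line (hand-maintained)
#   $2: registered extensions, e.g. psql -Atc "SELECT extname FROM pg_extension"
# FILENAME is compared instead of NR == FNR so an empty $2 is handled correctly.
missing_extensions() {
  awk 'FILENAME == ARGV[1] { if (NF) have[$1] = 1; next }
       NF && !($1 in have) { print $1 }' "$2" "$1" | sort -u
}

# Usage (old cluster):
#   psql -p 5432 -U postgres -d alvindb -Atc "SELECT extname FROM pg_extension" > installed.txt
#   missing_extensions expected.txt installed.txt
```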

psql -p 6432 -U postgres -c "CREATE ROLE alvin;"

psql -p 6432 -U postgres -c "CREATE DATABASE alvindb WITH OWNER = alvin;"

psql -p 6432 -d alvindb -U postgres -c "CREATE EXTENSION postgis;"

Notes

If different versions of extension software have conflicting dependencies, you may need to uninstall or upgrade some packages, as long as this does not affect the original database.

Extensions, or their dependencies, that were installed from source can be uninstalled by running the following in the source directory used for the installation:

make uninstall

Related article: Investigating why creating the PostGIS extension failed.

Stop the affected scheduled tasks

Some clusters run scheduled tasks for VACUUM, backups or other jobs.

During the upgrade, stop the affected scheduled tasks to avoid unnecessary failures or interference with the upgrade.

The following uses the crontab of the postgres user as an example.

You can check each instance manually:

su - postgres

crontab -l

Or check them all through your configuration management tool.

Ansible:

ansible -i inv.ini -m shell -a 'sudo -iu postgres crontab -l' cluster1

Salt:

salt -E 'db[1-2].az1|db3.az2' cmd.run 'sudo -iu postgres crontab -l'
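If the crontab is not managed by a configuration tool, a reversible way to pause only the affected jobs is to comment them out with a recognizable tag. A minimal sketch, assuming the jobs can be identified by an extended regular expression and that the tag #UPGRADE# does not already occur in the crontab (both function names are hypothetical):

```shell
# Comment out crontab lines matching an extended regex, tagging them so they
# can be restored exactly. Lines that are already comments are left alone.
disable_cron_jobs() {
  awk -v re="$1" '
    /^[ \t]*#/ { print; next }
    $0 ~ re    { print "#UPGRADE# " $0; next }
               { print }'
}

# Restore the lines commented out by disable_cron_jobs.
enable_cron_jobs() {
  sed 's/^#UPGRADE# //'
}

# Usage (as postgres):
#   crontab -l > crontab.before-upgrade
#   crontab -l | disable_cron_jobs 'vacuum|backup' | crontab -
#   crontab -l | enable_cron_jobs | crontab -        # after the upgrade
```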

Keep observing database logs

Open a separate window for each machine in the old and new clusters, and keep watching the log with the following command.

This command automatically picks the most recent log file.

cd log

ls -lth|head -2|grep post && tail -f $(ls -lth|head -2|grep post|awk '{print $NF}')|grep -i -E 'error:|fatal:|warning:'

To watch for write operations as well as errors:

ls -lth|head -2|grep post && tail -f $(ls -lth|head -2|grep post|awk '{print $NF}')|grep -i -E 'error:|fatal:|warning:|insert |update |delete |copy '
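The ls -lth|head -2|grep post idiom prints nothing if the newest file in the directory is not a PostgreSQL log. A slightly more robust sketch restricts the listing to postgresql-* files before picking the newest one; it assumes the default log file naming (no spaces in names), and latest_pg_log is a hypothetical helper.

```shell
# Print the most recently modified postgresql-* file in a directory
# (default: current directory). Assumes file names contain no whitespace.
latest_pg_log() {
  ls -t "${1:-.}"/postgresql-* 2>/dev/null | head -n 1
}

# Usage:
#   tail -F "$(latest_pg_log log)" | grep -i -E 'error:|fatal:|warning:'
# Like the original command, this keeps following one file, so re-run it
# after log rotation.
```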

Turn off alerting

Turn off database-related monitoring to avoid unnecessary alerts, for both the old and the new clusters.

If your alerting can be configured at a fine granularity, keep the necessary alerts for the original cluster, so that you are still notified if something goes wrong with it during the upgrade.

Ask the developers to prepare for the data migration

Database write operation

If the application can tolerate a stop in writes, have the developers stop or block the tasks that write to the database.

If data must be written continuously, you need a custom incremental synchronization solution or a different upgrade approach.

You can check whether new writes still appear in the log with the following commands; this relies on log_statement being set to 'mod' (or 'all'):

grep log_statement postgresql.conf

log_statement = 'mod'

cd pg_log

ls -lth|head -2|grep post && tail -f $(ls -lth|head -2|grep post|awk '{print $NF}')|grep -i -E 'insert |update |delete '

If unplanned writes still occur, extract the client IPs that are still writing, map them to the corresponding server groups, and ask the developers to confirm.

grep -i -E 'insert |update |delete |copy ' postgresql-*.log|grep -Eo '[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}'|sort|uniq
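A variant of the command above counts write statements per client IP, which makes the heaviest remaining writers obvious. It assumes log_line_prefix contains %h or %r so the client address appears before the statement text, and it takes the first IPv4-looking string on each line rather than every match; writer_ips is a hypothetical helper.

```shell
# Count write statements per client IP in PostgreSQL logs (or stdin).
# Uses the first IPv4-looking string on each matching line, which is the
# client address when log_line_prefix contains %h or %r.
writer_ips() {
  grep -hiE 'insert |update |delete |copy ' "$@" |
    awk 'match($0, /[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+/) { print substr($0, RSTART, RLENGTH) }' |
    sort | uniq -c | sort -rn
}

# Usage:
#   writer_ips postgresql-*.log
```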

Database read operation

For read operations, the original database can keep serving reads during the data migration, but reads are briefly interrupted (for a few seconds) at the moment of switching from the old database to the new one. If reads must not be affected and there is no load balancer, consider a better solution.

Taking a VIP setup as an example, during an instance-level upgrade reads on the primary are briefly interrupted three times and writes are unavailable, while reads on each standby are briefly interrupted once.

In detail:

- To keep the primary strictly read-only, move the primary's VIP to a standby before the upgrade. Reads through the primary VIP are briefly interrupted and writes fail. During the upgrade, all databases are readable but not writable.

- Reads are briefly interrupted for a few seconds when switching from the old databases to the new ones.

- After the upgrade, move the primary's VIP back to the primary machine; reads on the primary are briefly interrupted again. Once the VIP is back, writes work normally.

Observe the application

Watch for application alerts or errors. If errors are caused by the database upgrade, report them promptly.

Check existing database connections

Before the upgrade, check the live connections on each instance of the original cluster; after the upgrade, check whether corresponding new connections appear on the new cluster instances.

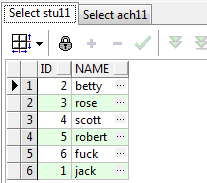

PostgreSQL 9.2 and later versions

SELECT
    datname,
    usename,
    application_name,
    client_addr,
    client_hostname,
    state,
    COUNT(1) AS connections
FROM pg_stat_activity
WHERE pid <> pg_backend_pid()
GROUP BY
    datname,
    usename,
    application_name,
    client_addr,
    client_hostname,
    state
ORDER BY
    connections DESC,
    datname,
    usename,
    application_name,
    client_addr,
    client_hostname,
    state;

PostgreSQL 9.1

SELECT
    datname,
    usename,
    application_name,
    client_addr,
    client_hostname,
    COUNT(1) AS connections
FROM pg_stat_activity
WHERE procpid <> pg_backend_pid()
GROUP BY
    datname,
    usename,
    application_name,
    client_addr,
    client_hostname
ORDER BY
    connections DESC,
    datname,
    usename,
    application_name,
    client_addr,
    client_hostname;
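Because the 9.1 and 9.2+ queries differ only in the pid column name and the state column, the query can be generated from server_version_num so one script works against both the old and the new cluster. A sketch under that assumption; conn_summary_sql is a hypothetical helper.

```shell
# Build the connection-summary query for a given server_version_num.
# 9.1 and earlier: procpid, no state column. 9.2 and later: pid and state.
conn_summary_sql() {
  local pid_col=pid cols='datname, usename, application_name, client_addr, client_hostname'
  if [ "$1" -lt 90200 ]; then
    pid_col=procpid
  else
    cols="$cols, state"
  fi
  printf 'SELECT %s, COUNT(1) AS connections FROM pg_stat_activity WHERE %s <> pg_backend_pid() GROUP BY %s ORDER BY connections DESC, %s;\n' \
    "$cols" "$pid_col" "$cols" "$cols"
}

# Usage:
#   ver=$(psql -p 5432 -U postgres -Atc 'SHOW server_version_num')
#   psql -p 5432 -U postgres -c "$(conn_summary_sql "$ver")"
```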

Set the primary to read-only

Instance-level read-only

If you are upgrading the entire instance, you can make the whole instance read-only.

Modify the configuration file :

vi postgresql.conf

Set default_transaction_read_only to on:

default_transaction_read_only = on

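Reload the configuration to apply it. Existing connections do not need to be terminated: the new default also applies to new transactions in existing sessions. Note that this is only a default; a client can still override it with SET or BEGIN READ WRITE, which is why the primary VIP is also moved to a standby below.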

psql -U postgres -d postgres -p 5432 -c 'SHOW default_transaction_read_only'

psql -U postgres -d postgres -p 5432 -c 'SELECT pg_reload_conf()'

psql -U postgres -d postgres -p 5432 -c 'SHOW default_transaction_read_only'

Database-level read-only

When you need to migrate one or more databases out of an instance, set them read-only at the database level. For example, an instance hosts several databases that are large (over 1 TB), and for reasons of performance, backup jobs or disk space some of them must be moved out; or databases belonging to different departments or business lines are being split out of a shared instance.

Run the following SQL to make a database read-only:

ALTER DATABASE alvindb SET default_transaction_read_only = on;

Note that this only applies to new connections, so terminate existing connections before migrating data.

PostgreSQL 9.2 and later versions

View connections:

SELECT * FROM pg_stat_activity WHERE datname = 'alvindb' AND state = 'idle in transaction';

SELECT * FROM pg_stat_activity WHERE datname = 'alvindb' AND state = 'idle';

SELECT * FROM pg_stat_activity WHERE datname = 'alvindb';

Terminate connections:

SELECT pg_terminate_backend(pid) FROM pg_stat_activity WHERE datname = 'alvindb' AND state = 'idle in transaction';

SELECT pg_terminate_backend(pid) FROM pg_stat_activity WHERE datname = 'alvindb' AND state = 'idle';

SELECT pg_terminate_backend(pid) FROM pg_stat_activity WHERE datname = 'alvindb';

PostgreSQL 9.1

View connections:

SELECT * FROM pg_stat_activity WHERE datname = 'alvindb' AND current_query = '<IDLE> in transaction';

SELECT * FROM pg_stat_activity WHERE datname = 'alvindb' AND current_query = '<IDLE>';

SELECT * FROM pg_stat_activity WHERE datname = 'alvindb';

Terminate connections:

SELECT pg_terminate_backend(procpid) FROM pg_stat_activity WHERE datname = 'alvindb' AND current_query = '<IDLE> in transaction';

SELECT pg_terminate_backend(procpid) FROM pg_stat_activity WHERE datname = 'alvindb' AND current_query = '<IDLE>';

SELECT pg_terminate_backend(procpid) FROM pg_stat_activity WHERE datname = 'alvindb';
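The terminate statements above can be generated the same way for either version, with the caller's own backend excluded and the database name escaped as a SQL literal. A minimal sketch; terminate_sql is a hypothetical helper, and it only accepts the states used above.

```shell
# Build a pg_terminate_backend() statement for one database.
#   $1 server_version_num, $2 database name,
#   $3 optional state: '' (all), 'idle' or 'idle in transaction'
# The caller's own backend is always excluded.
terminate_sql() {
  local ver=$1 state=${3:-} db pid_col=pid filter=
  db=$(printf '%s' "$2" | sed "s/'/''/g")   # escape quotes for a SQL literal
  if [ "$ver" -lt 90200 ]; then
    pid_col=procpid
    case $state in
      '') ;;
      idle) filter=" AND current_query = '<IDLE>'" ;;
      'idle in transaction') filter=" AND current_query = '<IDLE> in transaction'" ;;
      *) echo "terminate_sql: unsupported state for 9.1: $state" >&2; return 1 ;;
    esac
  elif [ -n "$state" ]; then
    filter=" AND state = '$(printf '%s' "$state" | sed "s/'/''/g")'"
  fi
  printf "SELECT pg_terminate_backend(%s) FROM pg_stat_activity WHERE datname = '%s'%s AND %s <> pg_backend_pid();\n" \
    "$pid_col" "$db" "$filter" "$pid_col"
}

# Usage:
#   psql -p 5432 -U postgres -d postgres -c "$(terminate_sql "$ver" alvindb idle)"
```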

Move the primary VIP to a standby (instance level)

When upgrading an entire instance, to keep the primary strictly read-only, move the VIP that applications connect through to a standby.

After the VIP has moved, run the following commands on both the primary and the standby to check connections through the VIP:

netstat -tnp|grep 5432|grep 10.20.20.10

netstat -tnp|grep 5432|grep 10.20.20.10|wc -l
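grep 5432 also matches ports such as 54320, and grepping for the VIP matches either end of a connection. The sketch below matches the local address column exactly and counts only ESTABLISHED connections; it assumes the Linux net-tools netstat -tn column layout (ss prints different columns), and count_vip_conns is a hypothetical helper.

```shell
# Count ESTABLISHED connections whose local address is exactly VIP:PORT in
# `netstat -tn` output (columns: Proto Recv-Q Send-Q Local Foreign State).
count_vip_conns() {
  awk -v addr="$1:$2" '$4 == addr && $6 == "ESTABLISHED" { n++ } END { print n + 0 }'
}

# Usage:
#   netstat -tn | count_vip_conns 10.20.20.10 5432
```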

Data migration

Run the following steps inside a screen session.

Data migration here uses the Easy Dump shell script tool, which wraps 16 pg_dump use cases; after setting the relevant parameters, a single command is enough.

The equivalent pg_dump commands are also given for reference.

Some common cases follow (quoted from the Easy Dump documentation).

Dump all schema and data

Use this simplest approach to dump all databases and users of a PostgreSQL instance in either of the following cases:

- The instance size is quite small

- You can afford to wait for an hours-long dump

Easy Dump command

bash pg_dump.sh -v -M ALL

PostgreSQL pg_dump command

time "${PGBIN}"/pg_dumpall -v -U "${DBUSER}" -h "${DBHOST}" -p "${DBPORT}" 2>>"${lv_dump_log}" | "${PGBIN}"/psql -U postgres -p "${DBPORT_TARGET}" -e &>>"${lv_restore_log}"

Dump all tables of a database

In the following cases, dump the users separately and then dump the database:

- The database size is quite small

- You can afford to wait for an hours-long dump

- You are separating one database from a large instance that hosts several databases, or you simply don't need the other databases

Easy Dump command

bash pg_dump.sh -v -M DB -d alvindb

PostgreSQL pg_dump command

time "${PGBIN}"/pg_dump -v -U "${DBUSER}" -h "${DBHOST}" -p "${DBPORT}" -d "${lv_dbname}" 2>>"${lv_dump_log}" | "${PGBIN}"/psql -U postgres -p "${DBPORT_TARGET}" -d "${lv_dbname}" -e &>>"${lv_restore_log}"

Dump all tables, specified tables are dumped in parallel

In some cases you need to dump a database and dump some of the tables in parallel.

- The database to be dumped contains one or more huge or time-consuming tables

- You need to minimize the dump time to reduce the impact on the application

Easy Dump command

bash pg_dump.sh -v -M DB -d alvindb -T "public.tb_vacuum alvin.tb_alvindb_vacuum" -L -t 3

PostgreSQL pg_dump command

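First, dump the database with those tables excluded.

You can use a single -T option with a table pattern. Note that the pattern follows psql pattern rules rather than being a plain regular expression: the schema part and the table part are matched independently, so in rare cases, such as when the same table name exists in several schemas, it may match more tables than intended.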

time "${PGBIN}"/pg_dump -v -U "${DBUSER}" -h "${DBHOST}" -p "${DBPORT}" -d "${lv_dbname}" -T "public|alvin.tb_vacuum|tb_alvindb_vacuum" 2>>"${lv_dump_log}" | "${PGBIN}"/psql -U postgres -p "${DBPORT_TARGET}" -d "${lv_dbname}" -e &>>"${lv_restore_log}"

You can also use multiple -T options to specify all tables to be excluded.

time "${PGBIN}"/pg_dump -v -U "${DBUSER}" -h "${DBHOST}" -p "${DBPORT}" -d "${lv_dbname}" -T "public.tb_vacuum" -T "alvin.tb_alvindb_vacuum" 2>>"${lv_dump_log}" | "${PGBIN}"/psql -U postgres -p "${DBPORT_TARGET}" -d "${lv_dbname}" -e &>>"${lv_restore_log}"

Then dump specified tables in parallel.

time "${PGBIN}"/pg_dump -v -U "${DBUSER}" -h "${DBHOST}" -p "${DBPORT}" -d "${DBNAME}" -t "public.tb_vacuum" 2>>"${lv_dump_log}" | "${PGBIN}"/psql -U postgres -p "${DBPORT_TARGET}" -d "${DBNAME}" -e &>>"${lv_restore_log}" &

time "${PGBIN}"/pg_dump -v -U "${DBUSER}" -h "${DBHOST}" -p "${DBPORT}" -d "${DBNAME}" -t "alvin.tb_alvindb_vacuum" 2>>"${lv_dump_log}" | "${PGBIN}"/psql -U postgres -p "${DBPORT_TARGET}" -d "${DBNAME}" -e &>>"${lv_restore_log}" &
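When these pipelines are started with & they run in the background, and the exit status of a pg_dump ... | psql ... pipeline is that of psql, so a failed pg_dump can go unnoticed. The sketch below waits for every pipeline and runs each under bash with pipefail; run_parallel is a hypothetical helper, and because each pipeline runs in a child bash, any variables it uses must be exported. Adding -v ON_ERROR_STOP=1 to psql additionally makes psql exit non-zero on the first SQL error.

```shell
# Run each argument as a pipeline in the background and wait for all of them.
# Each pipeline runs under bash with pipefail, so a failing pg_dump is not
# hidden by a successful psql at the end of the pipe.
# Returns the number of failed pipelines (0 = all succeeded).
run_parallel() {
  local pids= failed=0 cmd pid
  for cmd in "$@"; do
    bash -o pipefail -c "$cmd" &
    pids="$pids $!"
  done
  for pid in $pids; do
    wait "$pid" || failed=$((failed + 1))
  done
  return "$failed"
}

# Usage:
#   export PGBIN DBUSER DBHOST DBPORT DBPORT_TARGET DBNAME
#   run_parallel \
#     '"$PGBIN"/pg_dump -U "$DBUSER" -h "$DBHOST" -p "$DBPORT" -d "$DBNAME" -t public.tb_vacuum | "$PGBIN"/psql -U postgres -p "$DBPORT_TARGET" -d "$DBNAME"' \
#     '"$PGBIN"/pg_dump -U "$DBUSER" -h "$DBHOST" -p "$DBPORT" -d "$DBNAME" -t alvin.tb_alvindb_vacuum | "$PGBIN"/psql -U postgres -p "$DBPORT_TARGET" -d "$DBNAME"'
```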

Check during data migration

Check monitoring

Check CPU, load, I/O and network traffic.

Check the dump processes

date && ps -ef|grep -E 'dump|psql'

date && ps -ef|grep 'dump'

date && ps -ef|grep 'psql'

Check the database instance size

date && psql -p 6432 -U postgres -c '\l+'

Check the data migration progress through the script log

tail -f *.log

Check whether there are errors in data migration

grep -i -E 'error:|fatal:|warning:' *.log

See which SQL is running

PostgreSQL 9.2 and later versions

psql -p 6432 -U postgres -c "SELECT * FROM pg_stat_activity WHERE application_name = 'psql' and pid <> pg_backend_pid() ORDER BY backend_start" -x

PostgreSQL 9.1

There is generally no reason to upgrade to PostgreSQL 9.1 nowadays; this variant only applies if you are migrating into a 9.1 database.

psql -p 6432 -U postgres -c "SELECT * FROM pg_stat_activity WHERE application_name = 'psql' and procpid <> pg_backend_pid() ORDER BY backend_start" -x

Check replication lag

PostgreSQL 10 and later versions

SELECT
    application_name,
    pg_size_pretty(pg_wal_lsn_diff(pg_current_wal_lsn(), replay_lsn)) AS diff
FROM pg_stat_replication;

PostgreSQL 9.2 to 9.6

There is generally no reason to upgrade to a 9.x version nowadays, so this variant is rarely needed.

SELECT
    application_name,
    pg_size_pretty(pg_xlog_location_diff(pg_current_xlog_location(), replay_location)::bigint) AS diff
FROM pg_stat_replication;
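Rather than re-running the lag query by hand, one can poll until every standby reports zero lag. The sketch below picks the query by server_version_num and checks psql -At output; lag_sql and replicas_caught_up are hypothetical helpers, and a NULL lag (for example a standby that has not replayed anything yet) is treated as not caught up.

```shell
# Replication-lag query (in bytes) for a given server_version_num.
lag_sql() {
  if [ "$1" -ge 100000 ]; then
    echo 'SELECT application_name, pg_wal_lsn_diff(pg_current_wal_lsn(), replay_lsn) FROM pg_stat_replication;'
  elif [ "$1" -ge 90200 ]; then
    echo 'SELECT application_name, pg_xlog_location_diff(pg_current_xlog_location(), replay_location) FROM pg_stat_replication;'
  else
    echo 'lag_sql: pg_xlog_location_diff() requires 9.2 or later' >&2
    return 1
  fi
}

# Read "application_name|lag_bytes" lines (psql -At output) on stdin and
# succeed only if at least one standby is listed and every lag is exactly 0.
replicas_caught_up() {
  awk -F'|' 'NF >= 2 { n++; if ($2 != "0") bad++ } END { exit !(n > 0 && bad == 0) }'
}

# Usage (on the new primary):
#   until psql -p 6432 -U postgres -Atc "$(lag_sql 110000)" | replicas_caught_up; do sleep 5; done
```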

Check whether locks are blocking the data migration

psql -p 6432 -U postgres -c "SELECT * FROM pg_locks WHERE not granted;" -x

Check whether autovacuum is running

PostgreSQL 9.2 and later versions

psql -p 6432 -U postgres -c "SELECT * FROM pg_stat_activity WHERE query ~ 'auto' AND pid <> pg_backend_pid() ORDER BY backend_start" -x

PostgreSQL 9.1

There is generally no reason to upgrade to PostgreSQL 9.1 nowadays; this variant only applies if you are migrating into a 9.1 database.

psql -p 6432 -U postgres -c "SELECT * FROM pg_stat_activity WHERE current_query ~ 'auto' AND procpid <> pg_backend_pid() ORDER BY backend_start" -x

ANALYZE

After the data migration, planner statistics for the restored tables may be missing or stale, which can hurt query performance, so run ANALYZE.

ANALYZE TABLES

If several tables are migrated in parallel, you can ANALYZE each table as soon as its migration finishes.

time psql -U postgres -d alvindb -p 6432 -c 'ANALYZE VERBOSE alvin.tb_test' && echo Done|mail -s "ANALYZE alvin.tb_test completed" "[email protected]" &

ANALYZE DATABASE

First, ANALYZE the postgres database:

time psql -U postgres -d postgres -p 6432 -c 'ANALYZE VERBOSE' && echo Done|mail -s "ANALYZE postgres completed" "[email protected]" &

After the data migration of the entire database finishes, ANALYZE the whole database:

time psql -U postgres -d alvindb -p 6432 -c 'ANALYZE VERBOSE' && echo Done|mail -s "ANALYZE alvindb completed" "[email protected]" &
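The && echo Done|mail pattern above sends nothing when ANALYZE fails, so a failure looks the same as a job that is still running. A small wrapper can report both outcomes. This is a sketch: notify, run_and_notify and the NOTIFY_TO variable are hypothetical, and mail is assumed to be configured as in the commands above. vacuumdb --all --analyze-in-stages (available since 9.4) is another option when basic statistics are needed quickly.

```shell
# Default notifier; replace it to use another channel.
# NOTIFY_TO must hold the recipient address (hypothetical variable).
notify() {
  echo "$1" | mail -s "$1" "$NOTIFY_TO"
}

# Run a command, then send a notification whose subject says whether it
# succeeded. Returns the command's exit status.
run_and_notify() {
  local label=$1 rc=0
  shift
  "$@" || rc=$?
  if [ "$rc" -eq 0 ]; then
    notify "$label completed"
  else
    notify "$label FAILED (exit $rc)"
  fi
  return "$rc"
}

# Usage:
#   run_and_notify 'ANALYZE alvindb' psql -U postgres -d alvindb -p 6432 -c 'ANALYZE VERBOSE' &
```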

Switch from the old cluster to the new one

Switch over once there is no replication lag between the primary and the standbys.

Modify the configuration file

Edit the following configuration file:

vi postgresql.conf

Restore the following parameters to their original values:

port = 5432

archive_command = 'xxx'

Also change the port in the following configuration file to the corresponding value:

recovery.conf

Check replication lag

PostgreSQL 10 and later versions

SELECT
    application_name,
    pg_size_pretty(pg_wal_lsn_diff(pg_current_wal_lsn(), replay_lsn)) AS diff
FROM pg_stat_replication;

PostgreSQL 9.2 to 9.6

There is generally no reason to upgrade to a 9.x version nowadays, so this variant is rarely needed.

SELECT
    application_name,
    pg_size_pretty(pg_xlog_location_diff(pg_current_xlog_location(), replay_location)::bigint) AS diff
FROM pg_stat_replication;

Reduce the WAL retained by the old cluster

Once there is no replication lag, do the following.

To save space and remove unnecessary WAL files from the old cluster, edit the following configuration file of the old cluster:

vi postgresql.conf

Lower the following parameter, for example:

wal_keep_segments = 1000

Reload to apply it. The surplus WAL files are removed at the latest by the CHECKPOINT run in the next step.

psql -U postgres -d postgres -p 5432 -c 'select pg_reload_conf()'
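To size this setting, multiply the number of segments by the WAL segment size, which is 16 MB unless it was changed when PostgreSQL was built or, from version 11, at initdb time. With wal_keep_segments = 1000 that is 16000 MB, roughly 15.6 GiB. A trivial helper (hypothetical name):

```shell
# Disk space in MB that wal_keep_segments can pin in pg_xlog/pg_wal.
#   $1: wal_keep_segments, $2: WAL segment size in MB (default 16)
wal_keep_mb() {
  echo $(( $1 * ${2:-16} ))
}

# Usage:
#   wal_keep_mb 1000
```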

Run CHECKPOINT

To stop the old cluster and start the new one as quickly as possible, run CHECKPOINT on both before switching, so the shutdown checkpoint has little left to write:

date && time psql -p 5432 -U postgres -c 'CHECKPOINT'

date && time psql -p 6432 -U postgres -c 'CHECKPOINT'

Stop the old instance and start the new instance

Run the following command. It stops the original instance, then restarts the new instance so that it comes up on the restored port.

/usr/pg91/bin/pg_ctl stop -D /data/pg91 -mi && /usr/pg11/bin/pg_ctl stop -D /data/pg11 -mf && /usr/pg11/bin/pg_ctl start -D /data/pg11

If only one database is migrated rather than the whole instance, the original instance does not need to be stopped; just rename the original database.

Check WAL archiving

Confirm that the archiver process is running normally and archiving is not lagging behind:

cd pg_wal

ps -ef|grep postgres|grep 'archiver'|grep -v grep && ls -lt $(ps -ef|grep postgres|grep 'archiver'|grep -v grep|awk '{print $NF}')

You can also run the following on the primary manually and check in the archive directory that the archiver process is working:

SELECT pg_switch_wal();
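The archiver's process title shows its last result, which can be checked without knowing the WAL directory. The sketch below parses ps output, relying on PostgreSQL setting the title to "archiving <segment>", "last was <segment>" or "failed on <segment>"; archiver_status is a hypothetical helper. On 9.4 and later, SELECT * FROM pg_stat_archiver gives the same information (last_archived_wal, failed_count, last_failed_wal) from SQL.

```shell
# Report the archiver state from process titles on stdin (e.g. `ps -eo args`).
# Prints "ok <segment>", "failed <segment>", "archiving <segment>" or "idle";
# prints nothing if no archiver process is found.
archiver_status() {
  awk '/^postgres:.*archiver/ {
    if ($0 ~ /failed on/)        print "failed", $NF
    else if ($0 ~ /archiving /)  print "archiving", $NF
    else if ($0 ~ /last was/)    print "ok", $NF
    else                         print "idle"
  }'
}

# Usage:
#   ps -eo args | archiver_status
```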

Upgrade other software

If other software used by the cluster depends on the database version, upgrade it accordingly, for example the software invoked by archive_command and backup-related software.

Move the primary VIP back to the primary

If the VIP was moved at the start of the upgrade, move it back to the primary machine now.

After the VIP has moved back, run the following commands on both the primary and the standby to check connections through the VIP:

netstat -tnp|grep 5432|grep 10.20.20.10

netstat -tnp|grep 5432|grep 10.20.20.10|wc -l

On the standby, you can terminate the connections that came in through the primary VIP:

View connections:

SELECT * FROM pg_stat_activity WHERE datname = 'alvindb' AND state = 'idle in transaction';

SELECT * FROM pg_stat_activity WHERE datname = 'alvindb' AND state = 'idle';

SELECT * FROM pg_stat_activity WHERE datname = 'alvindb';

Terminate connections:

SELECT pg_terminate_backend(pid) FROM pg_stat_activity WHERE datname = 'alvindb' AND state = 'idle in transaction';

SELECT pg_terminate_backend(pid) FROM pg_stat_activity WHERE datname = 'alvindb' AND state = 'idle';

SELECT pg_terminate_backend(pid) FROM pg_stat_activity WHERE datname = 'alvindb';

Check the database log

Keep watching the log with the following command to make sure no new errors appear after the upgrade.

cd log

ls -lth|head -2|grep post && tail -f $(ls -lth|head -2|grep post|awk '{print $NF}')|grep -i -E 'error:|fatal:|warning:'

Check database connections

Make sure new connections are arriving at the new cluster. If an application does not reconnect automatically, confirm with the developers whether it needs to be restarted.

Check monitoring

Compare monitoring metrics before and after the upgrade, such as QPS and WAL size per second, to make sure the business is back to normal.

When there are many applications, this method is not conclusive for those with low QPS; it is better for the business owners to check application logs or the relevant business monitoring.

Ask the business teams to verify

Have developers or testers verify that the application is running properly, and compare business metrics before and after the upgrade, such as order volume.

Resume scheduled tasks

If a scheduled task script uses an absolute path to the database software, change it to the new version's path so the task does not fail.

Once the scripts are confirmed to be correct, resume the scheduled tasks or re-run any tasks that were skipped.

If you use a configuration management tool such as Ansible, just update the relevant configuration and apply it with Ansible.

This matters especially when several scripts (VACUUM, backups, periodic data cleanup and other tasks) use an absolute path to the database software, such as /usr/pg91/bin/: configuration management avoids omissions, reduces manual work and makes the result more reliable.
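Without configuration management, the path change can at least be made mechanically. The sketch below replaces a literal path on stdin (no regular expression, so dots in paths are safe); retarget_pg_bin is a hypothetical helper, and the result should be reviewed, for example with diff, before it is installed.

```shell
# Replace every literal occurrence of path $1 with path $2 on stdin.
retarget_pg_bin() {
  [ -n "$1" ] || return 1
  awk -v from="$1" -v to="$2" '{
    out = ""
    while ((i = index($0, from)) > 0) {
      out = out substr($0, 1, i - 1) to
      $0 = substr($0, i + length(from))
    }
    print out $0
  }'
}

# Usage (as postgres):
#   crontab -l | retarget_pg_bin /usr/pg91/bin/ /usr/pg11/bin/ > crontab.new
#   diff <(crontab -l) crontab.new && crontab crontab.new
```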

Turn alerting back on

Check whether monitoring items for the temporary ports of the old or new cluster need to be cleaned up. Once everything looks fine, re-enable monitoring.

Finishing work

Announce completion

Announce by email or IM that the database upgrade is complete.

Update records

Check whether any documents or system records related to the database version need to be updated manually, and whether related tickets should be closed.

Keep following up on monitoring and logs

Investigate and resolve any anomalies promptly.

Summarize lessons learned

If you hit problems during the upgrade, record them in detail and work out how to avoid or solve them in future upgrades.

If configuration management is already in place and you discover cases it did not cover during the upgrade, improve it, or bring more of the manual steps under configuration management.

Celebrate

Life needs a sense of ceremony, and so does work. Treat your team well!

Link to the original text:

https://www.cnblogs.com/dbadaily/p/postgresql-upgrade.html

