
Web crawler exercises (I)

2022-07-04 13:00:00 【InfoQ】

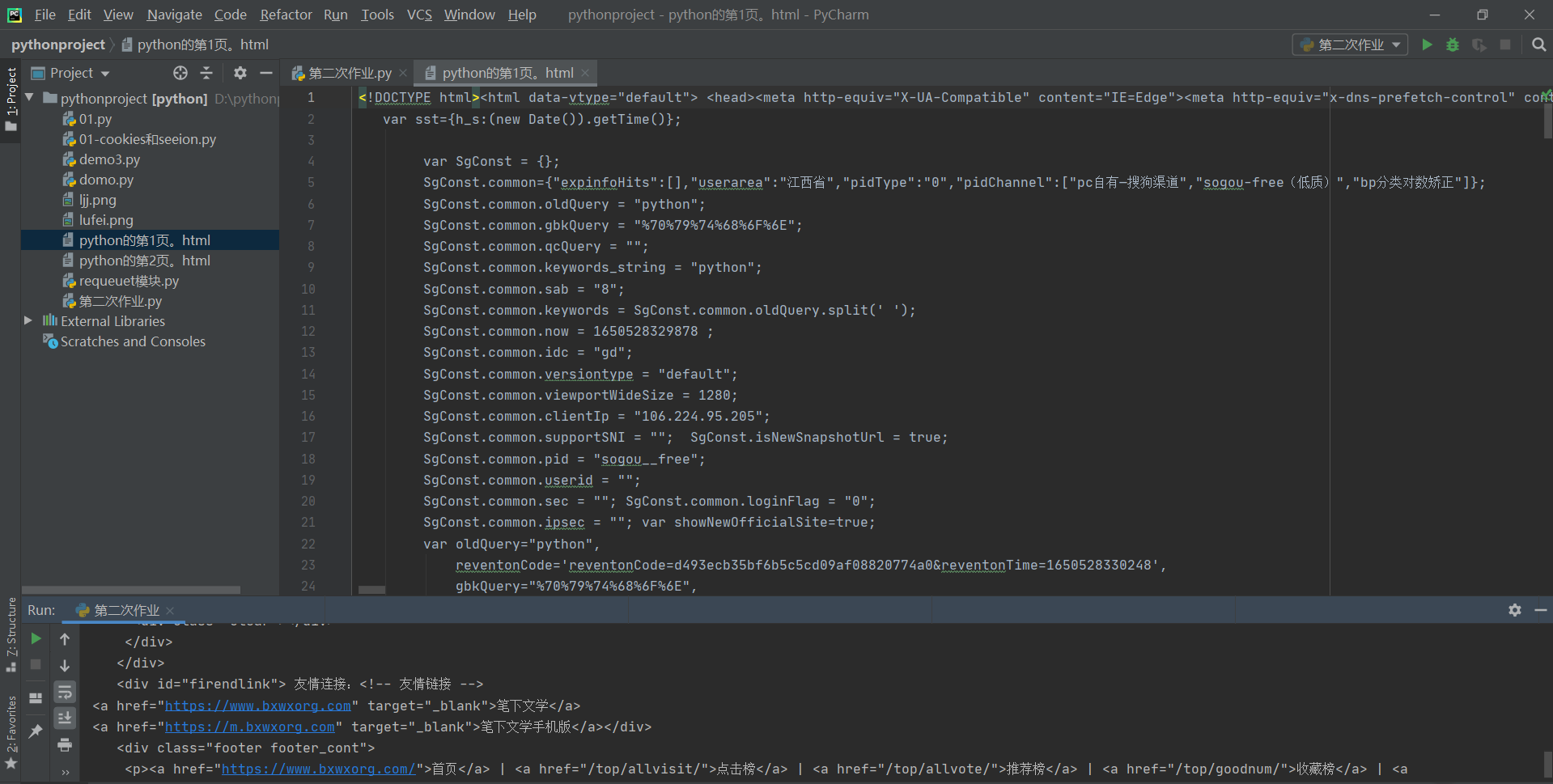

```python
import requests

word = input("Please enter the search term: ")
start = int(input("Please enter the start page: "))
end = int(input("Please enter the end page: "))

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36 Edg/100.0.1185.44'
}

# range() excludes its upper bound, so end + 1 makes the last page inclusive
for n in range(start, end + 1):
    url = f'https://www.sogou.com/web?query={word}&page={n}'
    # print(url)
    response = requests.get(url, headers=headers)
    # Save each result page as "<search term>_page_<n>.html"
    with open(f'{word}_page_{n}.html', "w", encoding="utf-8") as file:
        file.write(response.content.decode("utf-8"))
```
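The loop above names each saved file after the search term. The exercise was run on Windows, and there a term containing a character such as `/` or `?` would make `open()` fail. Below is a minimal sketch of one fix. It uses a hypothetical helper, `page_filename()`, which replaces the characters that Windows forbids in file names with `_`:

```python
import re

# Characters Windows does not allow in file names: \ / : * ? " < > |
FORBIDDEN = r'[\\/:*?"<>|]'


def page_filename(word, n):
    """Hypothetical helper: build a safe file name for result page n."""
    safe = re.sub(FORBIDDEN, "_", word).strip() or "search"
    return f"{safe}_page_{n}.html"


print(page_filename("python", 1))  # python_page_1.html
print(page_filename("C/C++", 3))   # C_C++_page_3.html
```

Inside the loop, `open(page_filename(word, n), "w", encoding="utf-8")` would take the place of the f-string file name.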

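For the search term `python`, the URL of page 2 is https://www.sogou.com/web?query=python&page=2&ie=utf8, so the URL for any term and page can be built as:

`url = f'https://www.sogou.com/web?query={word}&page={n}'`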
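Putting `word` straight into the f-string works for simple terms. A term containing `&` or `#`, however, would break the query string. The sketch below lets the standard library's `urllib.parse.urlencode` percent-encode the parameters instead. It uses UTF-8 by default, which is also why `C语言` appears as `C%E8%AF%AD%E8%A8%80` in Sogou URLs. `build_search_url()` is a hypothetical helper name:

```python
from urllib.parse import urlencode

# Base address of Sogou web search
BASE_URL = "https://www.sogou.com/web"


def build_search_url(word, page):
    """Hypothetical helper: percent-encode the search term and page number."""
    query_string = urlencode({"query": word, "page": page})
    return f"{BASE_URL}?{query_string}"


print(build_search_url("python", 2))  # https://www.sogou.com/web?query=python&page=2
print(build_search_url("C语言", 1))   # https://www.sogou.com/web?query=C%E8%AF%AD%E8%A8%80&page=1
print(build_search_url("C&C", 1))     # https://www.sogou.com/web?query=C%26C&page=1
```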

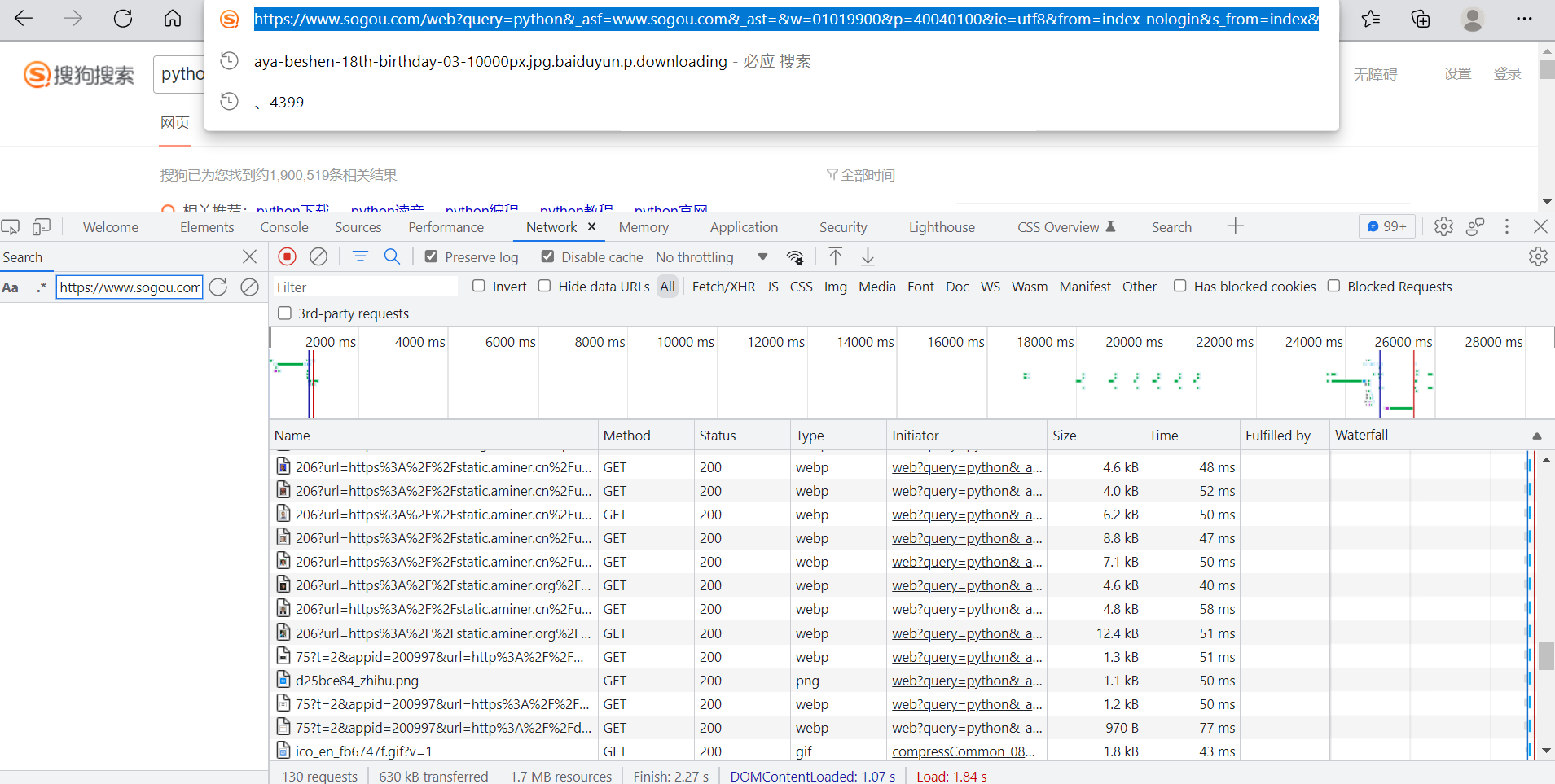

Searching for Python, java and C语言 (C language) produces URLs such as these:

https://www.sogou.com/web?query=Python&_asf=www.sogou.com&_ast=&w=01019900&p=40040100&ie=utf8&from=index-nologin&s_from=index&sut=12736&sst0=1650428312860&lkt=0%2C0%2C0&sugsuv=1650427656976942&sugtime=1650428312860

https://www.sogou.com/web?query=java&_ast=1650428313&_asf=www.sogou.com&w=01029901&p=40040100&dp=1&cid=&s_from=result_up&sut=10734&sst0=1650428363389&lkt=0%2C0%2C0&sugsuv=1650427656976942&sugtime=1650428363389

https://www.sogou.com/web?query=C%E8%AF%AD%E8%A8%80&_ast=1650428364&_asf=www.sogou.com&w=01029901&p=40040100&dp=1&cid=&s_from=result_up&sut=11662&sst0=1650428406805&lkt=0%2C0%2C0&sugsuv=1650427656976942&sugtime=1650428406805
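Comparing these URLs shows that the search term travels in the `query` parameter, percent-encoded as UTF-8. The small sketch below reads it back with the standard library's `urllib.parse`. `search_term()` is a hypothetical helper, and the URLs are shortened versions of the ones above:

```python
from urllib.parse import urlsplit, parse_qs


def search_term(url):
    """Hypothetical helper: return the decoded `query` parameter of a search URL."""
    params = parse_qs(urlsplit(url).query)
    return params["query"][0]


urls = [
    "https://www.sogou.com/web?query=Python&_asf=www.sogou.com&_ast=&w=01019900",
    "https://www.sogou.com/web?query=java&_ast=1650428313&_asf=www.sogou.com",
    "https://www.sogou.com/web?query=C%E8%AF%AD%E8%A8%80&_ast=1650428364",
]

for u in urls:
    print(search_term(u))  # Python, java, C语言 (one per line)
```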

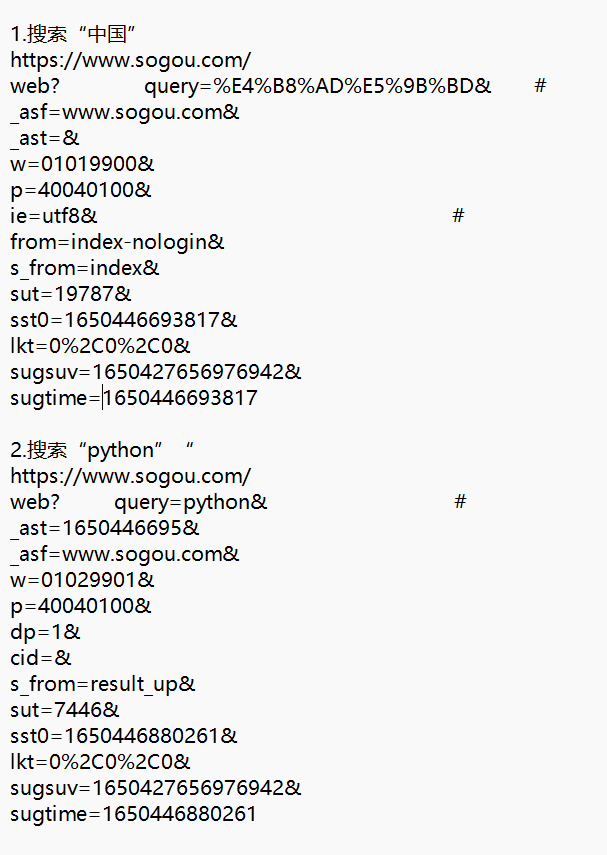

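Most of these parameters can be dropped. Building the URL up step by step from the base address shows that the essential parameters are `query` (the search term) and `page` (the page number):

https://www.sogou.com/web?

https://www.sogou.com/web?query=Python&

https://www.sogou.com/web?query=Python&page=2&ie=utf8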
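The same trimming can be done in code. The minimal sketch below assumes that only `query` and `page` need to be kept. The hypothetical helper `minimal_url()` drops every other parameter, including blank ones such as `_ast=`:

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def minimal_url(url, keep=("query", "page")):
    """Hypothetical helper: keep only the listed query parameters."""
    parts = urlsplit(url)
    # parse_qsl decodes the pairs and skips blank values by default
    pairs = [(k, v) for k, v in parse_qsl(parts.query) if k in keep]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(pairs), ""))


print(minimal_url("https://www.sogou.com/web?query=Python&page=2&ie=utf8"))
# https://www.sogou.com/web?query=Python&page=2
```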

These are the request headers and the full URL collected from the browser for the Sogou search, together with the URL template built from them:

```python
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36 Edg/100.0.1185.44',
    'cookie': "IPLOC=CN3600; SUID=191166B6364A910A00000000625F8708; SUV=1650427656976942; browerV=3; osV=1; ABTEST=0|1650428297|v17; SNUID=636A1DCD7B7EA775332A80CB7B347D43; sst0=663; ...; LSTMV=229,37; LCLKINT=1424",
}

# Full request URL as captured in the browser
full_url = "https://www.sogou.com/web?query=Python&_ast=1650429998&_asf=www.sogou.com&w=01029901&cid=&s_from=result_up&sut=5547&sst0=1650430005573&lkt=0,0,0&sugsuv=1650427656976942&sugtime=1650430005573"

# URL template: fill in the search term and page number with url.format(word, n)
url = "https://www.sogou.com/web?query={}&page={}"
```

Each dictionary entry has the form `"key": "value",`. Never forget the comma between entries.

`headers` is a keyword argument name, so it must not be misspelled. If it is, you will get errors like the ones below.
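Typing the dictionary by hand is where missing commas and quotes creep in. The sketch below assumes the headers are copied as raw `Name: value` lines, the format browser developer tools display. The hypothetical helper `headers_from_text()` then builds the dictionary for you:

```python
def headers_from_text(raw):
    """Hypothetical helper: turn copied 'Name: value' lines into a dict."""
    headers = {}
    for line in raw.strip().splitlines():
        line = line.strip()
        # Skip blank lines and HTTP/2 pseudo-headers such as ":path"
        if not line or line.startswith(":") or ":" not in line:
            continue
        # Split on the first colon only, so values may contain colons
        name, value = line.split(":", 1)
        headers[name.strip()] = value.strip()
    return headers


raw = """
:path: /web?query=Python
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64)
cookie: IPLOC=CN3600; SUV=1650427656976942
"""
print(headers_from_text(raw))
```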

import requests

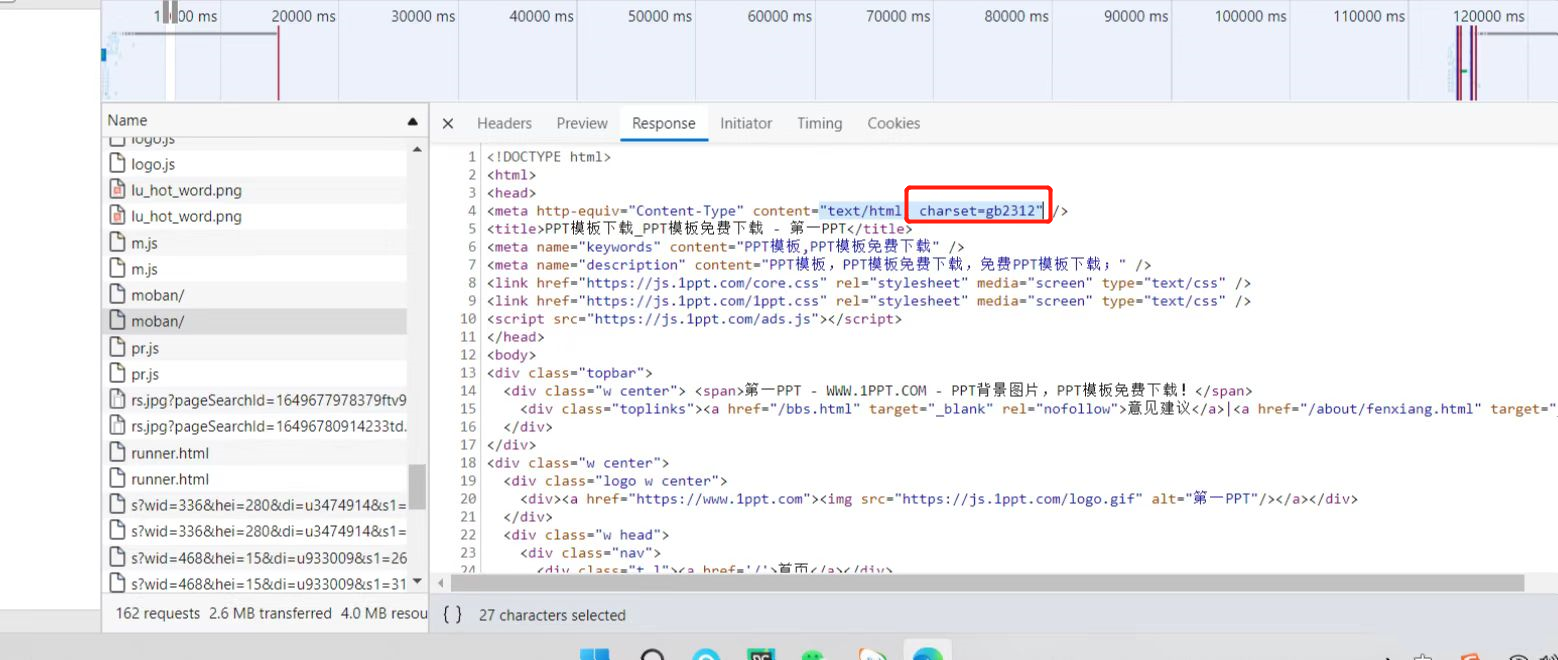

url = "https://www.bxwxorg.com/"

hearders = {

'cookie':'Hm_lvt_46329db612a10d9ae3a668a40c152e0e=1650361322; mc_user={"id":"20812","name":"20220415","avatar":"0","pass":"2a5552bf13f8fa04f5ea26d15699233e","time":1650363349}; Hm_lpvt_46329db612a10d9ae3a668a40c152e0e=1650363378',

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36 Edg/100.0.1185.44'

}

response = requests.get(url, hearders=hearders)

print(response.content.decode("UTF-8"))

```
Traceback (most recent call last):
  File "D:/pythonproject/The second assignment.py", line 141, in <module>
    response = requests.get(url, hearders=hearders)
  File "D:\python37\lib\site-packages\requests\api.py", line 75, in get
    return request('get', url, params=params, **kwargs)
  File "D:\python37\lib\site-packages\requests\api.py", line 61, in request
    return session.request(method=method, url=url, **kwargs)
TypeError: request() got an unexpected keyword argument 'hearders'
```

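Reason: all three occurrences of `hearders` are spelled the same way, so the variable itself is fine. However, `headers` is the keyword argument name that `requests.get()` passes on to `session.request()`, and `hearders` is not one of that function's parameters. The result is a `TypeError`.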
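The same mechanism can be reproduced without any network access. The sketch below uses a hypothetical function `fetch()` that, like `request()` in the traceback, accepts only named parameters. A misspelled keyword is therefore rejected before the function body even runs:

```python
def fetch(url, params=None, *, headers=None, timeout=None):
    """Hypothetical stand-in for a request function with explicit keywords."""
    return {"url": url, "headers": headers or {}}


def try_fetch(**kwargs):
    """Call fetch() and return either "ok" or the TypeError message."""
    try:
        fetch("https://www.bxwxorg.com/", **kwargs)
        return "ok"
    except TypeError as exc:
        return str(exc)


print(try_fetch(headers={"User-Agent": "demo"}))   # ok
print(try_fetch(hearders={"User-Agent": "demo"}))  # fetch() got an unexpected keyword argument 'hearders' ...
```

Newer Python versions may append a "Did you mean" hint to the message, but the core text stays the same.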

If instead the variable name is misspelled (here `heades`) while the call still uses `headers`, a different error is reported:

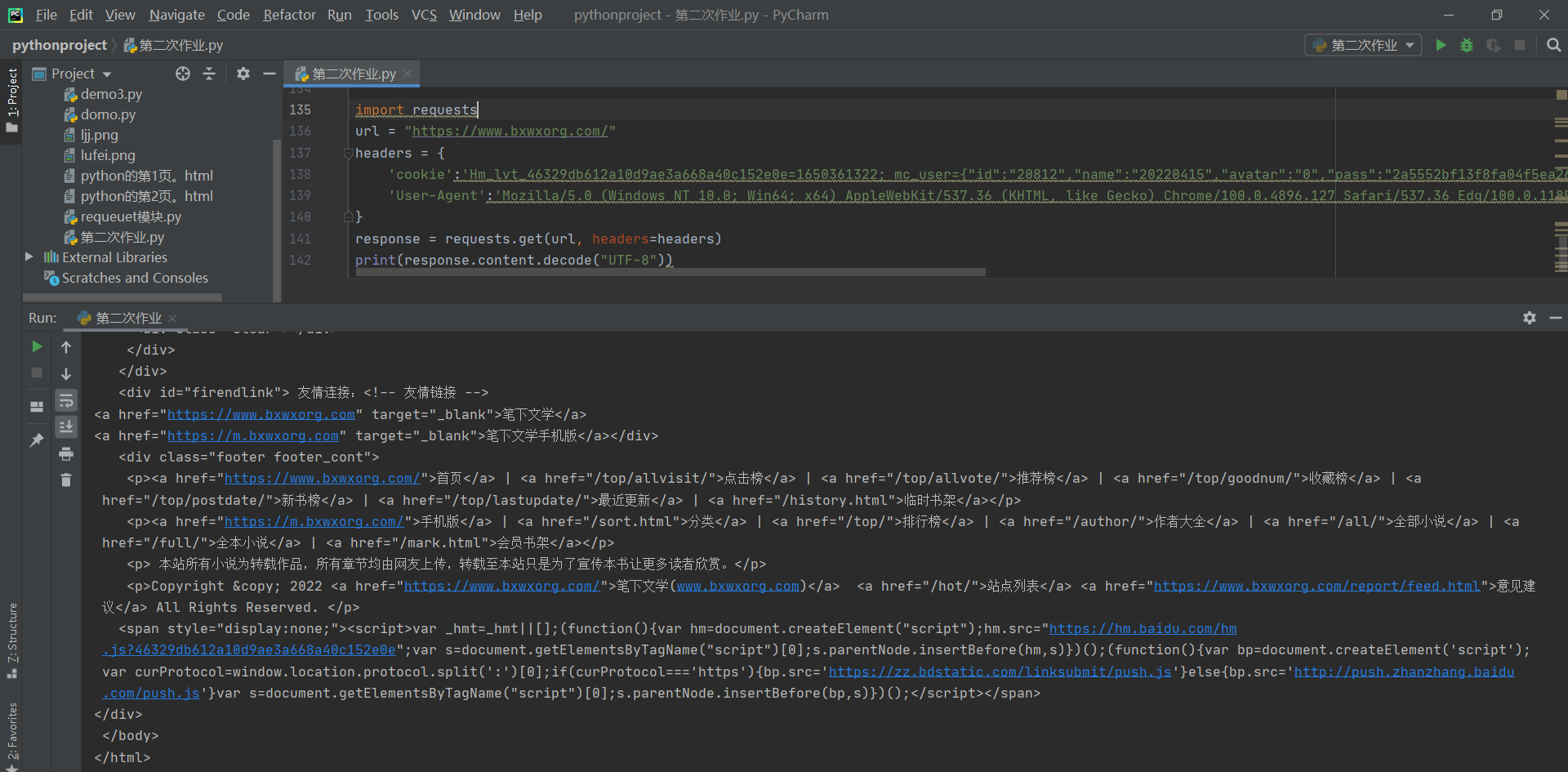

```python
import requests

word = input("Please enter the search term: ")
start = int(input("Please enter the start page: "))
end = int(input("Please enter the end page: "))

# Wrong on purpose: defined as "heades" but used as "headers" below
heades = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36 Edg/100.0.1185.44'
}

for n in range(start, end + 1):
    url = f'https://www.sogou.com/web?query={word}&page={n}'
    # print(url)
    response = requests.get(url, headers=headers)
    with open(f'{word}_page_{n}.html', "w", encoding="utf-8") as file:
        file.write(response.content.decode("utf-8"))
```

```
Traceback (most recent call last):
  File "D:/pythonproject/The second assignment.py", line 117, in <module>
    response = requests.get(url, headers=headers)
NameError: name 'headers' is not defined
```

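Reason: the three occurrences are not spelled consistently. The dictionary is defined as `heades`, but the call passes `headers=headers`, and the name `headers` was never defined. Python therefore raises a `NameError`.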

The correct version spells `headers` the same way everywhere, so make sure not to get it wrong:

```python
import requests

url = "https://www.bxwxorg.com/"

headers = {
    'cookie': 'Hm_lvt_46329db612a10d9ae3a668a40c152e0e=1650361322; mc_user={"id":"20812","name":"20220415","avatar":"0","pass":"2a5552bf13f8fa04f5ea26d15699233e","time":1650363349}; Hm_lpvt_46329db612a10d9ae3a668a40c152e0e=1650363378',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36 Edg/100.0.1185.44'
}

response = requests.get(url, headers=headers)
print(response.content.decode("UTF-8"))
```
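One way to reduce the number of places where `headers` must be spelled correctly is to set the headers once on a `requests.Session`. The sketch below assumes the same User-Agent string as above. `prepare_search()` is a hypothetical helper, and `prepare_request()` is used here only to inspect the final URL and headers without sending anything:

```python
import requests

USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/100.0.4896.127 Safari/537.36 Edg/100.0.1185.44"
)

# Headers set on the session are attached to every request it makes
session = requests.Session()
session.headers.update({"User-Agent": USER_AGENT})


def prepare_search(word, page):
    """Hypothetical helper: build (but do not send) a Sogou search request."""
    request = requests.Request(
        "GET", "https://www.sogou.com/web", params={"query": word, "page": page}
    )
    return session.prepare_request(request)


prepared = prepare_search("python", 2)
print(prepared.url)  # https://www.sogou.com/web?query=python&page=2
print(prepared.headers["User-Agent"] == USER_AGENT)  # True
```

To actually fetch a page, `session.get("https://www.sogou.com/web", params={"query": word, "page": n}, timeout=10)` sends the request with the session headers included automatically.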


边栏推荐

猜你喜欢

Introduction to the button control elevatedbutton of the fleet tutorial (the tutorial includes the source code)

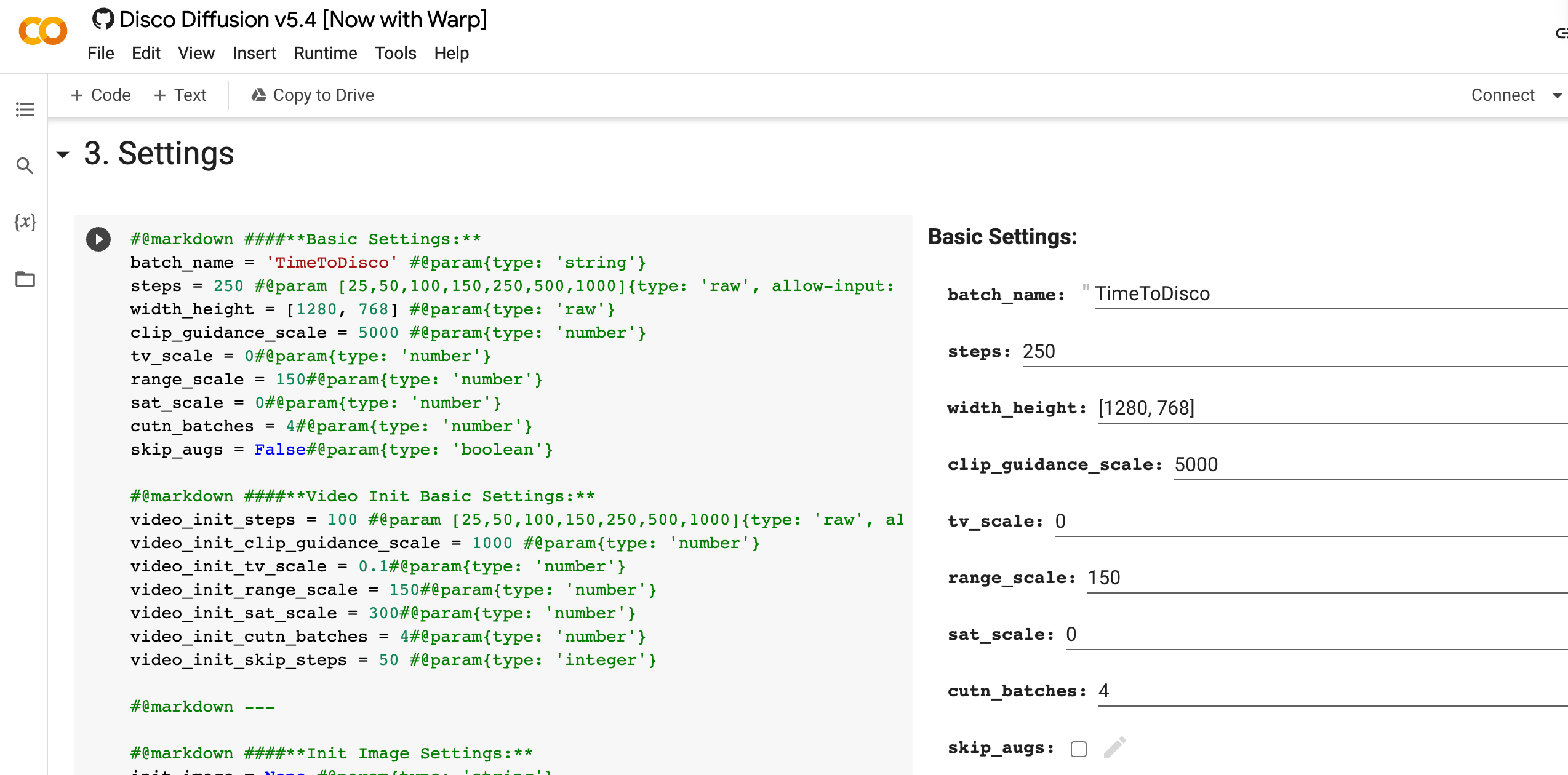

AI painting minimalist tutorial

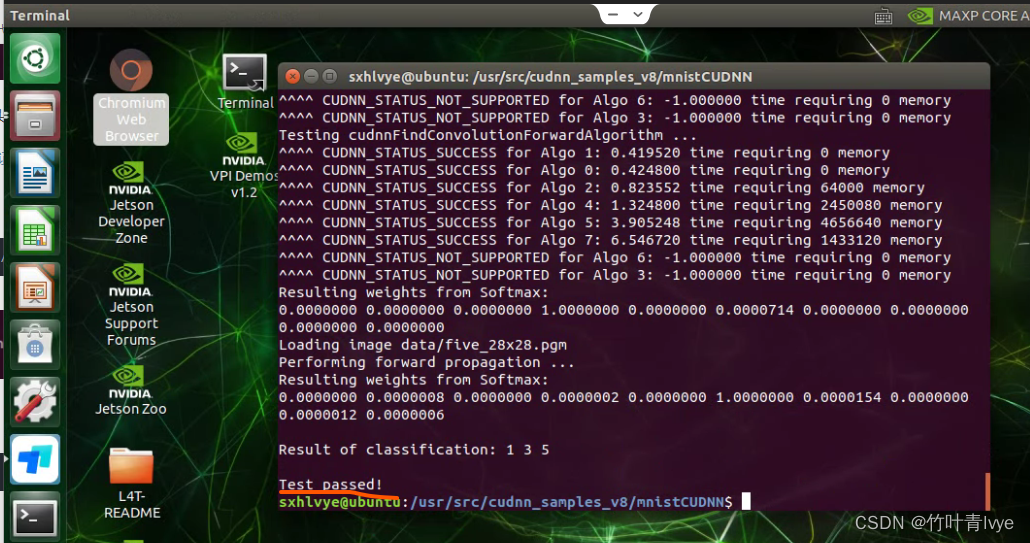

Jetson TX2配置Tensorflow、Pytorch等常用库

Transformer principle and code elaboration (pytorch)

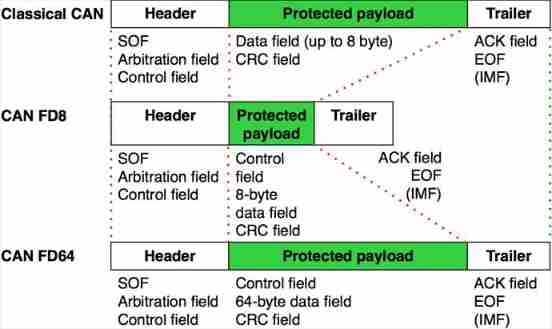

Communication tutorial | overview of the first, second and third generation can bus

PostgreSQL 9.1 soaring Road

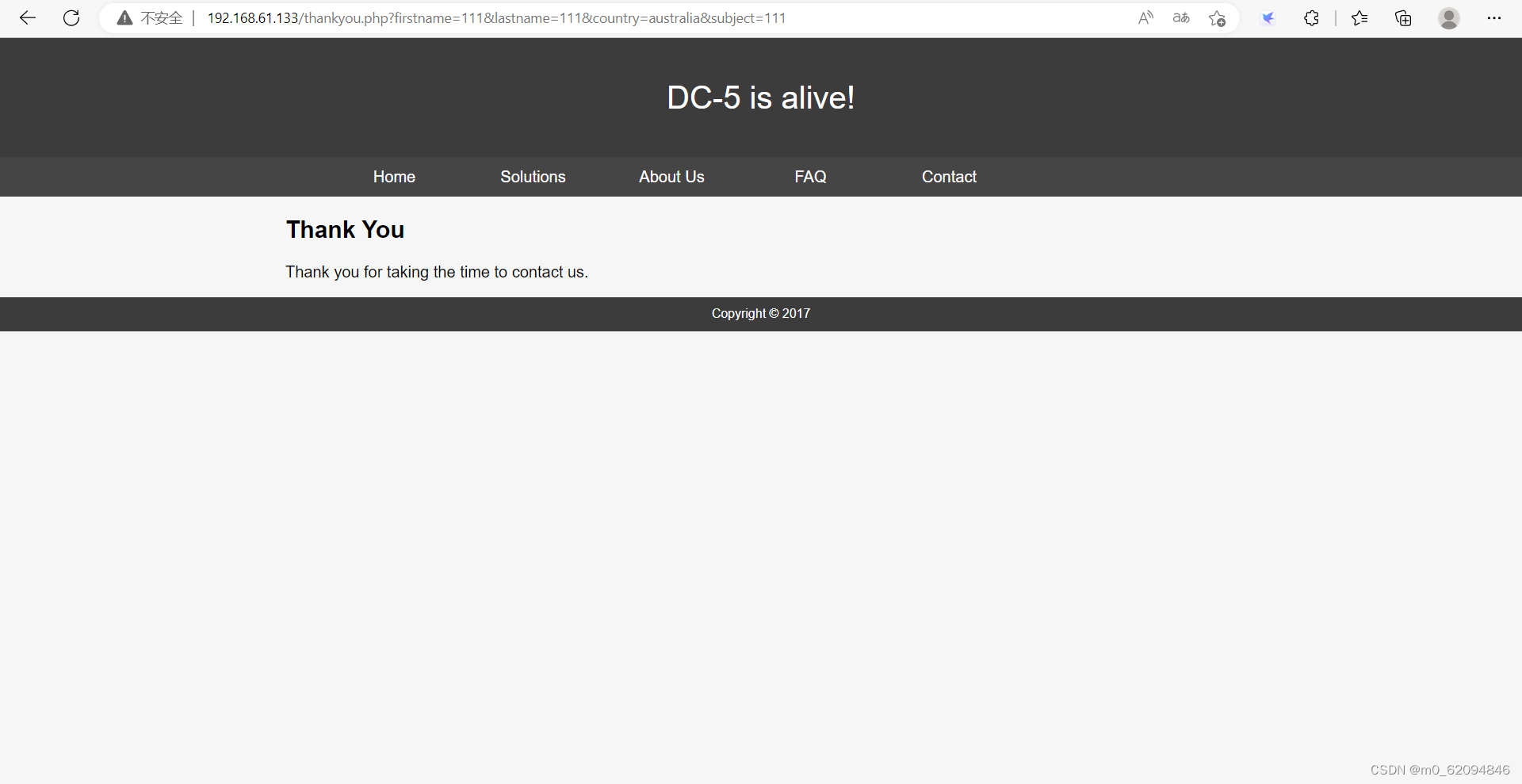

DC-5 target

高效!用虚拟用户搭建FTP工作环境

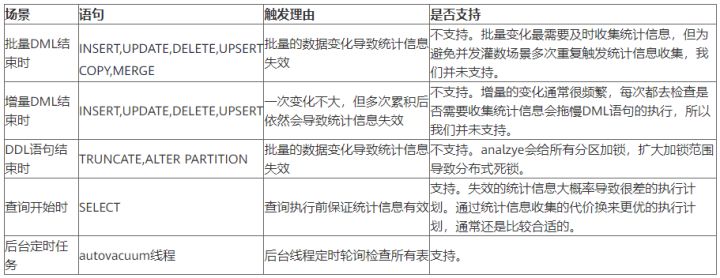

Master the use of auto analyze in data warehouse

Read the BGP agreement in 6 minutes.

随机推荐

Fundamentals of container technology

一个数据人对领域模型理解与深入

Full arrangement (medium difficulty)

Definition of cognition

Is there an elegant way to remove nulls while transforming a Collection using Guava?

[leetcode] 96 and 95 (how to calculate all legal BST)

When to use pointers in go?

6 分钟看完 BGP 协议。

Cann operator: using iterators to efficiently realize tensor data cutting and blocking processing

DGraph: 大规模动态图数据集

室外LED屏幕防水吗?

使用宝塔部署halo博客

17. Memory partition and paging

C language: find the palindrome number whose 100-999 is a multiple of 7

C fonctions linguistiques

eclipse链接数据库中测试SQL语句删除出现SQL语句语法错误

PostgreSQL 9.1 飞升之路

WPF双滑块控件以及强制捕获鼠标事件焦点

AI 绘画极简教程

Entity framework calls Max on null on records - Entity Framework calling Max on null on records