
Loss function~

2022-07-02 23:03:00 【Miss chenshen】

Concept:

A loss function measures the difference between the label (true) value and the predicted value. Machine learning offers many loss functions to choose from; typical ones are based on a distance or on an absolute difference.

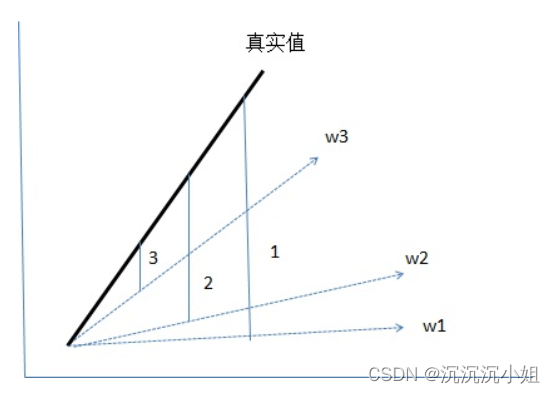

The diagram above shows how a linear equation is learned automatically. The thick line is the real linear equation, and the dotted lines show the iterative process: w1 is the weight after the first iteration, w2 the weight after the second, and w3 the weight after the third. As the number of iterations increases, the goal is for wn to get arbitrarily close to the real value.

The labels 1, 2, and 3 in the figure mark the difference between the predicted Y value and the real Y value in each of the three iterations. This difference is what the loss function measures, and in practice there are many formulas for computing it. In the figure it is drawn as an absolute difference. In multidimensional space there are other distance formulas as well, such as the squared difference and the mean squared error; each of them is a loss function.
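The iteration described above can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the data points, the true weight of 2.0, the learning rate, and the names `mse` and `fit` are all hypothetical, and the sketch uses the mean squared error rather than the absolute difference drawn in the figure because its gradient is simpler to write down.

```python
# Hypothetical data: points on the "real" line y = 2 * x (true weight 2.0).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]


def mse(w):
    """Mean squared difference between the predictions w * x and the real y."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(steps=50, lr=0.05, w=0.0):
    """Run plain gradient descent on the MSE and record the weight after each step."""
    history = [w]
    for _ in range(steps):
        # Derivative of the MSE with respect to w.
        grad = sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
        history.append(w)
    return history


weights = fit()
print(weights[1], weights[2], weights[3])  # w1, w2, w3 from the first three iterations
print(weights[-1], mse(weights[-1]))       # wn after the last iteration and its loss
```

Each step moves the weight closer to the real value, and the loss shrinks along the way.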

Common loss functions and how they are calculated:

1. nn.L1Loss

L1Loss is very simple to calculate: it takes the mean of the absolute errors between the predicted values and the real values.

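import torch

import torch.nn as nn

sample = torch.ones(4)  # assumed predictions, inferred from the calculation below

target = torch.tensor([0.0, 1.0, 2.0, 3.0])  # assumed real values, inferred the same way

criterion = nn.L1Loss()

loss = criterion(sample, target)

print(loss)

# The final output is 1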

The calculation works as follows:

- First, sum the absolute differences: |0-1| + |1-1| + |2-1| + |3-1| = 4

- Then take the average: 4/4 = 1
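The same arithmetic can be checked without PyTorch. The sketch below is a plain-Python restatement of the two steps, not the library's implementation; the function name `l1_loss` is made up for illustration, and the input values are assumed from the worked calculation (predictions of 1 against real values 0, 1, 2, 3).

```python
def l1_loss(predictions, targets):
    """Mean of the absolute differences, element by element."""
    abs_diffs = [abs(t - p) for p, t in zip(predictions, targets)]
    return sum(abs_diffs) / len(abs_diffs)


# Assumed values, read off the worked calculation.
sample = [1.0, 1.0, 1.0, 1.0]
target = [0.0, 1.0, 2.0, 3.0]

print(l1_loss(sample, target))  # 1.0
```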

2. nn.SmoothL1Loss

SmoothL1Loss is also known as Huber loss (the two coincide with the default settings). Where the error x lies in (-1, 1) it is a squared loss, 0.5·x²; everywhere else it is an L1 loss, |x| - 0.5.

criterion = nn.SmoothL1Loss()

loss = criterion(sample, target)

print(loss)

# The final output is 0.625

The calculation works as follows:

- First, sum the per-element losses (the errors are -1, 0, 1 and 2): 0.5 + 0 + 0.5 + 1.5 = 2.5

- Then take the average: 2.5/4 = 0.625
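A plain-Python sketch of the piecewise rule makes the per-element values explicit. It is an illustration rather than the library's code: `smooth_l1_loss` is a hypothetical name, the `beta` threshold is assumed to be 1.0 (the boundary of the (-1, 1) interval), and the inputs are the assumed values from the L1 example.

```python
def smooth_l1_loss(predictions, targets, beta=1.0):
    """Squared loss for small errors, L1 loss (shifted by 0.5 * beta) otherwise."""
    losses = []
    for p, t in zip(predictions, targets):
        diff = abs(p - t)
        if diff < beta:
            losses.append(0.5 * diff ** 2 / beta)  # squared branch
        else:
            losses.append(diff - 0.5 * beta)       # L1 branch
    return sum(losses) / len(losses)


# Assumed values: predictions of 1 against real values 0, 1, 2, 3.
sample = [1.0, 1.0, 1.0, 1.0]
target = [0.0, 1.0, 2.0, 3.0]

print(smooth_l1_loss(sample, target))  # 0.625
```

An error of exactly 1 falls on the L1 branch, which is why the first and third elements each contribute 0.5.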

3. nn.MSELoss

Squared-error loss function. It is the mean of the squared differences between the predicted values and the real values.

criterion = nn.MSELoss()

loss = criterion(sample, target)

print(loss)

# The final output is 1.5

The calculation works as follows:

- First, sum the squared differences: (0-1)² + (1-1)² + (2-1)² + (3-1)² = 6

- Then take the average: 6/4 = 1.5
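As with the other losses, the result can be reproduced in plain Python. The function name `mse_loss` below is chosen only for this sketch, and the inputs are again the assumed predictions of 1 against real values 0, 1, 2, 3.

```python
def mse_loss(predictions, targets):
    """Mean of the squared differences, element by element."""
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared) / len(squared)


# Assumed values: predictions of 1 against real values 0, 1, 2, 3.
sample = [1.0, 1.0, 1.0, 1.0]
target = [0.0, 1.0, 2.0, 3.0]

print(mse_loss(sample, target))  # 1.5
```

Compared with the L1 result of 1, the single error of 2 is weighted more heavily here because it is squared.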

4. nn.BCELoss

Cross-entropy for binary classification. Its formula is more involved, so it is presented here mainly as a concept; in general it is seldom used.

![loss(o,t) = - \frac{1}{N}\sum_{i=1}^{N}\left [ t_i*log(o_i) + (1-t_i)*log(1-o_i) \right ]](http://img.inotgo.com/imagesLocal/202207/02/202207022103224504_1.gif)

criterion = nn.BCELoss()

loss = criterion(sample, target)

print(loss)

# The final output is -13.8155
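The formula above can be written out directly in plain Python. This is a sketch of the formula only, not of the library's implementation: `bce_loss` is a hypothetical name, and the probabilities and 0/1 labels are invented for illustration rather than taken from the example.

```python
import math


def bce_loss(outputs, targets):
    """Mean binary cross-entropy; outputs must lie strictly between 0 and 1."""
    terms = [
        t * math.log(o) + (1.0 - t) * math.log(1.0 - o)
        for o, t in zip(outputs, targets)
    ]
    return -sum(terms) / len(terms)


# Hypothetical predicted probabilities and 0/1 labels.
outputs = [0.9, 0.2, 0.8, 0.1]
targets = [1.0, 0.0, 1.0, 0.0]

loss = bce_loss(outputs, targets)
print(loss)
```

When the outputs lie in (0, 1) and the targets in [0, 1], every term inside the sum is non-positive, so the loss cannot be negative. A negative result such as -13.8155 would therefore suggest that the targets passed in fall outside that range.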

猜你喜欢

![P7072 [CSP-J2020] 直播获奖](/img/bc/fcbc2b1b9595a3bd31d8577aba9b8b.png)

P7072 [CSP-J2020] 直播获奖

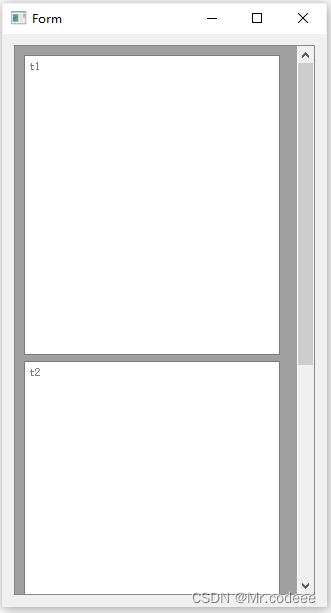

Qt QScrollArea

Xiaopeng P7 had an accident and the airbag did not pop up. Is this normal?

![[favorite poems] OK, song](/img/1a/e4a4dcca494e4c7bb0e3568f708288.png)

[favorite poems] OK, song

Lambda expression: an article takes you through

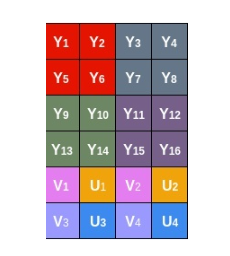

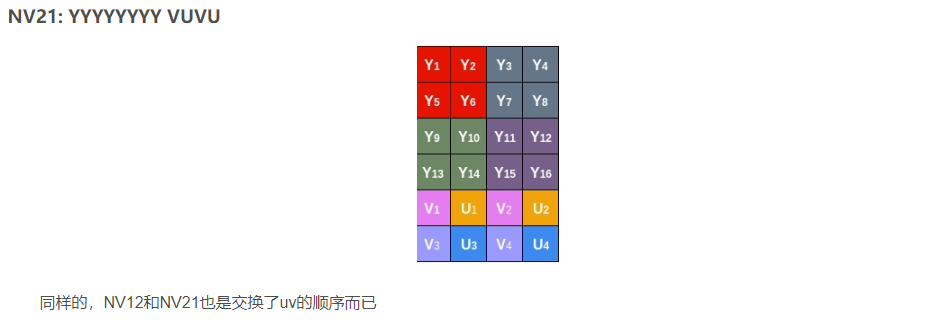

Construction of Hisilicon 3559 universal platform: rotation operation on the captured YUV image

![[leetcode] most elements [169]](/img/72/d3e46a820796a48b458cd2d0a18f8f.png)

[leetcode] most elements [169]

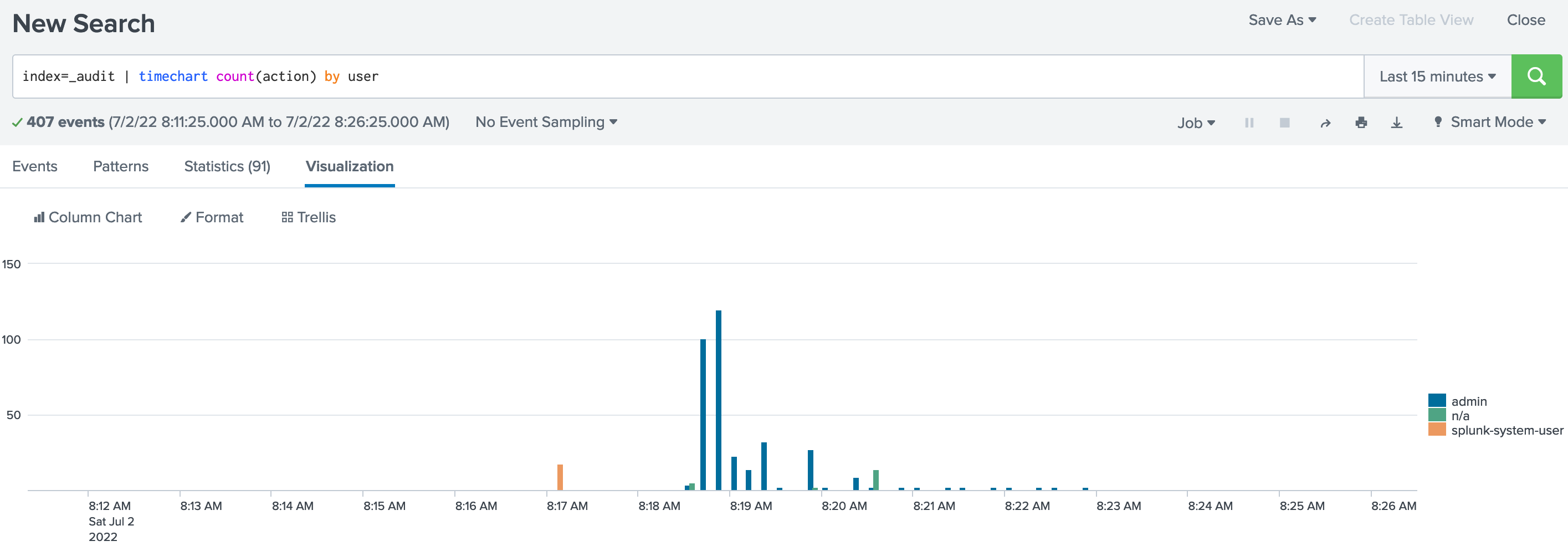

Splunk audit setting

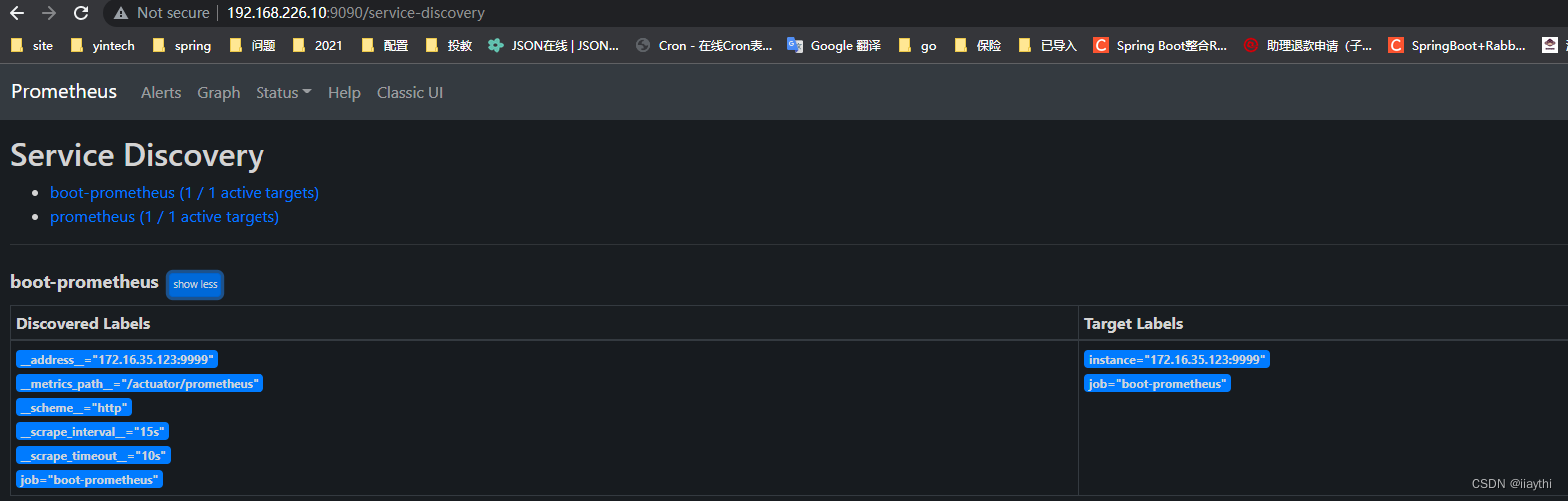

boot actuator - prometheus使用

Construction of Hisilicon 3559 universal platform: draw a frame on the captured YUV image

随机推荐

MySQL reset password, forget password, reset root password, reset MySQL password

Gas station [problem analysis - > problem conversion - > greed]

AES高级加密协议的动机阐述

The motivation of AES Advanced Encryption Protocol

地方经销商玩转社区团购模式,百万运营分享

Share 10 JS closure interview questions (diagrams), come in and see how many you can answer correctly

[leetcode] there are duplicate elements [217]

Qt QScrollArea

Mask R-CNN

ServletContext learning diary 1

Go语言sqlx库操作SQLite3数据库增删改查

Qt QProgressBar详解

Value sequence < detailed explanation of daily question >

[chestnut sugar GIS] how does global mapper batch produce ground contour lines through DSM

QT qpprogressbar details

Antd component upload uploads xlsx files and reads the contents of the files

位的高阶运算

Odoo13 build a hospital HRP environment (detailed steps)

Jerry's prototype has no touch, and the reinstallation becomes normal after dismantling [chapter]

Jerry's charge unplugged, unable to touch the boot [chapter]