Learning curve learning_curve function in sklearn

2022-08-04 06:04:00 【I'm fine please go away thank you】

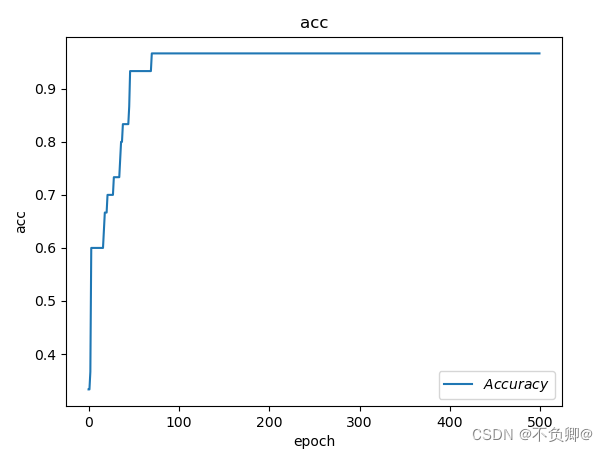

1. Operating principle

Learning curve.

Determines cross-validated training and test scores for different training set sizes.

A cross-validation generator splits the whole dataset k times into training and test data. Subsets of the training set with varying sizes are used to train the estimator, and a score is computed for each training subset size and for the test set. Afterwards, for each training subset size, the scores of all k runs are averaged. The function itself returns the per-fold scores, so the averaging is usually done by the caller.
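The procedure described above can be sketched as follows. This is a minimal illustration rather than a reference implementation: the iris dataset, LogisticRegression, and the 5-fold setting are assumptions chosen for the example and do not come from the original article.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import learning_curve

X, y = load_iris(return_X_y=True)

# 5-fold CV, five training-set sizes from 10% to 100% of each training fold.
# shuffle=True because the iris labels are sorted by class.
train_sizes_abs, train_scores, test_scores = learning_curve(
    LogisticRegression(max_iter=1000),
    X,
    y,
    train_sizes=np.linspace(0.1, 1.0, 5),
    cv=5,
    shuffle=True,
    random_state=0,
)

# Each row holds the scores for one training-set size, each column one CV fold,
# so averaging over axis 1 gives one point of the learning curve per size.
mean_train = train_scores.mean(axis=1)
mean_test = test_scores.mean(axis=1)
for n, tr, te in zip(train_sizes_abs, mean_train, mean_test):
    print(f"n_train={n:3d}  train={tr:.3f}  test={te:.3f}")
```

Plotting mean_train and mean_test against train_sizes_abs gives the usual learning-curve figure.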

2. Function format

sklearn.model_selection.learning_curve(estimator, X, y, groups=None, train_sizes=array([0.1, 0.33, 0.55, 0.78, 1. ]), cv='warn', scoring=None, exploit_incremental_learning=False, n_jobs=None, pre_dispatch='all', verbose=0, shuffle=False, random_state=None, error_score='raise-deprecating')

2.1 Parameters

estimator: object type that implements the "fit" and "predict" methods

An object of that type, which is cloned for each validation.

X: array-like, shape (n_samples, n_features)

Training vector, where n_samples is the number of samples and n_features is the number of features.

y: array-like, shape (n_samples,) or (n_samples, n_features), optional

Target relative to X for classification or regression; None for unsupervised learning.

groups: array-like, shape (n_samples,), optional

Group labels for the samples, used while splitting the dataset into train/test sets. Only used in conjunction with a "Group" cv instance (e.g. GroupKFold).
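As a rough illustration of how groups is paired with a group-aware splitter, the sketch below uses synthetic data with six made-up groups of ten samples each. The data, the Ridge estimator, and the group layout are all assumptions for the example.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import GroupKFold, learning_curve

rng = np.random.RandomState(0)
X = rng.normal(size=(60, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.1, size=60)

# Hypothetical grouping: 6 groups of 10 consecutive samples each.
groups = np.repeat(np.arange(6), 10)

# GroupKFold keeps every group entirely in either the train or the test part.
sizes, train_scores, test_scores = learning_curve(
    Ridge(),
    X,
    y,
    groups=groups,
    cv=GroupKFold(n_splits=3),
    train_sizes=[0.5, 1.0],
)
print(sizes)
print(test_scores.mean(axis=1))
```

With three folds over six equal groups, each training fold holds four groups (40 samples), so the fractions 0.5 and 1.0 correspond to 20 and 40 training samples.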

train_sizes: array-like, shape (n_ticks,), dtype float or int

Relative or absolute numbers of training examples that will be used to generate the learning curve. If the dtype is float, each value is treated as a fraction of the maximum size of the training set (which is determined by the selected validation method), i.e. it has to be within (0, 1]. Otherwise it is interpreted as an absolute size of the training set. Note that for classification, the number of samples usually has to be big enough to contain at least one sample from each class. (default: np.linspace(0.1, 1.0, 5))
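The difference between float and int entries can be checked with a small sketch. The synthetic regression data and the plain KFold splitter are assumptions made for this example; with 100 samples and 5 folds, each training fold has 80 samples.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, learning_curve

X, y = make_regression(n_samples=100, n_features=4, noise=1.0, random_state=0)
cv = KFold(n_splits=5)  # each training fold contains 80 samples

# Floats are fractions of the maximum training-set size (80 here).
frac_sizes, _, _ = learning_curve(
    LinearRegression(), X, y, cv=cv, train_sizes=[0.25, 0.5, 1.0]
)

# Ints are taken as absolute numbers of training samples.
abs_sizes, _, _ = learning_curve(
    LinearRegression(), X, y, cv=cv, train_sizes=[20, 40, 80]
)

print(frac_sizes)
print(abs_sizes)
```

Both calls end up training on the same three subset sizes, which is why the returned train_sizes_abs arrays match.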

cv: int, cross-validation generator or iterable, optional

Determines the cross-validation splitting strategy. Possible inputs for cv are:

- None, to use the default 3-fold cross-validation (the default changes to 5-fold as of v0.22),

- an integer, to specify the number of folds in a (Stratified)KFold,

- a CV splitter,

- an iterable yielding (train, test) splits as arrays of indices.

For integer/None inputs, if the estimator is a classifier and y is either binary or multiclass, StratifiedKFold is used. In all other cases, KFold is used.
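The three non-default kinds of cv input listed above might be used as in the following sketch. The dataset, the estimator, and the hand-built index splits are assumptions for illustration only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import ShuffleSplit, learning_curve

X, y = make_classification(n_samples=200, n_features=8, random_state=0)
est = LogisticRegression(max_iter=1000)
sizes = [0.5, 1.0]

# 1) Integer: the estimator is a classifier, so StratifiedKFold with 4 folds is used.
_, _, scores_int = learning_curve(
    est, X, y, cv=4, train_sizes=sizes, shuffle=True, random_state=0
)

# 2) CV splitter object.
splitter = ShuffleSplit(n_splits=3, test_size=0.25, random_state=0)
_, _, scores_splitter = learning_curve(est, X, y, cv=splitter, train_sizes=sizes)

# 3) Iterable of (train, test) index arrays, built by hand here.
idx = np.random.RandomState(0).permutation(len(X))
custom_splits = [(idx[:150], idx[150:]), (idx[50:], idx[:50])]
_, _, scores_iterable = learning_curve(est, X, y, cv=custom_splits, train_sizes=sizes)

# The second dimension equals the number of splits in each case.
print(scores_int.shape, scores_splitter.shape, scores_iterable.shape)
```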

scoring: string, callable or None, optional, default: None

A string (see the model evaluation documentation) or a scorer callable object/function with signature scorer(estimator, X, y).
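To make the two forms of scoring concrete, here is a small sketch that passes the same metric once by name and once as a callable. The helper neg_mae is a hypothetical function written for this example, and the data and estimator are likewise assumptions.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import learning_curve

X, y = make_regression(n_samples=120, n_features=5, noise=5.0, random_state=0)


def neg_mae(estimator, X, y):
    """Hypothetical scorer with the signature scorer(estimator, X, y)."""
    # Negated so that higher is better, matching sklearn's scorer convention.
    return -mean_absolute_error(y, estimator.predict(X))


_, _, by_name = learning_curve(
    Ridge(), X, y, cv=4, train_sizes=[0.5, 1.0], scoring="neg_mean_absolute_error"
)
_, _, by_callable = learning_curve(
    Ridge(), X, y, cv=4, train_sizes=[0.5, 1.0], scoring=neg_mae
)

print(np.allclose(by_name, by_callable))
```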

exploit_incremental_learning: boolean, optional, default: False

If the estimator supports incremental learning, this parameter will be used to speed up fitting different training set sizes.
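A short sketch of the incremental mode, assuming an estimator that provides partial_fit (GaussianNB is used here purely as an example; the dataset is synthetic):

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import learning_curve
from sklearn.naive_bayes import GaussianNB

X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# GaussianNB implements partial_fit, so each larger training subset can be
# reached by feeding only the additional samples instead of refitting from scratch.
sizes, train_scores, test_scores = learning_curve(
    GaussianNB(),
    X,
    y,
    cv=5,
    train_sizes=[0.2, 0.6, 1.0],
    exploit_incremental_learning=True,
)
print(sizes)
print(test_scores.mean(axis=1))
```

Setting the flag on an estimator without partial_fit is expected to raise an error, so it is worth checking for the method first.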

n_jobs: int or None, optional (default=None)

Number of jobs to run in parallel. None means 1. -1 means using all processors. See the Glossary for more details.

pre_dispatch: integer or string, optional

Number of predispatched jobs for parallel execution (default is all). This option can reduce the allocated memory. The string can be an expression like "2*n_jobs".

verbose: integer, optional

Controls the verbosity: the higher, the more messages.

shuffle: boolean, optional

Whether to shuffle the training data before taking prefixes of it based on train_sizes.

random_state: int, RandomState instance or None, optional (default=None)

If int, random_state is the seed used by the random number generator. If a RandomState instance, random_state is the random number generator. If None, the random number generator is the RandomState instance used by np.random. Used when shuffle is True.
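The interplay of shuffle and random_state can be illustrated with a small reproducibility check. The helper run and the synthetic data are assumptions introduced for this sketch.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import learning_curve

X, y = make_regression(n_samples=100, n_features=4, noise=2.0, random_state=0)


def run(seed):
    """Hypothetical helper: test scores of one shuffled learning-curve run."""
    _, _, test_scores = learning_curve(
        LinearRegression(),
        X,
        y,
        cv=5,
        train_sizes=[0.3, 0.6, 1.0],
        shuffle=True,
        random_state=seed,
    )
    return test_scores


# Fixing the seed fixes which samples land in each training prefix,
# so two runs with the same seed give identical scores.
same_seed_matches = np.array_equal(run(0), run(0))
print(same_seed_matches)
```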

error_score: 'raise' | 'raise-deprecating' or numeric

Value to assign to the score if an error occurs in estimator fitting. If set to 'raise', the error is raised. If set to 'raise-deprecating', a FutureWarning is printed before the error is raised. If a numeric value is given, a FitFailedWarning is raised. This parameter does not affect the refit step, which will always raise the error. The default is 'raise-deprecating', but from version 0.22 it will change to np.nan.
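The effect of a numeric error_score can be sketched with an estimator that fails on purpose. PickyDummy below is a hypothetical class invented for this example (a DummyClassifier that refuses small training sets); it is not part of scikit-learn.

```python
import warnings

import numpy as np
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.model_selection import learning_curve


class PickyDummy(DummyClassifier):
    """Hypothetical estimator that refuses to fit on fewer than 30 samples."""

    def fit(self, X, y, sample_weight=None):
        if len(y) < 30:
            raise ValueError("need at least 30 training samples")
        return super().fit(X, y, sample_weight=sample_weight)


X, y = make_classification(n_samples=100, n_features=5, random_state=0)

with warnings.catch_warnings():
    warnings.simplefilter("ignore")  # hide the fit-failure warnings in this demo
    sizes, train_scores, test_scores = learning_curve(
        PickyDummy(strategy="most_frequent"),
        X,
        y,
        cv=5,
        train_sizes=[10, 80],  # fitting on 10 samples fails, on 80 it succeeds
        error_score=np.nan,
    )

# The failed fits are recorded as NaN instead of aborting the whole run.
print(test_scores)
```

With error_score='raise' the same call would stop at the first failing fit.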

2.2 Return values

train_sizes_abs: array, shape (n_unique_ticks,), dtype int

Numbers of training examples that have been used to generate the learning curve. Note that the number of ticks might be less than n_ticks because duplicate entries are removed.

train_scores: array, shape (n_ticks, n_cv_folds)

Scores on the training sets.

test_scores: array, shape (n_ticks, n_cv_folds)

Scores on the test sets.
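The note about duplicate ticks can be seen in a small sketch. The data and the deliberately redundant train_sizes are assumptions for illustration: with 80 samples per training fold, both 0.5 and 0.501 translate to 40 samples.

```python
import warnings

from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, learning_curve

X, y = make_regression(n_samples=100, n_features=4, noise=1.0, random_state=0)

with warnings.catch_warnings():
    warnings.simplefilter("ignore")  # sklearn warns about the removed duplicate
    sizes, train_scores, test_scores = learning_curve(
        LinearRegression(),
        X,
        y,
        cv=KFold(n_splits=5),
        train_sizes=[0.5, 0.501, 1.0],  # 0.5 and 0.501 both map to 40 samples
    )

print(sizes)
print(train_scores.shape, test_scores.shape)
```

Three ticks were requested, but only two distinct sizes remain, and the score arrays have one row per remaining size and one column per fold.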

Original link: https://blog.csdn.net/gracejpw/article/details/102370364

边栏推荐

猜你喜欢

随机推荐

自动化运维工具Ansible(6)Jinja2模板

组原模拟题

MySQL最左前缀原则【我看懂了hh】

记一次flink程序优化

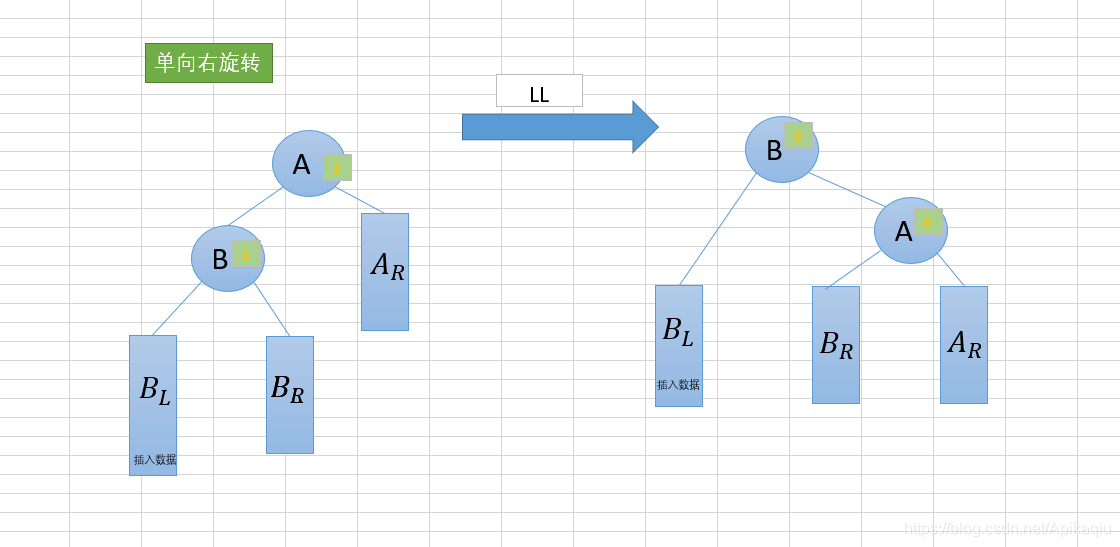

(十五)B-Tree树(B-树)与B+树

(十)树的基础部分(二)

(九)哈希表

【go语言入门笔记】13、 结构体(struct)

pgsql函数中的return类型

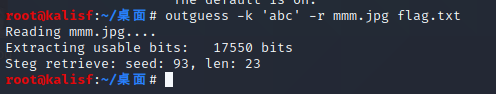

CTFshow—Web入门—信息(9-20)

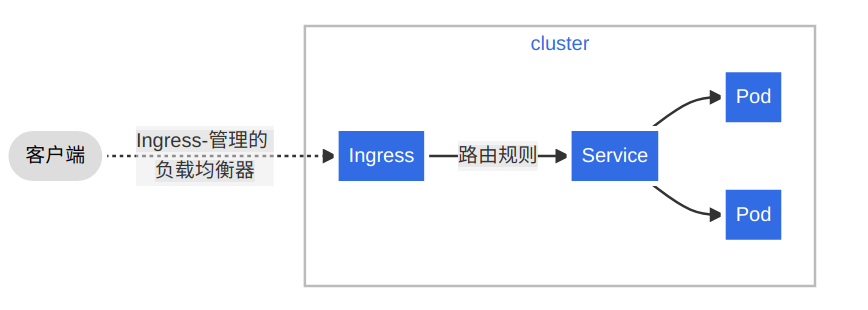

Kubernetes基础入门(完整版)

iptables防火墙

NFT市场可二开开源系统

关系型数据库-MySQL:多实例配置

k9s-终端UI工具

flink自定义轮询分区产生的问题

剑指 Offer 2022/7/1

智能合约安全——delegatecall (1)

智能合约安全——私有数据访问

TensorFlow:tf.ConfigProto()与Session