
[Untitled]

2022-07-05 19:26:00 【富士康质检员张全蛋】

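Single node

Here we use node-exporter as the example. We first collect its metrics with Prometheus, then have Prometheus remote-write them to VictoriaMetrics (VM) as remote storage. Finally, because VM can scrape targets itself (it also provides the vmagent component), we replace Prometheus with VM entirely, which simplifies the architecture and lowers resource usage.

All resources here run in the kube-vm namespace:

$ kubectl create ns kube-vm

First, run node-exporter in the kube-vm namespace with a DaemonSet controller. The manifest is shown below: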

# vm-node-exporter.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-vm
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: node-exporter
          image: prom/node-exporter:v1.3.1
          args:
            - --web.listen-address=$(HOSTIP):9111
            - --path.procfs=/host/proc
            - --path.sysfs=/host/sys
            - --path.rootfs=/host/root
            - --no-collector.hwmon # disable collectors we do not need
            - --no-collector.nfs
            - --no-collector.nfsd
            - --no-collector.nvme
            - --no-collector.dmi
            - --no-collector.arp
            - --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/containerd/.+|var/lib/docker/.+|var/lib/kubelet/pods/.+)($|/)
            - --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$
          ports:
            - containerPort: 9111
          env:
            - name: HOSTIP
              valueFrom:
                fieldRef:
                  fieldPath: status.hostIP
          resources:
            requests:
              cpu: 150m
              memory: 180Mi
            limits:
              cpu: 150m
              memory: 180Mi
          securityContext:
            runAsNonRoot: true
            runAsUser: 65534
          volumeMounts:
            - name: proc
              mountPath: /host/proc
            - name: sys
              mountPath: /host/sys
            - name: root
              mountPath: /host/root
              mountPropagation: HostToContainer
              readOnly: true
      tolerations: # tolerate all taints so the exporter runs on every node
        - operator: "Exists"
      volumes:
        - name: proc
          hostPath:
            path: /proc
        - name: dev
          hostPath:
            path: /dev
        - name: sys
          hostPath:
            path: /sys
        - name: root
          hostPath:
            path: /

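Because we already created a node-exporter in an earlier chapter, we pass --web.listen-address=$(HOSTIP):9111 so that this instance listens on port 9111 and avoids a port conflict. Apply the manifest above directly.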

$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-node-exporter.yaml
$ kubectl get pods -n kube-vm -owide
NAME                  READY   STATUS    RESTARTS   AGE    IP              NODE      NOMINATED NODE   READINESS GATES
node-exporter-c4d76   1/1     Running   0          118s   192.168.0.109   node2     <none>           <none>
node-exporter-hzt8s   1/1     Running   0          118s   192.168.0.111   master1   <none>           <none>
node-exporter-zlxwb   1/1     Running   0          118s   192.168.0.110   node1     <none>           <none>
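To confirm that each exporter is actually serving metrics on port 9111, one option is to fetch /metrics and read a sample. The sketch below is only an illustration and not part of the original setup: the helper names `parse_metric` and `fetch_metrics` are hypothetical, and the parser assumes simple exposition lines of the form `name{labels} value` with no trailing timestamps.

```python
import urllib.request


def parse_metric(text, name):
    """Return the float values of every sample of `name` in Prometheus text format.

    Simplified on purpose: assumes no trailing timestamps and skips
    comment lines such as "# HELP" and "# TYPE".
    """
    values = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split at the last space: left side is the series, right side the value.
        series, _, value = line.rpartition(" ")
        metric_name = series.split("{", 1)[0]
        if metric_name == name:
            values.append(float(value))
    return values


def fetch_metrics(host, port=9111, timeout=5):
    """Fetch the raw /metrics payload from one node-exporter instance."""
    url = f"http://{host}:{port}/metrics"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# Example (needs network access to a node, so it is left commented out):
# body = fetch_metrics("192.168.0.109")
# print(parse_metric(body, "node_load1"))
```

Run from a machine that can reach the node IPs, the commented call would print the 1-minute load average reported by that node.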

Next, deploy a separate, standalone Prometheus. To keep things simple, we scrape the node-exporter metrics with static_configs. The configuration is shown below:

# vm-prom-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-vm
data:
  prometheus.yaml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
      - job_name: "nodes"
        static_configs:
          - targets: ['192.168.0.109:9111', '192.168.0.110:9111', '192.168.0.111:9111']
        relabel_configs: # extract the IP from __address__ via relabeling; used later to verify that VM supports relabeling
          - source_labels: [__address__]
            regex: "(.*):(.*)"
            replacement: "${1}"
            target_label: 'ip'
            action: replace

The configuration above uses a relabel rule to extract the IP from __address__ into an ip label. We use it later to verify that VM is compatible with relabeling.
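To make the effect of that relabel rule concrete, here is a minimal Python sketch that emulates a `replace` action on a label set. It is an approximation for illustration, not Prometheus code: `relabel_replace` is a hypothetical helper, it only covers the `replace` action, and it relies on Python's `re` module behaving like Prometheus' RE2 engine for this simple pattern.

```python
import re


def relabel_replace(labels, source_labels, regex, replacement, target_label, separator=";"):
    """Emulate a Prometheus `replace` relabel rule on a dict of labels.

    Prometheus anchors the regex at both ends, which re.fullmatch mirrors.
    """
    source = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, source)
    if match is None:
        return dict(labels)  # rule does not apply; labels stay unchanged
    # Translate Prometheus-style ${1} references into Python's \g<1> form.
    template = re.sub(r"\$\{(\w+)\}", r"\\g<\1>", replacement)
    result = dict(labels)
    result[target_label] = match.expand(template)
    return result


target = {"__address__": "192.168.0.109:9111", "job": "nodes"}
relabeled = relabel_replace(target, ["__address__"], "(.*):(.*)", "${1}", "ip")
print(relabeled["ip"])  # 192.168.0.109
```

The first capture group takes everything before the colon, so the port is dropped and only the node IP ends up in the `ip` label.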

Prometheus data also needs to be persisted, so we create a PV and a matching PVC as well:

# apiVersion: storage.k8s.io/v1
# kind: StorageClass
# metadata:
#   name: local-storage
# provisioner: kubernetes.io/no-provisioner
# volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-data
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 20Gi
  storageClassName: local-storage
  local:
    path: /data/k8s/prometheus
  persistentVolumeReclaimPolicy: Retain
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node2
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus-data
  namespace: kube-vm
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
  storageClassName: local-storage

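Then create Prometheus itself: mount the PVC and ConfigMap above into the container, point --config.file at the configuration file, and set the TSDB data path and related options. The manifest is shown below: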

# vm-prom-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: kube-vm
spec:
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: prometheus-data
        - name: config-volume
          configMap:
            name: prometheus-config
      containers:
        - image: prom/prometheus:v2.35.0
          name: prometheus
          args:
            - "--config.file=/etc/prometheus/prometheus.yaml"
            - "--storage.tsdb.path=/prometheus" # TSDB data path
            - "--storage.tsdb.retention.time=2d"
            - "--web.enable-lifecycle" # enable hot reload: a POST to localhost:9090/-/reload applies changes immediately
          ports:
            - containerPort: 9090
              name: http
          securityContext:
            runAsUser: 0
          volumeMounts:
            - mountPath: "/etc/prometheus"
              name: config-volume
            - mountPath: "/prometheus"
              name: data
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: kube-vm
spec:
  selector:
    app: prometheus
  type: NodePort
  ports:
    - name: web
      port: 9090
      targetPort: http

Apply the manifests above directly.

$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-prom-config.yaml
$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-prom-pvc.yaml
$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-prom-deploy.yaml
$ kubectl get pods -n kube-vm -owide
NAME                      READY   STATUS    RESTARTS   AGE     IP              NODE      NOMINATED NODE   READINESS GATES
node-exporter-c4d76       1/1     Running   0          27m     192.168.0.109   node2     <none>           <none>
node-exporter-hzt8s       1/1     Running   0          27m     192.168.0.111   master1   <none>           <none>
node-exporter-zlxwb       1/1     Running   0          27m     192.168.0.110   node1     <none>           <none>
prometheus-dfc9f6-2w2vf   1/1     Running   0          4m58s   10.244.2.102    node2     <none>           <none>
$ kubectl get svc -n kube-vm
NAME         TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
prometheus   NodePort   10.103.38.114   <none>        9090:31890/TCP   4m10s

Once deployed, Prometheus is reachable at http://<node-ip>:31890, and the targets page should show the scrape job covering the 3 nodes.

Redeploy Grafana the same way. The manifest is shown below:

# vm-grafana.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: kube-vm
spec:
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      volumes:
        - name: storage
          persistentVolumeClaim:
            claimName: grafana-data
      containers:
        - name: grafana
          image: grafana/grafana:main
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 3000
              name: grafana
          securityContext:
            runAsUser: 0
          env:
            - name: GF_SECURITY_ADMIN_USER
              value: admin
            - name: GF_SECURITY_ADMIN_PASSWORD
              value: admin321
          volumeMounts:
            - mountPath: /var/lib/grafana
              name: storage
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-vm
spec:
  type: NodePort
  ports:
    - port: 3000
  selector:
    app: grafana
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana-data
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 1Gi
  storageClassName: local-storage
  local:
    path: /data/k8s/grafana
  persistentVolumeReclaimPolicy: Retain
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node2
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-data
  namespace: kube-vm
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: local-storage

$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-grafana.yaml
$ kubectl get svc -n kube-vm | grep grafana
grafana      NodePort   10.97.111.153   <none>        3000:31800/TCP   62s

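Grafana is then reachable at http://<node-ip>:31800. Log in and configure Prometheus as a data source.

Then import dashboard 16098. The result is shown in the figure below.

That completes node metric collection with Prometheus. Next, we rework this setup with VM.

Remote storage: VictoriaMetrics

First we need a single-node VM. Running VM is simple: you can download the binary and start it directly, or start it from the Docker image. Here we again deploy it to the Kubernetes cluster. The manifest is shown below.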

# vm-single-node-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: victoria-metrics
  namespace: kube-vm
spec:
  selector:
    matchLabels:
      app: victoria-metrics
  template:
    metadata:
      labels:
        app: victoria-metrics
    spec:
      volumes:
        - name: storage
          persistentVolumeClaim:
            claimName: victoria-metrics-data
      containers:
        - name: vm
          image: victoriametrics/victoria-metrics:v1.76.1
          imagePullPolicy: IfNotPresent
          args:
            - -storageDataPath=/var/lib/victoria-metrics-data
            - -retentionPeriod=1w
          ports:
            - containerPort: 8428
              name: http
          volumeMounts:
            - mountPath: /var/lib/victoria-metrics-data
              name: storage
---
apiVersion: v1
kind: Service
metadata:
  name: victoria-metrics
  namespace: kube-vm
spec:
  type: NodePort
  ports:
    - port: 8428
  selector:
    app: victoria-metrics
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: victoria-metrics-data
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 20Gi
  storageClassName: local-storage
  local:
    path: /data/k8s/vm
  persistentVolumeReclaimPolicy: Retain
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node2
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: victoria-metrics-data
  namespace: kube-vm
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
  storageClassName: local-storage

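Here the -storageDataPath flag sets the data directory, which we persist in the same way as before. The -retentionPeriod flag sets how long data is kept: one week in the manifest above, and one month by default if it is not set. Apply the manifest above directly.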

$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-single-node-deploy.yaml
$ kubectl get svc victoria-metrics -n kube-vm
NAME               TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
victoria-metrics   NodePort   10.106.216.248   <none>        8428:31953/TCP   75m
$ kubectl get pods -n kube-vm -l app=victoria-metrics
NAME                                READY   STATUS    RESTARTS   AGE
victoria-metrics-57d47f4587-htb88   1/1     Running   0          3m12s
$ kubectl logs -f victoria-metrics-57d47f4587-htb88 -n kube-vm
2022-04-22T08:59:14.431Z info VictoriaMetrics/lib/logger/flag.go:12 build version: victoria-metrics-20220412-134346-tags-v1.76.1-0-gf8de318bf
2022-04-22T08:59:14.431Z info VictoriaMetrics/lib/logger/flag.go:13 command line flags
2022-04-22T08:59:14.431Z info VictoriaMetrics/lib/logger/flag.go:20 flag "retentionPeriod"="1w"
2022-04-22T08:59:14.431Z info VictoriaMetrics/lib/logger/flag.go:20 flag "storageDataPath"="/var/lib/victoria-metrics-data"
2022-04-22T08:59:14.431Z info VictoriaMetrics/app/victoria-metrics/main.go:52 starting VictoriaMetrics at ":8428"...
2022-04-22T08:59:14.432Z info VictoriaMetrics/app/vmstorage/main.go:97 opening storage at "/var/lib/victoria-metrics-data" with -retentionPeriod=1w
......
2022-04-22T08:59:14.449Z info VictoriaMetrics/app/victoria-metrics/main.go:61 started VictoriaMetrics in 0.017 seconds
2022-04-22T08:59:14.449Z info VictoriaMetrics/lib/httpserver/httpserver.go:91 starting http server at http://127.0.0.1:8428/

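The single-node VictoriaMetrics is now deployed. Next, configure Prometheus to remote-write to VM by updating the Prometheus configuration. (If you run several Prometheus instances, they can all write to the same VM: shard Prometheus and configure remote_write in each shard, so that the storage and query load moves from Prometheus to VM.)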

# vm-prom-config2.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-vm
data:
  prometheus.yaml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    remote_write: # remote-write to the VM remote storage
      - url: http://victoria-metrics:8428/api/v1/write
    scrape_configs:
      - job_name: "nodes"
        static_configs:
          - targets: ['192.168.0.109:9111', '192.168.0.110:9111', '192.168.0.111:9111']
        relabel_configs: # extract the IP from __address__ via relabeling; used later to verify that VM supports relabeling
          - source_labels: [__address__]
            regex: "(.*):(.*)"
            replacement: "${1}"
            target_label: 'ip'
            action: replace

Update the Prometheus ConfigMap:

$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-prom-config2.yaml
# after the update, trigger a reload so that Prometheus re-reads its configuration
$ curl -X POST "http://192.168.0.111:31890/-/reload"
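The same reload can be scripted, followed by a check that the running configuration really contains a remote_write block. This is a hedged sketch: the helper names are hypothetical, and it assumes Prometheus' `/api/v1/status/config` endpoint returns the loaded configuration as a YAML string under `data.yaml`. Keep in mind that a ConfigMap mounted as a volume can take a little while to sync into the Pod, so a reload sent immediately after `kubectl apply` may still read the old file.

```python
import json
import urllib.request


def build_reload_request(base_url):
    """Build the POST request for Prometheus' /-/reload lifecycle endpoint."""
    url = base_url.rstrip("/") + "/-/reload"
    return urllib.request.Request(url, data=b"", method="POST")


def has_remote_write(status_config):
    """Check a /api/v1/status/config response for a remote_write section."""
    yaml_text = status_config.get("data", {}).get("yaml", "")
    return "remote_write:" in yaml_text


def reload_and_verify(base_url, timeout=10):
    """Reload Prometheus, then report whether remote_write is in the loaded config."""
    with urllib.request.urlopen(build_reload_request(base_url), timeout=timeout):
        pass  # a non-2xx status raises urllib.error.HTTPError
    config_url = base_url.rstrip("/") + "/api/v1/status/config"
    with urllib.request.urlopen(config_url, timeout=timeout) as resp:
        return has_remote_write(json.load(resp))


# Example (needs access to the NodePort, so it is left commented out):
# print(reload_and_verify("http://192.168.0.111:31890"))
```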

Once the configuration takes effect, Prometheus starts remote-writing data to VM. To verify, check whether data appears in VM's persistent data directory:

$ ll /data/k8s/vm/data/
total 0
drwxr-xr-x 4 root root 38 Apr 22 17:15 big
-rw-r--r-- 1 root root  0 Apr 22 16:59 flock.lock
drwxr-xr-x 4 root root 38 Apr 22 17:15 small
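Besides looking at the data directory, the written samples can be read back through VM's Prometheus-compatible query API. The sketch below is illustrative: the helper names are hypothetical, the base URL assumes the NodePort 31953 shown earlier, and `series_by_ip` assumes the `ip` label produced by the relabel rule is present on the returned series.

```python
import json
import urllib.parse
import urllib.request


def build_query_url(base_url, promql):
    """Build a Prometheus-compatible instant-query URL for VM."""
    query = urllib.parse.urlencode({"query": promql})
    return f"{base_url.rstrip('/')}/api/v1/query?{query}"


def series_by_ip(response):
    """Map each series' `ip` label to its sample value from an instant-query response."""
    result = {}
    for item in response.get("data", {}).get("result", []):
        ip = item["metric"].get("ip", "")
        result[ip] = float(item["value"][1])  # value is [timestamp, "string"]
    return result


def query_vm(base_url, promql, timeout=10):
    """Run an instant query against VM and return the decoded JSON response."""
    with urllib.request.urlopen(build_query_url(base_url, promql), timeout=timeout) as resp:
        return json.load(resp)


# Example (needs access to the NodePort, so it is left commented out):
# print(series_by_ip(query_vm("http://192.168.0.111:31953", 'up{job="nodes"}')))
```

If remote write is working, the commented call should list one entry per node IP, which also shows that the relabeled `ip` label arrived in VM.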

Now change the data source URL in Grafana to the VM address:

After the change, reopen the node-exporter dashboard. It renders normally, which shows that VM is compatible.
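The data source change can also be scripted against Grafana's HTTP API instead of clicking through the UI. The sketch below creates a new Prometheus-type data source pointing at VM rather than editing the existing one, which is a deliberate simplification. The helper names, the data source name "VictoriaMetrics", and the in-cluster URL `http://victoria-metrics:8428` (the Service name used in the remote_write configuration) are assumptions for illustration; the credentials are the ones set in the Grafana manifest.

```python
import base64
import json
import urllib.request


def datasource_payload(name, url):
    """Payload for a Prometheus-type data source; VM speaks the same query API."""
    return {"name": name, "type": "prometheus", "url": url, "access": "proxy", "isDefault": True}


def build_create_request(grafana_url, user, password, payload):
    """Build the POST /api/datasources request with HTTP basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        grafana_url.rstrip("/") + "/api/datasources",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


# Example (needs access to the Grafana NodePort, so it is left commented out):
# payload = datasource_payload("VictoriaMetrics", "http://victoria-metrics:8428")
# req = build_create_request("http://192.168.0.111:31800", "admin", "admin321", payload)
# urllib.request.urlopen(req, timeout=10)
```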

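Replacing Prometheus

Above we had Prometheus remote-write its data to VM. Enabling remote write increases Prometheus' own resource usage, however. In principle we can replace Prometheus with VM entirely, so that no remote write is needed, and VM itself uses fewer resources than Prometheus.

First stop the Prometheus service:

$ kubectl scale deploy prometheus --replicas=0 -n kube-vm

Then mount the Prometheus configuration file into the VM container and point the -promscrape.config flag at it, as shown below: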

# vm-single-node-deploy2.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: victoria-metrics
  namespace: kube-vm
spec:
  selector:
    matchLabels:
      app: victoria-metrics
  template:
    metadata:
      labels:
        app: victoria-metrics
    spec:
      volumes:
        - name: storage
          persistentVolumeClaim:
            claimName: victoria-metrics-data
        - name: prometheus-config
          configMap:
            name: prometheus-config
      containers:
        - name: vm
          image: victoriametrics/victoria-metrics:v1.76.1
          imagePullPolicy: IfNotPresent
          args:
            - -storageDataPath=/var/lib/victoria-metrics-data
            - -retentionPeriod=1w
            - -promscrape.config=/etc/prometheus/prometheus.yaml
          ports:
            - containerPort: 8428
              name: http
          volumeMounts:
            - mountPath: /var/lib/victoria-metrics-data
              name: storage
            - mountPath: /etc/prometheus
              name: prometheus-config

Remember to remove the remote_write block from the Prometheus configuration first, then update VM:

$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-prom-config.yaml
$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-single-node-deploy2.yaml
$ kubectl get pods -n kube-vm -l app=victoria-metrics
NAME                                READY   STATUS    RESTARTS       AGE
victoria-metrics-8466844968-ncfnp   1/1     Running   2 (3m3s ago)   3m45s
$ kubectl logs -f victoria-metrics-8466844968-ncfnp -n kube-vm
......
2022-04-22T10:01:59.837Z info VictoriaMetrics/app/victoria-metrics/main.go:61 started VictoriaMetrics in 0.022 seconds
2022-04-22T10:01:59.837Z info VictoriaMetrics/lib/httpserver/httpserver.go:91 starting http server at http://127.0.0.1:8428/
2022-04-22T10:01:59.837Z info VictoriaMetrics/lib/httpserver/httpserver.go:92 pprof handlers are exposed at http://127.0.0.1:8428/debug/pprof/
2022-04-22T10:01:59.838Z info VictoriaMetrics/lib/promscrape/scraper.go:103 reading Prometheus configs from "/etc/prometheus/prometheus.yaml"
2022-04-22T10:01:59.838Z info VictoriaMetrics/lib/promscrape/config.go:96 starting service discovery routines...
2022-04-22T10:01:59.839Z info VictoriaMetrics/lib/promscrape/config.go:102 started service discovery routines in 0.000 seconds
2022-04-22T10:01:59.840Z info VictoriaMetrics/lib/promscrape/scraper.go:395 static_configs: added targets: 3, removed targets: 0; total targets: 3

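The VM log shows that it read the Prometheus configuration and added 3 scrape targets (the node-exporter instances). Now check in Grafana whether the node-exporter dashboard still renders correctly, first making sure the data source points at VM.

VM has now replaced Prometheus. We can also explore the collected metrics on Grafana's Explore page.

Web UI

Single-node VM ships with a built-in web UI, vmui. Its features are still fairly basic, and it can be reached directly through VM's NodePort.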

$ kubectl get svc victoria-metrics -n kube-vm
NAME               TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
victoria-metrics   NodePort   10.106.216.248   <none>        8428:31953/TCP   75m

Here VM is reachable at http://<node-ip>:31953:

The UI itself is served at the /vmui endpoint:

To see the scrape targets, use the /targets endpoint:
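For scripted checks, the target list is also available as JSON. The sketch below assumes VM exposes the Prometheus-compatible `/api/v1/targets` endpoint and that each active target carries a `health` field, as in Prometheus' own response format. The helper names are hypothetical and the base URL assumes the NodePort 31953 shown above.

```python
import json
import urllib.request
from collections import Counter


def summarize_targets(response):
    """Count active targets per health state ("up", "down", ...)."""
    targets = response.get("data", {}).get("activeTargets", [])
    return Counter(t.get("health", "unknown") for t in targets)


def fetch_targets(base_url, timeout=10):
    """Fetch the target list from the Prometheus-compatible targets API."""
    url = base_url.rstrip("/") + "/api/v1/targets"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


# Example (needs access to the NodePort, so it is left commented out):
# print(summarize_targets(fetch_targets("http://192.168.0.111:31953")))
```

With all three node-exporter instances healthy, the summary should report three targets in the "up" state.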

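These features cover basic needs but are still quite limited. If you are used to the Prometheus UI, you can use promxy in place of vmui. promxy can also aggregate data from multiple single-node VM instances and show targets, among other things. The manifest is shown below: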

# vm-promxy.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: promxy-config
  namespace: kube-vm
data:
  config.yaml: |
    promxy:
      server_groups:
        - static_configs:
            - targets: [victoria-metrics:8428] # VM address; append further entries for additional instances
          path_prefix: /prometheus # path prefix
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: promxy
  namespace: kube-vm
spec:
  selector:
    matchLabels:
      app: promxy
  template:
    metadata:
      labels:
        app: promxy
    spec:
      containers:
        - args:
            - "--config=/etc/promxy/config.yaml"
            - "--web.enable-lifecycle"
            - "--log-level=trace"
          env:
            - name: ROLE
              value: "1"
          command:
            - "/bin/promxy"
          image: quay.io/jacksontj/promxy
          imagePullPolicy: Always
          name: promxy
          ports:
            - containerPort: 8082
              name: web
          volumeMounts:
            - mountPath: "/etc/promxy/"
              name: promxy-config
              readOnly: true
        - args: # container to reload configs on configmap change
            - "--volume-dir=/etc/promxy"
            - "--webhook-url=http://localhost:8082/-/reload"
          image: jimmidyson/configmap-reload:v0.1
          name: promxy-server-configmap-reload
          volumeMounts:
            - mountPath: "/etc/promxy/"
              name: promxy-config
              readOnly: true
      volumes:
        - configMap:
            name: promxy-config
          name: promxy-config
---
apiVersion: v1
kind: Service
metadata:
  name: promxy
  namespace: kube-vm
spec:
  type: NodePort
  ports:
    - port: 8082
  selector:
    app: promxy

Apply the manifests above directly:

$ kubectl apply -f https://p8s.io/docs/victoriametrics/manifests/vm-promxy.yaml
$ kubectl get pods -n kube-vm -l app=promxy
NAME                      READY   STATUS    RESTARTS   AGE
promxy-5f7dfdbc64-l4kjq   2/2     Running   0          6m45s
$ kubectl get svc promxy -n kube-vm
NAME     TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
promxy   NodePort   10.110.19.254   <none>        8082:30618/TCP   6m12s

The Promxy page looks essentially the same as Prometheus' built-in web UI.

This covers the basic usage of single-node VictoriaMetrics.

边栏推荐

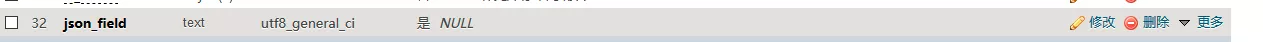

- How MySQL queries and modifies JSON data

- 爬虫练习题(二)

- The basic grammatical structure of C language

- Common interview questions in Android, 2022 golden nine silver ten Android factory interview questions hit

- 1亿单身男女撑起一个IPO,估值130亿

- PG基础篇--逻辑结构管理(用户及权限管理)

- vagrant2.2.6支持virtualbox6.1版本

- Shell编程基础(第8篇:分支语句-case in)

- Debezium系列之:postgresql从偏移量加载正确的最后一次提交 LSN

- 数据库 逻辑处理功能

猜你喜欢

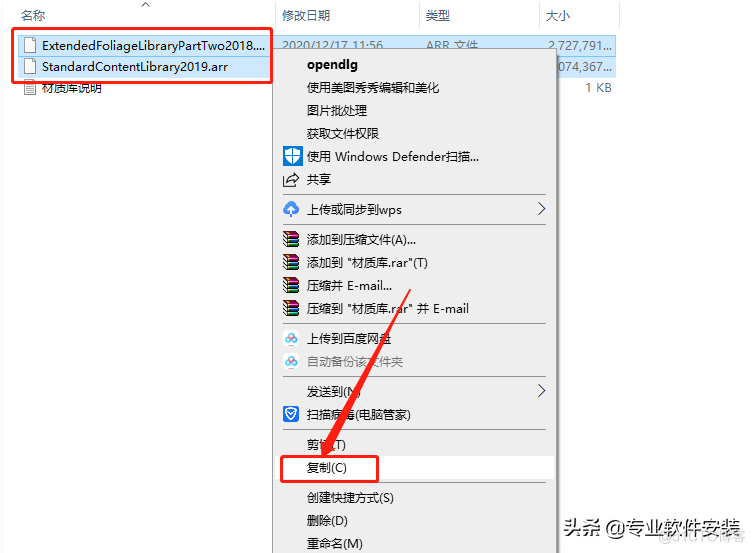

Fuzor 2020软件安装包下载及安装教程

How MySQL queries and modifies JSON data

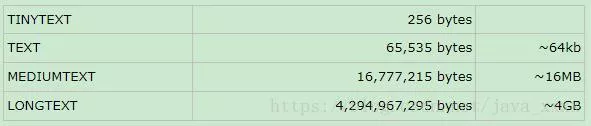

MySql中的longtext字段的返回问题及解决

Bitcoinwin (BCW)受邀参加Hanoi Traders Fair 2022

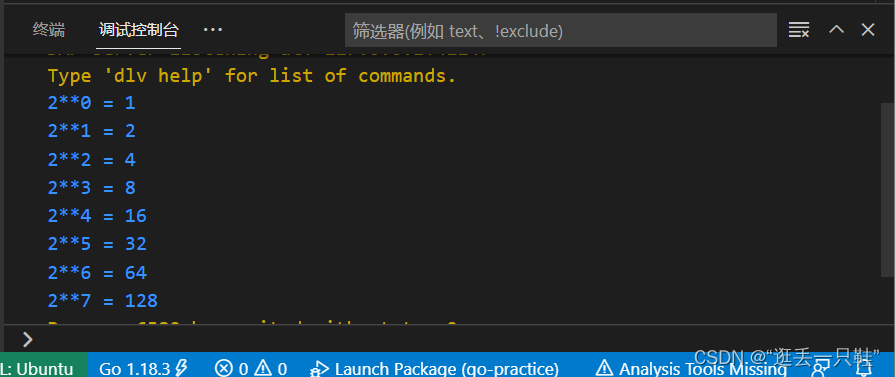

Go语言 | 02 for循环及常用函数的使用

Common - Hero Minesweeper

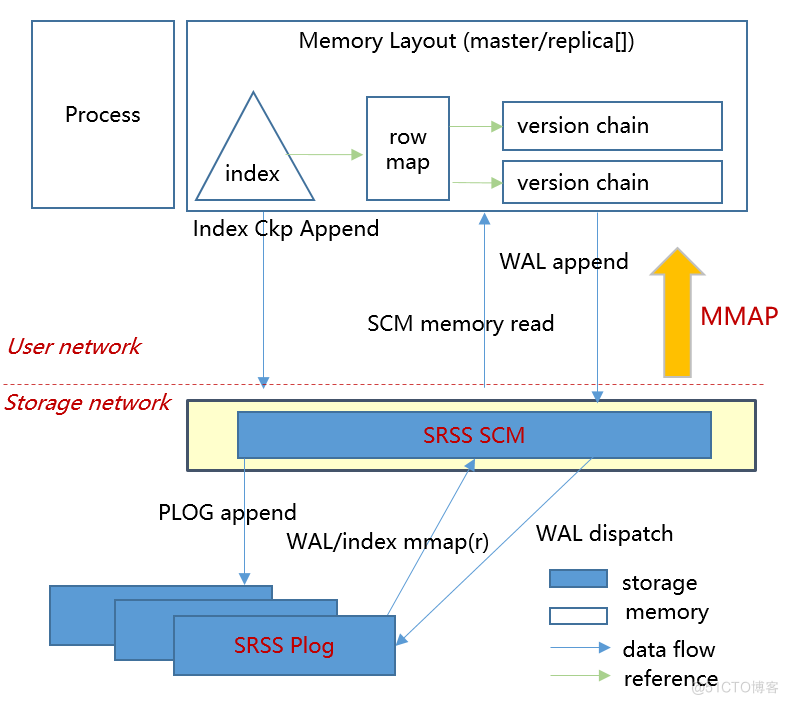

HiEngine:可媲美本地的云原生内存数据库引擎

S7-200SMART利用V90 MODBUS通信控制库控制V90伺服的具体方法和步骤

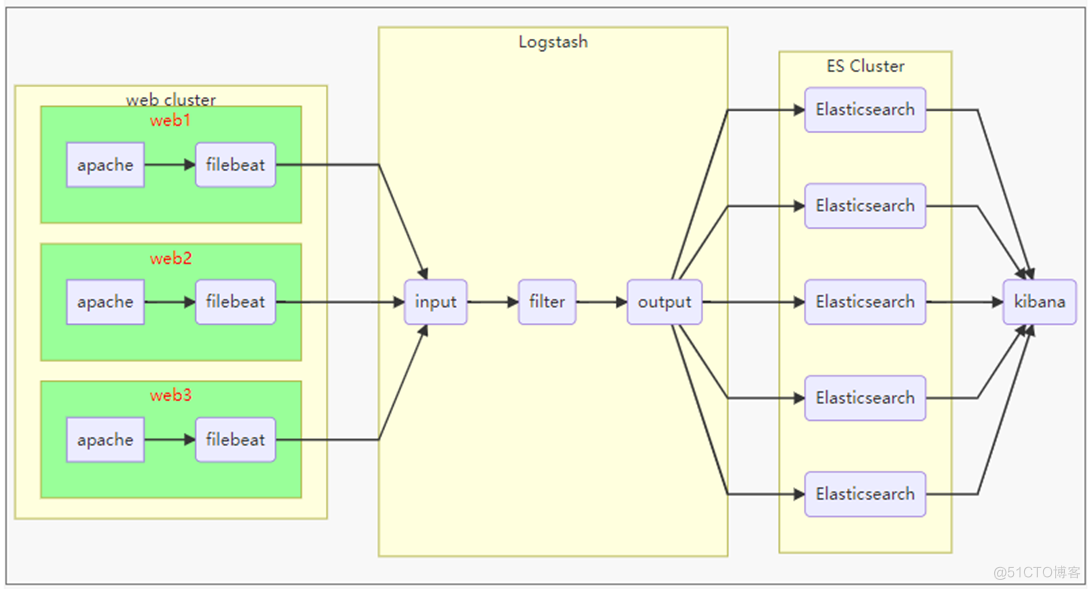

ELK分布式日志分析系统部署(华为云)

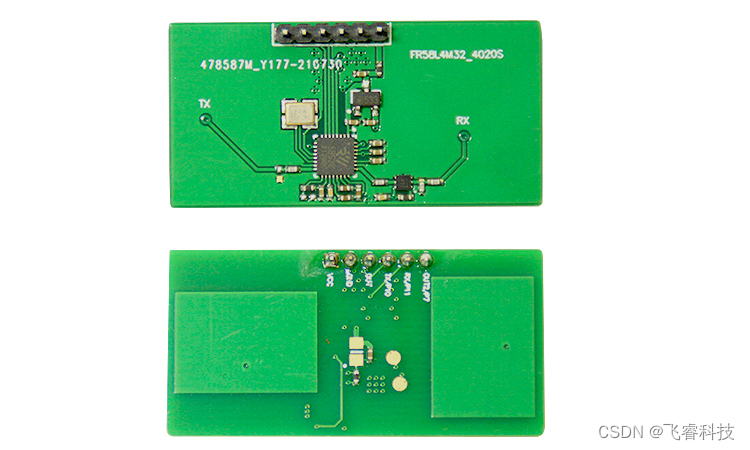

Microwave radar induction module technology, real-time intelligent detection of human existence, static micro motion and static perception

随机推荐

What are the reliable domestic low code development platforms?

HAC cluster modifying administrator user password

成功入职百度月薪35K,2022Android开发面试解答

不愧是大佬,字节大牛耗时八个月又一力作

MMO項目學習一:預熱

IFD-x 微型红外成像仪(模块)关于温度测量和成像精度的关系

Debezium系列之:修改源码支持drop foreign key if exists fk

【FAQ】华为帐号服务报错 907135701的常见原因总结和解决方法

潘多拉 IOT 开发板学习(HAL 库)—— 实验8 定时器中断实验(学习笔记)

Go语言 | 02 for循环及常用函数的使用

详解SQL中Groupings Sets 语句的功能和底层实现逻辑

Go语言 | 01 WSL+VSCode环境搭建避坑指南

Go语言 | 03 数组、指针、切片用法

acm入门day1

Talking about fake demand from takeout order

Summer Challenge database Xueba notes, quick review of exams / interviews~

力扣 729. 我的日程安排表 I

从零实现深度学习框架——LSTM从理论到实战【实战】

flume系列之:拦截器过滤数据

Debezium系列之:记录mariadb数据库删除多张临时表debezium解析到的消息以及解决方法