当前位置:网站首页>Learning multi-level structural information for small organ segmentation

Learning multi-level structural information for small organ segmentation

2022-07-04 06:06:00 【Never_ Jiao】

Learning multi-level structural information for small organ segmentation

Journal Publishing :Signal Processing( Engineering technology 2 District )

Time of publication :2022 year

Abstract

Deep neural networks in the liver 、 Kidney and other medical image segmentation has achieved great success , Its accuracy has exceeded the human level . However , Small organ segmentation ( For example, pancreas ) It's still a challenging task . To solve these problems , Extracting and aggregating multi-scale robust features becomes very important . In this paper , We go through Integration area 、 Boundary and pixel level information to develop multi-level structure loss , To supervise feature fusion and accurate segmentation . The novel pixel by pixel term can provide complementary information with regional and boundary losses , This helps to find more local information from the image . We further developed a multi branch network with a saliency guidance module , To better aggregate the characteristics of the three levels . Adopt the segmentation architecture from coarse to fine , Use the prediction of rough stage to obtain the bounding box of fine stage . For three benchmark data sets ( namely NIH pancreas 、ISICDM Pancreas and MSD Spleen dataset ) Conducted a comprehensive evaluation , It shows that compared with several most advanced methods of pancreas and spleen segmentation , Our model can significantly improve the segmentation accuracy . Besides , Ablation studies show that , The multi-level structure feature is conducive to the training stability and the convergence of coarse to fine methods .

Introduction

Image segmentation is the central theme of medical image processing , It divides the image volume into sub regions according to biological structure and function . Various methods for image segmentation have been developed , For example, regional growth [1]、 clustering [2]、 Figure cutting method [3] And model-based [4,5]. However , Because of the shape 、 High variability in size and location , from CT Segmenting a small organ from a scan is still challenging . Pancreas is a typical representative of human small organs . Traditional segmentation based on multi map registration [6] In the liver 、 The segmentation accuracy of kidney and spleen can reach 90% above , The accuracy of segmentation on pancreas is only 70% about . Due to the great progress of deep learning , Especially convolutional neural network (CNN) Such as FCN [7]、U-Net [8] and DeepLab [9], The accuracy of medical image segmentation has been significantly improved in the past few years . However , Because there is ambiguity in distinguishing pixels around the boundary , It is still difficult to accurately segment boundaries . therefore , Multiscale features [10,11] And effective feature fusion methods [11-13] It is deeply studied to assist in accurate segmentation . Xie and Tu [10] An end-to-end edge detection system is developed to automatically learn the types of rich hierarchical features . Chen et al [14] An efficient depth contour sensing network is proposed , It is used for accurate gland segmentation under the unified multi task learning framework . Shen et al [15] A multi task full convolution network architecture is proposed to jointly learn and predict tumor regions and tumor boundaries . Xu et al [16] A deep multi-channel framework is designed to automatically utilize and fuse complex information , Include area 、 Location and boundary clues . Duan et al [17] Use the complete convolution network to estimate the probability map of region and edge position , The network is combined into a single nested level set optimization framework to achieve multi region segmentation . Pang et al [18] The boundary attention module is introduced to bridge the semantic gap between multi-level features . Zhang and Pang [19] The edge and significance information for segmentation are determined , And proposed the cross guidance network . The depth edge prior is also introduced into the network , To accurately integrate edge information to process image denoising 、 Super resolution and segmentation tasks [20-22]. lately , Zhou et al [23] Developed a method for RGB A new multi label learning network for semantic segmentation of hot city scenes , The network is semantic 、 The network is trained in terms of binary and boundary characteristics . Zhou et al [24] A cross flow and cross scale adaptive fusion network is proposed , And use the purification loss to accurately learn the boundaries and details of the object . Besides , Regularization method has been introduced CNN, To utilize the prior information of image edges , For example, total variation regularization [25] Sum graph total variation regularization [26], adopt softmax The activation function integrates it into CNN In the framework of . The above method improves the segmentation accuracy by adopting multi task method or introducing additional network structure to optimize the context feature extraction process . However , Used for measurement, prediction and ground truth The design of loss function of similarity also plays an important role in accurate segmentation .

Suppose a 2D Images U ∈ X, Where the strength of the specified position is expressed as U(x, y ), Tag data V ∈ X And U Share the same dimension , among X Is a topological vector space . Then train the feedforward for the image segmentation task CNN It can be formally expressed as the following two-level optimization problem

The upper minimization is called loss function E : X × X → R Map real valued variables to real numbers , The lower minimization represents CNN Model F(·; U , θ ), among P θ For its output . The loss function measures P θ And marked ground truth Differences between , It is F(·; U , θ ) The minimum value of , It has similar functions with the objective function in the variational model .Chan and Vese [5] Proposed active contour (AC) The model deploys an effective a priori on the image boundary , In the foreground - Background segmentation has achieved great success , To minimize

among Γ It's a closed curve ,c 1 , c 2 It's a curve Γ Internal and external images U(x, y ) The average of , The term Length (Γ) Express Γ The length of , The term Area (Γ) Express Γ The area within ,μ, ν, λ1 , λ2 Is a positive parameter . From then on , It's been studied Chan-Vese Many variants of the model . for example , Yang et al [27] A high-order weighted variational model for image segmentation is proposed , Its weight is automatically estimated according to the edge information of the observed image . Wu et al [28] An effective regularization term is introduced , It combines an adaptive weighting matrix to enhance the diffusion along the tangent direction of the edge . in fact ,AC The model not only minimizes the distance between the solution and the input image , It also minimizes the length and area of the interface and foreground area , This has been used as a loss function for deep neural networks to find better boundaries . Hu et al [29] Use active contour model to help deep network learn information about salient objects . Marcos et al [30] A deep structured active contour is proposed , To combine a priori with constraints ( For example, continuous boundary 、 Smooth edges and sharp corners ) Integrate into the segmentation process . Chen et al [31] A loss function is proposed , Its inspiration comes from the construction of area and length items for bop General idea of active contour model for medical image segmentation . Jin et al [32] A new loss function is introduced , Use the formula based on level set ground truth Spatial correlation in . Hatamizad et al [33] By using the precise boundary drawing ability of active contour model , A deep active lesion segmentation model is introduced . Zhang et al [34] Convex CV Model [35] Integrated into the CNN In structure , To generate more accurate contour segmentation . Kim and Ye [36] Based on active contour model (2) The new loss function of , For semi supervised and unsupervised deep Networks . Ma、He and Yang [37] By minimizing geodesic active contour energy in an end-to-end manner , A level set function regression network is proposed . However , The above losses may lose the impact on small organ segmentation , Because there is less boundary information available . Besides , We summarized the table 1 Loss function for medical segmentation in , It can be divided into regional losses 、 Boundary losses and their combinations .

In order to aggregate complementary information from images , Especially pixel level information , We propose multi-level structure loss function and multi-level structure network , Coding multi-scale context features from different perspectives , To achieve better small organ segmentation . say concretely , Our loss function can measure the prediction and... From low-level pixel classification to intermediate edge localization and high-level region segmentation ground truth Similarity between . Next , We have developed a new multi-layered network , It contains three branches for learning different characteristics , The significance guidance module is used to segment small organs using multi-scale information . We use a coarse to fine segmentation framework for automatic segmentation . Both rough and fine models use ResNet18 As the backbone , And apply empty space pyramid pool (ASPP) Module to promote multi-scale feature extraction and fusion . Our segmentation model was evaluated on two pancreatic segmentation datasets , namely NIH Data sets [56] and ISICDM Data sets 1, And the medical decathlon (MSD) Data sets 2 Subset of spleen . With several of the most advanced based on 2D and 3D Compare the segmentation methods of learning , Our model shows better accuracy and efficiency .

The rest of this paper is arranged as follows . The first 2 Section describes the details of our method , Including problem formulation and network architecture . We developed a bull CNN, To better integrate the 3 The multi-level structural features in the section . The first 4 Section provides the implementation details of our model . The first 5 Section describes the evaluation and experimental results . Last , We summarize the paper and present it in Chapter 6 Section discusses possible future work .

Introduction

Our minimization problem

Considering that the image function can be in the region 、 Measure at contour and pixel levels , We propose the following multistage structural losses , It is composed of complementary information of small organs or unbalanced segmentation

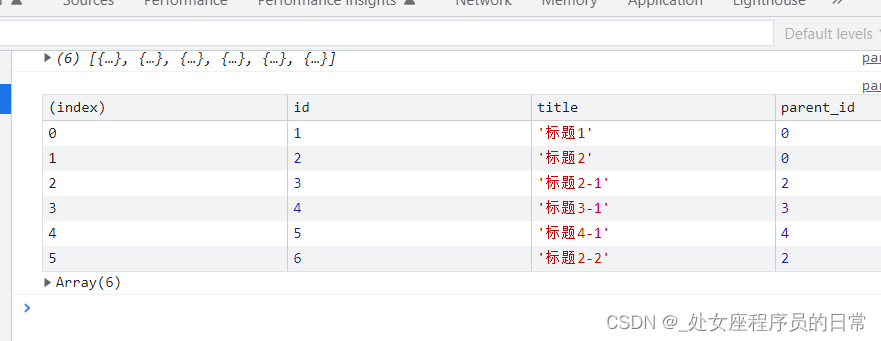

Among them, the term E R (P θ , V) , E B (P θ , V) and E P (P θ , V) Measure areas separately 、 Boundary and pixel level prediction P and ground truth V Differences between . Pictured 1 Shown , The first item can describe the overall appearance of the foreground , The other two can identify the differences on the boundary . Different from most existing loss functions that focus on regional and edge information , We propose a novel pixel by pixel penalty to capture local features ignored by region and boundary terms . By using three-level features , Our method can ideally identify the structural information in the learning process and produce more ideal boundaries .

Fig.1 Illustration of multi-level structure information from global to local scope , It predicts ( Red border ) and ground truth( The blue border ) The relationship between them can be through the region 、 Boundary and pixel level measurements . ( Explanation of the color reference in this legend , Please refer to the online version of this article .)

Region loss

Regional terms are widely used in segmentation tasks , It measures the area predicted by the network and the corresponding ground truth Overlap between ; See chart 1 (a) The blue area in . There are many choices of regional terms , For the sake of illustration , We focus on binary segmentation . hypothesis Ω yes Rn Bounded open subset of . Binary cross entropy (BCE) Usually used for training CNN Model for medical image segmentation tasks , Where represents pixel by pixel multiplication . Cross entropy is designed to predict each pixel to find the target region , and Dice Loss concerns overlap areas . BCE and Dice Many variants of loss have been studied for medical segmentation .

Ronneberge wait forsomeone [8] The class equilibrium weight of cross entropy is introduced , It uses weights to balance the number of positive and negative samples . Caliva et al [47] Use the distance graph as the weight of cross entropy , Focus on the difficult boundary areas . TopK Loss [45] It also focuses on difficult samples by discarding simple samples . Lin et al [46] Firstly, the focus loss of target detection is proposed , Then used for image segmentation , It introduces a modulation factor to control the importance of simple samples .IoU Loss [39] The design of is similar to Dice Loss , Among them, the cross association is minimized in the deep neural network . Sudre et al [41] By minimizing the overlapping area of foreground and background, a generalized Dice Loss . Yang、Kweon and Kim [44] In a broad sense Dice Add a term to the coefficient that penalizes false negatives and false positives , A novel loss function is introduced . Salehi [40] Balance the true negative and false positive situations by introducing a weight , Put forward Tversky Loss . Hashemite et al [43] Based on Tversky Loss of asymmetric similarity , To achieve a better trade-off between accuracy and recall . Abraham et al [42] By combining focal loss and Tversky loss The idea of focal Tversky loss. Compound losses have also been used for segmentation , for example nnU-Net [48] Of Dice And cross entropy loss ,anatomyNet [49] Of Dice And focus loss .

Boundary loss

For small organ segmentation and unbalanced segmentation , Area loss , for example Dice and CE, Treat all samples and categories equally , This leads to training instability and prediction boundaries biased towards most categories . Kervadec wait forsomeone [51] A boundary loss is proposed , To use integrals on the interface between regions rather than on regions , It is expressed as the distance measurement on the contour space ( See chart 1 (b) Visual description of )

among σ Is defined in ground truth Signed distance function on .

among D Is the object area ,∂D It's the interface . In order to reduce the gap between the prediction boundary and the target boundary Hausdorff distance ,Karimi and Septimiu [50] Use the following differentiable Hausdorff Distance to train CNN

among VDTM and PDTM Express ground truth V And forecasting P Distance transformation diagram of , In the form of ( Only for VDTM, Not given PDTM?)

Xue et al [52] Put forward to use CNN Return to ground truth Signed distance function of . Mortz et al [54] Developed the outline Dice Coefficient to quantify the extent of the predicted segmented surface relative to the reference segmentation . Lan、Xiang and Zhang [53] By minimizing the elastic energy of long and thin structural curves , A loss function based on elastic interaction is proposed . Azzopardi wait forsomeone [55] The objective function of geometric constraints is introduced , This function uses prior knowledge to construct and adjust the segmentation of carotid artery structure .

Pixel-wise loss

However , When the pixel approaches the boundary , Signed distance function (7) The value of tends to zero , This leads to inaccurate segmentation near the organ boundary . as everyone knows , The boundary describes the important high-level details of the target . therefore , We introduce a loss term per pixel to enhance the effect of regularization on pixel level prediction . Bansal et al [57] An efficient PixelNet, It shows that a small number of pixels sampled from each image is enough for learning to obtain satisfactory segmentation performance .PixelNet The advantages of are twofold , Sampling only needs to be calculated on demand , It also provides flexibility to allow the network to focus on rare samples . Kirilov et al [58] Put forward PointRend Neural network module , It is used to perform point-based segmentation prediction at the adaptively selected position based on the iterative subdivision algorithm , Image segmentation is regarded as a rendering problem . PointRend Used as a CNN Post processing of the model , It can output high-resolution predictions on finer grids , To get a clear boundary between objects . obviously , Learning pixel level information is very important for segmentation accuracy and model efficiency , Especially when rough segmentation has been obtained .

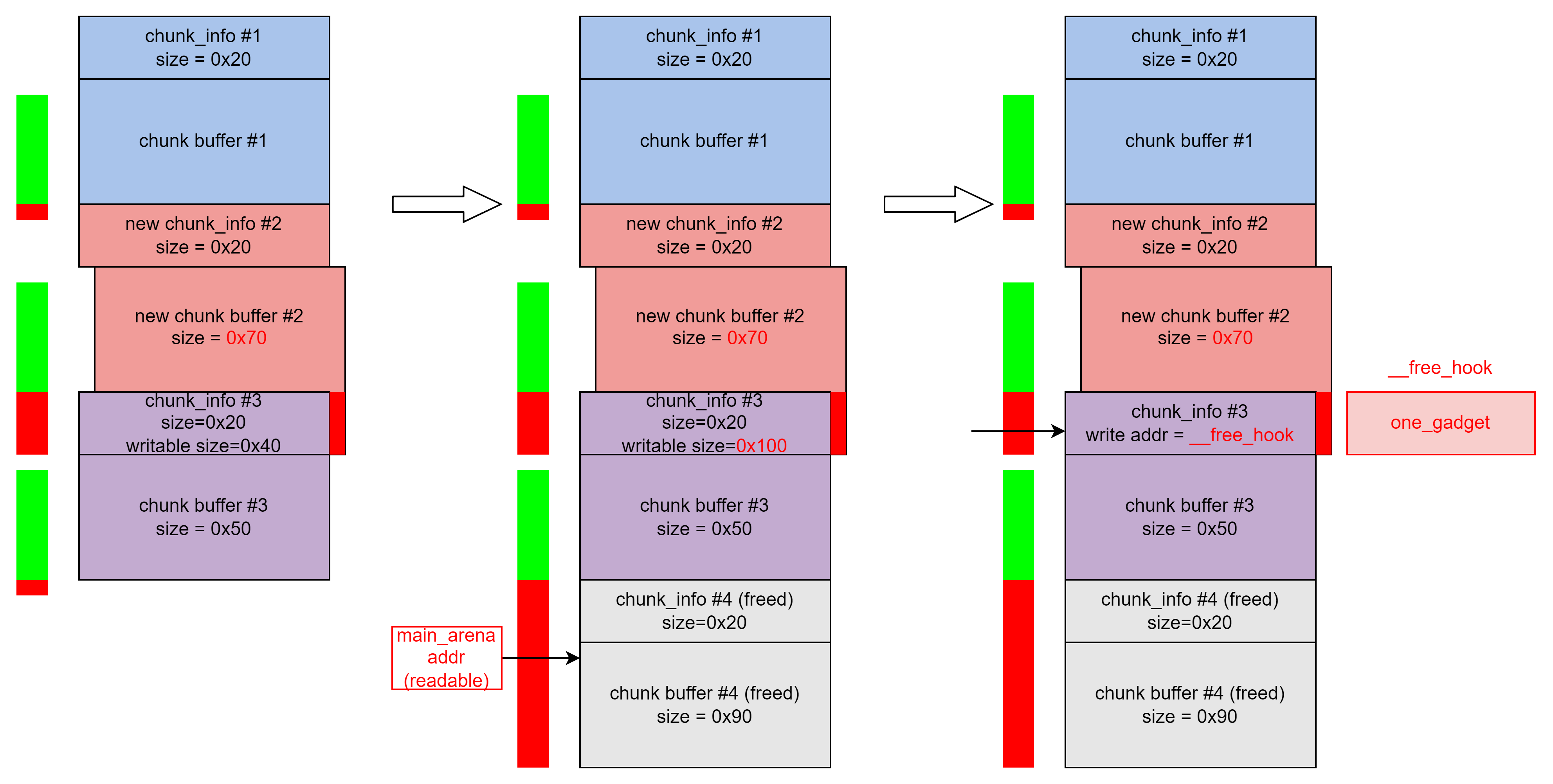

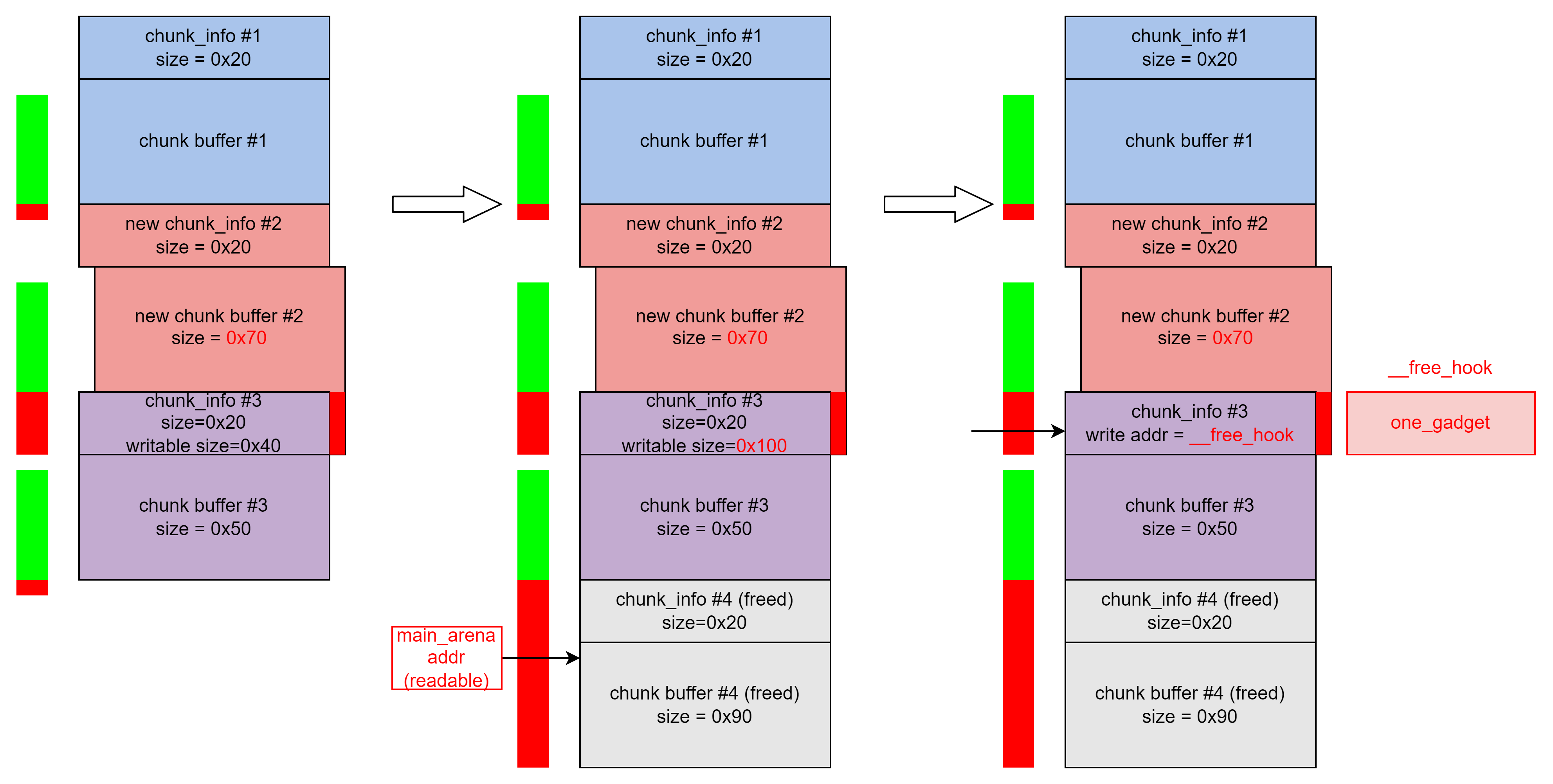

Our coarse-to-fine model

Our goal is to use 2D Network from CT Segmentation in abdominal scanning 3D Medical images , Such as pancreas and spleen . For such a small organ segmentation problem , The method from coarse to fine is a good choice , It uses rough phase predictions to narrow the input of the fine phase [59,60].

We will scan 3D The image is represented as U, The size is W×H×L, among W、H and L They are along the coronal 、 Number of slices in sagittal and axial views . Then we cut each volume into two-dimensional slices along each axis , Expressed as UC,w ( w = 1 , … , W ), US,h ( h = 1 , 2 , … , H) and U^A ,l^ ( l = 1 , 2 , . . . , L ). Now? , We take the axial view as an example to illustrate our model from coarse to fine . Rough model predicts rough segmentation from input data , It can be expressed as

The advantage of rough segmentation is that we can not only estimate the bounding box according to the segmentation , It can also generate good initialization for fine models . First , We get a bounding box based on binary segmentation PA,l C = || (PA,l θC ≥ 0 . 5) , It's an all inclusive

P A,l C Non zero pixels and a K The smallest margin of pixels 2D Bounding box . then , We define a clipping function C[·; PA,l C ] To crop and... From a given image PA,l θC Bounding box areas of the same size . On the other hand , stay [60] after , We introduce a saliency transformation module to generate images with attention , As initialization of fine model

among F S (·; θS ) By θS Parameterized transformation function . We further use a refined model to estimate the final segmentation , for example

among F(·; θ ) By θ Parameterized partition function . Please note that , We will the original image UA,l Connect to the input of the fine model , To enhance the edge 、 Shallow features such as lines and corners . It's not hard to find out , The coarse model and the fine model are combined by the significance transformation . Our final division is made up of PA,l = I (PA,l θ ≥ 0 . 5) Obtained binary image .

Network architecture

For the task of small organ segmentation , We do it through inheritance DeepLab-v3 Cascading blocks and ASPP Module to build coarse model and fine model , Through hole convolution and pyramid pool for more in-depth research . For illustrative purposes , We browsed through the network architecture of our fine model , Pictured 2 Shown . We use ResNet-18 As our backbone network , It contains four basic blocks . The input of the fine model includes the saliency map and the original image , To provide good initialization and more image features . When entering block1 Before , We remove the down sampling operation in the first convolution , Keep the information inside the input . We use rate = 2 and rate = 4 Of atrous Convolution to replace block3 and block4 Continuous strides in . The motivation behind these operations is to retain more local information and expand the receptive field , This is important for small goals . ASPP Modules can integrate different atrous rate Get the features to effectively capture multi-scale context information . By further passing the feature through two 1 × 1 Convolution layer , We get the final logits Lθ, It is used to generate predictions by up sampling using bilinear interpolation . For rough Networks , The only difference is that we keep stepping in the first convolution layer to reduce the computational cost . Last , For significance conversion , We simply apply the convolution of two size preserving , Each has 5 × 5 and 3 × 3 Filter size .

Fig.2 Illustration of a fine segmentation model using multilevel structural losses , among 1 × 1 Indicates that the kernel is 1 Convolution of . Although the network uses 3 Slices as a unit , But for the sake of understanding , We only show one slice .

Loss function

We use the rough model and the fine model respectively Dice Losses and multistage structural losses (3). Pictured 2 Shown , The region and boundary terms in the multistage loss are selected as [51] Medium Dice And boundary losses , They are evaluated as a powerful combination of small organ segmentation [61]. Because spatial redundancy limits the information learned by convolutional networks in general pixel level prediction problems , So the final prediction of the network P θ Tends to over smooth organ areas and within organ boundary samples . therefore , We are in the final logits layer L θ The loss term per pixel is defined on ( See chart 2 To illustrate ), It is coarser than the image grid 4 times ( What do you mean ?). especially , We predict that the probability is the closest through interference 0.5 The pixels of are logits Define an uncertain set of pixels on the layer S, These pixels are located near the boundary and are the most difficult to segment . By minimizing the uncertainty set S Binary cross entropy on , We get the following per pixel context loss ( Personal understanding : Finally, there is one Logits layer , Through this Logits layer , Make the prediction probability close to 0.5 The set of pixels that are difficult to accurately classify is set to an uncertain pixel set S, By minimizing this uncertainty set S Binary cross entropy on , Get per pixel context loss )

I S (L θ ) It's an index function , Defined as

The selection of uncertain pixel set is similar to [58] The three-step strategy of . In order to get a clear and accurate boundary , Let's start with random sampling kN Pixel (k = 3) To encrypt the final logits layer L θ The pixel , Follow uniform distribution to generate enough pixels with uncertainty . Next , We estimate by interpolating the original prediction kN Probability of pixels . Last , We choose mN Pixel , among m = 0.75 The highest uncertainty and (1 -m ) N Random pixels , To increase the generalization of data . therefore , Our set of uncertain points contains N A little bit . From the picture 3 In the example , We can find that uncertain pixels are also located near the boundary . Although the boundary loss can improve accuracy by minimizing the difference between the predicted and real values on the boundary , But it considers the boundary as a whole by ignoring the pixel level . We introduce pixel by pixel loss in rough segmentation , And gradually improve the regularization to allow the network to learn more pixel level information . By allocating pixels on a fine grid , Our per pixel loss term can not only improve the segmentation accuracy of uncertain pixels , It can also provide smoother boundaries , Complementary to boundary losses . Besides , Our per pixel loss term can be flexibly combined with other regional and boundary loss terms , And applicable to any existing deep network architecture .

Fig.3 Final logits Select an uncertain pixel set on the layer S A typical visual example of .

Based on the previous discussion , We jointly minimize the following energy functional during the training phase , To achieve efficient multi-level feature fusion

among ω 1 , ω 2 , α, β ∈ R It is a parameter to balance the contributions of different items .

Multi-level structural network

Subject to multitasking model [19,37] The inspiration of success , We have developed a novel network , Learn and fuse image features with our multilevel structure loss . Pictured 4 Shown , Our multi-level structure network uses multiple branches to learn features from region level to edge level and pixel level , And USES the transformer Structure captures remote information from carefully selected points . All three branches are processed in parallel and fused by a novel saliency guidance module , The module can use multi-level features and output the final prediction . below , We will explain these branches in detail .

Fig.4 Illustration of our hierarchical network . Although the network uses 3 Slices as a unit , But for the sake of understanding , We only show one slice .

The region branch

The regional branch adopts ASPP modular , Multiple scales of image context can be captured , And output binary segmentation prediction Pregion θ . In the process of training , We use Dice loss To punish regional prediction .

The boundary branch

We implemented another ASPP Modules as boundary branches , To support its ability to resample features at different scales . Different from most boundary prediction models , The latter outputs a binary diagram , among 1 Indicates the edge , Otherwise 0, Our model learning is done by (7) Defined signed distance graph . As in the previous step , We The boundary loss function is defined as the boundary loss and the sign distance graph L2 The combination of norms , It gives

among σ(·) Represents the sigmoid function . Please note that , Boundary prediction Pboundary θ Positive within the target , Negative outside the target . therefore , stay

There was one before “-”( minus sign ) Symbol .

The pixel branch

For pixel branches , We use non-local transformer Module to learn one-dimensional pixel features , It not only utilizes local features and global context , The characteristics are also emphasized through the self attention mechanism . Pixel branches directly from the trunk ResBlock1 Extract features to enhance low-level features . We estimate the location of uncertainty points based on regional prediction , The selection method of uncertainty points is the same as before , Except encryption ( What does encryption mean ). especially , We choose the uncertainty point according to the rule point instead of the encryption point , To facilitate integration with the saliency guidance module .PointRend [58] Use and share MLP To predict pixel by pixel segmentation , This can only extract local features . Our pixel branch uses transformer Architecture to capture nonlocal features . From the picture 4 The green box in the can see , Our pixel branch contains a transformer Share with one MLP, To use local and nonlocal features for final prediction . Transformer By a similar [62] Composed of multiple self attention blocks , Followed by a point by point MLP layer (1×1 Convolution ). Then point by point MLP Apply after layer ReLu Activation function . We also merged residual connections . As discussed , Binary cross entropy is used as the loss function of pixel branches , To punish the selected point Lpixel θ The forecast .

The multi-level saliency guidance module

Last , We use multi-level significance guidance module to fuse different features for final prediction . The pre prediction characteristics of all branch outputs are 256 Channels . Then we sample the features of the region and boundary branches up to the original size of the input image . The characteristics of uncertainty points are 256 One dimensional data of channels . We first generate pixel features with the same size as region and boundary level features by filling in zero values , Then replace the position of the uncertainty point with the learned features , This means that only the selected uncertain position is non-zero . In our multilevel significant Sex guidance module , Boundary features and pixel features are summarized at the element level , And adopt two depth directions 1×1 Convolution to extract the relationship between boundary and pixel features , Generate two weight graphs , Multiply with the boundary and pixel features respectively to obtain the saliency map . after , Regional features are multiplied by two saliency maps , And then connect them together . Through two 1 × 1 Convolution of , We get the final prediction Pfusion θ . We use area Dice Loss to train the multi-level significance guidance module .

We use the above multi-level structure loss to learn rich features , The boundary loss can alleviate the imbalance of edge prediction , The pixel loss of monitoring the selected point can make up for the local information . say concretely , The loss function used to train our multilayer network can be described as follows :

among ω 1 , ω 2 , α, β, γ ∈ R Is a parameter that balances the contributions of different branches .

Implementation details

All our experiments are in NVIDIA Titan RTX Upper use Pytorch Realized .

Network training and testing

The training phase

We jointly train the coarse model and the fine model in the training stage . follow [63], We use a three-step optimization strategy , It can guarantee the convergence of the coarse to fine method . First , We use ground truth Generate bounding boxes and optimize rough and fine models respectively . secondly , We still use ground truth bounding box, However, the coarse and fine models are jointly optimized through the significance conversion module . Last , We directly optimize the network from coarse to fine , Instead of using ground truth Bounding box . Our loss function (13) Parameters in ω 1 and ω 2 Be selected as ω 1 = 0.3 , ω 2 = 2 / 3 To balance the contribution of rough and fine models . For the multistage structural loss model , Parameters α Initialize to α = 0 And dynamically adjust , bring α At every epoch Gradually increase 0.2 Until you reach α = 1. When the prediction has certain accuracy to ensure the convergence of the whole network , We will consider our pixel loss . therefore ,β The value of is first 12 individual epoch Set in the for β = 0, And then in the rest of epoch Added to β = 1. chart 5 Drawing a rough and fine model view along three axes DSC Loss curve . It can be seen that ,DSC The value of the epoch Convergence with the increase of quantity . Both the rough model and the fine model have two obvious vibrations . In the 8 individual epoch, We started using rough segmentation to draw the bounding box instead of ground truth Bounding box , This leads to rough and fine models DSC Values are rising . The other is the 12 individual epoch, We introduce the loss per pixel into our loss function . However , In a few days epoch after ,DSC The value of decreases to a much lower value , This shows that the loss per pixel can help the convergence of the proposed model . In training , We adopt the early stop plan , Stop training when the performance of the verification set does not improve .

Fig. 5 During training DSC Line chart of loss . When adding our pixel loss ,DSC Losses increase first , But in the next epoch The number of Li soon decreases .

On the other hand , The parameter settings of our multilayer network are given in a similar way . Parameters α Initialize to α = 1, Because regional branches and boundary branches are trained separately . β and γ The value of is first 12 individual epoch Set to β=γ=0, And then in the rest of epoch Add to β=γ=1, This can ensure the convergence of pixel branches and the final prediction .

The test phase

Different from the training process , Our testing phase is implemented in an iterative process , To refine the output step by step . Rough segmentation , Expressed as P0 , Used to estimate bounding boxes and saliency graphs , Both are inputs to the fine model . Then the refined model iteratively updates the bounding box and saliency graph until convergence . We use maximum iteration and relative DSC To track convergence , among RDSC Defined as

among tol = 0.99 In our experiment , The maximum number of iterations is Tmax = 10 . We draft the algorithm under test as Algorithm 1.

Besides , In order to deal with 3D Medical image segmentation , We usually train three models with each view , First, the segmentation results are fused by majority voting . More details can be found in [59,60] Find .

Architecture setting

Let's first discuss how to modify ResNet Backbone architecture , To overcome its shortcomings in dealing with small organ segmentation . The two down sampling operations before the first block can reduce the computational cost by sacrificing sharp details . Due to the coarse to fine structure of our model , The input size of the fine model has been smaller than its original size 1/2. therefore , It is best to remove the down sampling operation to capture more details from the image . Give Way out_stride Represents the ratio of the spatial resolution of the input image to the output resolution . By deleting the maximum pooling layer or the step in the first convolution or their combination , We can get different out_stride . We compared the tables 2 Different from out_stride Segmentation accuracy 、 Every epoch Training time and FLOPs( Multiply and add ). It can be seen that , The best choice is out_stride = 4, By removing the down sampling in the first convolution layer and preserving the maximum pooling layer , Its FLOPs And training time are close to the baseline model .

Datasets and settings

NIH pancreas dataset

NIH The pancreas dataset contains 82 Strengthen the abdomen CT Volume , Its resolution is W × H × L Volume ,W = H = 512 and L ∈ [181, 466]. And [60] similar , We divide the data set into 4 A fix fold, Every fold Contains almost the same number of samples . We use cross validation to evaluate segmentation performance , That is to say 4 Subset 3 On the training network , And use the remaining subset to test it . In the data preprocessing step , We crop the image intensity at [-100, 240] Inside . about NIH Data sets , We train three independently along each axis 2D Model , Coronal 、 Sagittal and axial views , Then the prediction is fused in each iteration by majority voting . stay Imagenet Pre trained on dataset Resnet-18 Used to initialize the backbone , And USES the Adam As an optimizer . We trained about 20 individual epoch, front 8 individual epoch The learning rate of 1e-5, Every time 2 individual epoch falling 1/2. In the 12 individual epoch When introducing pixel by pixel loss , We reallocate the learning rate to 1e-5, And every two epoch With 1/2 Reduce it . The batch size is set to 1, among 3 Slices connected together . During the test , Although the segmentation accuracy continues to improve in the iterative process , But we stop it to balance the trade-off between computational efficiency and segmentation accuracy , One of them is relatively DSC The tolerance is set to tol = 0.99, The maximum number of iterations is fixed as T max = 10. In training and testing , We use K = 20 The margin of the crop pancreas region .

We use similar training methods for multilayer networks . before 12 individual epoch, We omit pixel branching and saliency guidance modules . When the trunk of the fine model converges , We begin to train the pixel branching and saliency guidance module , This ensures that the uncertainty point is selected correctly . For multilevel Networks ,epoch The total number of is set to 25. We have re realized nnU-Net [48] Result , Which cascades 3D The architecture was chosen for small organ segmentation . We trained each cross validation five times , And take the five networks obtained from the training set as an integration to estimate the results , Its pretreatment 、 Post processing and data enhancement follow the suggestions in the original paper .

ISICDM pancreas segmentation challenge dataset

ISICDM The pancreatic segmentation challenge includes 36 A thin and 36 A thick abdomen CT Volume . Scanning device is defined AS, Siemens , Under the standard pancreas scanning protocol .2D The number of slices ranges from [205,376] and [31,76] Between , The thickness of thin data set and thick data set are 1 mm and 3 mm. Follow the cross validation strategy , We divide the two data sets into 6 A subset of , Each child Set contains 6 Volumes . We use two models to deal with thin datasets and thick datasets , Both models are in 6 Subset 5 Training on the subset , And tested on the remaining subset . For thin datasets , We also fuse three along each axis 2D Model as our final model . For thick datasets , We use the axial view model , Because coronal and sagittal views contain too few slices , Cause prediction errors . The weights of thin model and thick model are NIH Pre training on the dataset . We've achieved it again RSTN [60] and nnU-Net [48], among RSTN Use the same data preprocessing for training , And in NIH Pre training is carried out on the data set to obtain better performance . about nnU-Net, We use them separately 3D Cascading architecture and 3D Full resolution architecture to handle ISICDM Thin datasets and thick datasets . We also trained for each cross validation 5 individual nnU-Net Model , Use the same preprocessing as the original paper 、 Post processing and data enhancement .

Medical segmentation decathlon(MSD) dataset

The third is the medical decathlon (MSD) Spleen dataset , It includes 41 individual CT volume . 2D The number of slices is [31,168] Between . according to [64] Recommended settings in , We first crop the intensity of all images to [-125, 275] The data set was randomly divided into two groups , A group contains 21 Volumes for training , The other group contains 20 A volume test for training . And ISICDM The experiments on thick datasets are similar , We realize the prediction of one-dimensional model trained in axial view . Other settings and NIH Data sets are the same . For a fair comparison , We re implemented the comparison model ourselves . according to [64] in VNet Set up , We normalize the volume , And used in training and testing 128 × 128 × 64 Patch for . We also use five network models obtained on the training set in MSD Re implemented on spleen dataset nnU-Net Of 3D Cascading Architecture .

Evaluation

We use Dice Similarity Coefficient (DSC) and Hausdorff distance (HD) To evaluate the performance of our model , Defined as

In theory , high DSC And low HD Indicates better segmentation accuracy .

Experimental results

In theory , high DSC And low HD Indicates better segmentation accuracy . In this section , We evaluated the multistage structural losses (MLL) Model and multi-level structure network (MLN) Model

NIH pancreas dataset

Numerical analysis

Have been to NIH Pancreas segmentation has developed a series of methods based on deep learning . Some works are directly used ground truth To generate a bounding box , Other works use multi model method to learn bounding box . For a fair comparison , We trained two models with or without labeled data to select bounding boxes . The numerical results are summarized in table 3 in , Our model exceeds the most advanced results in both cases . especially , With the [65] Under the same cutting , Our approach offers significantly higher DSC value , This can prove the efficiency of network structure and the advantage of multi-level structure loss function . It shows that our network architecture can capture more than encoder - More information about decoder structure , This is very useful for the segmentation of small organs such as pancreas . On the other hand ,MLL and MLN Models are better than multi model methods , I.e. combination 2D and 3D Volume fusion model [69], And two recently released multi model methods [48,71]. most important of all , our MLL Model exceeds 3D Multi model approach nnU-Net,DSC Improved near 1%, This proves the effectiveness of multilevel structure loss in small organ segmentation . Besides , Our multistage structure loss model and multistage structure network are both better than Yu Et al RSTN Significant improvements have been made (≥1%). [60], It is also developed based on the coarse to fine and saliency transformation architecture . More specifically , We observed our rough model , There is no multistage structure loss , The average segmentation accuracy is 77.96%, Slightly lower than [60] Use in FCN Built 78.23%. However , Our fine model provides better accuracy , After the first iteration 84.02% Of DSC, Far above [60] Medium 82.73%. It makes it clear that , Multi level structure loss and network can help the backbone model obtain better accuracy .

Convergence analysis

We further analyze the convergence of our multistage structural loss model , To verify its reliability . especially , We also track absolute DSC And the relative DSC. Pictured 6(a,b) Shown , The absolute and relative errors of the four cross validation converge with the increasing number of iterations . especially , We observe absolute and relative DSC before 5 It grows rapidly in the next iteration , And in the end 5 Almost unchanged in the next iteration . therefore , Set in the test phase T max = 10 It's reasonable .

On the other hand , We compared the relative of our model DSC And table 4 Two cyclic saliency switching networks in . It can be seen that , Our model with multistage structural losses converges fastest among all comparison methods , It includes two cyclic significance transformation networks and our model with reduced loss function . just as [60] Reported in , It requires on average 5.22 Iterations can exceed 0.99 Relative DSC, Our model only needs 4.29 Sub iteration . This means that our model can not only give higher DSC The forecast , It can also save a certain amount of computing cost when processing data . It is worth mentioning that ,82 There are 2 It's in 10 There is no convergence after iterations , All cases use our multistage structural loss convergence . Besides , We are in the table 5 And table 6 Listed in DSC and HD Iteration with our model . We observe that the loss per pixel is effective in improving the segmentation accuracy and the convergence of the proposed model .

Fig.6 The first two line charts are DSC Line chart and relative DSC. The dotted line is 4 Iteration results of cross validation , The red line is the average result . before 5 In the next iteration ,DSC And the relative DSC It's growing fast , In the following 5 In the next iteration ,DSC And the relative DSC Continue to grow , Although the speed has slowed down . The last two line charts are NIH Ablation analysis of multi-level structure loss on data sets . (a) DSC w.r.t Graph . 4 Cross validation ; (b) relative DSC w.r.t Graph . 4 Cross validation ; DSC w.r.t Graph . Different loss functions ; (d) relative DSC w.r.t Graph . Different loss functions . ( Explanation of the color reference in this legend , Please refer to the online version of this article .)

Ablation analysis

In the following , We conducted a series of ablation studies , Take the study area 、 Validity of boundary and pixel terms . Pictured 6 Shown , Each term in our multistage structure loss contributes to the final segmentation performance . By introducing boundary loss ,DSC Improved 0.43%. When introducing pixel by pixel loss ,DSC Further improved 0.2%. meanwhile , For measuring boundary accuracy Hausdorff The distance is also about 0.6 (mm). On the other hand , chart 6 (d) It is proved that learning multi-level features can help improve the convergence of our coarse to fine model . Besides , We are in the picture 7 A visual description of the ablation experiment is provided in , The pancreas has a long and narrow structure . As shown in the figure , Loss through area , The pancreas has been divided into two independent sub regions . By introducing boundary loss , The isolated two sub areas become connected , The pixel by pixel loss can further improve the accuracy of the boundary .

Fig.7 A typical visual example of our multi-level structure loss . The selected boundaries and pixels are displayed with a blue background . ( Explanation of the color reference in this legend , Please refer to the online version of this article .)

ISIDM pancreas dataset

We further discussed ISICDM Segmentation performance of pancreatic dataset . We trained two models for thin data and thick data respectively , Note that the thin model is in NIH Pre trained on dataset , The thick model is initialized with the thin model . The specific segmentation results are shown in table 7 Shown . We've achieved it again RSTN [60] and nnU-Net [48] To make a fair comparison . It can be seen that , Our model provides better segmentation accuracy not only on thin datasets but also on thick datasets , It also saves a lot of computing time , Especially with nnU-Net comparison . And RSTN comparison , Our models are in 3.92 and 4.42 The second iteration converges , and RSTN Average consumption on thin data sets 6.11 It takes several iterations to achieve 0.99 Relative DSC. therefore , Our model saves a lot of computing time . what's more , And RSTN The model compares , Our multistage structural loss model also provides a higher DSC value , The average is up 1.5%. chart 8 Examples of selective segmentation of fine and coarse data are provided . Visual comparison can prove that our model can recognize small objects more accurately 、 Long and narrow structure .

Fig.8 Our model and ISICDM Cyclic saliency transformation networks on datasets [60] Selective visual comparison between , Among them, red 、 Green and yellow represent ground truth、 Prediction and overlapping areas . On the left is a thin subset , On the right is the thick subset . ( Explanation of the color reference in this legend , Please refer to the online version of this article .)

Because two-dimensional slices and slices in coronal and sagittal views are much less than slices in axial views of thick data , As a result, the segmentation accuracy of the two views is low , So we use the axial view model instead of our method and RSTN Fusion model . Although these three models DSC The value decreased significantly , But our model takes about 1.5% The advantage of RSTN and nnU-Net. what's more , Our multi tiered network provides significantly better performance on thick data sets .

MSD spleen dataset

Numerical analysis

Our model is also applicable to other organ segmentation tasks , For example, spleen segmentation . We implemented the proposed model and without 3D fusion RSTN And axial view , Because the data set is small and varies greatly in the other two dimensions . We use two boundary based methods and two 3D Methods are compared to evaluate our methods , namely EBP Model [64]、LSM Model [72]、V-net [38] and nnU-Net [48], They are all re implemented by ourselves for fair comparison . Please note that ,ground truth Used to EBP and V-net Generate 3-D Bounding box . [64] Reported in RSTN Of DSC by 89.5% , This is much lower than our re implementation , Because the main voting is three axes . As shown in the table 8 Shown , Our model provides the highest DSC And the lowest variance , This is much better than other methods . Besides , Our model takes less computing time than other models , except V-Net, It uses labels to crop the bounding box . Because both of our models need less iterations to meet the stop criteria , Therefore, reasoning time can be saved . It can be seen that , Our multilayer network can average DSC Further improve 0.6%. Because the boundary of spleen is much smoother than that of pancreas , Boundary branches and pixel branches can help to approach ground truth, It plays a strong guiding role in regional characteristics . We are still trying to 9 Selectivity is shown in 2D Segmentation results , Our model is superior to other models in vision .

Fig.9 Our model and MSD Selective visual comparison between cyclic saliency transformation networks on spleen datasets , Among them, red 、 Green and yellow represent ground truth、 Prediction and overlapping areas . ( Explanation of the color reference in this legend , Please refer to the online version of this article .)

Ablation analysis of modules in MLN model

In this section , We explore ablation studies to evaluate the individual contributions of pixel modules and multilevel saliency guidance modules . say concretely , We used three baseline models for comparison , The first is by replacing the pixel module with four shared MLP get , The second is to replace the multi-level saliency guidance module with four 3×3 Convolution layer is obtained , The last one is obtained by replacing the pixel module and the multi-level saliency guidance module . From the table 9 It can be seen that , In the parameter 、FLOP When the amount of reasoning time is similar , Our multi-level saliency guidance module can reduce DSC Improve 0.3%. Our pixel module performs as a nonlocal module by extracting features from uncertain pixels , and PointRend [58] Four shares used in MLP Capture only local features . Through our local characteristics ,DSC Raised again 0.2%. therefore , Both the pixel module and the multi-level saliency guidance module contribute to the segmentation accuracy .

Conclusion

In this work , We promote a novel loss function by punishing multilevel structural information , To better aggregate the multi-scale features of small organ segmentation . We use a hole convolution model from coarse to fine , And fully consider the small size of the target , By designing appropriate receptive fields to retain more low-level features . Comprehensive experiments on public pancreas and spleen datasets have proved the superiority of the proposed method in dealing with small organ segmentation . Our multi-level structure loss not only continuously improves the segmentation accuracy , Moreover, the training stability and the convergence of the fine model are enhanced in the test stage . Numerical experiments also show that , Our multi tiered network is NIH Pancreas dataset and ISICDM The data set is better than the single head model on the thin subset .

Despite the proposed MLL Models and MLN The model shows advantages in the segmentation of pancreas and spleen , However, the performance of the two models is not always consistent . therefore , The theoretical mechanism of multi-level structure information fusion is still an outstanding problem to be studied . Another limitation of our model is 3D Information is still insufficient for our 2D Method . Although we integrate three networks along three views to provide more spatial details , But the three models in different directions are trained independently , There is no spatial correlation , This also increases the calculation cost . Our future work includes developing more effective loss functions and network structures to study multi-level structural information , To achieve accurate medical segmentation .

边栏推荐

- MySQL information_ Schema database

- MySQL的information_schema数据库

- Nexus 6p从8.0降级6.0+root

- How to choose the middle-aged crisis of the testing post? Stick to it or find another way out? See below

- [Chongqing Guangdong education] electronic circuit homework question bank of RTVU secondary school

- Impact relay jc-7/11/dc110v

- Google Chrome browser will support the function of selecting text translation

- "In simple language programming competition (basic)" part 1 Introduction to language Chapter 3 branch structure programming

- Steady! Huawei micro certification Huawei cloud computing service practice is stable!

- 卸载Google Drive 硬盘-必须退出程序才能卸载

猜你喜欢

buuctf-pwn write-ups (8)

Notes and notes

QT get random color value and set label background color code

buuctf-pwn write-ups (8)

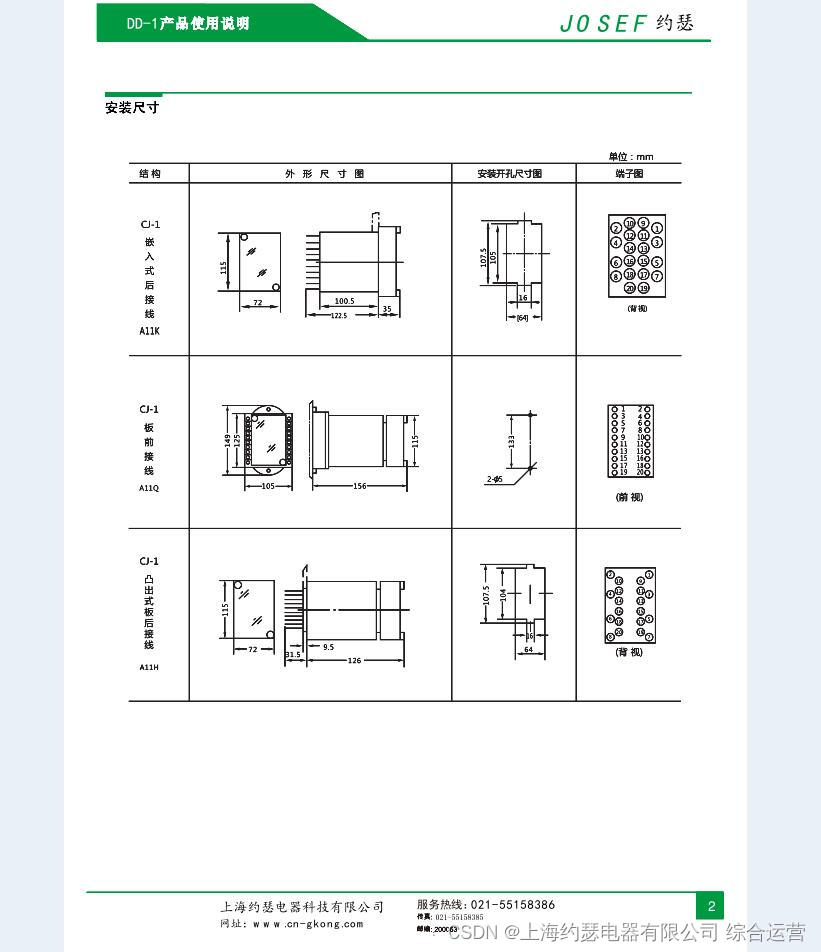

Grounding relay dd-1/60

Functions in C language (detailed explanation)

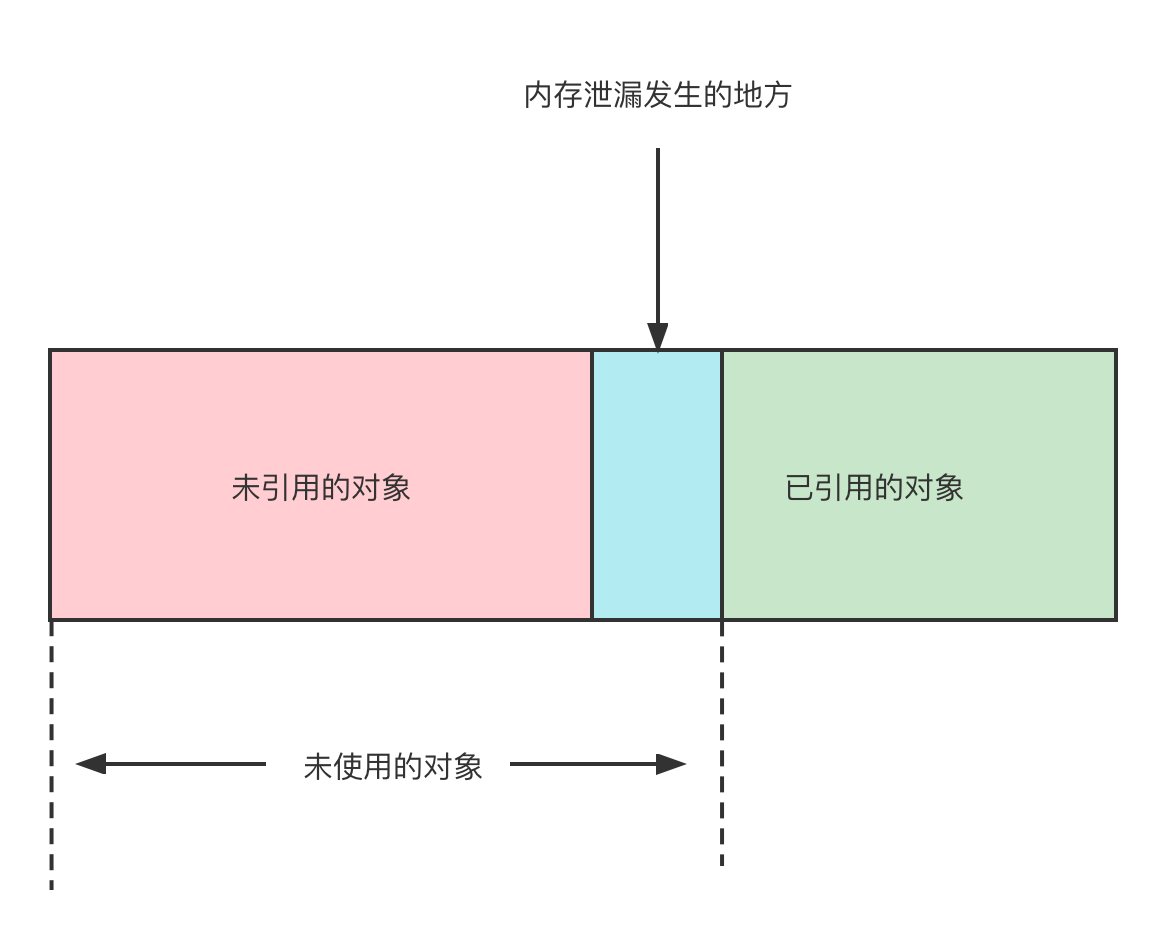

How to avoid JVM memory leakage?

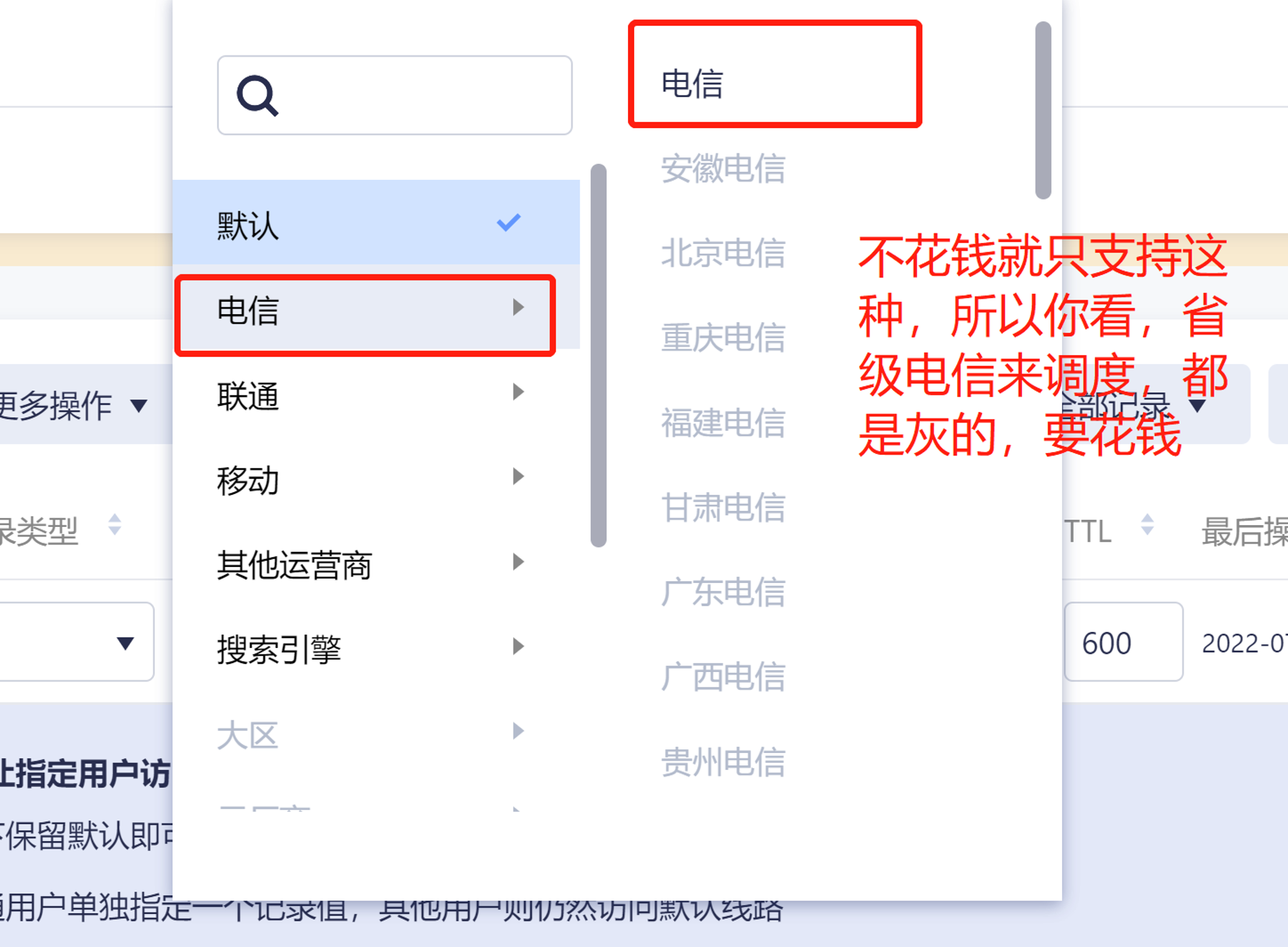

gslb(global server load balance)技术的一点理解

JS扁平化数形结构的数组

Review | categories and mechanisms of action of covid-19 neutralizing antibodies and small molecule drugs

随机推荐

安装 Pytorch geometric

Canoe panel learning video

js如何将秒转换成时分秒显示

win10清除快速访问-不留下痕迹

2022.7.3-----leetcode. five hundred and fifty-six

Luogu deep foundation part 1 Introduction to language Chapter 5 array and data batch storage

FRP intranet penetration, reverse proxy

ES6 modularization

Impact relay jc-7/11/dc110v

【微服务】Nacos集群搭建以及加载文件配置

JS arguments parameter usage and explanation

AWT介绍

Review | categories and mechanisms of action of covid-19 neutralizing antibodies and small molecule drugs

C语言中的函数(详解)

The end of the Internet is rural revitalization

Grounding relay dd-1/60

Detectron: train your own data set -- convert your own data format to coco format

对List进行排序工具类,可以对字符串排序

2022.7.2-----leetcode. eight hundred and seventy-one

[microservice] Nacos cluster building and loading file configuration