
Solutions for Redis cache penetration, cache breakdown, and cache avalanche

2022-07-03 15:11:00 【Miss, do you fix the light bulb】

Familiarity with Redisson is assumed: the distributed lock covered earlier, and the Bloom filter and read-write lock covered below, all use Redisson.

Cache penetration

When a request asks for a key that does not exist, it passes through the cache and hits the database directly. If an attacker uses a load-testing tool to request such keys continuously, the database can collapse under the pressure.

Solution: cache a null value for keys that do not exist and give it an expiration time, which blocks a malicious attack over a short period. This still fails when the attacker sends a large number of different nonexistent keys, because each new key queries the database once. In that case, use a Bloom filter to intercept requests before they reach the cache.

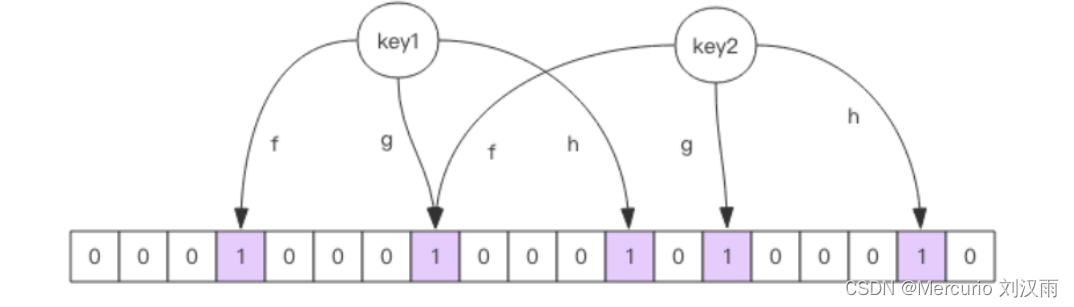

Bloom filter: each key is run through several hash functions, each hash is taken modulo the length of a bit array, and the bit at each resulting position is set to 1. A key is considered present when all of its positions are 1. When a Bloom filter says a key exists, the key may not actually exist; when it says a key does not exist, the key definitely does not exist. All data to be cached can be added to the Bloom filter when the project starts, and new data created by the business can be added as it arrives. On a query, check the Bloom filter first and return immediately if the key is absent. The Bloom filter has one drawback: data cannot be deleted, so the filter has to be reinitialized, which can be scheduled for the early morning when nobody is using the system.

public static void main(String[] args) {

Config config = new Config();

config.useSingleServer().setAddress("redis://localhost:6379");

// Build the Redisson client

RedissonClient redisson = Redisson.create(config);

RBloomFilter<String> bloomFilter = redisson.getBloomFilter("nameList");

// Initialize the Bloom filter: 100,000 expected elements and a 3% false-positive rate; the size of the underlying bit array is calculated from these two parameters

bloomFilter.tryInit(100000L, 0.03);

// Insert data into the bloom filter

bloomFilter.add("key1");

bloomFilter.add("key2");

bloomFilter.add("key3");

// Check whether the following keys are in the Bloom filter

System.out.println(bloomFilter.contains("key1")); // true

System.out.println(bloomFilter.contains("key2")); // true

System.out.println(bloomFilter.contains("key4")); // false, barring a false positive

}
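The bit-setting idea described above can be sketched without Redis. The following is a minimal in-memory illustration, not Redisson's implementation: the class name `SimpleBloomFilter`, the bit-array size, and the choice of hash functions are all assumptions made for the example.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.BitSet;

// Minimal in-memory Bloom filter (illustrative only; sizing and hashes are assumptions).
class SimpleBloomFilter {
    private final BitSet bits;
    private final int size;       // number of bits in the array
    private final int hashCount;  // number of positions set per key

    SimpleBloomFilter(int size, int hashCount) {
        this.bits = new BitSet(size);
        this.size = size;
        this.hashCount = hashCount;
    }

    // FNV-1a, used here as a second hash that is independent of Arrays.hashCode.
    private static int fnv1a(byte[] data) {
        int hash = 0x811C9DC5;
        for (byte b : data) {
            hash ^= (b & 0xFF);
            hash *= 16777619;
        }
        return hash;
    }

    // Derive the i-th position from two base hashes, then take it modulo the array size.
    private int position(String key, int i) {
        byte[] data = key.getBytes(StandardCharsets.UTF_8);
        int h1 = Arrays.hashCode(data);
        int h2 = fnv1a(data);
        return Math.floorMod(h1 + i * h2, size);
    }

    void add(String key) {
        for (int i = 0; i < hashCount; i++) {
            bits.set(position(key, i));
        }
    }

    // false: the key was definitely never added. true: the key was possibly added.
    boolean mightContain(String key) {
        for (int i = 0; i < hashCount; i++) {
            if (!bits.get(position(key, i))) {
                return false;
            }
        }
        return true;
    }
}
```

Because bits are shared between keys, clearing the bits of one key could erase another key's membership, which is why deletion is not supported and the filter is rebuilt instead.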

Cache breakdown

A large number of keys expire at the same time, so a large number of requests for those keys hit the database directly and put it under too much pressure.

The solution is to spread out the expiration times of cached data so that entries do not all expire at once, and to make hot data never expire.

Pseudocode covering the two scenarios above:
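One way to scatter expiration times is to add a random offset to a base TTL. The sketch below assumes a hypothetical helper named `ttlWithJitter`; the base and jitter values are illustrative, not recommendations.

```java
import java.util.concurrent.ThreadLocalRandom;

class TtlJitter {
    // Returns baseSeconds plus a random offset in [0, maxJitterSeconds],
    // so keys written together do not all expire at the same instant.
    static long ttlWithJitter(long baseSeconds, long maxJitterSeconds) {
        return baseSeconds + ThreadLocalRandom.current().nextLong(maxJitterSeconds + 1);
    }
}
```

With Spring's `RedisTemplate`, the result could be passed as the timeout, for example `redisTemplate.opsForValue().set(key, value, TtlJitter.ttlWithJitter(3600, 300), TimeUnit.SECONDS)`.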

public class RedissonBloomFilter {

private static RedisTemplate redisTemplate;

static RBloomFilter<String> bloomFilter;

public static void main(String[] args) {

Config config = new Config();

config.useSingleServer().setAddress("redis://localhost:6379");

// Build the Redisson client

RedissonClient redisson = Redisson.create(config);

bloomFilter = redisson.getBloomFilter("nameList");

// Initialize the Bloom filter: 100,000 expected elements and a 3% false-positive rate; the size of the underlying bit array is calculated from these two parameters

bloomFilter.tryInit(100000L, 0.03);

// Query all data to be cached from the database

List<Map> keys = DAOkeys();

// Initialize all data (iterate over every row, not just the first)

for (Map key : keys) {

String id = String.valueOf(key.get("id"));

// Put in the Bloom filter

bloomFilter.add(id);

// Put into cache

redisTemplate.opsForValue().set(id, key);

}

}

Object get(String id) {

// First, judge whether it is in the bloom filter

boolean contains = bloomFilter.contains(id);

if (!contains) {

return "Nonexistent data";

} else {

// Query the cache

Object o = redisTemplate.opsForValue().get(id);

if (o != null) {

// An empty string is the placeholder cached for a key that has no value

return "".equals(o) ? null : o;

}

// Cache miss: query the database

Object obj = DAOById(id);

if (obj == null) {

// Cache an empty placeholder with a short random expiration time, so repeated lookups of a key that has no value do not reach the database

int time = new Random().nextInt(1000) + 1000;

redisTemplate.opsForValue().set(id, "", time, TimeUnit.SECONDS);

return null;

}

// Add to cache

redisTemplate.opsForValue().set(id, obj);

return obj;

}

}

}
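The pseudocode above depends on Redis and a DAO layer. To see the effect of null-value caching in isolation, here is a self-contained simulation in which plain maps stand in for both; the class `NullCachingDemo` and its fields are hypothetical, and expiration is left out for brevity.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

class NullCachingDemo {
    // Stand-in for the database, with a counter of how often it is queried.
    static final Map<String, String> database = new HashMap<>();
    static int dbQueries = 0;

    // Stand-in for Redis. Optional.empty() marks a key known to have no value.
    static final Map<String, Optional<String>> cache = new HashMap<>();

    static String get(String id) {
        Optional<String> cached = cache.get(id);
        if (cached != null) {
            // Cache hit, including the "known missing" placeholder.
            return cached.orElse(null);
        }
        // Cache miss: query the database once and remember the answer, even if it is null.
        dbQueries++;
        String value = database.get(id);
        cache.put(id, Optional.ofNullable(value));
        return value;
    }
}
```

Requesting the same missing id twice reaches the stand-in database only once, which is the behavior the null-value cache is meant to provide.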

Cache avalanche

The cache layer cannot handle the load or goes down, so all requests hit the database.

Solution: 1. Make the cache highly available by using Redis Sentinel mode or Redis Cluster mode.

2. Add circuit breaking, rate limiting, and degradation on the back end, using a component such as Sentinel or Hystrix.
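Components such as Sentinel and Hystrix provide circuit breaking and degradation out of the box. To illustrate the idea only, here is a minimal circuit breaker sketch; the class `SimpleCircuitBreaker`, its thresholds, and its injected clock are assumptions for the example and do not reflect either library's API.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Minimal circuit breaker: after N consecutive failures, skip the backend for a cooldown period.
class SimpleCircuitBreaker {
    private final int failureThreshold;
    private final long openMillis;
    private final LongSupplier clock;  // injected time source, in milliseconds
    private int consecutiveFailures = 0;
    private long openedAt = -1;        // -1 means the circuit is closed

    SimpleCircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    private synchronized boolean isOpen() {
        return openedAt >= 0 && clock.getAsLong() - openedAt < openMillis;
    }

    private synchronized void recordSuccess() {
        consecutiveFailures = 0;
        openedAt = -1;
    }

    private synchronized void recordFailure() {
        consecutiveFailures++;
        if (consecutiveFailures >= failureThreshold) {
            openedAt = clock.getAsLong();
        }
    }

    // Runs the action unless the circuit is open; on failure or open circuit, degrades to the fallback.
    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (isOpen()) {
            return fallback.get();
        }
        try {
            T result = action.get();
            recordSuccess();
            return result;
        } catch (RuntimeException e) {
            recordFailure();
            return fallback.get();
        }
    }
}
```

In production the clock would be `System::currentTimeMillis`; it is injected here so the behavior can be checked without sleeping.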

Hot-key cache rebuild optimization

Hot data is generally expected never to expire. If it does have an expiration time and that time is reached, many concurrent threads try to rebuild the cache at once. A Redis lock can be used so that only one thread rebuilds the cache; threads that do not get the lock sleep for a few milliseconds and then read the cache again.
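The rebuild flow can be sketched in a single JVM. In this illustration a `ReentrantLock` stands in for the Redis lock and a `ConcurrentHashMap` stands in for the cache; the class `HotKeyRebuild`, the loader, and the sleep durations are assumptions for the example.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

class HotKeyRebuild {
    static final ConcurrentHashMap<String, String> cache = new ConcurrentHashMap<>();
    static final ReentrantLock rebuildLock = new ReentrantLock();  // stand-in for a Redis lock
    static final AtomicInteger rebuilds = new AtomicInteger();

    // Hypothetical slow loader standing in for the database query.
    static String loadFromDatabase(String key) throws InterruptedException {
        rebuilds.incrementAndGet();
        Thread.sleep(50);
        return "value-of-" + key;
    }

    static String get(String key) throws InterruptedException {
        while (true) {
            String value = cache.get(key);
            if (value != null) {
                return value;
            }
            if (rebuildLock.tryLock()) {
                try {
                    // Re-check: another thread may have rebuilt the entry before we got the lock.
                    value = cache.get(key);
                    if (value == null) {
                        value = loadFromDatabase(key);
                        cache.put(key, value);
                    }
                    return value;
                } finally {
                    rebuildLock.unlock();
                }
            }
            // Did not get the lock: sleep a few milliseconds, then read the cache again.
            Thread.sleep(5);
        }
    }
}
```

The re-check inside the lock is what keeps the loader from running more than once when several threads miss the cache at the same moment.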

Cache and database inconsistency (double-write inconsistency, read/write inconsistency)

Double write means updating the cache synchronously when writing to the database.

Read/write means deleting the cache when writing to the database and writing the cache when reading from the database.

The second approach is generally recommended, but as the figure shows, it still has problems under concurrency.

Solution: add a read-write lock so that a write and any reads or writes concurrent with it queue up and execute serially, while concurrent reads alone behave as if there were no lock. (Note: if the no-argument lock() method cannot obtain the lock, it blocks and keeps retrying until the lock is acquired.)
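The locking discipline can be tried out in a single JVM before bringing in Redisson. This sketch uses the JDK's `ReentrantReadWriteLock` as a local analogue of a distributed read-write lock, with maps standing in for the database and the cache; the class `StockStore` and its methods are assumptions for the example.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class StockStore {
    private final ConcurrentHashMap<String, Integer> database = new ConcurrentHashMap<>();  // stand-in for the database
    private final ConcurrentHashMap<String, Integer> cache = new ConcurrentHashMap<>();     // stand-in for Redis
    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();

    // Write path: update the database, then delete the cache entry, all under the write lock.
    void update(String key, int stock) {
        lock.writeLock().lock();
        try {
            database.put(key, stock);
            cache.remove(key);
        } finally {
            lock.writeLock().unlock();
        }
    }

    // Read path: read the cache, fall back to the database and repopulate, under the read lock.
    Integer read(String key) {
        lock.readLock().lock();
        try {
            Integer cached = cache.get(key);
            if (cached != null) {
                return cached;
            }
            Integer fromDb = database.get(key);
            if (fromDb != null) {
                cache.put(key, fromDb);
            }
            return fromDb;
        } finally {
            lock.readLock().unlock();
        }
    }
}
```

Because a reader cannot hold the read lock while a writer holds the write lock, a reader can never repopulate the cache with a value that a concurrent write is in the middle of replacing.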

package com.redisson;

import org.redisson.Redisson;

import org.redisson.api.RLock;

import org.redisson.api.RReadWriteLock;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.redis.core.StringRedisTemplate;

import org.springframework.util.StringUtils;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.bind.annotation.RestController;

@RestController

public class IndexController {

@Autowired

private Redisson redisson;

@Autowired

private StringRedisTemplate stringRedisTemplate;

private volatile int count;

@RequestMapping("/deduct_stock")

/*

Problem: under high concurrency, stock must not be oversold and data must stay consistent.

1. Wrap the code in synchronized. This only works for a standalone application within a single process; its granularity is insufficient, and it does not work for a distributed deployment.

// synchronized (this) {}

2. Use a setnx-based distributed lock to control every thread; it is fine-grained and feasible.

Boolean result = stringRedisTemplate.opsForValue().setIfAbsent(lockKey, "zhuge"); // jedis.setnx(k, v)

If locking fails, return an error code to the front end directly; if it succeeds, go on to the stock operation.

if (!result) { return "error_code"; }

Then release the lock in finally, so that an exception in the program does not leave the lock unreleased.

stringRedisTemplate.delete(lockKey);

2.1 If the program does not throw but crashes outright, for example because operations staff kill the process instead of shutting it down, the lock is still never released and a deadlock results. The fix is to add an expiration time.

****** Adding the expiration this way can cause serious overselling. The expiration only solves the crash case. If the application runs slowly and the lock time is short, the lock expires before the stock operation finishes, another thread acquires the lock and operates on the stock, and the lock ends up being released by a thread that does not own it. ******

Boolean result = stringRedisTemplate.opsForValue().setIfAbsent(lockKey, "zhuge"); // jedis.setnx(k, v)

stringRedisTemplate.expire(lockKey, 10, TimeUnit.SECONDS);

2.2 To make the two steps atomic (the process could crash before the expiration time is added), set the expiration time when setting the key.

Boolean result = stringRedisTemplate.opsForValue().setIfAbsent(lockKey, "value", 30, TimeUnit.SECONDS);

3. Control which lock each thread releases. The granularity is now fine enough, but keep one premise in mind: the lock lives in a third party, Redis. Without an expiration time a deadlock can occur, although the lock is guaranteed to be released exactly when the main thread finishes the stock operation. The expiration time was added to handle a crash, but it introduces another problem: if the stock operation has not finished when the lock expires, the next thread can acquire the lock and start operating. When the first thread then finishes and releases the lock, its own lock has already expired, so what it releases is the next thread's lock. Overselling then occurs again and again.

Problem 1 to solve: with an expiration time in place, make sure the current thread releases exactly the lock it acquired. setnx only locks the key, so define a random string as the value of the lock key.

String clientId = UUID.randomUUID().toString();

Boolean result = stringRedisTemplate.opsForValue().setIfAbsent(lockKey, clientId, 30, TimeUnit.SECONDS);

Then in finally, read the value back and delete the key only if the value equals the local variable generated by the current thread.

if (clientId.equals(stringRedisTemplate.opsForValue().get(lockKey))) { stringRedisTemplate.delete(lockKey); }

Problem 2 to solve: with an expiration time in place, also make sure the lock is released only after the stock operation has finished.

Lock renewal: a child thread runs a timer task that checks at intervals whether the main thread has finished; if it has not, the task extends the expiration time.

Solution: an existing client toolkit already implements this.

Import:

<dependency> <groupId>org.redisson</groupId> <artifactId>redisson</artifactId> <version>3.6.5</version> </dependency>

Register:

@Bean public Redisson redisson() { // This is stand-alone mode Config config = new Config(); config.useSingleServer().setAddress("redis://192.168.0.60:6379").setDatabase(0); return (Redisson) Redisson.create(config); }

Use, similar to setnx:

RLock redissonLock = redisson.getLock(lockKey);

// Lock

redissonLock.lock(); // in place of setIfAbsent(lockKey, clientId, 30, TimeUnit.SECONDS)

// Unlock

redissonLock.unlock();

*/

public String deductStock() {

String lockKey = "product_101";

RLock redissonLock = redisson.getLock(lockKey);

// Lock (acquired before try, so finally only unlocks a lock that was actually taken)

redissonLock.lock(); // in place of setIfAbsent(lockKey, clientId, 30, TimeUnit.SECONDS)

try {

// synchronized (this){ Option 1: synchronized, which only works for a standalone application within a single process and does not work for a distributed deployment

int stock = Integer.parseInt(stringRedisTemplate.opsForValue().get("stock")); // jedis.get("stock")

if (stock > 0) {

int realStock = stock - 1;

stringRedisTemplate.opsForValue().set("stock", realStock + ""); // jedis.set(key,value)

count++;

System.out.println("Deduction succeeded, remaining stock: " + realStock);

} else {

System.out.println("Deduction failed, insufficient stock, total sold: " + count);

}

// }

} finally {

redissonLock.unlock();

/*if (lock && clientId.equals(stringRedisTemplate.opsForValue().get(lockKey))) { stringRedisTemplate.delete(lockKey); }*/

}

return "end";

}

// @RequestMapping("/redlock")

// public String redlock() {

// String lockKey = "product_001";

// // This needs Redisson clients connected to different Redis instances; this pseudocode simplifies by using a single Redisson client

// RLock lock1 = redisson.getLock(lockKey);

// RLock lock2 = redisson.getLock(lockKey);

// RLock lock3 = redisson.getLock(lockKey);

//

// /**

// * Build a RedissonRedLock from the multiple RLock objects (this is the core difference)

// */

// RedissonRedLock redLock = new RedissonRedLock(lock1, lock2, lock3);

// try {

// /**

// * waitTimeout: the maximum time to wait when trying to acquire the lock; beyond that, lock acquisition is considered failed

// * leaseTime: how long the lock is held; after this time the lock expires automatically (set it longer than the business processing time so the business logic can finish within the lock's validity period)

// */

// boolean res = redLock.tryLock(10, 30, TimeUnit.SECONDS);

// if (res) {

// // Lock acquired; handle the business logic here

// }

// } catch (Exception e) {

// throw new RuntimeException("lock fail");

// } finally {

// // In any case, unlock at the end

// redLock.unlock();

// }

//

// return "end";

// }

//

@RequestMapping("/get_stock")

public String getStock(@RequestParam("clientId") Long clientId) throws InterruptedException {

String lockKey = "product_stock_101";

RReadWriteLock readWriteLock = redisson.getReadWriteLock(lockKey);

RLock rLock = readWriteLock.readLock();

String stock="";

rLock.lock();

try {

System.out.println("Read lock acquired: client=" + clientId);

stock = stringRedisTemplate.opsForValue().get("stock");

if (StringUtils.isEmpty(stock)) {

System.out.println("Querying the database: stock is 10...");

Thread.sleep(5000);

// Return the freshly loaded value instead of the empty cache result

stock = "10";

stringRedisTemplate.opsForValue().set("stock", stock);

}

} finally {

// Release the read lock even if the lookup throws

rLock.unlock();

}

System.out.println("Read lock released: client=" + clientId);

return stock;

}

@RequestMapping("/update_stock")

public String updateStock(@RequestParam("clientId") Long clientId) throws InterruptedException {

String lockKey = "product_stock_101";

RReadWriteLock readWriteLock = redisson.getReadWriteLock(lockKey);

RLock writeLock = readWriteLock.writeLock();

writeLock.lock();

try {

System.out.println("Write lock acquired: client=" + clientId);

System.out.println("Updating the database stock of product 101 to 6...");

stringRedisTemplate.delete("stock");

// int i = 1 / 0; // uncomment to simulate a failure; the finally block still releases the lock

} finally {

writeLock.unlock();

}

System.out.println("Write lock released: client=" + clientId);

return "end";

}

}
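The comment in the controller describes giving each lock a random client ID so that a thread only ever releases its own lock. That rule can be sketched in memory as follows; the class `OwnerTokenLock` is hypothetical, a `ConcurrentHashMap` stands in for Redis, and `expire` simulates the key timing out.

```java
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

class OwnerTokenLock {
    // Stand-in for Redis: lock key -> owner token.
    private final ConcurrentHashMap<String, String> store = new ConcurrentHashMap<>();

    // Like setIfAbsent: succeeds only when the key is absent.
    // Returns the owner token on success, or null if another owner holds the lock.
    String tryLock(String lockKey) {
        String token = UUID.randomUUID().toString();
        return store.putIfAbsent(lockKey, token) == null ? token : null;
    }

    // Deletes the key only if it still holds this caller's token (an atomic compare-and-delete).
    boolean unlock(String lockKey, String token) {
        return store.remove(lockKey, token);
    }

    // Simulates the lock key reaching its expiration time.
    void expire(String lockKey) {
        store.remove(lockKey);
    }
}
```

Here the compare-and-delete is atomic because `ConcurrentHashMap.remove(key, value)` is. Against a real Redis server, a separate get followed by delete is not atomic, so the check and the delete are typically combined in a Lua script; Redisson takes care of this, along with lock renewal, internally.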

边栏推荐

- Web server code parsing - thread pool

- [transformer] Introduction - the original author of Harvard NLP presented the annotated transformer in the form of line by line implementation in early 2018

- XWiki安装使用技巧

- 基础SQL教程

- 第04章_逻辑架构

- "Seven weapons" in the "treasure chest" of machine learning: Zhou Zhihua leads the publication of the new book "machine learning theory guide"

- Vs+qt multithreading implementation -- run and movetothread

- PyTorch crop images differentiablly

- B2020 points candy

- Global and Chinese markets for indoor HDTV antennas 2022-2028: Research Report on technology, participants, trends, market size and share

猜你喜欢

Pytorch深度学习和目标检测实战笔记

Influxdb2 sources add data sources

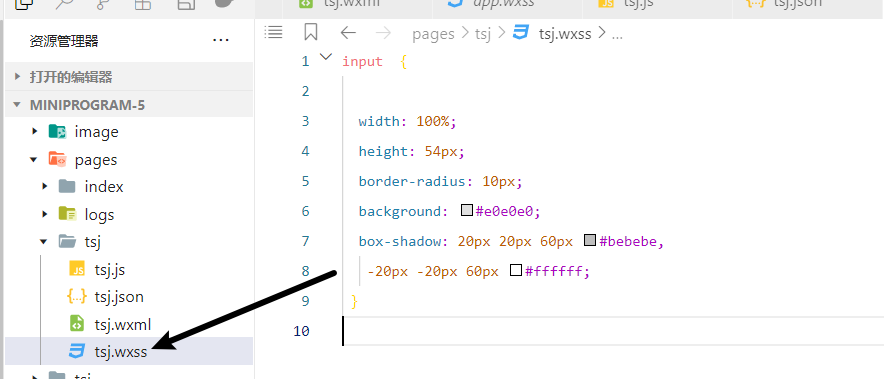

【微信小程序】WXSS 模板样式

Composite type (custom type)

![Mysql报错:[ERROR] mysqld: File ‘./mysql-bin.010228‘ not found (Errcode: 2 “No such file or directory“)](/img/cd/2e4f5884d034ff704809f476bda288.png)

Mysql报错:[ERROR] mysqld: File ‘./mysql-bin.010228‘ not found (Errcode: 2 “No such file or directory“)

Vs+qt multithreading implementation -- run and movetothread

![[engine development] in depth GPU and rendering optimization (basic)](/img/71/abf09941eb06cd91784df50891fe29.jpg)

[engine development] in depth GPU and rendering optimization (basic)

Dataframe returns the whole row according to the value

Open under vs2019 UI file QT designer flash back problem

视觉上位系统设计开发(halcon-winform)

随机推荐

【Transformer】入门篇-哈佛Harvard NLP的原作者在2018年初以逐行实现的形式呈现了论文The Annotated Transformer

.NET六大设计原则个人白话理解,有误请大神指正

Using TCL (tool command language) to manage Tornado (for VxWorks) can start the project

北京共有产权房出租新规实施的租赁案例

[transform] [practice] use pytoch's torch nn. Multiheadattention to realize self attention

[opengl] advanced chapter of texture - principle of flowmap

406. Reconstruct the queue according to height

视觉上位系统设计开发(halcon-winform)-3.图像控件

基础SQL教程

官网MapReduce实例代码详细批注

Kubernetes带你从头到尾捋一遍

[attention mechanism] [first vit] Detr, end to end object detection with transformers the main components of the network are CNN and transformer

Byte practice surface longitude

[ue4] cascading shadow CSM

Matlab r2011b neural network toolbox precautions

视觉上位系统设计开发(halcon-winform)

Yolov5系列(一)——網絡可視化工具netron

QT - draw something else

基于SVN分支开发模式流程浅析

Byte practice plane longitude 2